Native Ceph RBD SM driver for XCP-ng

-

Hi,

Thanks for the contribution. What exactly are you calling SMAPIv2? FYI, XCP-ng 8.2 is EOL this month, so it's better to build everything for 8.3.

edit: adding @Team-Storage in the loop

-

@olivierlambert by SMAPIv2 I mean the predecessor of SMAPIv3 (e.g. the old-school SM driver, same as the CephFSSR driver), or maybe that's called SMAPIv1? IDK, I am genuinely lost in these conventions and have a hard time finding any relevant docs.

-

I took a very quick look (so I might be wrong) but it seems to be a SMAPIv1 driver.

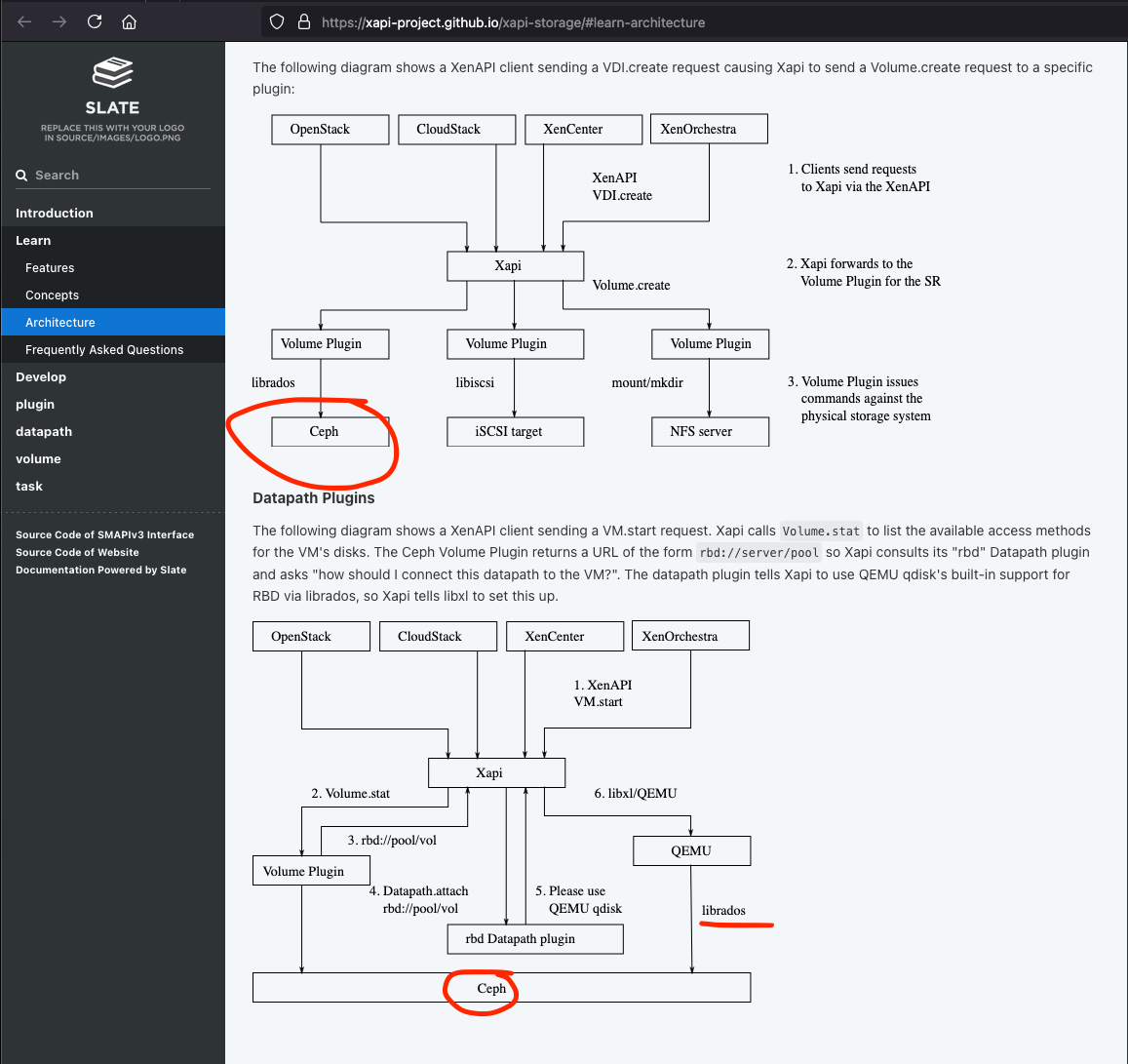

You might be better off starting directly with a SMAPIv3 driver. I would also advise starting here: https://xapi-project.github.io/xapi-storage/#introduction

You can also take a look at our first draft SMAPIv3 plugin implementation: https://github.com/xcp-ng/xcp-ng-xapi-storage/tree/master/plugins/volume/org.xen.xapi.storage.zfs-vol

-

@olivierlambert that documentation is really interesting: those diagrams are full of examples of accessing Ceph and RBD via librados, which is exactly what I am doing here LOL

Did you design those diagrams based on some existing driver? It seems someone on your team has already had to study Ceph concepts if they appear in your example documentation. Does an RBD driver already exist somewhere in your lab?

-


So, I am making some progress with my driver, but as I start to understand the SM API better, I am also discovering several flaws in its implementation that limit its ability to adapt to advanced storage such as CEPH. This is probably the main reason why CEPH was never integrated properly, and why its existing community-made integrations are so overly and unnecessarily complex.

I somewhat hope that SMAPIv3 solves this problem, but I am afraid it doesn't. For now I added this comment to the header of that SMAPIv1 driver, which explains the problem:

```
# Important notes:
# Snapshot logic in this SM driver is imperfect at this moment, because the way snapshots are implemented in Xen
# is fundamentally different from how snapshots work in CEPH, and sadly the Xen API doesn't let the SM driver
# implement this logic itself and instead forces its own (somewhat flawed and inefficient) logic.
# That means when "revert to snapshot" is executed via the admin UI, this driver is not notified about it in any way.
# If it were, it would be able to execute a trivial "rbd rollback" CEPH action, which would result in an instant rollback.
# Instead, Xen decides to create a clone from the snapshot by calling clone(), which creates another RBD
# that depends on the parent snapshot, which in turn depends on the original RBD image we wanted to roll back.
# Then it calls delete on the original image, which is the parent of this entire new hierarchy.
# This image is now impossible to delete, because it has a snapshot, which means we need to perform a background
# flatten operation that performs a physical 1:1 copy of the entire image to the new clone and then destroys the
# snapshot and the original image.
# This is brutally inefficient in comparison to a native rollback (as in hours instead of seconds), but it seems that
# with the current SM driver implementation it's not possible to do this efficiently; this requires a fix in the SM API.
```

Basically, XAPI has its own logic for how snapshots are created and managed, and it forces this exact same implementation on everyone, even when the underlying storage has its own snapshot mechanisms that could be used instead and would be FAR more efficient. Because this logic is impossible to override, hook into, or change, there isn't really any efficient way to implement snapshot logic at the CEPH level.

My suggestion: instead of forcing some internal snapshot logic on SM drivers, abstract it away and just send high-level requests to SM drivers, such as:

- Create a snapshot of this VDI

- Revert this VDI to this snapshot

I understand that for many SM drivers this could be a problem, as the same logic would need to be repeated in each of them. Maybe you could make it so that if an SM driver doesn't implement its own snapshot logic, XAPI falls back to the default one that is implemented now?

Anyway, the way the SM subsystem works (at least V1, but I suspect V3 isn't any better in this respect), you can't use efficient storage-level features; instead you are reinventing the wheel and implementing the same logic in software in an extremely inefficient way.

But maybe I am just overlooking something. That's just how it appears to me, as there is absolutely no overridable "revert to snapshot" entry point in SM right now.
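To make the suggestion concrete, here is a minimal Python sketch of an optional revert hook with a fallback. Everything in it (the `SMDriver` class, its `revert()` and `clone()` methods, `revert_vm_disk()`) is hypothetical and only illustrates the dispatch idea; it is not part of the SMAPIv1 or SMAPIv3 interface.

```python
from typing import Dict, Optional


class SMDriver:
    """Hypothetical SM driver base class (not the real SMAPIv1/v3 interface)."""

    def revert(self, vdi: str, snapshot: str) -> Optional[str]:
        # Default: no native revert. Returning None tells the caller
        # to use the generic clone-based fallback.
        return None

    def clone(self, snapshot: str) -> str:
        raise NotImplementedError


class NativeRevertDriver(SMDriver):
    def revert(self, vdi: str, snapshot: str) -> Optional[str]:
        # A Ceph-backed driver would roll the image back in place here
        # (e.g. via an RBD snapshot rollback) and keep the same VDI.
        return vdi


class CloneOnlyDriver(SMDriver):
    def __init__(self) -> None:
        self.clones = 0

    def clone(self, snapshot: str) -> str:
        self.clones += 1
        return f"{snapshot}-clone{self.clones}"


def revert_vm_disk(driver: SMDriver, vbds: Dict[str, str], device: str, snapshot: str) -> str:
    """Revert the disk on `device` to `snapshot` and return the VDI now in use."""
    current = vbds[device]
    reverted = driver.revert(current, snapshot)
    if reverted is not None:
        # Native path: the VM keeps pointing at the same VDI.
        return reverted
    # Fallback (roughly today's behaviour): clone the snapshot and repoint
    # the VM at the clone; cleaning up the old VDI is left out of this sketch.
    new_vdi = driver.clone(snapshot)
    vbds[device] = new_vdi
    return new_vdi
```

Drivers that don't override `revert()` keep today's behaviour unchanged, which is what would let the existing SRs keep working without repeating any logic.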

-

Well, I found it: the culprit is not even the SM framework itself, but rather the XAPI implementation. The problem is here:

https://github.com/xapi-project/xen-api/blob/master/ocaml/xapi/xapi_vm_snapshot.ml#L250

This logic is hard-coded into XAPI. When you revert to a snapshot, it:

- First deletes the VDI image(s) of the VM (the "root" of the entire snapshot hierarchy; deleting such an image is actually illegal in CEPH)

- Then it creates new VDIs from the snapshot

- Then it modifies the VM and rewrites all VDI references to point to the newly created clones of the snapshot

This is fundamentally incompatible with the native CEPH snapshot logic because in CEPH:

- You can create as many snapshots as you want for an RBD image, but that makes it illegal to delete the RBD image as long as any snapshot exists. CEPH uses layered CoW for this; however, snapshots are always read-only (which actually seems fine in the Xen world).

- Creating a snapshot is very fast

- Deleting a snapshot is also very fast

- Rolling back to a snapshot is somehow also very fast (IDK how CEPH does this, though)

- You can create a clone (a new RBD image) from a snapshot. That creates a parent reference to the snapshot, i.e. the snapshot can't be deleted until you make the new RBD independent of it via the flatten operation (IO heavy and very slow).

In simple words, when:

- You create image A

- You create snapshot S (A -> S)

You can very easily (cheap IO) drop S or revert A to S. However, if you do what Xen does:

- You create image A

- You create snapshot S (A -> S)

- You want to revert to S: Xen clones S to B (A -> S -> B) and replaces the VDI ref in the VM from A to B

Now it's really hard for CEPH to clean up both A and S as long as B depends on both of them in the CoW hierarchy. Making B independent is IO heavy.
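The difference between the two sequences can be reproduced with a tiny in-memory model. This is a Python sketch written for illustration, not librbd: it only tracks names and parent links, and it does not model data, IO cost, or snapshot protection. The method names merely mirror the `rbd` operations (snap create, snap rollback, snap rm, clone, flatten, rm); in the real `rbd` Python bindings the corresponding calls are methods such as `Image.create_snap()`, `Image.rollback_to_snap()`, `Image.remove_snap()`, `RBD.clone()`, `Image.flatten()` and `RBD.remove()`.

```python
from typing import Dict, List, Set, Tuple


class ToyRBD:
    """In-memory model of the RBD layering rules above (not librbd; no data or IO)."""

    def __init__(self) -> None:
        self.snaps: Dict[str, Set[str]] = {}          # image -> snapshot names
        self.parent: Dict[str, Tuple[str, str]] = {}  # clone -> (image, snapshot)

    def create(self, image: str) -> None:
        self.snaps[image] = set()

    def snap_create(self, image: str, snap: str) -> None:
        self.snaps[image].add(snap)

    def children(self, image: str, snap: str) -> List[str]:
        return [c for c, p in self.parent.items() if p == (image, snap)]

    def snap_rollback(self, image: str, snap: str) -> None:
        # The image keeps its identity; no new objects enter the hierarchy.
        if snap not in self.snaps[image]:
            raise KeyError(f"{image}@{snap} does not exist")

    def snap_remove(self, image: str, snap: str) -> None:
        if self.children(image, snap):
            raise RuntimeError(f"{image}@{snap} has clones; flatten them first")
        self.snaps[image].discard(snap)

    def clone(self, image: str, snap: str, child: str) -> None:
        if snap not in self.snaps[image]:
            raise KeyError(f"{image}@{snap} does not exist")
        self.create(child)
        self.parent[child] = (image, snap)

    def flatten(self, child: str) -> None:
        # In real Ceph this copies all parent data into the child (IO heavy);
        # here it only drops the parent link.
        self.parent.pop(child, None)

    def remove(self, image: str) -> None:
        if self.snaps[image]:
            raise RuntimeError(f"{image} still has snapshots")
        del self.snaps[image]
```

Replaying the native sequence (create A, snapshot S, roll back, drop S) succeeds immediately. Replaying the Xen sequence (create A, snapshot S, clone S to B) leaves both `remove("A")` and `snap_remove("A", "S")` failing until `flatten("B")` has run, which is exactly the expensive step.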

What I can do as a nasty workaround is hide the VDI for A, and when the user decides they want to delete S, I would just hide S as well and schedule a flatten of B as a background GC cleanup job (I need to investigate what my options are here), which after finishing would wipe S and subsequently A (if S was its last remaining snapshot).

That would work, but it would still be an awfully inefficient software emulation of CoW, completely ignoring that we can get real CoW from CEPH that is actually efficient and IO-cheap (because it happens at the storage-array level).
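The workaround could be organised roughly like the following Python sketch. It is hypothetical: the `DeferredCleanup` class, its methods and the in-memory bookkeeping are invented for illustration, the flatten is only simulated by dropping the parent link, and a real driver would also have to persist this state and hook into whatever GC mechanism the SM framework offers.

```python
from collections import deque
from typing import Deque, Dict, Set, Tuple


class DeferredCleanup:
    """Hypothetical sketch of the hide-then-flatten workaround (no real SM or Ceph calls)."""

    def __init__(self, snaps: Dict[str, Set[str]], parent: Dict[str, Tuple[str, str]]) -> None:
        self.snaps = snaps              # image -> snapshot names still on storage
        self.parent = parent            # clone -> (image, snapshot) it depends on
        self.hidden: Set[str] = set()   # objects hidden from XAPI but kept on storage
        self.jobs: Deque[Tuple[str, str, str]] = deque()  # pending (clone, image, snap) flattens

    def delete_image(self, image: str) -> None:
        # XAPI deletes A after the revert; A still has snapshots, so only hide it.
        if self.snaps.get(image):
            self.hidden.add(image)
        else:
            self.snaps.pop(image, None)

    def delete_snapshot(self, image: str, snap: str) -> None:
        clones = [c for c, p in self.parent.items() if p == (image, snap)]
        if not clones:
            self._drop_snapshot(image, snap)
            return
        # S still backs clones: hide it and queue a background flatten for each.
        self.hidden.add(f"{image}@{snap}")
        self.jobs.extend((c, image, snap) for c in clones)

    def run_gc(self) -> None:
        while self.jobs:
            clone, image, snap = self.jobs.popleft()
            # Stand-in for the slow, IO-heavy flatten of the clone.
            self.parent.pop(clone, None)
            if not any(p == (image, snap) for p in self.parent.values()):
                self._drop_snapshot(image, snap)

    def _drop_snapshot(self, image: str, snap: str) -> None:
        self.snaps[image].discard(snap)
        self.hidden.discard(f"{image}@{snap}")
        # Once a hidden image loses its last snapshot it can finally be removed.
        if not self.snaps[image] and image in self.hidden:
            del self.snaps[image]
            self.hidden.discard(image)
```

Starting from A with snapshot S and clone B, calling `delete_image("A")` and `delete_snapshot("A", "S")` only hides things; A and S disappear from storage once `run_gc()` has "flattened" B.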

Now I understand perfectly why nobody has ever managed to deliver native RBD support to Xen: the XAPI design makes it nearly impossible. No wonder we ended up with weird (and also inefficient) hacks like an LVM pool on top of a single RBD, or CephFS.

-

Food for thought for the @Team-Storage and @Team-XAPI-Network

-

Meanwhile, until I finish the RBD-native driver, which is probably going to take much longer than I anticipated and is probably never going to have an "ideal snapshot rollback mechanism", I also decided to create another driver called LVMoRBD, which is essentially the same as LVMoHBA: it builds an LVM-SR on top of an RBD block device and is meant to work without the need for complex hacks: https://github.com/benapetr/CephRBDSR/commit/fbde8b49b180d4de60ffea477dffe712f07a4d07

It's really a rather trivial wrapper. Its main benefits, as described in the commit message, are the ability to auto-mount the RBD image on reboot and to use its own LVM config that enables RBD devices (so there is no need to modify the default one shipped with XCP-ng), plus some other things that make creating an LVM SR on top of RBD far easier and more natural.

Again, it's not production ready yet. I will be doing many tests on it and probably still need to fix some SCSI-related false-positive errors that sometimes appear in the SM log. I estimate it will be ready much sooner than the RBD-native driver.

LVM on top of RBD is not ideal, as there is some tiny overhead, but it is still probably better than CephFS when it comes to raw performance (RBD really is something like a LUN from an HBA).

And yes, I do plan to create SMAPIv3 equivalents later, once I learn how.

For now I am still targeting XCP-ng 8.2, as that's what I use in production, and I haven't seen many SMAPIv3 drivers there.
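The "own LVM config" idea can be illustrated with a small Python sketch that only builds command lines. This is not the code from the commit: the pool and image names, the `admin` client id and the exact `types` entry are assumptions. `rbd map`, its `--id` option and LVM's per-command `--config` option are standard, and `types = [ "rbd", 1024 ]` is a commonly used way to let LVM scan RBD devices.

```python
from typing import List

# Assumption: the stock LVM config does not accept /dev/rbdN devices, so the
# wrapper declares the "rbd" device type itself (1024 = max partitions).
LVM_RBD_CONFIG = 'devices { types = [ "rbd", 1024 ] }'


def rbd_map_cmd(pool: str, image: str, client_id: str = "admin") -> List[str]:
    """argv to map an RBD image through the kernel client (e.g. again at boot)."""
    return ["rbd", "map", f"{pool}/{image}", "--id", client_id]


def lvm_cmd(tool: str, *args: str) -> List[str]:
    """argv for an LVM tool with the RBD-aware config passed per command,
    leaving /etc/lvm/lvm.conf as shipped."""
    return [tool, "--config", LVM_RBD_CONFIG, *args]
```

A wrapper would then run these with something like `subprocess.run(lvm_cmd("vgs"), check=True)`; passing the setting per command is what avoids touching the system-wide LVM configuration.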

-

@benapetr This is driven by hacky logic from 16 years ago:

- on revert, unserialize the previous state, and update the VM record with its saved values. As we do not want to modify that each time we add a field in the datamodel, use some low-level database functions to iterate over the fields of a record. Not very nice as it makes some assumptions on the database layer, but seems to work allright and I don't think that database layer will change a lot in the future.

I think it might be a good idea to add a revert RPC call to the storage interface that xapi can call, with a fallback to the current logic if necessary; xapi should be able to clean up the database afterwards. I'll ask other maintainers about this or possible alternatives, but since SMAPIv1 is considered deprecated, I doubt it will happen.

I have to say that SMAPIv3 was finally fixed upstream in June by XenServer (migrations were finally done!), and XCP-ng should get the update that fixes it in the coming weeks. Given this, I would encourage you to take all the learnings you've acquired while writing the driver and port it to SMAPIv3. SMAPIv1 simply has too many problems, some of them architectural, so in general XenServer and XCP-ng maintainers would like to see it finally go away.

> For now I am still targeting XCP-ng 8.2, as that's what I use in production, and I haven't seen many SMAPIv3 drivers there.

8.2 is out of support for XenServer, and for XCP-ng yesterday was the last day it was supported. You really should update.

-

@psafont thanks for the reply, but isn't that 16-year-old logic part of XAPI? I mean, the same hacky logic applies with SMAPIv3 too, doesn't it?

I was going through the SMAPIv3 docs, and from the SM driver's perspective (feature-wise) it doesn't seem much different. It looks to me more like many cosmetic changes that make packaging and modularization easier (definitely a good thing) but don't really change any fundamental SM logic. The RPCs are all the same as in SMAPIv1; even porting my own driver is probably going to be pretty trivial, it's just about splitting it into multiple files and adding some wrappers around it. But it still won't solve my problem: the rollback RPC is just not there, so I would instead need to support this "rollback by making another snapshot of a snapshot" logic enforced by XAPI.

-

@benapetr You're right. Unfortunately, there's no VDI revert operation that would let the revert happen on the storage side. This is shown in the documentation: https://xapi-project.github.io/new-docs/toolstack/features/snapshots/index.html (see the revert section)

There's an old proposal to add this: https://xapi-project.github.io/new-docs/design/snapshot-revert/index.html

But the effort fizzled out because imports currently do not set snapshot_of correctly, and the operation needs to work even when the field is not set correctly, as is the case now (falling back to the current code seems sensible): https://github.com/xapi-project/xen-api/pull/2058

This needs some effort to get fixed, I'll set up some ticketing so it can be prioritized accordingly.
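The constraint described above boils down to a guard: only trust a storage-level revert when snapshot_of actually links the snapshot to the VDI being reverted, and fall back otherwise. A minimal Python sketch follows; `is_a_snapshot` and `snapshot_of` are real VDI fields in the XAPI datamodel, but the `VDI` dataclass and `revert_path()` are simplified, hypothetical stand-ins (in XAPI, snapshot_of holds a reference rather than a uuid).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VDI:
    """Simplified stand-in for a XAPI VDI record (only the fields used here)."""
    uuid: str
    is_a_snapshot: bool = False
    snapshot_of: Optional[str] = None  # uuid of the source VDI, if known


def revert_path(vdi: VDI, snapshot: VDI) -> str:
    """Pick a native storage revert only when the metadata proves the link."""
    if snapshot.is_a_snapshot and snapshot.snapshot_of == vdi.uuid:
        return "native"
    # e.g. an imported snapshot whose snapshot_of was not set correctly
    return "clone-fallback"
```

Keeping the fallback as the default means a wrong or missing snapshot_of can only cost performance, never correctness.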

-

This post is deleted!

-

The author of the main recent effort is basically the person who posted just before you

-

@olivierlambert you replied basically just as I noticed that and deleted my message...

-

Nothing fruitful to add....

But...

Oooof....

This will be somewhat messy to clean up. I'm rooting for you guys though!!

-

There's some nice progress on @psafont's work regarding improved revert. I'm confident we'll get there

-

Hello,

Thanks for your work!

We have some test hypervisors at Gladhost, and we would be happy to use them to test your work on XCP-ng 8.3!

Best regards