Rolling Pool Update Not Working

-

I have a Pool of 2 test XCP-ng Hosts. I saw yesterday, on the Pool, that I need 69 patches. I've done a Rolling Pool Update (RPU) before without issue, but when I attempted one yesterday, nothing happened. I have a 2nd XCP-ng lab with 2 Hosts, and that one updated fine using RPU, though it only has 1 VM (XO) in the Pool. The Pool where the RPU failed has about 10 VMs.

I do meet the requirements listed in the doc:

https://docs.xcp-ng.org/management/updates/#rolling-pool-update-rpu

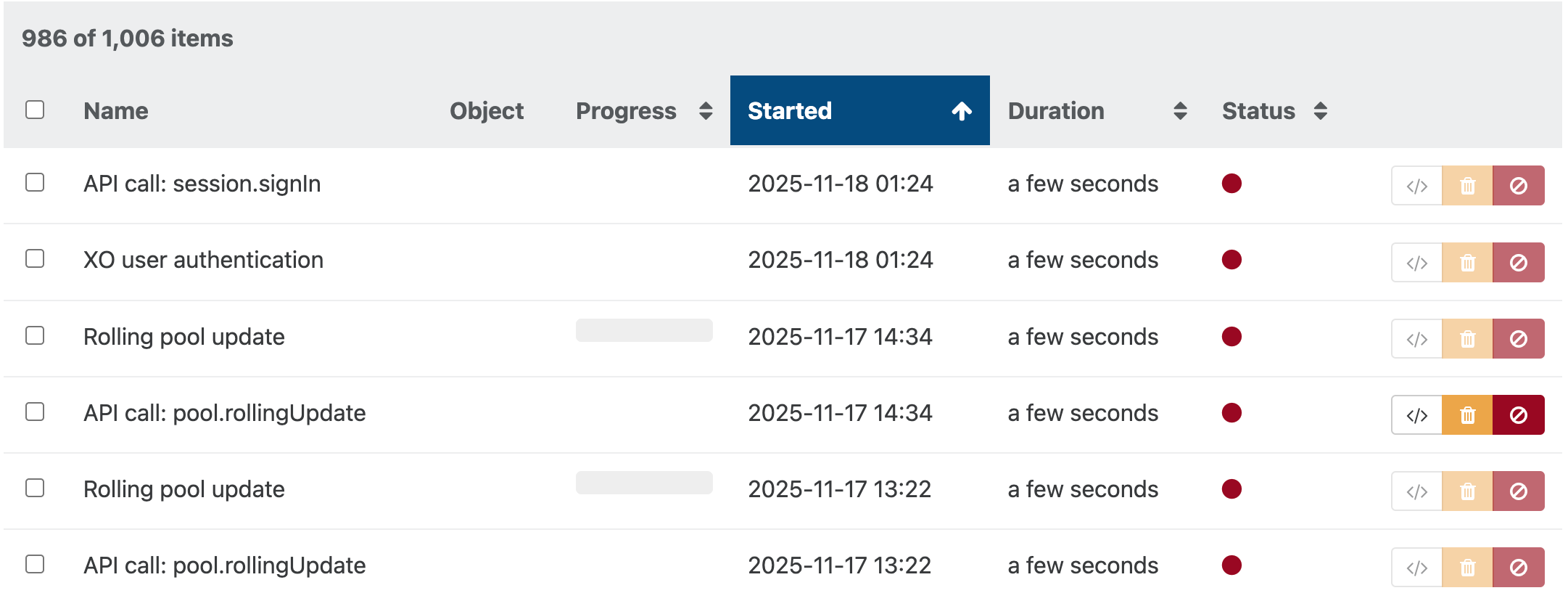

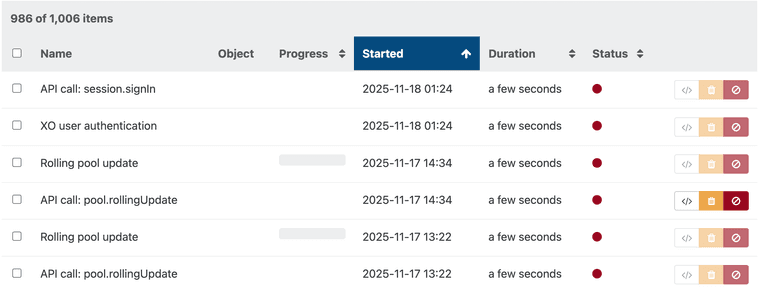

...i.e. all VMs are on shared storage. Any way to see why this is failing? I re-attempted the RPU after about an hour, but both the initial and 2nd try show 0 progress.

Thoughts?

Thanks! -

@coolsport00 could you click on

and post the error log here ? -

@Pilow hi...yes; thank you for that. I see the Hosts seem to have updated, but they are not able to reboot due to some VM, it appears (the log doesn't show the friendly/display VM name). See below:

{
  "id": "0mi3j7qhj",
  "properties": {
    "poolId": "06f0d0d0-5745-9750-12b5-f5698a0dfba2",
    "poolName": "XCP-Lab",
    "progress": 0,
    "name": "Rolling pool update",
    "userId": "dd12cef8-919e-4ab7-97ae-75253331c84f"
  },
  "start": 1763407364311,
  "status": "failure",
  "updatedAt": 1763407364581,
  "tasks": [
    {
      "id": "9vj4btqb1qk",
      "properties": {
        "name": "Listing missing patches",
        "total": 2,
        "progress": 100
      },
      "start": 1763407364314,
      "status": "success",
      "tasks": [
        {
          "id": "yglme9v6vz",
          "properties": {
            "name": "Listing missing patches for host 42f6368c-9dd9-4ea3-ac01-188a6476280d",
            "hostId": "42f6368c-9dd9-4ea3-ac01-188a6476280d",
            "hostName": "nkc-xcpng-2.nkcschools.org"
          },
          "start": 1763407364316,
          "status": "success",
          "end": 1763407364318
        },
        {
          "id": "tou8ffgte7",
          "properties": {
            "name": "Listing missing patches for host 1f991575-e08d-4c3d-a651-07e4ccad6769",
            "hostId": "1f991575-e08d-4c3d-a651-07e4ccad6769",
            "hostName": "nkc-xcpng-1.nkcschools.org"
          },
          "start": 1763407364317,
          "status": "success",
          "end": 1763407364318
        }
      ],
      "end": 1763407364319
    },
    {
      "id": "0i923wgi5wlg",
      "properties": {
        "name": "Updating and rebooting"
      },
      "start": 1763407364319,
      "status": "failure",
      "end": 1763407364578,
      "result": {
        "code": "CANNOT_EVACUATE_HOST",
        "params": [
          "VM_LACKS_FEATURE,OpaqueRef:492194ea-9ad0-b759-ab20-8f72ffbb0cbb"
        ],
        "call": {
          "duration": 248,
          "method": "host.assert_can_evacuate",
          "params": [
            " session id ",
            "OpaqueRef:0619ffdc-782a-b854-c350-5ce1cc354547"
          ]
        },
        "message": "CANNOT_EVACUATE_HOST(VM_LACKS_FEATURE,OpaqueRef:492194ea-9ad0-b759-ab20-8f72ffbb0cbb)",
        "name": "XapiError",
        "stack": "XapiError: CANNOT_EVACUATE_HOST(VM_LACKS_FEATURE,OpaqueRef:492194ea-9ad0-b759-ab20-8f72ffbb0cbb)\n at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202511170838/packages/xen-api/_XapiError.mjs:16:12)\n at file:///opt/xo/xo-builds/xen-orchestra-202511170838/packages/xen-api/transports/json-rpc.mjs:38:21\n at runNextTicks (node:internal/process/task_queues:65:5)\n at processImmediate (node:internal/timers:453:9)\n at process.callbackTrampoline (node:internal/async_hooks:130:17)"
      }
    }
  ],
  "end": 1763407364581,
  "result": {
    "code": "CANNOT_EVACUATE_HOST",
    "params": [
      "VM_LACKS_FEATURE,OpaqueRef:492194ea-9ad0-b759-ab20-8f72ffbb0cbb"
    ],
    "call": {
      "duration": 248,
      "method": "host.assert_can_evacuate",
      "params": [
        " session id ",
        "OpaqueRef:0619ffdc-782a-b854-c350-5ce1cc354547"
      ]
    },
    "message": "CANNOT_EVACUATE_HOST(VM_LACKS_FEATURE,OpaqueRef:492194ea-9ad0-b759-ab20-8f72ffbb0cbb)",
    "name": "XapiError",
    "stack": "XapiError: CANNOT_EVACUATE_HOST(VM_LACKS_FEATURE,OpaqueRef:492194ea-9ad0-b759-ab20-8f72ffbb0cbb)\n at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202511170838/packages/xen-api/_XapiError.mjs:16:12)\n at file:///opt/xo/xo-builds/xen-orchestra-202511170838/packages/xen-api/transports/json-rpc.mjs:38:21\n at runNextTicks (node:internal/process/task_queues:65:5)\n at processImmediate (node:internal/timers:453:9)\n at process.callbackTrampoline (node:internal/async_hooks:130:17)"
  }
}

I checked all my VMs and they are all on shared storage..so not sure why a VM is not able to live migrate?...

Thanks.
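For anyone reading a similar log, here is a minimal Python sketch of pulling the useful parts out of it: which step failed, which XAPI call raised the error, and which VM reference is named. The `LOG_TEXT` below is a trimmed copy of the log above, and the helper names `find_failures` and `split_reason` are made up for illustration; they are not part of Xen Orchestra.

```python
import json

# Trimmed copy of the task log posted above (only the fields used here).
LOG_TEXT = """
{
  "properties": {"name": "Rolling pool update", "progress": 0},
  "start": 1763407364311,
  "end": 1763407364581,
  "status": "failure",
  "tasks": [
    {"properties": {"name": "Listing missing patches"}, "status": "success"},
    {
      "properties": {"name": "Updating and rebooting"},
      "status": "failure",
      "result": {
        "code": "CANNOT_EVACUATE_HOST",
        "params": ["VM_LACKS_FEATURE,OpaqueRef:492194ea-9ad0-b759-ab20-8f72ffbb0cbb"],
        "call": {"method": "host.assert_can_evacuate"}
      }
    }
  ]
}
"""


def find_failures(task, path=()):
    """Yield (path, code, params, method) for the deepest failed tasks."""
    here = path + (task.get("properties", {}).get("name", "?"),)
    failed_children = [t for t in task.get("tasks", []) if t.get("status") == "failure"]
    if failed_children:
        # A failed parent usually just repeats its child's error, so descend.
        for child in failed_children:
            yield from find_failures(child, here)
    elif task.get("status") == "failure":
        result = task.get("result", {})
        yield here, result.get("code"), result.get("params", []), result.get("call", {}).get("method")


def split_reason(param):
    """Split 'REASON,OpaqueRef:...' (as it appears in this log) into its two parts."""
    reason, _, ref = param.partition(",")
    return reason, ref


log = json.loads(LOG_TEXT)
failures = list(find_failures(log))
for path, code, params, method in failures:
    print(" > ".join(path))
    print("  error :", code, "from", method)
    for reason, ref in map(split_reason, params):
        print("  reason:", reason, "| VM ref:", ref)
print("elapsed ms:", log["end"] - log["start"])
```

On this log it points at the "Updating and rebooting" step, the `host.assert_can_evacuate` call, and a single VM reference. The 270 ms between `start` and `end` is consistent with the 0 progress shown: the failure is raised by the evacuation check at the start of that step.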

-

@coolsport00 said in Rolling Pool Update Not Working:

492194ea-9ad0-b759-ab20-8f72ffbb0cbb

go in the VM view list, and enter this UUID in the search bar on top.

any luck on filtering the VM ? -

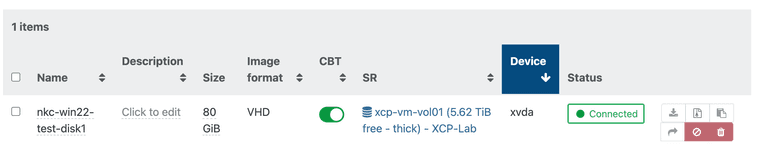

and then go to the DISK tab of this VM and take a screenshot (before removing the unnecessary disk in the CD-ROM drive that sits on a local SR of the host where this VM currently lives) -
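The same two checks (which VM is this, and is anything attached from a non-shared SR) can also be scripted. Below is a rough sketch written against the call names of the XenAPI Python bindings (`VM.get_name_label`, `VM.get_VBDs`, `VBD.get_record`, `VDI.get_SR`, `SR.get_shared`). The function name `vm_local_disks` and the stub session are hypothetical, and the stub exists only so the sketch runs without a pool; against a real pool you would pass a logged-in `XenAPI.Session` instead.

```python
from types import SimpleNamespace


def vm_local_disks(session, vm_ref):
    """Return the VM's name and any attached disks/ISOs that live on non-shared SRs."""
    xapi = session.xenapi
    name = xapi.VM.get_name_label(vm_ref)
    local = []
    for vbd_ref in xapi.VM.get_VBDs(vm_ref):
        vbd = xapi.VBD.get_record(vbd_ref)
        if vbd["empty"]:
            continue  # empty CD drive, nothing attached
        sr_ref = xapi.VDI.get_SR(vbd["VDI"])
        if not xapi.SR.get_shared(sr_ref):
            local.append((vbd["type"],
                          xapi.VDI.get_name_label(vbd["VDI"]),
                          xapi.SR.get_name_label(sr_ref)))
    return name, local


def make_stub_session():
    """Stand-in for a real session: one shared disk, one ISO on a local SR, one empty drive."""
    vbds = {
        "vbd1": {"empty": False, "type": "Disk", "VDI": "vdi1"},
        "vbd2": {"empty": False, "type": "CD", "VDI": "vdi2"},
        "vbd3": {"empty": True, "type": "CD", "VDI": "OpaqueRef:NULL"},
    }
    vdis = {"vdi1": ("root disk", "sr_shared"), "vdi2": ("tools.iso", "sr_local")}
    srs = {"sr_shared": ("iSCSI SR", True), "sr_local": ("Local ISO SR", False)}
    xenapi = SimpleNamespace(
        VM=SimpleNamespace(get_name_label=lambda ref: "test-vm",
                           get_VBDs=lambda ref: list(vbds)),
        VBD=SimpleNamespace(get_record=lambda ref: vbds[ref]),
        VDI=SimpleNamespace(get_SR=lambda ref: vdis[ref][1],
                            get_name_label=lambda ref: vdis[ref][0]),
        SR=SimpleNamespace(get_shared=lambda ref: srs[ref][1],
                           get_name_label=lambda ref: srs[ref][0]),
    )
    return SimpleNamespace(xenapi=xenapi)


name, local = vm_local_disks(make_stub_session(),
                             "OpaqueRef:492194ea-9ad0-b759-ab20-8f72ffbb0cbb")
print(name, local)
```

An empty `local` list would match what the DISK tab shows when nothing is attached from a local SR.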

@Pilow Sorry for the delay...had to go to a mtg real quick.

Thank you for the suggestion...I was indeed able to paste in the VM GUID and get the VM name. No ISOs etc. attached. And the disk is indeed on Shared Storage.

BUT....it does have snapshots. Is that the issue? I'd rather not remove them if possible. If they are the issue, can I power off the VM and resolve this issue you think?

Thanks.

-

And, to confirm...here's the screenshot (the volume shown is iSCSI storage connected to both Hosts)

-

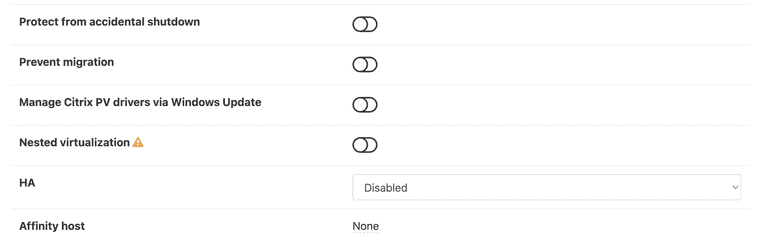

@coolsport00 are the hosts strictly identical? Can the VM be migrated manually?

Is there a VM affinity in the ADVANCED tab? Is PREVENT VM MIGRATION enabled?

If possible, try to shut down the VM. -
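The questions above map onto fields of the XAPI VM record, so they can be checked in one pass. This is a hedged sketch, not an official diagnostic: `migration_notes` is a made-up helper, the `example` record is invented, and it assumes that Xen Orchestra's "prevent migration" toggle shows up as entries in `blocked_operations`. It also assumes that `VM_LACKS_FEATURE` can point to a guest that does not advertise what live migration needs (often a guest-tools question), which this thread does not confirm.

```python
NULL_REF = "OpaqueRef:NULL"


def migration_notes(vm):
    """Return things worth checking before expecting a VM record to live-migrate."""
    if vm.get("power_state") != "Running":
        # Host evacuation only has to move running VMs.
        return ["VM is not running, so it does not need a live migration"]
    notes = []
    if vm.get("affinity", NULL_REF) != NULL_REF:
        notes.append("host affinity is set")
    blocked = vm.get("blocked_operations", {})
    for op in ("pool_migrate", "migrate_send"):
        if op in blocked:
            notes.append(f"{op} is blocked: {blocked[op]}")
    if "pool_migrate" not in vm.get("allowed_operations", []):
        notes.append("pool_migrate is not in allowed_operations")
    if vm.get("guest_metrics", NULL_REF) == NULL_REF:
        notes.append("no guest metrics reported (guest tools may be missing)")
    return notes


# Invented record for illustration; a real one would come from VM.get_record(ref).
example = {
    "power_state": "Running",
    "affinity": NULL_REF,
    "blocked_operations": {},
    "allowed_operations": ["clean_shutdown", "hard_shutdown", "snapshot"],
    "guest_metrics": NULL_REF,
}
notes = migration_notes(example)
for note in notes:
    print("-", note)
```

The first branch is the reason shutting the VM down is a quick test: a halted VM does not have to be live-migrated off the host.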

@Pilow Don't think so...this is just a "test" VM; don't think I even knew you could create affinity rules for XCP/XO. Let me check...

-

@Pilow - so...I powered down the VM and re-attempted the RPU. It seems snapshots are a deterrent to performing an RPU, because I see VMs migrating now, so the RPU can run.

For specificity - yes, my XCP Hosts are identical (CPU, RAM, etc); there were no affinities set (see screenshot), etc.

Thank you for the assist!

I think @olivierlambert or his tech writers need to update the RPU requirements area of the docs. Snapshots cannot be present or RPU will fail. Weird why that is.

-

coolsport00 marked this topic as a question

-

coolsport00 has marked this topic as solved