How to deploy the new k8s on latest XOA 5.106?

-

I'm trying to deploy the updated k8s cluster on XOA 5.106, but it fails silently.

After digging through the logs, I found this:

```json
{
  "id": "0ma67t63q",
  "properties": {
    "method": "xoa.recipe.createKubernetesCluster",
    "params": {
      "clusterName": "VersatusHPC",
      "controlPlanePoolSize": 3,
      "k8sVersion": "1.33",
      "nbNodes": 3,
      "network": "4751905f-b4db-2d54-d05d-0c1be97a0260",
      "sr": "f2d0eb72-4016-4b3b-8dc0-bc2a16df6c35",
      "sshKey": "ssh-ed25519 AAAAC3NzaC1lZDI"
    },
    "name": "API call: xoa.recipe.createKubernetesCluster",
    "userId": "fd28fb18-c3f1-429b-919f-4e8ae57dde0e",
    "type": "api.call"
  },
  "start": 1746155348774,
  "status": "failure",
  "updatedAt": 1746155466728,
  "end": 1746155466728,
  "result": {
    "code": 10,
    "data": {
      "errors": [
        {
          "instancePath": "",
          "schemaPath": "#/required",
          "keyword": "required",
          "params": {
            "missingProperty": "template"
          },
          "message": "must have required property 'template'"
        }
      ]
    },
    "message": "invalid parameters",
    "name": "XoError",
    "stack": "XoError: invalid parameters\n at Module.invalidParameters (/usr/local/lib/node_modules/xo-server/node_modules/xo-common/src/api-errors.js:21:32)\n at Xo.call (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/api.mjs:121:22)\n at Api.#callApiMethod (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/api.mjs:409:19)\n at Xoa.createCluster (/usr/local/lib/node_modules/xo-server-xoa/src/recipes/kubernetes-cluster.js:262:28)\n at Task.runInside (/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:175:22)\n at Task.run (/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:159:20)\n at Api.#callApiMethod (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/api.mjs:469:18)"
  }
}
```

However, that wasn't very helpful.

I tried changing options and attributes and got a little further, but it ended with a similar issue:

```json
{
  "id": "0ma78aljw",
  "properties": {
    "method": "xoa.recipe.createKubernetesCluster",
    "params": {
      "clusterName": "VersatusHPC",
      "controlPlaneIpAddresses": [
        "10.20.0.151/24",
        "10.20.0.152/24",
        "10.20.0.153/24"
      ],
      "controlPlanePoolSize": 3,
      "gatewayIpAddress": "10.20.0.1",
      "k8sVersion": "1.33",
      "nameservers": [
        "10.20.0.1"
      ],
      "nbNodes": 3,
      "network": "a28fd0a8-70e2-8fc6-cefa-12c46c8f47cb",
      "searches": [
        "local.versatushpc.com.br",
        "versatushpc.com.br"
      ],
      "sr": "f2d0eb72-4016-4b3b-8dc0-bc2a16df6c35",
      "sshKey": "ssh-ed25519 AAAAC3NzaC1lZDI1N",
      "vipAddress": "10.20.0.150/24",
      "workerNodeIpAddresses": [
        "10.20.0.154/24",
        "10.20.0.155/24",
        "10.20.0.156/24"
      ]
    },
    "name": "API call: xoa.recipe.createKubernetesCluster",
    "userId": "fd28fb18-c3f1-429b-919f-4e8ae57dde0e",
    "type": "api.call"
  },
  "start": 1746216628124,
  "status": "failure",
  "updatedAt": 1746217416799,
  "end": 1746217416798,
  "result": {
    "message": "Cannot read properties of undefined (reading 'VM')",
    "name": "TypeError",
    "stack": "TypeError: Cannot read properties of undefined (reading 'VM')\n at Xoa.createCluster (/usr/local/lib/node_modules/xo-server-xoa/src/recipes/kubernetes-cluster.js:488:22)\n at Task.runInside (/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:175:22)\n at Task.run (/usr/local/lib/node_modules/xo-server/node_modules/@vates/task/index.js:159:20)\n at Api.#callApiMethod (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/api.mjs:469:18)"
  }
}
```

I can see that 3 control-plane nodes and 3 workers were deployed. However, the task never completes.
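When a recipe fails like this, most of the useful information is buried in a few fields of the task entry. The following is a minimal Python sketch for pulling them out, assuming the entry has been saved to a file as JSON with the shape shown in the two logs above (`status`, `properties.method`, `result.name`/`result.message`, an optional `result.data.errors` list, and `start`/`end` in milliseconds). `summarize_task` and `summarize_file` are hypothetical helper names, not part of Xen Orchestra.

```python
import json


def summarize_task(entry: dict) -> list[str]:
    """Return human-readable lines describing an XO task log entry.

    Assumes the structure seen in the logs above; missing keys are tolerated.
    """
    props = entry.get("properties", {})
    result = entry.get("result", {})
    lines = [
        f"method: {props.get('method', '?')}",
        f"status: {entry.get('status', '?')}",
        f"error: {result.get('name', '?')}: {result.get('message', '?')}",
    ]
    # Parameter-validation failures list schema errors under result.data.errors.
    for err in result.get("data", {}).get("errors", []):
        missing = err.get("params", {}).get("missingProperty")
        if missing:
            lines.append(f"missing parameter: {missing}")
        else:
            lines.append(f"validation: {err.get('message', '?')}")
    # The first stack frame shows where the error was raised.
    stack = result.get("stack", "").split("\n")
    if len(stack) > 1:
        lines.append(f"first frame: {stack[1].strip()}")
    # Duration in seconds, from the millisecond timestamps.
    if "start" in entry and "end" in entry:
        lines.append(f"duration: {(entry['end'] - entry['start']) / 1000:.1f} s")
    return lines


def summarize_file(path: str) -> list[str]:
    """Load one task entry saved as a JSON file and summarize it."""
    with open(path) as f:
        return summarize_task(json.load(f))
```

On the first log, this surfaces `missing parameter: template` directly; on the second, it points at the `Xoa.createCluster` frame in `kubernetes-cluster.js`, which is the detail worth reporting.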

What further steps should I take to investigate the issue?

Thanks.

-

Let me ping the appropriate team here.

Ping @Team-DevOps

-

@ferrao Thank you for the logs; with them, I was able to reproduce the bug.

It happens at the end of the recipe, when the tag associated with the k8s cluster is saved to the user's custom filters. It has no effect on the cluster itself, because this step runs only after everything else has completed.

To avoid this bug in future runs, you can save a custom filter from the search field in the VMs list.

As for the first error you encountered, it's related to the template used to create the VMs, which does not seem to be available when the VMs are created. I'm looking into why.

-

I think I've narrowed the first one down to a DHCP server without enough leases for all the VMs: when I switched to static IP addresses, I got past it and hit the second log instead.
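Since switching to static addresses moved the failure past the first error, it may help to sanity-check the static layout (and the DHCP lease count) before redeploying. The sketch below is a local pre-flight check, not part of XOA: it reuses the parameter names from the second payload above, while the helper names `leases_needed` and `check_static_addressing` and the rules they apply (at least one lease or address per VM, a VIP distinct from the node addresses, every address on the gateway's subnet) are assumptions.

```python
import ipaddress


def leases_needed(params: dict) -> int:
    """Minimum DHCP leases for the cluster VMs (assumption: one per VM)."""
    return params["controlPlanePoolSize"] + params["nbNodes"]


def check_static_addressing(params: dict) -> list[str]:
    """Return problems found in static-IP parameters; an empty list means none found."""
    problems = []
    cp = params.get("controlPlaneIpAddresses", [])
    workers = params.get("workerNodeIpAddresses", [])
    # One address per control-plane VM and per worker VM.
    if len(cp) != params["controlPlanePoolSize"]:
        problems.append(
            f"{len(cp)} control-plane addresses for a pool of {params['controlPlanePoolSize']}"
        )
    if len(workers) != params["nbNodes"]:
        problems.append(f"{len(workers)} worker addresses for {params['nbNodes']} nodes")
    cidrs = cp + workers + ([params["vipAddress"]] if "vipAddress" in params else [])
    interfaces = [ipaddress.ip_interface(c) for c in cidrs]
    # No address may be used twice (VIP included).
    seen = set()
    for iface in interfaces:
        if iface.ip in seen:
            problems.append(f"duplicate address {iface.ip}")
        seen.add(iface.ip)
    # Every address should share the gateway's subnet without being the gateway.
    if "gatewayIpAddress" in params:
        gw = ipaddress.ip_address(params["gatewayIpAddress"])
        for iface in interfaces:
            if gw not in iface.network:
                problems.append(f"gateway {gw} is outside {iface.network} (for {iface.ip})")
            if iface.ip == gw:
                problems.append(f"{iface.ip} is the gateway address")
    return problems
```

Fed the `params` object from the second log, this reports no problems and gives 6 leases for 3 control-plane nodes plus 3 workers, which is the number a DHCP pool would need to cover at a minimum under the one-lease-per-VM assumption.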

I'll try creating the tag you mentioned and redeploy everything from scratch. Is there a specific tag text I should use? I'm not sure I fully understand the procedure.

-

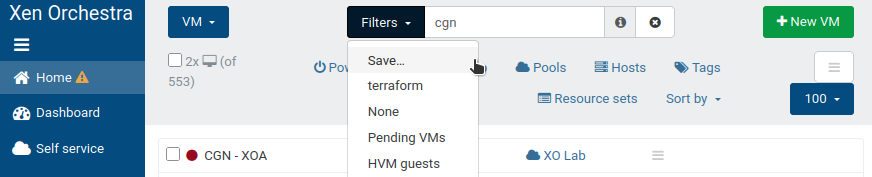

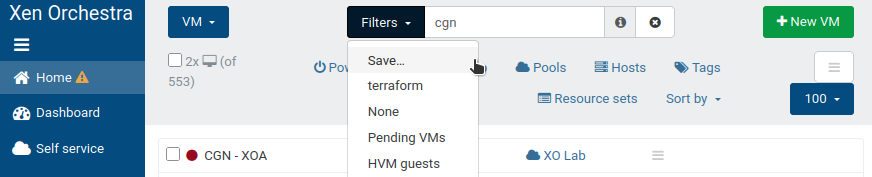

@ferrao Open the VMs list (Home > VMs), click "Filters" next to the search bar, then click "Save...". In the popup dialog, enter a name for the filter and click "OK".

This should be enough to work around the second bug, the one at the end that prevents the tag added to the cluster's VMs from being saved.

-

Hello. Thanks, that seems to have worked: the deployment screen no longer hangs. However, I don't get any feedback when it finishes.

And in the task log, the entry for the Kubernetes cluster seems to be gone:

`Cannot GET /rest/v0/tasks/0mabaot5t`

I had selected this task:

`API call: xoa.recipe.createKubernetesCluster` (2025-05-05 13:28)

Is this expected?

-

@ferrao Yes, it is. Once the task finishes, it disappears unless there was an error or failure.

-

These two bugs have been fixed in the latest release.