Testing ZFS with XCP-ng

-

Currently hundred of gigabytes gets transfered

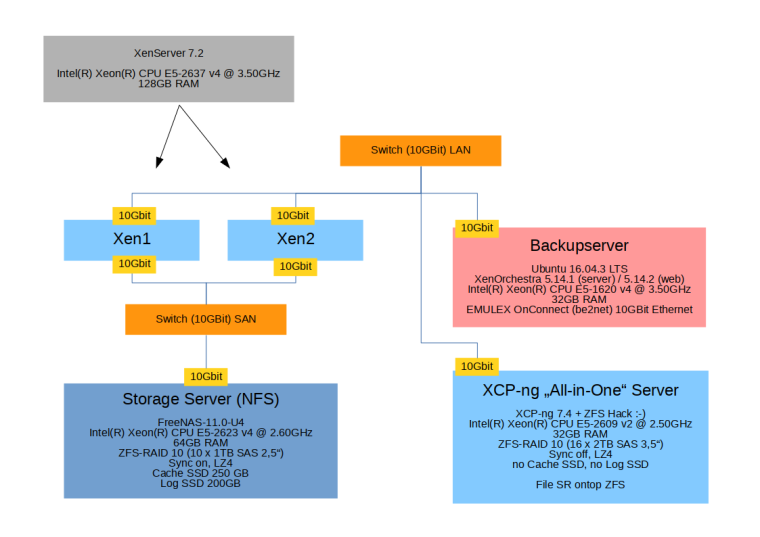

I switched xenorchestra from the VM to our hardware backup server, so we can use the 10Gbit cards. (The VM run iperf about 1,6 Gbit/s, the hardware at around 6 GBit/s.)

The data flow is:

NFS-Storage(ZFS) -> XenServer -> XO -> XCP-ng (ZFS), all connected over 10GBit Ethernet.I did not measure correct numbers, was just impressed and happy that it runs

I will try to express my data and meanings more detailed later...Setup

Settings

- Alle servers are connected via 10GBit ethernet cards (Emulex OnConnect)

- Xen1 and Xen2 are a pool, Xen1 is master

- XenOrchestra connects per http:// (no SSL to improve speed)

- DOM0 has 8GB RAM on XCP-ng AiO (so ZFS does use 4GB)

Numbers

- iperf between Backupserver and XCP-ng AiO: ~6 GBit's

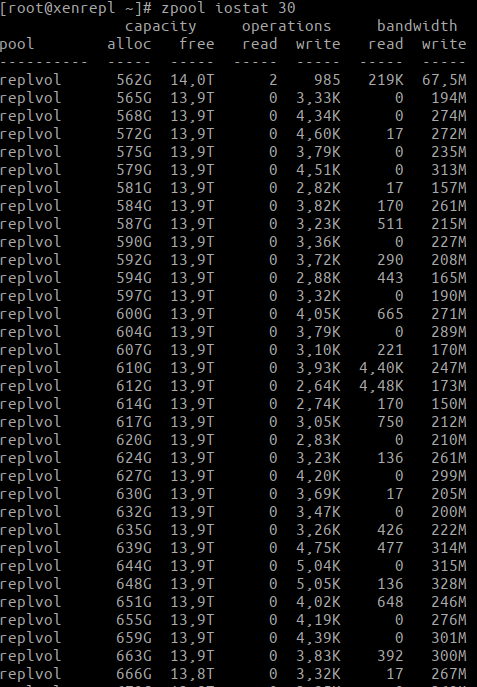

- Transfer speed of Continous Replication Job from Xen-Pool to XCP-ng AiO (output of

zpool iostatwith 30 seconds sample rate on XCP-ng AiO)

The speed varies a bit because of lz4 compression, current Compress Ratio: 1.64x

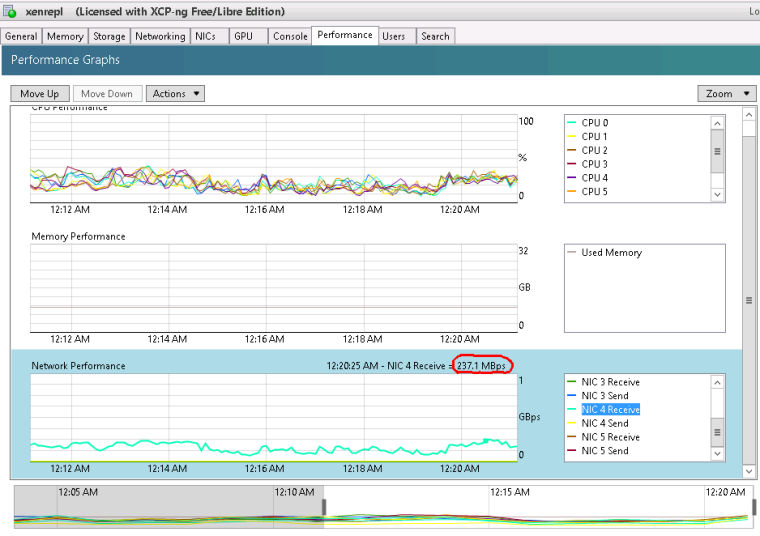

Here a quick look at the graphs (on XCP-ng AiO)

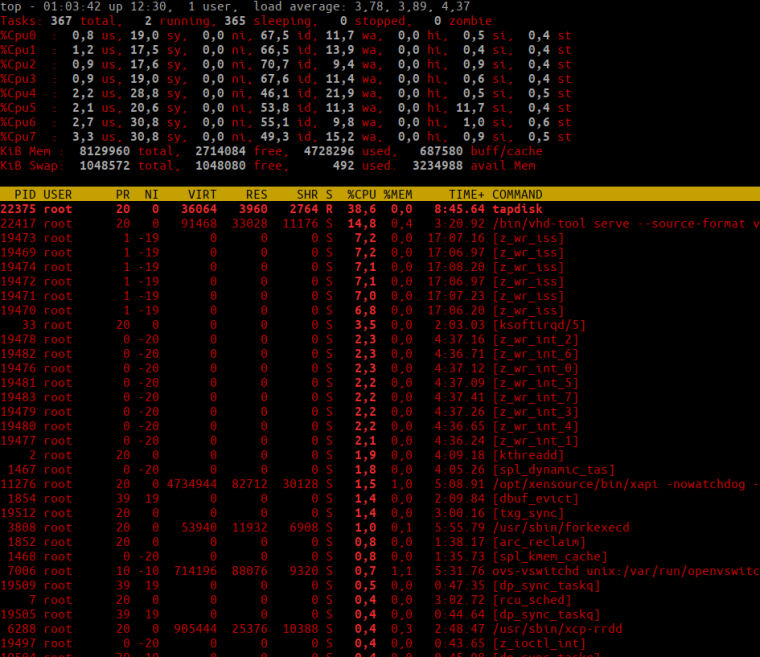

and a quick look at top (on XCP-ng AiO)

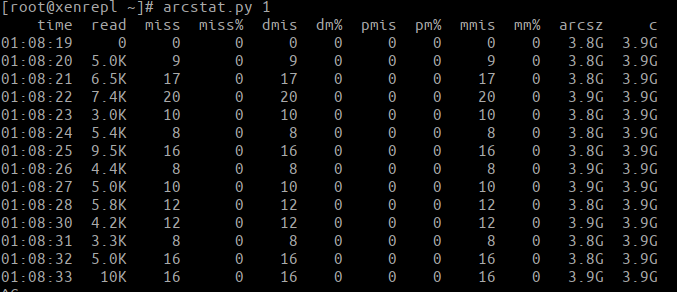

and arcstat.py (sample rate 1sec) (on XCP-ng AiO)

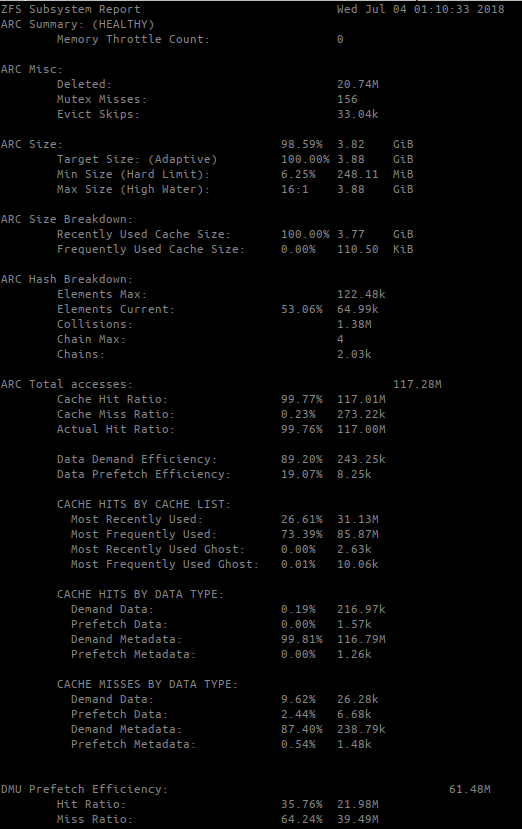

and arc_summary.py (on XCP-ng AiO)

@olivierlambert I hope you can see somehting with these numbers

-

To avoid the problem with XenOrchestra runing as VM (and not using the full 10GBit of the host) I would install it directly inside DOM0. It could be preinstalled in XCP-ng as WEB-Gui

Maybe I try this later on my AiO Server ...

-

Not a good idea to embed XO inside Dom0… (also it will cause issue if you have multiple XOs, it's meat to run at one place only).

That's why the virtual appliance is better

-

@olivierlambert My biggest Question: Why is this not a good Idea?

How do big datacenters solve the issue with the performance? Just install on Hardware? With virtual Appliance you are limited to GBit speed.

I run more than one XO's, one for Backups and one for Useraccess. Don't want users on my Backupserver.

Maybe this is something for a separat thread...

-

VM speed is not limited to GBit speed. XO proxies will deal with big infrastructures to avoid bottlenecks.

-

@olivierlambert ah, ok

-

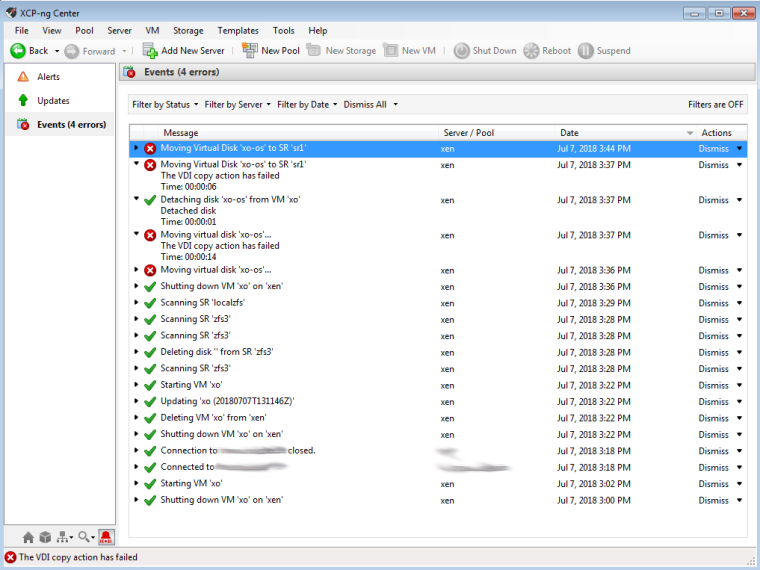

I did a little more testing with ZFS, tried to move a disk from

localpooltosrpoolSetup

- XCP-ng on single 500Gb disk, ZFS-Pool created on a separat partition on that disk (~420GB) ->

localpool-> SR namedlocalzfs - ZFS-Pool on 4 x 500GB Disks (Raid10) ->

srpool-> SR namedsr1

Error

Output of

/var/log/SMLogJul 7 15:44:07 xen SM: [28282] ['uuidgen', '-r'] Jul 7 15:44:07 xen SM: [28282] pread SUCCESS Jul 7 15:44:07 xen SM: [28282] lock: opening lock file /var/lock/sm/4c47f4b0-2504-fe56-085c-1ffe2269ddea/sr Jul 7 15:44:07 xen SM: [28282] lock: acquired /var/lock/sm/4c47f4b0-2504-fe56-085c-1ffe2269ddea/sr Jul 7 15:44:07 xen SM: [28282] vdi_create {'sr_uuid': '4c47f4b0-2504-fe56-085c-1ffe2269ddea', 'subtask_of': 'DummyRef:|62abb588-91e6-46d7-8c89-a1be48c843bd|VDI.create', 'vdi_type': 'system', 'args': ['10737418240', 'xo-os', '', '', 'false', '19700101T00:00:00Z', '', 'false'], 'o_direct': False, 'host_ref': 'OpaqueRef:ada301d3-f232-3421-527a-d511fd63f8c6', 'session_ref': 'OpaqueRef:02edf603-3cdf-444a-af75-1fb0a8d71216', 'device_config': {'SRmaster': 'true', 'location': '/mnt/srpool/sr1'}, 'command': 'vdi_create', 'sr_ref': 'OpaqueRef:0c8c4688-d402-4329-a99b-6273401246ec', 'vdi_sm_config': {}} Jul 7 15:44:07 xen SM: [28282] ['/usr/sbin/td-util', 'create', 'vhd', '10240', '/var/run/sr-mount/4c47f4b0-2504-fe56-085c-1ffe2269ddea/11a040ba-53fd-4119-80b3-7ea5a7e134c6.vhd'] Jul 7 15:44:07 xen SM: [28282] pread SUCCESS Jul 7 15:44:07 xen SM: [28282] ['/usr/sbin/td-util', 'query', 'vhd', '-v', '/var/run/sr-mount/4c47f4b0-2504-fe56-085c- 1ffe2269ddea/11a040ba-53fd-4119-80b3-7ea5a7e134c6.vhd'] Jul 7 15:44:07 xen SM: [28282] pread SUCCESS Jul 7 15:44:07 xen SM: [28282] lock: released /var/lock/sm/4c47f4b0-2504-fe56-085c-1ffe2269ddea/sr Jul 7 15:44:07 xen SM: [28282] lock: closed /var/lock/sm/4c47f4b0-2504-fe56-085c-1ffe2269ddea/sr Jul 7 15:44:07 xen SM: [28311] lock: opening lock file /var/lock/sm/319aebee-24f0-8232-0d9e-ad42a75d8154/sr Jul 7 15:44:07 xen SM: [28311] lock: acquired /var/lock/sm/319aebee-24f0-8232-0d9e-ad42a75d8154/sr Jul 7 15:44:07 xen SM: [28311] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/319aebee-24f0-8232-0d9e-ad42a75d8154/ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0.vhd'] Jul 7 15:44:07 xen SM: [28311] pread SUCCESS Jul 7 15:44:07 xen SM: [28311] vdi_attach {'sr_uuid': '319aebee-24f0-8232-0d9e-ad42a75d8154', 'subtask_of': 'DummyRef:|b0cce7fa-75bb-4677-981a-4383e5fe1487|VDI.attach', 'vdi_ref': 'OpaqueRef:9daa47a5-1cae-40c2-b62e-88a767f38877', 'vdi_on_boot': 'persist', 'args': ['false'], 'o_direct': False, 'vdi_location': 'ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0', 'host_ref': 'OpaqueRef:ada301d3-f232-3421-527a-d511fd63f8c6', 'session_ref': 'OpaqueRef:a27b0e88-cebe-41a7-bc0c-ae0eb46dd011', 'device_config': {'SRmaster': 'true', 'location': '/localpool/sr'}, 'command': 'vdi_attach', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:e4d0225c-1737-4496-8701-86a7f7ac18c1', 'vdi_uuid': 'ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0'} Jul 7 15:44:07 xen SM: [28311] lock: opening lock file /var/lock/sm/ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0/vdi Jul 7 15:44:07 xen SM: [28311] result: {'o_direct_reason': 'SR_NOT_SUPPORTED', 'params': '/dev/sm/backend/319aebee-24f0-8232-0d9e-ad42a75d8154/ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0', 'o_direct': True, 'xenstore_data': {'scsi/0x12/0x80': 'AIAAEmNhODhmOGRjLTRhYjYtNGIgIA==', 'scsi/0x12/0x83': 'AIMAMQIBAC1YRU5TUkMgIGNhODhmOGRjLTRhYjYtNGJlNy05MDg0LTFiNDFjYmM4YzFjMCA=', 'vdi-uuid': 'ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0', 'mem-pool': '319aebee-24f0-8232-0d9e-ad42a75d8154'}} Jul 7 15:44:07 xen SM: [28311] lock: closed /var/lock/sm/ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0/vdi Jul 7 15:44:07 xen SM: [28311] lock: released /var/lock/sm/319aebee-24f0-8232-0d9e-ad42a75d8154/sr Jul 7 15:44:07 xen SM: [28311] lock: closed /var/lock/sm/319aebee-24f0-8232-0d9e-ad42a75d8154/sr Jul 7 15:44:08 xen SM: [28337] lock: opening lock file /var/lock/sm/319aebee-24f0-8232-0d9e-ad42a75d8154/sr Jul 7 15:44:08 xen SM: [28337] lock: acquired /var/lock/sm/319aebee-24f0-8232-0d9e-ad42a75d8154/sr Jul 7 15:44:08 xen SM: [28337] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/319aebee-24f0-8232-0d9e-ad42a75d8154/ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0.vhd'] Jul 7 15:44:08 xen SM: [28337] pread SUCCESS Jul 7 15:44:08 xen SM: [28337] lock: released /var/lock/sm/319aebee-24f0-8232-0d9e-ad42a75d8154/sr Jul 7 15:44:08 xen SM: [28337] vdi_activate {'sr_uuid': '319aebee-24f0-8232-0d9e-ad42a75d8154', 'subtask_of': 'DummyRef:|1f6f53c2-0d1e-462c-aeea-5d109e55d44f|VDI.activate', 'vdi_ref': 'OpaqueRef:9daa47a5-1cae-40c2-b62e-88a767f38877', 'vdi_on_boot': 'persist', 'args': ['false'], 'o_direct': False, 'vdi_location': 'ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0', 'host_ref': 'OpaqueRef:ada301d3-f232-3421-527a-d511fd63f8c6', 'session_ref': 'OpaqueRef:392bd2b6-2a84-48ca-96f1-fe0bdaa96f20', 'device_config': {'SRmaster': 'true', 'location': '/localpool/sr'}, 'command': 'vdi_activate', 'vdi_allow_caching': 'false', 'sr_ref': 'OpaqueRef:e4d0225c-1737-4496-8701-86a7f7ac18c1', 'vdi_uuid': 'ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0'} Jul 7 15:44:08 xen SM: [28337] lock: opening lock file /var/lock/sm/ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0/vdi Jul 7 15:44:08 xen SM: [28337] blktap2.activate Jul 7 15:44:08 xen SM: [28337] lock: acquired /var/lock/sm/ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0/vdi Jul 7 15:44:08 xen SM: [28337] Adding tag to: ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0 Jul 7 15:44:08 xen SM: [28337] Activate lock succeeded Jul 7 15:44:08 xen SM: [28337] lock: opening lock file /var/lock/sm/319aebee-24f0-8232-0d9e-ad42a75d8154/sr Jul 7 15:44:08 xen SM: [28337] ['/usr/sbin/td-util', 'query', 'vhd', '-vpfb', '/var/run/sr-mount/319aebee-24f0-8232-0d9e-ad42a75d8154/ca88f8dc-4ab6-4be7-9084-1b41cbc8c1c0.vhd'] Jul 7 15:44:08 xen SM: [28337] pread SUCCESS - XCP-ng on single 500Gb disk, ZFS-Pool created on a separat partition on that disk (~420GB) ->

-

@borzel said in Testing ZFS with XCP-ng:

Jul 7 15:44:07 xen SM: [28311] result: {'o_direct_reason': 'SR_NOT_SUPPORTED',

Did find it after posting this and looking onto it... is this a known thing?

-

that's weird: https://github.com/xapi-project/sm/blob/master/drivers/blktap2.py#L1002

I don't even know how my tests did work before.

-

Maybe because it wasn't triggered without a VDI live migration?

-

did no livemigration ...just local copy of a vm

... ah, I should read before I answer ... -

@nraynaud Is there any info how to build blktap2 myself to test with my homelab?

I did find it here: https://xcp-ng.org/forum/topic/122/how-to-build-blktap-from-sources/3 -

I tested the updated version of blktap and build it like described in https://xcp-ng.org/forum/post/1677, but copying a vm within the SR fails

Async.VM.copy R:f286a572f8aa|xapi] Error in safe_clone_disks: Server_error(VDI_COPY_FAILED, [ End_of_file ]) -

the function

safe_clone_disksis located in https://github.com/xapi-project/xen-api/blob/72a9a2d6826e9e39d30fab0d6420de6a0dcc0dc5/ocaml/xapi/xapi_vm_clone.ml#L139it calls

clone_single_vdiin https://github.com/xapi-project/xen-api/blob/72a9a2d6826e9e39d30fab0d6420de6a0dcc0dc5/ocaml/xapi/xapi_vm_clone.ml#L116which calls

Client.Async.VDI.copyfrom a XAPI-OCaml ModuleClientwhich I can not find

-

found something in

/var/log/SMlogJul 21 19:52:40 xen SM: [17060] result: {'o_direct_reason': 'SR_NOT_SUPPORTED', 'params': '/dev/sm/backend /4c8bc619-98bd-9342-85fe-1ea4782c0cf2/8e901c80-0ead-4764-b3d9-b04b4376e4c4', 'o_direct': True, 'xenstore_data': {'scsi/0x12/0x80': 'AIAAEjhlOTAxYzgwLTBlYWQtNDcgIA==', 'scsi/0x12/0x83': 'AIMAMQIBAC1YRU5TUkMgIDhlOTAxYzgwLTBlYWQtNDc2NC1iM2Q5LWIwNGI0Mzc2ZTRjNCA=', 'vdi-uuid': '8e901c80-0ead-4764-b3d9-b04b4376e4c4', 'mem-pool': '4c8bc619-98bd-9342-85fe-1ea4782c0cf2'}}Note the

'o_direct': Truein this log entry. But I created the SR withother-config:o_direct=false -

-

yes, I did

Checked it multiple times. Will check it again today to be 100% sure.

Checked it multiple times. Will check it again today to be 100% sure.I also saw the right logoutput from @nraynaud new function where he probes the open with O_DIRECT and retries with O_DSYNC.

I have the "feel" that xapi or blktap copy's files in some situations without it's normal way. Maybe a special "file copy" without a open command?

Can I attach a debugger to blktap while in operration? This would also be needed for deep support of the whole server.

-

You can probably attach

gdbto a running blktap, yes. Install the blktap-debuginfo package that was produced when you built blktap to make debug symbols available. -

@borzel there is something fishy around here https://github.com/xapi-project/sm/blob/master/drivers/blktap2.py#L994 I am still unsure what to do.

I have not removed O_DIRECT everywhere in blktap, I was expecting to remove the remainder by the o_direct flag in python, but I guess I was wrong. we might patch the python.

-

Maybe we can create a new SR type "zfs" and copy the file SR implementation from https://github.com/xapi-project/sm/blob/master/drivers/FileSR.py

This would us later allow to create a more deeper integration of ZFS.