Delta backup fails for specific vm with VDI chain error

-

Hi Olivier!

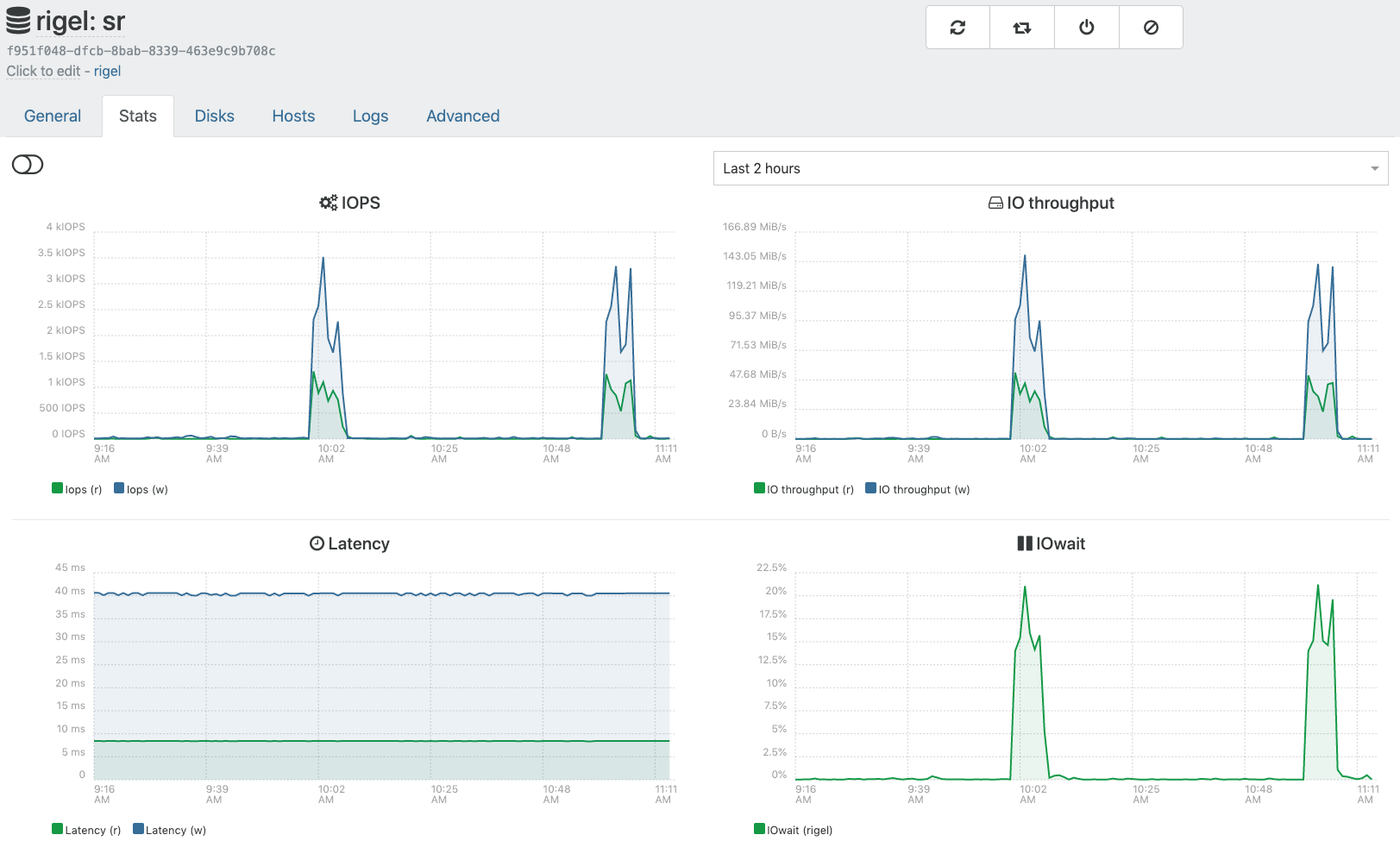

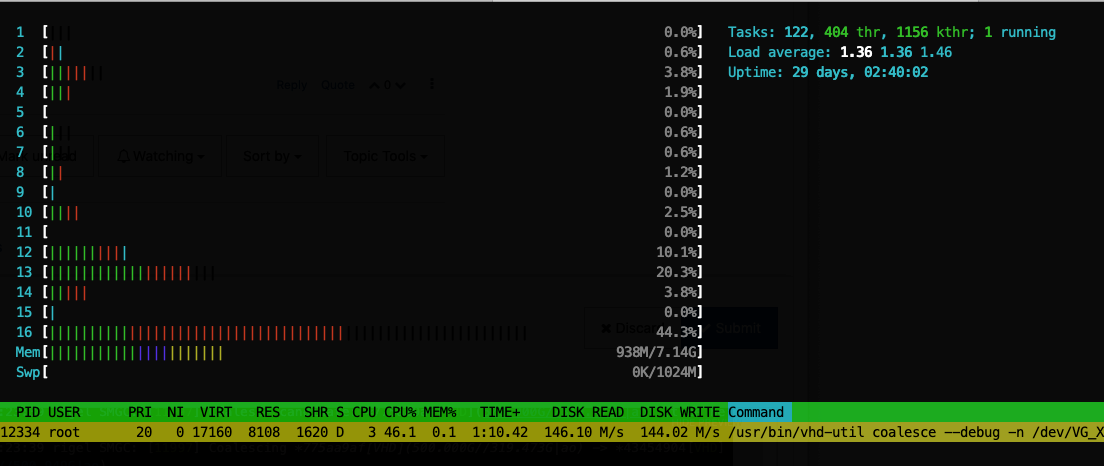

[11:09 rigel ~]# uptime
11:09:41 up 28 days, 21:18, 3 users, load average: 1,13, 1,28, 1,29

As I mentioned, usually it is "vhd-util scan" running at 100% on a single core with no disk reads or writes at all according to htop. Then comes "vhd-util coalesce" with e.g. 270 M/s read / 120 M/s write.

I'm not sure what the SR stats in XO are showing, as I sometimes see the coalescing I/O in htop without anything going on in the stats. Currently it looks like this:

-

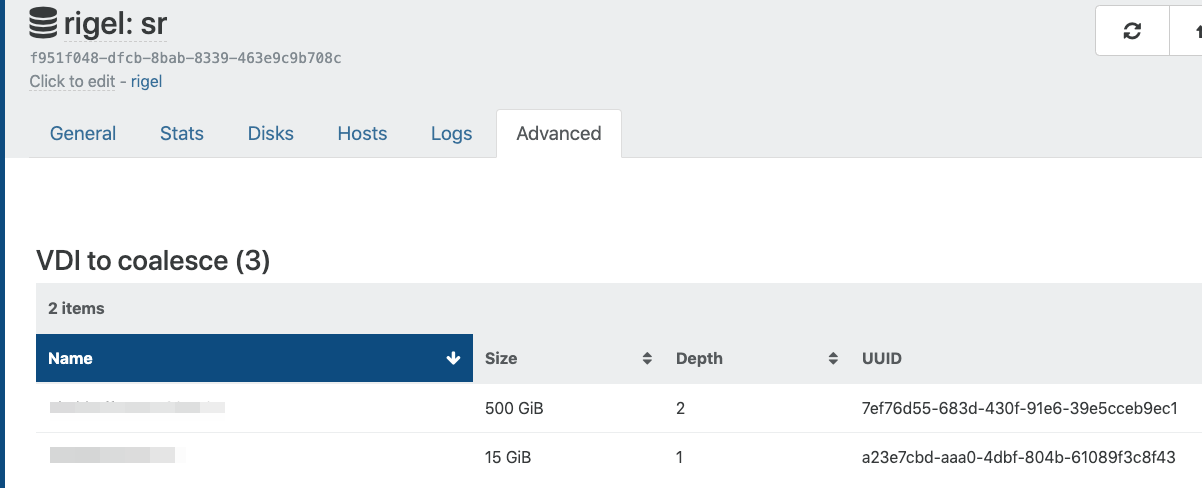

How long is your chain in the XO SR view, Advanced tab?

-

Usually looks like this:

Sometimes the depth of the 500 GB VDI goes down to 1 for a couple of seconds at most.

-

Can you do a `xapi-explore-sr` so I can see the whole chain in detail?

-

rigel: sr (28 VDIs)
├── HDD - 03190d28-19b7-4f99-b9b1-d0cace96eeca - 10.03 Gi
├── customer Web Dev: Boot - 03035c65-2e11-47c5-bc0c-c796937fc91d - 40.09 Gi
├─┬ customer server 2017 0 - 43454904-e56b-4375-b2fb-40691ab28e12 - 500.95 Gi
│ ├── customer server 2017 0 - dcdef81b-ec1a-481f-9c66-ea8a9f46b0c8 - 0.01 Gi
│ └─┬ base copy - 775aa9af-f731-45e0-a649-045ab1983935 - 301.96 Gi
│   └─┬ customer server 2017 0 - 31869c7f-b0b6-4deb-9a8e-95aad0baee4c - 0.15 Gi
│     └── customer server 2017 0 - 7ef76d55-683d-430f-91e6-39e5cceb9ec1 - 500.98 Gi
├─┬ base copy - 0494da11-f89a-436c-b1d0-e1a1f54bcc90 - 2.21 Gi
│ ├── customer yyy - 63b37e30-d9e8-4b54-9fae-780b5e134d79 - 0.01 Gi
│ └── customer yyy - 2ca68323-29c8-4a7a-807c-7302a0ba361a - 100.2 Gi
├─┬ base copy - 62feee6c-3aab-471e-89ae-184c5daf2c44 - 36.23 Gi
│ ├── customer aaa 2017 0 - 667cf6a9-7e63-4902-9fde-bbe9ef9926ce - 0.01 Gi
│ └── customer aaa 2017 0 - 19333817-ce9d-4825-9cd6-eab5bb9f3af7 - 50.11 Gi
├─┬ base copy - 971a59b8-1017-4d2f-8c08-68f0a0ec36e9 - 5.86 Gi
│ ├── customer zzz - 5dddf827-1c84-4807-aa1d-3506da0c8927 - 0.01 Gi
│ └── customer zzz - 48edb7f4-d8d4-4125-b9a5-829ef139b45c - 10.03 Gi
├─┬ base copy - cb9ff226-bbf6-4865-beb9-7c5028304576 - 34.61 Gi
│ ├── System - b70f6e7f-928a-4a65-ae68-0f80e0cd7955 - 0.01 Gi
│ └─┬ base copy - 2a99181e-09f0-4991-be44-19a8f9fbe2e6 - 4.24 Gi
│   ├── System - e5af497f-9dad-46cd-8233-bacf361ceed9 - 0.01 Gi
│   └── System - 6371a662-499f-4a21-a875-1b74ed304454 - 40.09 Gi
├─┬ base copy - d80f9f3d-314d-4842-80e2-847022c5a22c - 28.41 Gi
│ ├── Data - bfb778e9-414f-4283-bc72-e552fa24851a - 0.01 Gi
│ └─┬ base copy - f56b9b4e-4bbd-4336-88c0-9ce7758cc195 - 0.7 Gi
│   ├── Data - 3978cd9a-419d-4016-8f60-3621f8cacdd0 - 0.01 Gi
│   └── Data - 1c6e7b14-8cb8-4568-9be7-a22983a2ab1c - 70.14 Gi
└─┬ base copy - f74fa838-499a-4a1b-b9eb-87f65525f591 - 14.63 Gi
  └── XO customer 0 - a23e7cbd-aaa0-4dbf-804b-61089f3c8f43 - 15.04 Gi

-

Thanks! So here is the logic: leaf coalesce will (or should) merge a `base copy` and its child ONLY if this `base copy` has only one child.

Also, here is a good read: https://support.citrix.com/article/CTX201296

You can check whether your SR has leaf coalesce enabled. There's no reason not to have it, but it's still worth checking.
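
To make the "only one child" rule concrete, here is a minimal Python sketch. It only illustrates the condition as described in this post, not the actual garbage collector code; the `parents` mapping and the short VDI names are hypothetical.

```python
from collections import defaultdict

def leaf_coalesce_candidates(parents):
    """Return the VDIs that have exactly one direct child.

    `parents` maps each child VDI to its parent VDI. This input format is
    an assumption for illustration, e.g. built by hand from a chain dump.
    """
    children = defaultdict(list)
    for child, parent in parents.items():
        children[parent].append(child)
    # Only direct children are counted; deeper descendants are ignored.
    return sorted(vdi for vdi, kids in children.items() if len(kids) == 1)

# Hypothetical chain: "base-a" has two children, "base-b" has one.
parents = {
    "snap-1": "base-a",
    "base-b": "base-a",
    "active": "base-b",
}
print(leaf_coalesce_candidates(parents))  # ['base-b']
```

In this toy chain, `base-a` is skipped because a snapshot still hangs off it, which is why removing snapshots is what makes a base copy eligible.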

-

GC is an architectural problem in XenServer / CH. I've been fighting about this with Citrix for a long time, and I have never seen this problem actually solved, nor any troubleshooting documentation that really works.

For Enterprise / Premium support, the procedure to be executed is always a FULL COPY of the VM, which is unfeasible in most cases for a problem that is so recurrent.

In CH8 (updated) I have the same problems, and I started to have problems in other 7.1 CU2 pools after installing XS71ECU2009. Until then the process had been "stable for a while"; after installing it I went back to having problems, and unfortunately reinstalling and doing a rollback is not feasible... We opted to upgrade to CH8, but the problem remained...

-

Yeah, that's why we are focusing on SMAPIv3 instead of trying to "fix" something that's probably flawed by design on slow SRs (in general, it works relatively well on SSDs).

-

@olivierlambert said in Delta backup fails for specific vm with VDI chain error:

SMAPIv3

But @olivierlambert, as mentioned in other posts, even with all-SSD storage (SC5020F) the coalesce process has failed for us ... When you talk about SMAPIv3, is this something to be implemented exclusively in XCP, or will it be inherited from CH 8.x?

-

@_danielgurgel if coalesce fails even on SSD, there must be something causing the issue. The majority of users don't have this problem, so I suppose the thing to do is find what could cause it.

SMAPIv3 is done by Citrix, but we are doing stuff on our side (upstream as much as possible, which is harder since Citrix closed some sources). As soon as we have something that people can test, we'll push it into testing.

-

@olivierlambert said in Delta backup fails for specific vm with VDI chain error:

Thanks! So here is the logic: leaf coalesce will (or should) merge a `base copy` and its child ONLY if this `base copy` has only one child.

Also, here is a good read: https://support.citrix.com/article/CTX201296

You can check whether your SR has leaf coalesce enabled. There's no reason not to have it, but it's still worth checking.

With "only one child" you mean no nested child (aka grandchild)?

As I understand it, leaf-coalesce can be turned off explicitly and is otherwise on implicitly. It wasn't turned off.

The only thing I could do (I guess) was turn it on explicitly, just to make sure.

[15:31 rigel ~]# xe sr-param-get uuid=f951f048-dfcb-8bab-8339-463e9c9b708c param-name=other-config param-key=leaf-coalesce
true

Nothing has changed so far, so I guess I should go on and see what happens this time if I migrate the VM to the other host?

-

Okay, so it wasn't disabled, as it should be.

To trigger a coalesce, you need to delete a snapshot. So it's trivial to test: create a snapshot, then remove it. Then you'll see a VDI that must be coalesced in Xen Orchestra.

To answer the question: it doesn't matter if the child has a child too. As long as there is only one direct child, coalesce should be triggered.

-

That doesn't seem to have any effect on the behaviour other than a bunch of new messages in the log.

I'll check in a couple of hours. If the behaviour persists I'll migrate the vm and we'll see how it behaves on the other host.

-

Create a snap, display the chain with `xapi-explore-sr`. Then remove the snap, and check again. Something should have changed.
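
If the two dumps are long, comparing them by eye gets tedious. Below is a small Python sketch that extracts the VDI UUIDs from two pasted dumps and reports which ones disappeared or appeared. It assumes the dumps are plain text containing standard lowercase UUIDs; the sample trees and UUIDs are made up for illustration.

```python
import re

# Matches a standard lowercase 8-4-4-4-12 hexadecimal UUID.
UUID_RE = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}"
)

def vdi_uuids(dump):
    """Collect every UUID that appears in a text dump."""
    return set(UUID_RE.findall(dump))

def diff_dumps(before, after):
    """Return (removed, added) UUIDs between two dumps, each sorted."""
    old, new = vdi_uuids(before), vdi_uuids(after)
    return sorted(old - new), sorted(new - old)

# Hypothetical dumps taken before and after removing a snapshot.
before = """sr (3 VDIs)
└─┬ base copy - 11111111-1111-1111-1111-111111111111 - 10 Gi
  ├── snap - 22222222-2222-2222-2222-222222222222 - 0.01 Gi
  └── disk - 33333333-3333-3333-3333-333333333333 - 20 Gi
"""
after = """sr (2 VDIs)
└─┬ base copy - 11111111-1111-1111-1111-111111111111 - 10 Gi
  └── disk - 33333333-3333-3333-3333-333333333333 - 20 Gi
"""

removed, added = diff_dumps(before, after)
print("removed:", removed)
print("added:", added)
```

This only tracks which VDIs exist, not how they are nested, so a change in depth with the same set of VDIs would not show up here.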

-

It changed from

rigel: sr (30 VDIs)
├─┬ customer server 2017 0 - 43454904-e56b-4375-b2fb-40691ab28e12 - 500.95 Gi
│ ├── customer server 2017 0 - dcdef81b-ec1a-481f-9c66-ea8a9f46b0c8 - 0.01 Gi
│ └─┬ base copy - 775aa9af-f731-45e0-a649-045ab1983935 - 318.47 Gi
│   └─┬ customer server 2017 0 - d7204256-488d-4283-a991-8a59466e4f62 - 24.54 Gi
│     └─┬ base copy - 1578f775-4f53-4de4-a775-d94f04fbf701 - 0.05 Gi
│       ├── customer server 2017 0 - 8bcae3c3-15af-4c66-ad49-d76d516e211c - 0.01 Gi
│       └── customer server 2017 0 - 7ef76d55-683d-430f-91e6-39e5cceb9ec1 - 500.98 Gi

to

rigel: sr (29 VDIs)
├─┬ customer server 2017 0 - 43454904-e56b-4375-b2fb-40691ab28e12 - 500.95 Gi
│ ├── customer server 2017 0 - dcdef81b-ec1a-481f-9c66-ea8a9f46b0c8 - 0.01 Gi
│ └─┬ base copy - 775aa9af-f731-45e0-a649-045ab1983935 - 318.47 Gi
│   └─┬ customer server 2017 0 - d7204256-488d-4283-a991-8a59466e4f62 - 24.54 Gi
│     └─┬ base copy - 1578f775-4f53-4de4-a775-d94f04fbf701 - 0.05 Gi
│       └── customer server 2017 0 - 7ef76d55-683d-430f-91e6-39e5cceb9ec1 - 500.98 Gi

-

Can you use `--full`, because we can't have colors in copy/paste from your terminal?

-

A moment later it changed to

rigel: sr (28 VDIs)
├─┬ customer server 2017 0 - 43454904-e56b-4375-b2fb-40691ab28e12 - 500.95 Gi
│ ├── customer server 2017 0 - dcdef81b-ec1a-481f-9c66-ea8a9f46b0c8 - 0.01 Gi
│ └─┬ base copy - 775aa9af-f731-45e0-a649-045ab1983935 - 318.47 Gi
│   └─┬ base copy - 1578f775-4f53-4de4-a775-d94f04fbf701 - 0.05 Gi
│     └── customer server 2017 0 - 7ef76d55-683d-430f-91e6-39e5cceb9ec1 - 500.98 Gi

Unfortunately I cannot do a `--full`, as it gives me an error:

✖ Maximum call stack size exceeded
RangeError: Maximum call stack size exceeded
    at assign (/usr/lib/node_modules/xapi-explore-sr/node_modules/human-format/index.js:21:19)
    at humanFormat (/usr/lib/node_modules/xapi-explore-sr/node_modules/human-format/index.js:221:12)
    at formatSize (/usr/lib/node_modules/xapi-explore-sr/dist/index.js:66:36)
    at makeVdiNode (/usr/lib/node_modules/xapi-explore-sr/dist/index.js:230:60)
    at /usr/lib/node_modules/xapi-explore-sr/dist/index.js:241:26
    at /usr/lib/node_modules/xapi-explore-sr/dist/index.js:101:27
    at arrayEach (/usr/lib/node_modules/xapi-explore-sr/node_modules/lodash/_arrayEach.js:15:9)
    at forEach (/usr/lib/node_modules/xapi-explore-sr/node_modules/lodash/forEach.js:38:10)
    at mapFilter (/usr/lib/node_modules/xapi-explore-sr/dist/index.js:100:25)
    at makeVdiNode (/usr/lib/node_modules/xapi-explore-sr/dist/index.js:238:15)

-

Hmm strange. Can you try to remove all snapshots on this VM?

-

Sure. Did it.

The SR's Advanced tab now displays a depth of 3.

rigel: sr (27 VDIs)
├─┬ customer server 2017 0 - 43454904-e56b-4375-b2fb-40691ab28e12 - 500.95 Gi
│ └─┬ base copy - 775aa9af-f731-45e0-a649-045ab1983935 - 318.47 Gi
│   └─┬ customer server 2017 0 - 1d1efc9f-46e3-4b0d-b66c-163d1f262abb - 0.15 Gi
│     └── customer server 2017 0 - 7ef76d55-683d-430f-91e6-39e5cceb9ec1 - 500.98 Gi

This is something new... we may be on to something:

Aug 27 16:23:39 rigel SMGC: [11997] Num combined blocks = 255983
Aug 27 16:23:39 rigel SMGC: [11997] Coalesced size = 500.949G
Aug 27 16:23:39 rigel SMGC: [11997] Coalesce candidate: *775aa9af[VHD](500.000G//319.473G|ao) (tree height 3)
Aug 27 16:23:39 rigel SMGC: [11997] Coalescing *775aa9af[VHD](500.000G//319.473G|ao) -> *43454904[VHD](500.000G//500.949G|ao)

And after a while:

Aug 27 16:26:26 rigel SMGC: [11997] Removed vhd-blocks from *775aa9af[VHD](500.000G//319.473G|ao)
Aug 27 16:26:27 rigel SMGC: [11997] Set vhd-blocks = (omitted output) for *775aa9af[VHD](500.000G//319.473G|ao)
Aug 27 16:26:27 rigel SMGC: [11997] Set vhd-blocks = eJztzrENgDAAA8H9p/JooaAiVSQkTOCuc+Uf45RxdXc/bf6f99ulHVCWdsDHpR0ALEs7AF4s7QAAgJvSDoCNpR0AAAAAAAAAAAAAALCptAMAYEHaAQAAAAAA/FLaAQAAAAAAALCBA/4EhgU= for *43454904[VHD](500.000G//500.949G|ao)
Aug 27 16:26:27 rigel SMGC: [11997] Num combined blocks = 255983
Aug 27 16:26:27 rigel SMGC: [11997] Coalesced size = 500.949G

Depth is now down to 2 again.
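
For anyone who wants to follow these messages over a longer period, here is a rough Python sketch that picks the "Coalescing A -> B" lines out of the log. The regular expression is derived only from the lines pasted above, so treat the format as an assumption. The second size in the parentheses (319.473G here) is captured as-is; I assume it is the on-disk size, but that is a guess.

```python
import re

# Pattern inferred from the SMGC lines quoted in this thread (an assumption,
# not an official log format).
COALESCE_RE = re.compile(
    r"SMGC: \[\d+\] Coalescing "
    r"\*(?P<src>[0-9a-f]+)\[VHD\]"
    r"\((?P<size1>[\d.]+)G//(?P<size2>[\d.]+)G\|\w+\)"
    r" -> \*(?P<dst>[0-9a-f]+)\[VHD\]"
)

def coalesce_events(lines):
    """Return (source, destination, second_size_in_G) for each match."""
    events = []
    for line in lines:
        m = COALESCE_RE.search(line)
        if m:
            events.append((m["src"], m["dst"], float(m["size2"])))
    return events

# Sample lines copied from the log excerpt above.
log = [
    "Aug 27 16:23:39 rigel SMGC: [11997] Coalesced size = 500.949G",
    "Aug 27 16:23:39 rigel SMGC: [11997] Coalesce candidate: "
    "*775aa9af[VHD](500.000G//319.473G|ao) (tree height 3)",
    "Aug 27 16:23:39 rigel SMGC: [11997] Coalescing "
    "*775aa9af[VHD](500.000G//319.473G|ao) -> "
    "*43454904[VHD](500.000G//500.949G|ao)",
]
print(coalesce_events(log))  # [('775aa9af', '43454904', 319.473)]
```

The "Coalesce candidate" and "Coalesced size" lines are deliberately not matched, so each result is one coalesce that actually started.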

`xapi-explore-sr --full` now works, but looks the same to me:

rigel: sr (26 VDIs)
├─┬ customer server 2017 0 - 43454904-e56b-4375-b2fb-40691ab28e12 - 500.95 Gi
│ └─┬ base copy - 775aa9af-f731-45e0-a649-045ab1983935 - 318.47 Gi
│   └── customer server 2017 0 - 7ef76d55-683d-430f-91e6-39e5cceb9ec1 - 500.98 Gi

It's busy coalescing. We'll see how that ends.

-

Yeah, 140MiB/s for coalesce is really not bad

Let's see!
