-

Yeah, I did some tests on VDO, but I have to admit I think I only used it for compression.

What's the state of it from your perspective?

What's the state of it from your perspective? -

Well, at least it doesn't seem to be one of Red Hat's acquisitions that's EOL yet.

I've used it, perhaps without thinking it through all too well, on all my oVirt setups, mostly "because it's an option you can tick". It was only afterwards that I read that it shouldn't be used in certain use cases, but Red Hat is far from consistent in its documentation.

I've searched for studies, benchmarks, and recommendations and came up short, apart from a few vendor-sponsored ones.

I support a team of ML researchers and they have massive amounts of highly compressible data sets, which they then compressed and decompressed manually, often enough with both versions lying around afterwards.

So there my main intent was to just let them store the stuff how it's easiest to use, as plain visible data, and not worry about storage efficiency. There the LZ4 code and the bit manipulation support in today's CPUs seem to work faster than any NVMe storage, and they use it mostly in large swaths of sequential pipes. In ML even GPU memory is far too slow for random access, so I'm not concerned about storage IOPS.

Its actual use case seems to have come from VDI (that's virtual desktop infrastructure!) or Citrix's old battleground, where tons of virtual desktop images might fill and bottleneck the best of SANs in a morning's boot storm.

Again, I like its smart approach, which isn't about trying to guarantee the complete elimination of all duplicate blocks. Instead it will eliminate the duplicates that it can find in its immediate reach within a fixed amount of time and effort, by doing a compression/checksum run on any block that is being evicted from cache to see if it's a duplicate already: compression pays for the effort and the hash delivers the dedup potential on top! Just cool!

So if you have 100 CentOS instances in your farm, there is no guarantee it will eliminate all duplicate code, or indeed even a single duplicate, because the blocks might never be in the same cache on the same node, or at the same offset, as they are being written (there is no lazy duplicate elimination going on in the background).
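
To make that trade-off concrete, here is a toy Python model of the behaviour described above: each block is hashed, looked up in a bounded index of recently seen hashes, and compressed and stored only when no match is found. It is a sketch of the idea, not VDO's implementation: `BoundedDedupStore`, `index_capacity`, SHA-256 and zlib are all stand-ins picked for illustration (zlib because it ships with Python, where the post mentions LZ4).

```python
import hashlib
import zlib
from collections import OrderedDict


class BoundedDedupStore:
    """Toy model: dedup only against the most recently seen block hashes."""

    def __init__(self, index_capacity: int):
        self.index_capacity = index_capacity  # hypothetical knob, not a VDO setting
        self.index = OrderedDict()            # hash -> physical slot, oldest first
        self.physical = []                    # compressed blocks actually stored
        self.logical = []                     # logical block number -> physical slot

    def write(self, block: bytes) -> bool:
        """Store one block; return True if it was deduplicated."""
        digest = hashlib.sha256(block).digest()
        slot = self.index.get(digest)
        if slot is not None:
            self.index.move_to_end(digest)    # keep recently hit hashes around
            self.logical.append(slot)
            return True
        # Hash not within reach of the bounded index: store a new compressed copy.
        self.physical.append(zlib.compress(block))
        slot = len(self.physical) - 1
        self.index[digest] = slot
        if len(self.index) > self.index_capacity:
            self.index.popitem(last=False)    # forget the oldest hash
        self.logical.append(slot)
        return False

    def read(self, logical_index: int) -> bytes:
        """Return the original bytes of a logical block."""
        return zlib.decompress(self.physical[self.logical[logical_index]])


if __name__ == "__main__":
    store = BoundedDedupStore(index_capacity=2)
    a, b, c = (bytes([n]) * 4096 for n in (1, 2, 3))
    hits = [store.write(blk) for blk in (a, a, b, c, a)]
    print(hits, len(store.physical))
```

With an index of only two entries, the sequence a, a, b, c, a deduplicates the second write but stores the last `a` again, because its hash has already been pushed out of the index by then. That is the "no guarantee" part in miniature.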

And then it very much depends on your use case whether there will actually be any benefit, or if it's just needlessly spent CPU cycles and RAM.

Operationally it's wonderfully transparent on CentOS/RHEL and even fun to set up with Cockpit (not so much manually). When VDO volumes are consistent, they are also recoverable even without any external metadata, e.g. from another machine, which is a real treat. I don't think that they record their hashes as part of the blocks they write, which could be great to deliver some ZFS-like integrity checks.

But it takes just a single corrupted block to make everything on top unusable, which I've seen happen when a defective onboard RAID controller got swapped together with the motherboard and data had only been committed to the BBU-backed cache.

That's where Gluster helped out, and why I think they might compensate a bit for each other, with VDO compensating for the write amplification of Gluster and Gluster for the higher corruption risk of compressed data and opaque data structures.

And with Gluster underneath, it's never felt like the bottleneck was in VDO.

-

So my first XOASAN tests using nested virtual hosts were rather promising so I'd like to move to physical machines.

Adding extra disks on virtual hosts is obviously easy, but the NUCs I'm using for that only have one single NVMe drive each without any option to hide the majority of the space e.g. via a RAID controller. The installer just grabs all of that for the default local storage and I have nothing left for XOASAN.

It does mention an "advanced installation option" somewhere in those dialogs, but that never appears.

Any recommendation on how to either keep the installer from grabbing everything or shrink the local storage after a fresh install?

-

Hi @abufrejoval

Just having a doubt: it's now called XOSTOR, so you tested XOSTOR right?

Regarding your installer question: you can uncheck the disk for VM storage, then it will leave free space on the disk after the installation

-

sorry, XOSTORE of course

And why did I think I had already tried that?

Must be late...

Merci!

-

You are welcome and thanks a lot for your tests! It's very important for us to have external users playing with it

-

Hi,

I have been able to create a small lab with 2 XCP-ng hosts and would like to use XOSTOR. First I created the first XCP-ng box (xcp-ng-01) and created a new XOSTOR SR with all the commands above. All is working. I have a new SR with 1 host only.

Now, if I want to add a new host to the SR, how can I do this? I would like to simulate adding a new host/disk to the SR. Is it possible?

-

Hi, the 8.2.1 test five image wouldn't let me install XOSTOR yet (and it wasn't recommended): so is this release variant again compatible with XOSTOR?

-

@abufrejoval @ronan-a has updated the test packages in the linstor test repository, so it should.

-

I tried to do the setup with 3 hosts, and followed the commands. Now I get this error when I try to create the SR.

xe sr-create type=linstor name-label=XOSTOR host-uuid=43b39fc0-002f-4347-a3e8-16e1284cfcb3 device-config:hosts=xcp-ng-01,xcp-ng-02,xcp-ng-03 device-config:group-name=linstor_group/thin_device device-config:redundancy=3 shared=true device-config:provisionning=thin

Error code: SR_BACKEND_FAILURE_5006
Error parameters: , LINSTOR SR creation error [opterr=Could not create SP `xcp-sr-linstor_group_thin_device` on node `xcp-ng-01`: (Node: 'xcp-ng-01') Expected 3 columns, but got 2],

No idea what I need to do with this error. It looks like an error when inserting into the DB.

-

Let me ping @ronan-a

-

@dumarjo Well, check if the lvm group is activated using

vgchange -a y [group].

-

@ronan-a I did this on all the servers and now the SR is up.

For the sake of scalability, is it possible to easily add a new host, or a new disk in a host? Do you have any tips about it?

Regards,

-

@dumarjo Well, for the moment we don't have a script to do that. But you can manually modify the LINSTOR database:

- You must find where the controller is running (for example with the command: linstor).
- Then execute this command on the host to add, where CONTROLLER_IP is given by the first step:

  linstor --controllers=<CONTROLLER_IP> node create --node-type combined $HOSTNAME

- If you want to add a new disk, you must create an LVM volume group with the name used in the other storage pools:

  linstor --controllers=<CONTROLLER_IP> storage-pool list

- Then you can create the storage pool (check that the last two params are the same as in the DB):

  linstor --controllers=<CONTROLLER_IP> storage-pool create {lvm or lvmthin} $HOSTNAME <STORAGE_POOL> <POOL_NAME>

- The PBDs of the current SR must be modified to use the new host, and a PBD must be created on the new host.

It's a little bit complex. So I think I will add a basic script to configure a new host or to remove an existing one. I do not recommend the usage of these commands, except in the case of tests.
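
Until such a script exists, the manual steps above could be collected into a small dry-run helper like the one below. It only assembles and prints the `linstor` command lines quoted in this post and does not execute anything. The helper name `linstor_add_host_commands`, the controller IP and the example values are hypothetical, and the PBD step is left out because no command for it is given here.

```python
import shlex


def linstor_add_host_commands(controller_ip, hostname, driver, storage_pool, pool_name):
    """Build (but do not run) the linstor commands from the steps above.

    All arguments are placeholders to be filled in by the reader; `driver`
    is the storage-pool type, either "lvm" or "lvmthin".
    """
    if driver not in ("lvm", "lvmthin"):
        raise ValueError("driver must be 'lvm' or 'lvmthin'")
    base = ["linstor", f"--controllers={controller_ip}"]
    return [
        # Register the new host as a combined node.
        base + ["node", "create", "--node-type", "combined", hostname],
        # List the existing storage pools to check the names already in use.
        base + ["storage-pool", "list"],
        # Create the storage pool on the new host with the same names.
        base + ["storage-pool", "create", driver, hostname, storage_pool, pool_name],
    ]


if __name__ == "__main__":
    # Hypothetical values: the IP is made up, the names are taken from this thread.
    commands = linstor_add_host_commands(
        controller_ip="192.168.2.10",
        hostname="xcp-ng-04",
        driver="lvmthin",
        storage_pool="xcp-sr-linstor_group_thin_device",
        pool_name="linstor_group/thin_device",
    )
    for argv in commands:
        print(shlex.join(argv))
```

Printing the commands first makes it easy to compare the last two parameters against the output of `storage-pool list` before running anything by hand, and the same warning applies: this is for test setups only.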


-

@ronan-a said in XOSTOR hyperconvergence preview:

- The PBDs of the current SR must be modified to use the new host, and a PBD must be created on the new host.

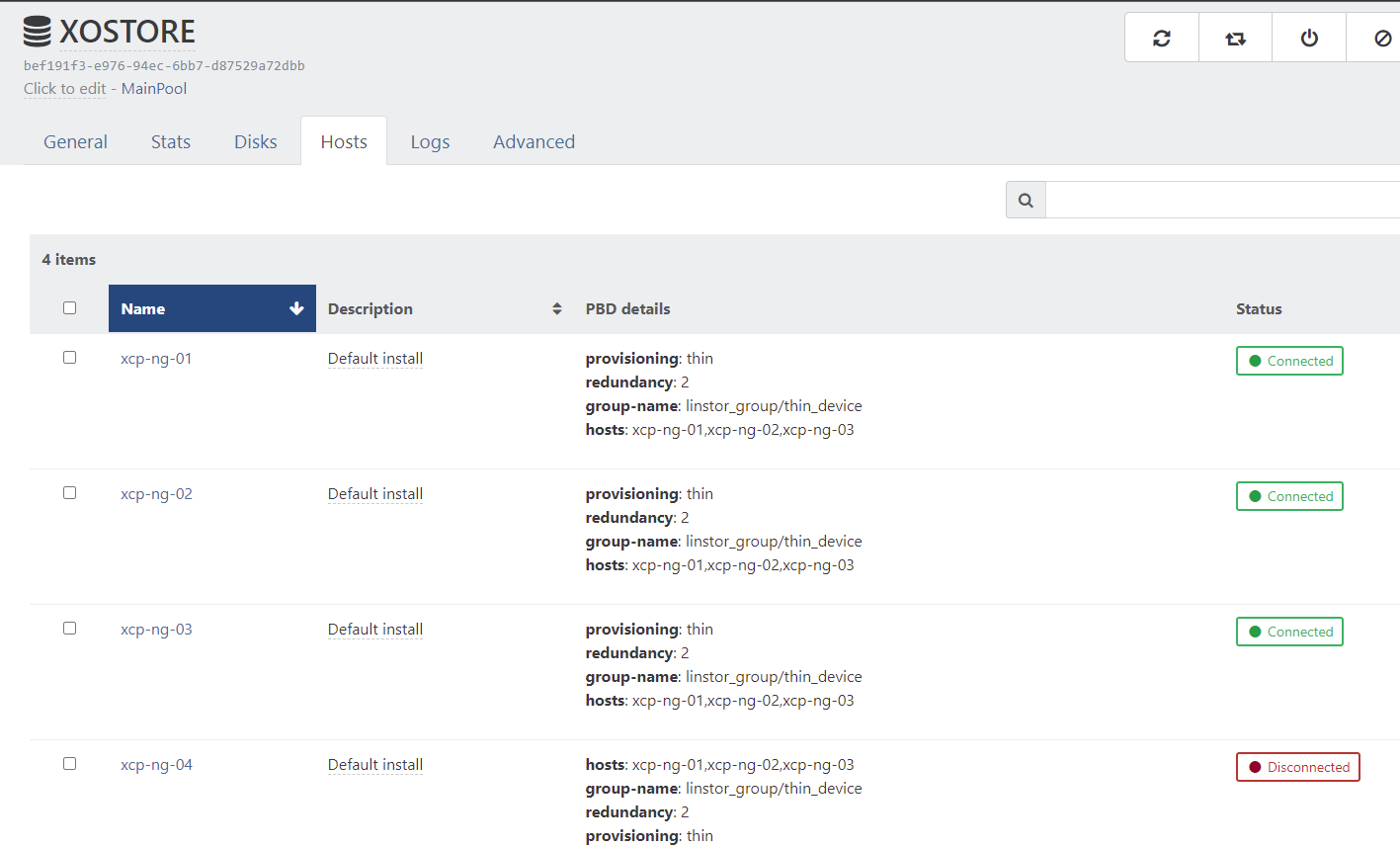

I'm relatively new with xcp-ng, and I'm a bit lost for this part. I'll give you more info on my current setup

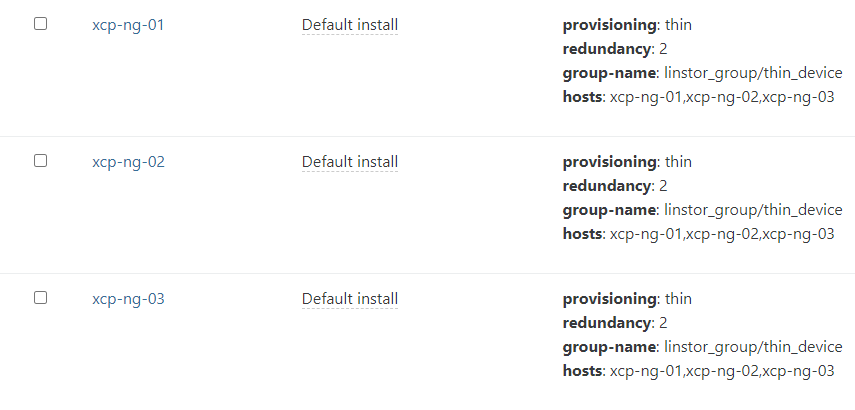

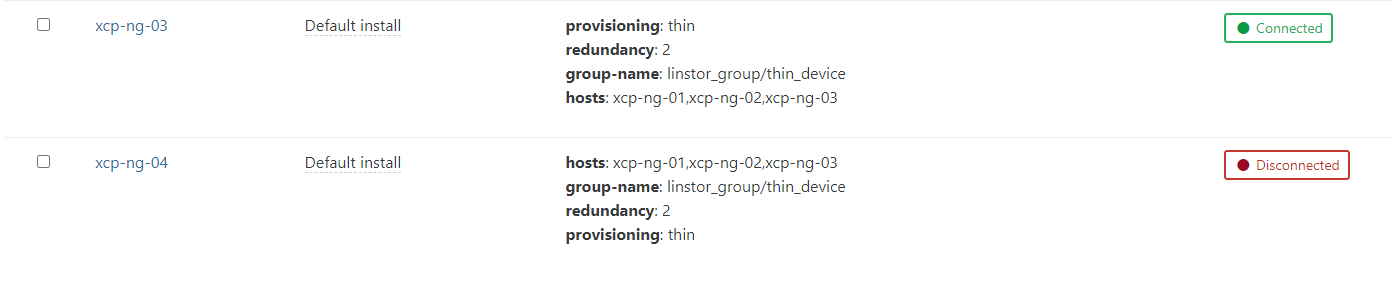

For now I have 3 hosts that are connected and working. I can install new VMs and start them on this SR.

The new host is already added to the pool (xcp-ng-04).

It's a little bit complex. So I think I will add a basic script to configure a new host or to remove an existing one. I do not recommend the usage of these commands, except in the case of tests.

Agree. This can help a bit.

-

@ronan-a

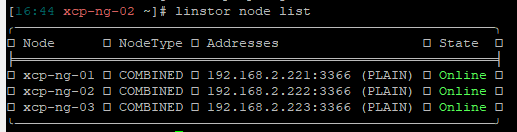

Another situation is that I cannot use the SR on any host that is not part of the group (linstor_group/thin_device). For now I have my new xcp-ng-04 host joined to the pool, and I would like to connect the SR to it.

The first error I got was that the driver is not installed. So I installed all the packages used in the install script (without the init disk section) and tried to connect the SR to xcp-ng-04. Now I get these errors in the log file:

[12:33 xcp-ng-01 ~]# tail -f /var/log/xensource.log | grep linstor
Mar 2 12:34:10 xcp-ng-01 xapi: [debug||2306 HTTPS 192.168.2.94->:::80|host.call_plugin R:98517b08066b|audit] Host.call_plugin host = '8ac2930f-f826-4a18-8330-06153e3e4054 (xcp-ng-02)'; plugin = 'linstor-manager'; fn = 'hasControllerRunning' args = [ 'hidden' ]
Mar 2 12:34:10 xcp-ng-01 xapi: [debug||2033 HTTPS 192.168.2.94->:::80|host.call_plugin R:e39e097fe8cb|audit] Host.call_plugin host = '81b06c7f-df55-4628-b27f-4e1e7850f900 (xcp-ng-03)'; plugin = 'linstor-manager'; fn = 'hasControllerRunning' args = [ 'hidden' ]
Mar 2 12:34:11 xcp-ng-01 xapi: [debug||2306 HTTPS 192.168.2.94->:::80|host.call_plugin R:16602b9fb9d1|audit] Host.call_plugin host = '8ac2930f-f826-4a18-8330-06153e3e4054 (xcp-ng-02)'; plugin = 'linstor-manager'; fn = 'hasControllerRunning' args = [ 'hidden' ]
Mar 2 12:34:11 xcp-ng-01 xapi: [debug||2033 HTTPS 192.168.2.94->:::80|host.call_plugin R:20c5d5ce11dc|audit] Host.call_plugin host = '81b06c7f-df55-4628-b27f-4e1e7850f900 (xcp-ng-03)'; plugin = 'linstor-manager'; fn = 'hasControllerRunning' args = [ 'hidden' ]
.....
.....
Mar 2 12:34:43 xcp-ng-01 xapi: [error||6940 ||backtrace] Async.PBD.plug R:4bbaefcdb2cb failed with exception Server_error(SR_BACKEND_FAILURE_47, [ ; The SR is not available [opterr=Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-03']]; ])
Mar 2 12:34:43 xcp-ng-01 xapi: [error||6940 ||backtrace] Raised Server_error(SR_BACKEND_FAILURE_47, [ ; The SR is not available [opterr=Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-03']]; ])

It looks like a controller needs to be started on all the hosts to be able to "connect" the new host?

Like I said, this is a new test to find out whether an XCP-ng host that is not part of the VG can use this SR.

I hope my intention is clear... I just clicked on the disconnected button in the UI.

regards,

-

Hi,

Is VM HA supposed to work if the VM is hosted on an XOSTOR-based SR? I am trying to get it to work, but the VM never restarts if I shut down the host where the VM is running.

Regards,

-

Pinging @ronan-a

-

@dumarjo Regarding your error during the attach call, could you send me the SMlog please?

-

@ronan-a

Here is some more info:

Mar 10 17:05:22 xcp-ng-04 SM: [28002] lock: opening lock file /var/lock/sm/bef191f3-e976-94ec-6bb7-d87529a72dbb/sr
Mar 10 17:05:22 xcp-ng-04 SM: [28002] lock: acquired /var/lock/sm/bef191f3-e976-94ec-6bb7-d87529a72dbb/sr
Mar 10 17:05:22 xcp-ng-04 SM: [28002] sr_attach {'sr_uuid': 'bef191f3-e976-94ec-6bb7-d87529a72dbb', 'subtask_of': 'DummyRef:|79eb31a6-806c-4883-8e8d-de59cde66469|SR.at$
Mar 10 17:05:22 xcp-ng-04 SMGC: [28002] === SR bef191f3-e976-94ec-6bb7-d87529a72dbb: abort ===
Mar 10 17:05:22 xcp-ng-04 SM: [28002] lock: opening lock file /var/lock/sm/bef191f3-e976-94ec-6bb7-d87529a72dbb/running
Mar 10 17:05:22 xcp-ng-04 SM: [28002] lock: opening lock file /var/lock/sm/bef191f3-e976-94ec-6bb7-d87529a72dbb/gc_active
Mar 10 17:05:22 xcp-ng-04 SM: [28002] lock: tried lock /var/lock/sm/bef191f3-e976-94ec-6bb7-d87529a72dbb/gc_active, acquired: True (exists: True)
Mar 10 17:05:22 xcp-ng-04 SMGC: [28002] abort: releasing the process lock
Mar 10 17:05:22 xcp-ng-04 SM: [28002] lock: released /var/lock/sm/bef191f3-e976-94ec-6bb7-d87529a72dbb/gc_active
Mar 10 17:05:22 xcp-ng-04 SM: [28002] lock: acquired /var/lock/sm/bef191f3-e976-94ec-6bb7-d87529a72dbb/running
Mar 10 17:05:22 xcp-ng-04 SM: [28002] RESET for SR bef191f3-e976-94ec-6bb7-d87529a72dbb (master: False)
Mar 10 17:05:22 xcp-ng-04 SM: [28002] lock: released /var/lock/sm/bef191f3-e976-94ec-6bb7-d87529a72dbb/running
Mar 10 17:05:23 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 0
Mar 10 17:05:27 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 1
Mar 10 17:05:30 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 2
Mar 10 17:05:33 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 3
Mar 10 17:05:37 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 4
Mar 10 17:05:40 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 5
Mar 10 17:05:43 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 6
Mar 10 17:05:47 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 7
Mar 10 17:05:50 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 8
Mar 10 17:05:53 xcp-ng-04 SM: [28002] Got exception: Error: Unable to connect to any of the given controller hosts: ['linstor://xcp-ng-02']. Retry number: 9
Mar 10 17:05:54 xcp-ng-04 SM: [28002] Raising exception [47, The SR is not available [opterr=Error: Unable to connect to any of the given controller hosts: ['linstor:/$
Mar 10 17:05:54 xcp-ng-04 SM: [28002] lock: released /var/lock/sm/bef191f3-e976-94ec-6bb7-d87529a72dbb/sr
Mar 10 17:05:54 xcp-ng-04 SM: [28002] ***** generic exception: sr_attach: EXCEPTION <class 'SR.SROSError'>, The SR is not available [opterr=Error: Unable to connect to$
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Mar 10 17:05:54 xcp-ng-04 SM: [28002] return self._run_locked(sr)
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Mar 10 17:05:54 xcp-ng-04 SM: [28002] rv = self._run(sr, target)
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/SRCommand.py", line 352, in _run
Mar 10 17:05:54 xcp-ng-04 SM: [28002] return sr.attach(sr_uuid)
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/LinstorSR", line 489, in wrap
Mar 10 17:05:54 xcp-ng-04 SM: [28002] return load(self, *args, **kwargs)
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/LinstorSR", line 415, in load
Mar 10 17:05:54 xcp-ng-04 SM: [28002] raise xs_errors.XenError('SRUnavailable', opterr=str(e))
Mar 10 17:05:54 xcp-ng-04 SM: [28002]
Mar 10 17:05:54 xcp-ng-04 SM: [28002] ***** LINSTOR resources on XCP-ng: EXCEPTION <class 'SR.SROSError'>, The SR is not available [opterr=Error: Unable to connect to $
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/SRCommand.py", line 378, in run
Mar 10 17:05:54 xcp-ng-04 SM: [28002] ret = cmd.run(sr)
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/SRCommand.py", line 110, in run
Mar 10 17:05:54 xcp-ng-04 SM: [28002] return self._run_locked(sr)
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/SRCommand.py", line 159, in _run_locked
Mar 10 17:05:54 xcp-ng-04 SM: [28002] rv = self._run(sr, target)
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/SRCommand.py", line 352, in _run
Mar 10 17:05:54 xcp-ng-04 SM: [28002] return sr.attach(sr_uuid)
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/LinstorSR", line 489, in wrap
Mar 10 17:05:54 xcp-ng-04 SM: [28002] return load(self, *args, **kwargs)
Mar 10 17:05:54 xcp-ng-04 SM: [28002] File "/opt/xensource/sm/LinstorSR", line 415, in load
Mar 10 17:05:54 xcp-ng-04 SM: [28002] raise xs_errors.XenError('SRUnavailable', opterr=str(e))
Mar 10 17:05:54 xcp-ng-04 SM: [28002]

This is what I have in the log file. If you need more info, let me know.
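
When reading a log like this, a quick tally of which controller URI the attach keeps failing against can save some scrolling. A minimal sketch, assuming the SMlog line format shown above; `count_controller_failures` is a hypothetical helper, not part of the SM tooling, and the sample lines are copied from this post.

```python
import re
from collections import Counter

# Matches the retry messages as they appear in the SMlog excerpt above.
RETRY_PATTERN = re.compile(
    r"Unable to connect to any of the given controller hosts: "
    r"\['(?P<uri>linstor://[^']+)'\]\. Retry number: (?P<retry>\d+)"
)


def count_controller_failures(lines):
    """Count failed connection attempts per controller URI."""
    failures = Counter()
    for line in lines:
        match = RETRY_PATTERN.search(line)
        if match:
            failures[match.group("uri")] += 1
    return failures


if __name__ == "__main__":
    sample = [
        "Mar 10 17:05:22 xcp-ng-04 SM: [28002] RESET for SR "
        "bef191f3-e976-94ec-6bb7-d87529a72dbb (master: False)",
        "Mar 10 17:05:23 xcp-ng-04 SM: [28002] Got exception: Error: Unable to "
        "connect to any of the given controller hosts: ['linstor://xcp-ng-02']. "
        "Retry number: 0",
        "Mar 10 17:05:27 xcp-ng-04 SM: [28002] Got exception: Error: Unable to "
        "connect to any of the given controller hosts: ['linstor://xcp-ng-02']. "
        "Retry number: 1",
    ]
    for uri, count in count_controller_failures(sample).items():
        print(uri, count)
```

In the excerpt above every retry targets `linstor://xcp-ng-02`, so the new host only ever tries that one controller address before giving up.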