unhealthy VDI chain

-

Greetings.

last few days receive this errors. Works fine for a months before.- have 3 pool, but errors only at 1 of them.

- all pools at same network, backup at same 2 storages.

- it begins at 3-4 VMs, but today 2 more backup broken too. One of VM just created, so almost have no backup chain.

- after moving disk to another storage backup chain fixed.

weird that it happens only at 1 pool. It have no any errors with a link to storage or network. Empty space is enough too.

is it possible if link down between XO and pool during backup? this pool located in another place. this is the only difference.

found nothing at SMlog, only at XO syslog.

UUID: e8e86755-8e74-e078-8c43-955a7d521df4 Start time: Thursday, May 19th 2022, 6:04:29 am End time: Thursday, May 19th 2022, 6:04:36 am Duration: a few seconds Reason: (unhealthy VDI chain) Job canceled to protect the VDI chain2022-05-18T21:12:15.067Z xo:backups:mergeWorker FATAL ENOENT: no such file or directory, rename '/mnt/vc-2600-1/xo-vm-b> error: [Error: ENOENT: no such file or directory, rename '/mnt/vc-2600-1/xo-vm-backups/.queue/clean-vm/_20220518T211039Z-p7h0kr8o71d' -> '/mnt/vc-2600-1/xo-vm-backups/.queue/clean-vm/__20220518T211039Z-p7h0kr8o71d'] { errno: -2, code: 'ENOENT', syscall: 'rename', path: '/mnt/vc-2600-1/xo-vm-backups/.queue/clean-vm/_20220518T211039Z-p7h0kr8o71d', dest: '/mnt/vc-2600-1/xo-vm-backups/.queue/clean-vm/__20220518T211039Z-p7h0kr8o71d' 2022-05-18T10:00:54.337Z xo:backups:DeltaBackupWriter WARN checkBaseVdis { error: [Error: ENOENT: no such file or directory, scandir '/mnt/vc-2600-1/xo-vm-backups/06ff383c-6c59-7293-98f5-9ed62745e567/vdis/9f3f2ced-14b7-4857-8a07-e7fb1acce485/260dac24-cad6-4362-ab9f-0a872e1c08f2'] { errno: -2, code: 'ENOENT', syscall: 'scandir', path: '/mnt/vc-2600-1/xo-vm-backups/06ff383c-6c59-7293-98f5-9ed62745e567/vdis/9f3f2ced-14b7-4857-8a07-e7fb1acce485/260dac24-cad6-4362-ab9f-0a872e1c08f2' -

Hi,

It's hard to assist without enough information. Are you using XO from the sources? If yes have you checked that before? https://xen-orchestra.com/docs/community.html

Or are you using XOA, if yes, on which release channel and edition?

-

@olivierlambert from sources.

Xen Orchestra, commit 25183

xo-server 5.93.0

xo-web 5.96.0 -

Please update on the latest commit available on

master, rebuild and try again

-

@olivierlambert updated to commit 30874.

after that all broken backup was fixed without moving vdi.

but after 1-2 iterations same error again, on same VMs.manual backup works always? (as i see), scheduled fail from time to time.

-

@Tristis-Oris What type of backup job and how often does it run?

-

@Danp delta backups. some once 24hours, other 8hours.

-

@Tristis-Oris orchestra log. 14 VMs, 2 failed. 2022-05-20T11_00_00.005Z - backup NG.txt

-

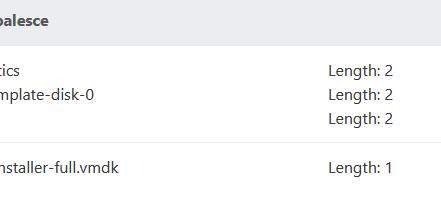

Have you checked your log files to see why the VDIs aren't coalescing? In XO, you can check under

Dashboard > Healthto observe the VDIs pending coalesce . -

You might have simply a SR coalescing not fast enough for your backup

-

@Danp was a something here before, but now it empty.

i'll wait few more days to be sure is it still broken or not. -

@olivierlambert looks like XO got a bad cache with that storage.

on same physical storage i have 2 shares connected to pool. All vm is usualy at 1st.

So i moved broken VDI to 2nd, made few backups, everything works fine. Then moved VDI back to 1st, got this error again.

Any options how to fix that without migrating all VMs and removing this storage?

-

problem still exist even for new VMs.

-

You need to understand why it doesn't coalesce

/var/log/SMlogis your friend. -

@olivierlambert nothing about failed VMs at SMlog for this period.

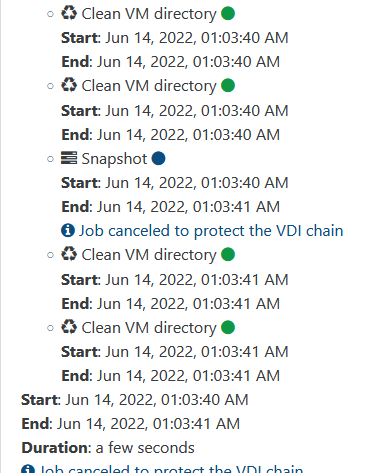

backup task started at 1:00. since 1:03 to 1:06 few backups was failed. log looks likeJun 14 01:00:35 name SM: [17853] pread SUCCESS Jun 14 01:00:35 name SM: [17853] lock: released /var/lock/sm/.nil/lvm Jun 14 01:00:35 name SM: [17853] lock: acquired /var/lock/sm/.nil/lvm Jun 14 01:00:36 name SM: [17853] lock: released /var/lock/sm/.nil/lvm Jun 14 01:00:36 name SM: [17853] Calling tap unpause with minor 8 Jun 14 01:00:36 name SM: [17853] ['/usr/sbin/tap-ctl', 'unpause', '-p', '28995', '-m', '8', '-a', 'vhd:/dev/VG_XenStorage-f1a514f3-2ef9-5705-7a7e-c8c23483122c/V HD-236b3cc3-80f7-40ff-9862-f8d4c2f69225'] Jun 14 01:00:36 name SM: [17853] = 0 Jun 14 01:00:36 name SM: [17853] lock: released /var/lock/sm/236b3cc3-80f7-40ff-9862-f8d4c2f69225/vdi Jun 14 01:06:02 name SM: [19600] on-slave.multi: {'vgName': 'VG_XenStorage-f1a514f3-2ef9-5705-7a7e-c8c23483122c', 'lvName1': 'VHD-1fa9eb66-49fe-4e93-b64d-fbb2c3 bb8745', 'action1': 'deactivateNoRefcount', 'action2': 'cleanupLockAndRefcount', 'uuid2': '1fa9eb66-49fe-4e93-b64d-fbb2c3bb8745', 'ns2': 'lvm-f1a514f3-2ef9-5705-7a7e-c8c2 3483122c'} Jun 14 01:06:02 name SM: [19600] LVMCache created for VG_XenStorage-f1a514f3-2ef9-5705-7a7e-c8c23483122c Jun 14 01:06:02 name SM: [19600] on-slave.action 1: deactivateNoRefcount Jun 14 01:06:02 name SM: [19600] LVMCache: will initialize now Jun 14 01:06:02 name SM: [19600] LVMCache: refreshing Jun 14 01:06:02 name SM: [19600] lock: opening lock file /var/lock/sm/.nil/lvm Jun 14 01:06:02 name SM: [19600] lock: acquired /var/lock/sm/.nil/lvm Jun 14 01:06:02 name SM: [19600] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-f1a514f3-2ef9-5705-7a7e-c8c23483122c'] Jun 14 01:06:03 name SM: [19600] pread SUCCESSno any info for 6 min.

-

This XO message is not a failure. It's a protection.

Your problem isn't in XO but in your storage that either doesn't coalesce (at all) or coalesce slower than you create new snapshots (ie when a new backup starts).

So you have to check on storage side if you have coalesce issues.

-

@olivierlambert but backup works fine on another share at same storage. I think it easier to recreate this one.

-

In your XO, SR detailed view, Advanced, you should see the disks to coalesce.

If you backup a VM having a disk not coalesced yet, XO will prevent the creation of a new snapshot.

You can disable all jobs and see if you end with zero VDI to coalesce. If it works, then it means some disks are backup faster than your SR can coalesce.

-

@olivierlambert thanks, i will try.

-

solved problem.

Storage was in weird state, can't dismount it from pool with bunch on unknown errors. Need a pool reboot to detach it. After reattach it works normally.