VM, missing disk

-

XO interface trouble?

I have a VM that boots and operates okay. But I'm not able to migrate the attached disks from one storage to another.

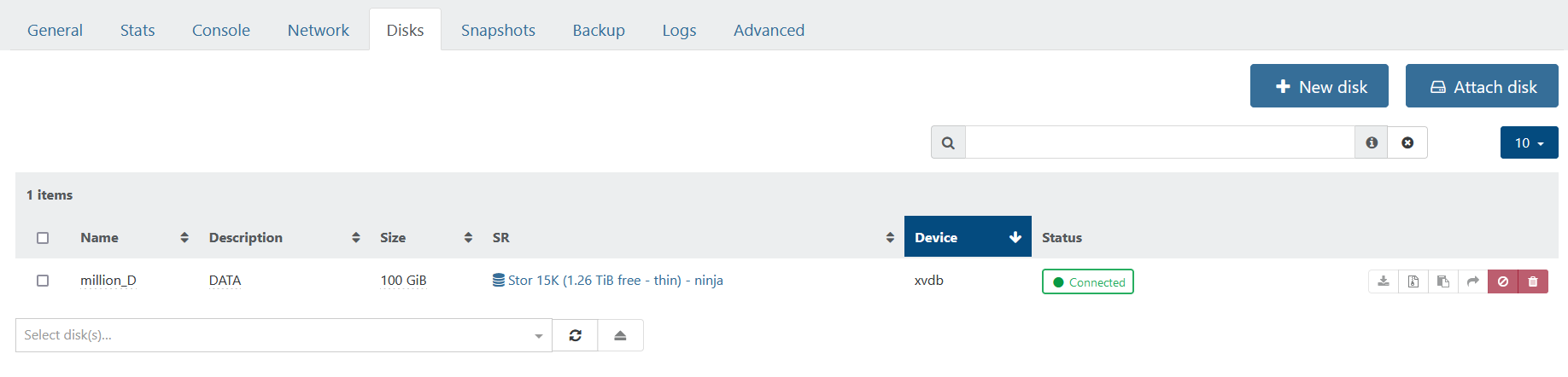

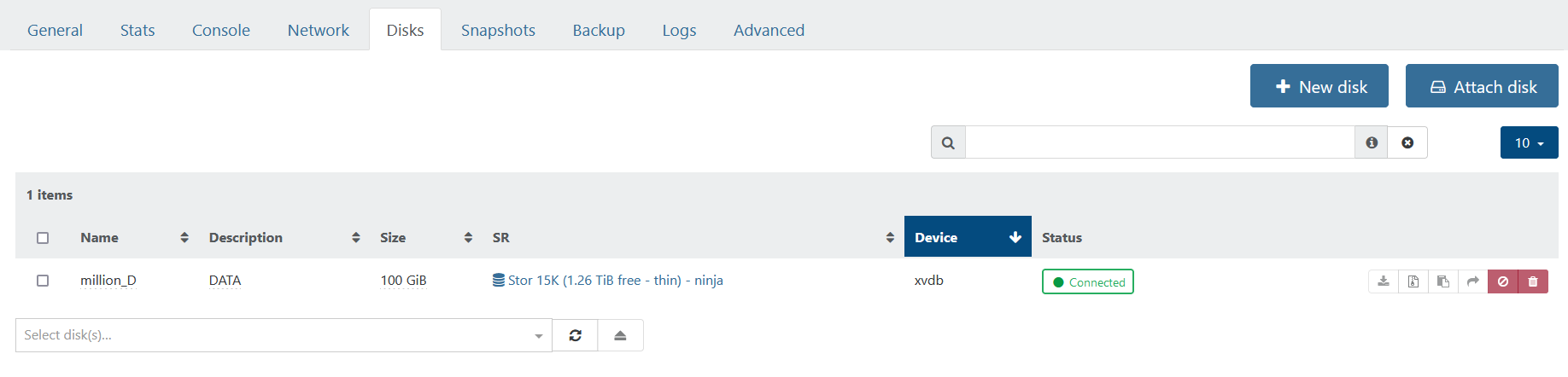

VM, Disks: Missing boot drive, xvda. VM is still able to boot from it though.

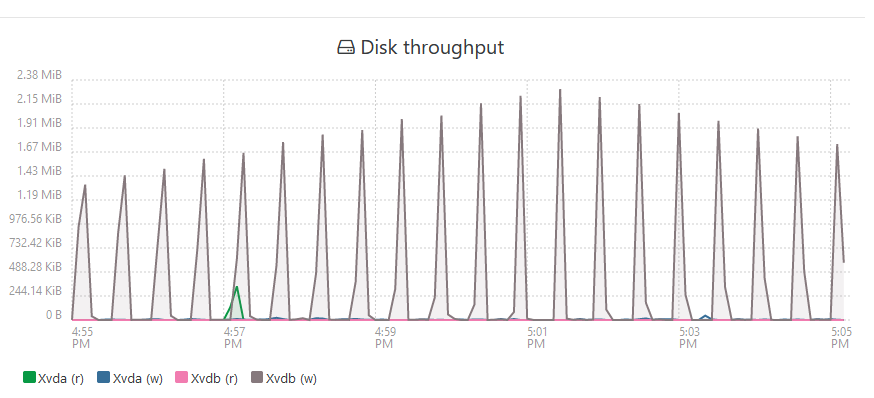

VM, Stats: Clearly showing stats for both xvda (C:) and xvdb (D:).

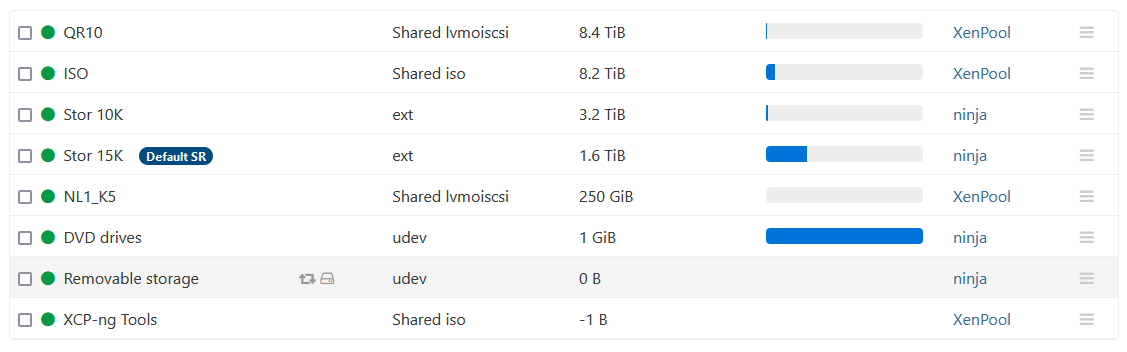

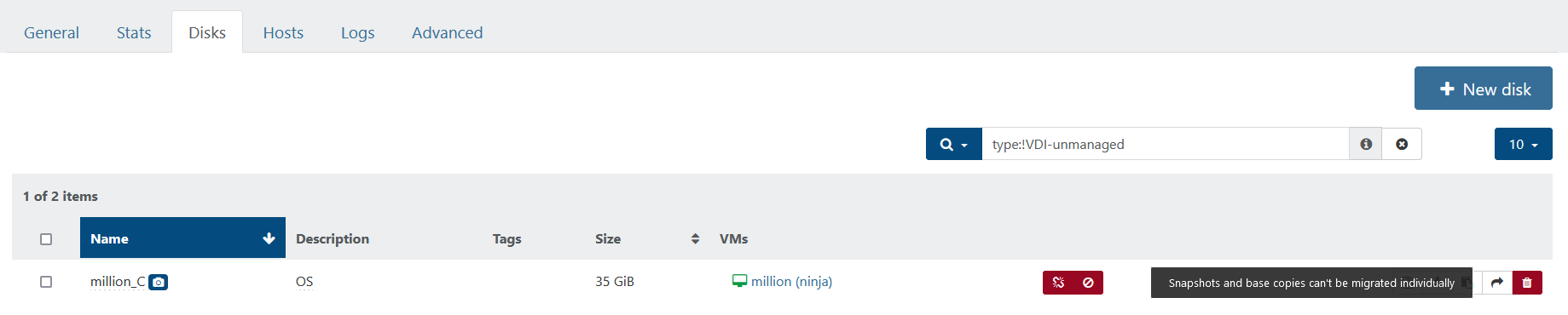

Storage, Disks: Showing the specific disk missing from the VM.

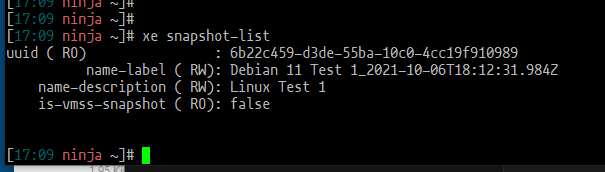

There's a camera icon probably indicating there should be a snapshot of the drive in question. I'm trying to work with that, finding the snapshot and merging or deleting it, solving the problem myself.

As limited as my knowledge of Xen is, I'm not able to figure out where to go from here. I've tried a few things in the host's terminal, but Xen and XO seem to disagree: the commands I ran don't acknowledge any snapshot for the machine above at all, only for another one.

I'm a bit lost: can anyone point me in the direction of commands to run to troubleshoot this kind of problem?

Kind regards,

MRisberg

-

@MRisberg Hi!

This is clearly weird.

First, are you on XOA (which version?) or XO from source (which commit?)

You spoke about C:/ and D:/, so I suppose it's a Windows VM. Did you install xen-tools? I have a few Windows VMs at $job, and I have never seen this.

This will help to debug your problem.

-

@MRisberg What is weird is that we don't see xvda on the VM, could you show us the disks inside the VM?

And the result of this command on your host:

`xe vm-disk-list uuid=<UUID of your VM>`

-

@AtaxyaNetwork said in VM, missing disk:

> @MRisberg Hi!
>
> This is clearly weird.
>
> First, are you on XOA (which version?) or XO from source (which commit?)

Hi @AtaxyaNetwork

Thanks for helping out.

From sources. I've tried multiple builds, from the latest as of yesterday back to builds about 45 days old.

> You spoke about C:/ and D:/, so I suppose it's a Windows VM. Did you install xen-tools? I have a few Windows VMs at $job, and I have never seen this.
>
> This will help to debug your problem.

Yes, this one is a Windows VM. I have the open source guest tools installed, not the ones from Citrix. Or is Xen-Tools something else?

-

@Darkbeldin said in VM, missing disk:

@MRisberg What is weird is that we don't see xvda on the VM, could you show us the disks inside the VM?

Thank you @Darkbeldin for chiming in.

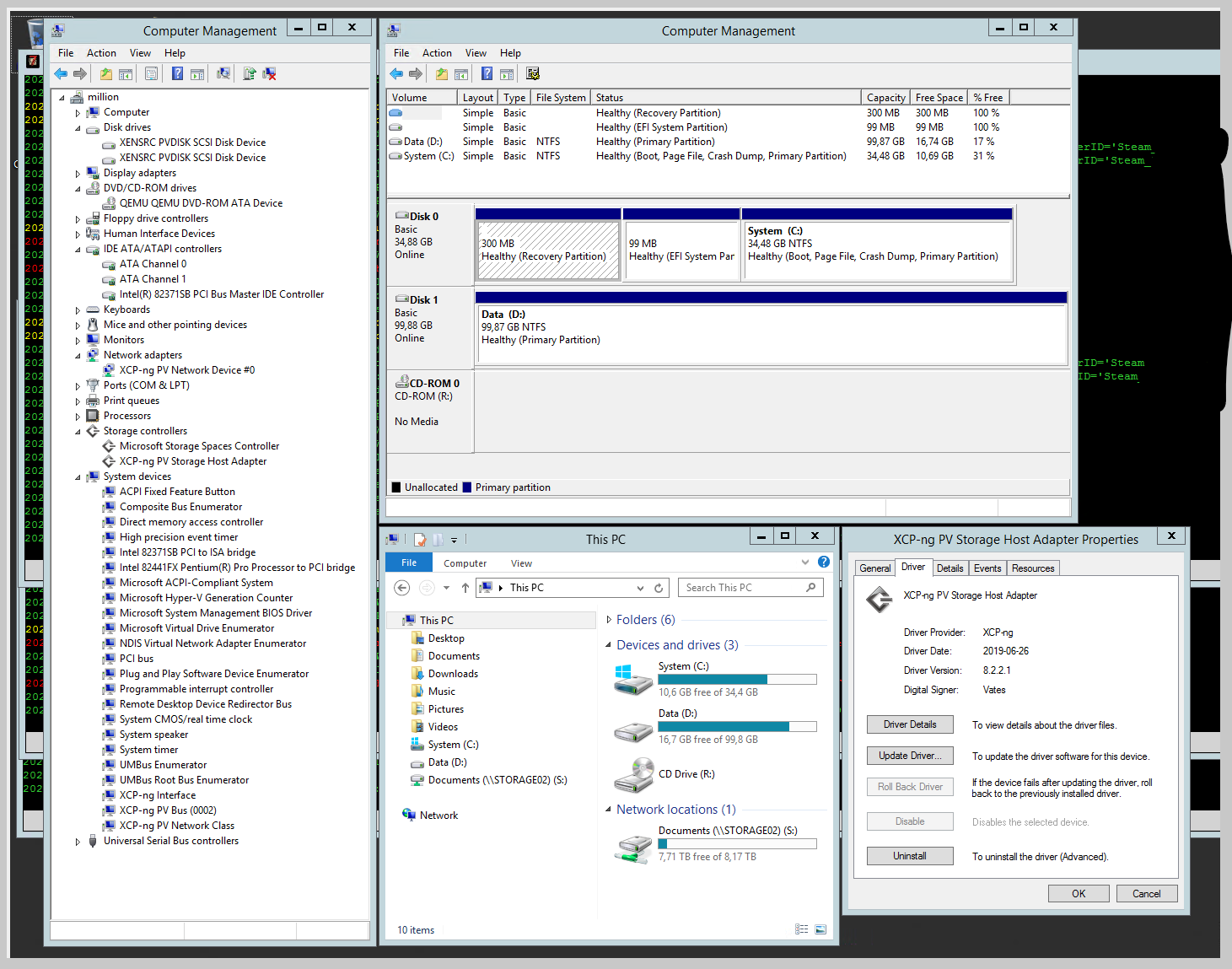

Disks and drivers inside of the VM:

And the result of this command on your host

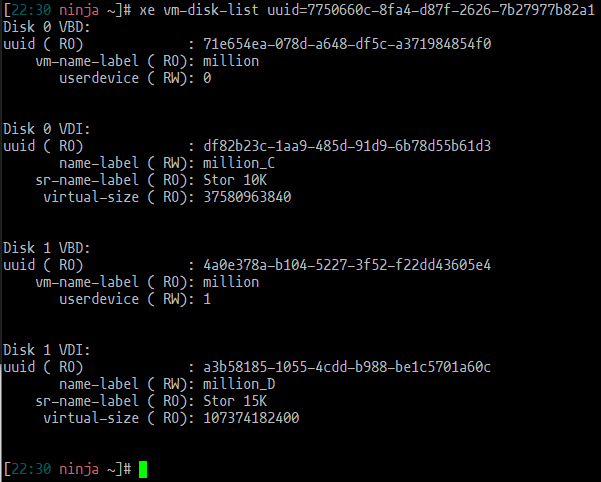

xe vm-disk-list uuid=UUID of your VMThe command you provided returned this:

Here we can see XVDA (named million_C because it's the C drive within the VM).

So the host knows it's there, obviously, as I can boot the machine. Is there anything else you can think of that I could try, other than restarting both the toolstack and XO?

-

I built a new XO machine, compiled from sources with build f1ab62524 (xo-server 5.102.3, xo-web 5.103.0 as of 2022-09-21 17:00). It made no difference with regard to seeing the XVDA drive.

The host knows the drive is there, XO doesn't.

I've already tried this: reset toolstack + restart the host.

Is there a way of resetting the interface / API on the host that XO talks to? (Not sure what API that would be.)

-

@MRisberg Do you have more than one SR? Can you show us the Disks tab for the VM in question?

-


@Danp For me, the Disks tab for the VM is the first screenshot of the first post.

And yes, apparently he has at least two SRs: Stor 15K and Stor 10K.

Unfortunately, from there and without taking a look directly, I'm a bit unsure what can be done. Perhaps he should try backing up the VM and restoring it?

-

@MRisberg OIC. I got confused when looking at this earlier. My suspicion is that the `is-a-snapshot` parameter has been erroneously set on the VDI.

-

@Danp Perhaps, but it will require looking at the chain on the host to see whether you're right and whether there is a child of this VDI.
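For readers wondering what "looking at the chain" amounts to, here is a minimal sketch in Python. It assumes you have already collected, by whatever means, a mapping from each VDI to its parent; the function names and the short placeholder identifiers are hypothetical and only there for illustration.

```python
def find_orphans(parent_of):
    """Return VDIs whose recorded parent is not itself a known VDI.

    parent_of maps each VDI identifier to its parent identifier,
    or to None when the VDI is the root of its chain.
    """
    known = set(parent_of)
    return sorted(
        child
        for child, parent in parent_of.items()
        if parent is not None and parent not in known
    )


def children_of(parent_of, uuid):
    """Return the VDIs that list `uuid` as their parent."""
    return sorted(child for child, parent in parent_of.items() if parent == uuid)


if __name__ == "__main__":
    # Placeholder identifiers, not real VDIs from this thread.
    chain = {"base": None, "disk": "base", "snap": "base", "lost-child": "gone"}
    print("orphans:", find_orphans(chain))
    print("children of base:", children_of(chain, "base"))
```

A VDI reported as an orphan points at a parent that no longer exists, and an empty result from `children_of` means nothing depends on the VDI in question.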

-

@Danp said in VM, missing disk:

> @MRisberg OIC. I got confused when looking at this earlier. My suspicion is that the `is-a-snapshot` parameter has been erroneously set on the VDI.

Interesting. Do you know of a way to check what parameters have been set for a VDI on the host?

Running the `xe snapshot-list` command did not return XVDA or million_C as having a snapshot, although XO indicates this with the camera icon.

Edit: Also, how did you get "is-a-snapshot" to show up highlighted as in your post? It looked nice.

-

@MRisberg said in VM, missing disk:

> Do you know of a way to check what parameters have been set for a VDI on the host?

`xe vdi-param-list uuid=<Your UUID here>`

> Also, how did you get "is-a-snapshot" show up highlighted as in your post?

With the appropriate Markdown syntax (see Inline code with backticks).

-

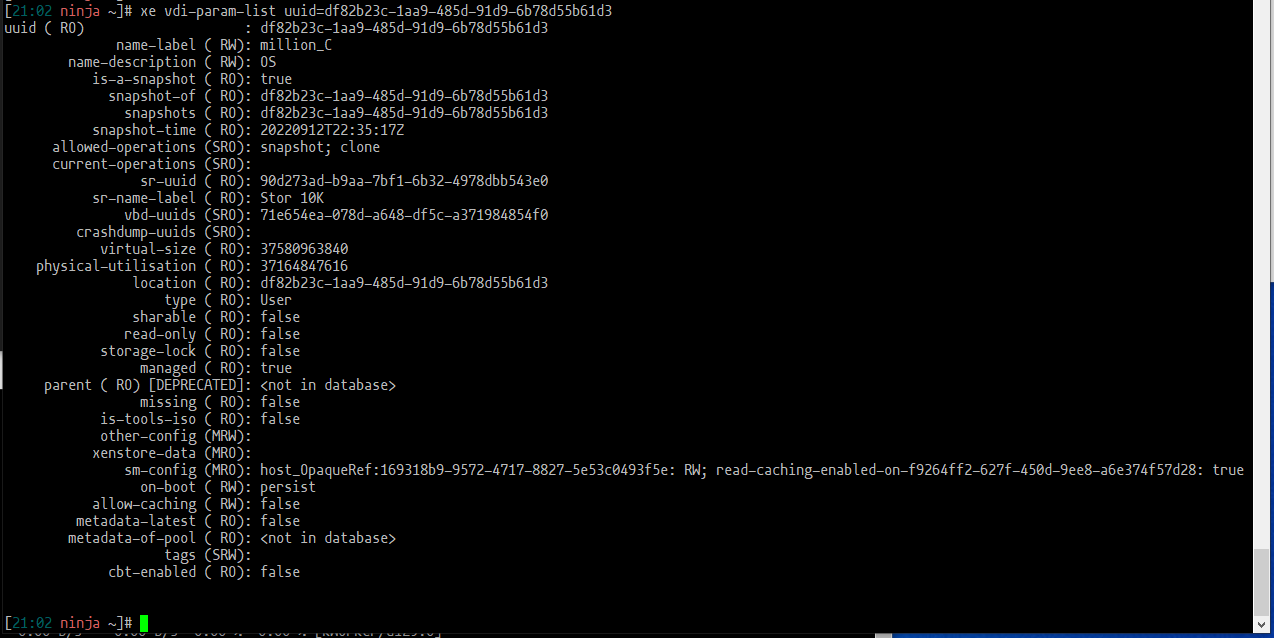

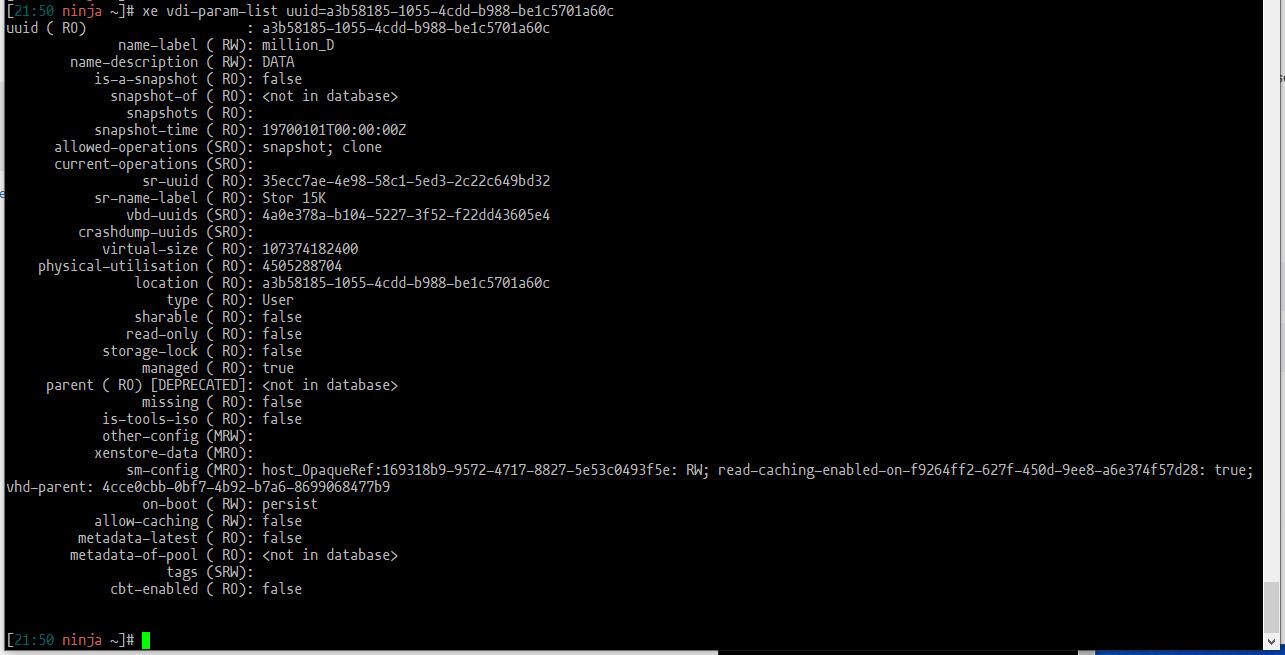

Interesting, and a bit strange that XVDA (million_C) is-a-snapshot ( RO): true when earlier I wasn't able to list it as a snapshot at all. Oh, maybe the command makes a difference between VM snapshot and a VDI snapshot. Still learning. It returned:

XVDA:

XVDB:

Obviously there are some differences regarding the snapshot info but also million_C is missing info regarding vhd-parent. Not that I understand yet what that means.
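To compare two disks field by field rather than by eye, the text output of `xe vdi-param-list` could be parsed with something like the Python sketch below. It assumes the usual `name ( RO): value` line layout and that `vhd-parent` appears inside the `sm-config` map; the helper names and the sample text are made up for illustration and are not the real output from this thread.

```python
import re

# Matches lines such as "is-a-snapshot ( RO): true" or "sm-config (MRO): k: v; k2: v2".
PARAM_LINE = re.compile(r"^\s*([\w-]+)\s*\(\s*\w+\s*\)\s*:\s?(.*)$")


def parse_param_list(text):
    """Turn `xe vdi-param-list` style output into a dict of field -> raw value."""
    params = {}
    for line in text.splitlines():
        match = PARAM_LINE.match(line)
        if match:
            params[match.group(1)] = match.group(2).strip()
    return params


def parse_map(value):
    """Split a map-style value ("k1: v1; k2: v2") into a dict."""
    result = {}
    for item in value.split(";"):
        key, sep, val = item.partition(":")
        if sep:
            result[key.strip()] = val.strip()
    return result


def summarize(text):
    """Pick out the snapshot-related fields discussed in this thread."""
    params = parse_param_list(text)
    sm_config = parse_map(params.get("sm-config", ""))
    return {
        "name-label": params.get("name-label"),
        "is-a-snapshot": params.get("is-a-snapshot") == "true",
        "snapshot-of": params.get("snapshot-of"),
        "vhd-parent": sm_config.get("vhd-parent"),
    }


# Illustrative input only: placeholder values, not output from the VM above.
SAMPLE = """\
uuid ( RO)                : 00000000-0000-0000-0000-000000000001
          name-label ( RW): example_disk
       is-a-snapshot ( RO): true
         snapshot-of ( RO): <not in database>
           sm-config (MRO): vdi_type: vhd
"""

if __name__ == "__main__":
    print(summarize(SAMPLE))
```

Running `summarize` on the saved output for XVDA and XVDB would put the differing fields side by side.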

Thank you.

-

@MRisberg I will defer to others on advising you on how to fix your situation. If the VM's contents are important, then I would be sure to make multiple backups in case of a catastrophic event. You should be able to export the VM to an XVA so that it can be reimported if needed.

-

I appreciate your effort to help.

Seems like this is an odd one. Maybe the backup / restore strategy is the most efficient way forward here, as long as the backup sees both drives.

-

@MRisberg You take no risk in trying it: you can keep the VM and restore the backup under another name.

-

While troubleshooting something else (backups were also struggling), a friend and I found out that the host doesn't seem to coalesce successfully on the SR Stor 15K:

    Sep 22 22:27:16 ninja SMGC: [1630] In cleanup
    Sep 22 22:27:16 ninja SMGC: [1630] SR 35ec ('Stor 15K') (44 VDIs in 5 VHD trees):
    Sep 22 22:27:16 ninja SMGC: [1630] *3fc6e297(40.000G/85.000K)
    Sep 22 22:27:16 ninja SMGC: [1630] *4cce0cbb(100.000G/88.195G)
    Sep 22 22:27:16 ninja SMGC: [1630] *81341eac(40.000G/85.000K)
    Sep 22 22:27:16 ninja SMGC: [1630] *0377b389(40.000G/85.000K)
    Sep 22 22:27:16 ninja SMGC: [1630] *b891bcb0(40.000G/85.000K)
    Sep 22 22:27:16 ninja SMGC: [1630]
    Sep 22 22:27:16 ninja SMGC: [1630] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*
    Sep 22 22:27:16 ninja SMGC: [1630] ***********************
    Sep 22 22:27:16 ninja SMGC: [1630] * E X C E P T I O N *
    Sep 22 22:27:16 ninja SMGC: [1630] ***********************
    Sep 22 22:27:16 ninja SMGC: [1630] gc: EXCEPTION <class 'util.SMException'>, Parent VDI 6b13ce6a-b809-4a50-81b2-508be9dc606a of d36a5cb0-564a-4abb-920e-a6367741574a not found
    Sep 22 22:27:16 ninja SMGC: [1630] File "/opt/xensource/sm/cleanup.py", line 3379, in gc
    Sep 22 22:27:16 ninja SMGC: [1630] _gc(None, srUuid, dryRun)
    Sep 22 22:27:16 ninja SMGC: [1630] File "/opt/xensource/sm/cleanup.py", line 3264, in _gc
    Sep 22 22:27:16 ninja SMGC: [1630] _gcLoop(sr, dryRun)
    Sep 22 22:27:16 ninja SMGC: [1630] File "/opt/xensource/sm/cleanup.py", line 3174, in _gcLoop
    Sep 22 22:27:16 ninja SMGC: [1630] sr.scanLocked()
    Sep 22 22:27:16 ninja SMGC: [1630] File "/opt/xensource/sm/cleanup.py", line 1606, in scanLocked
    Sep 22 22:27:16 ninja SMGC: [1630] self.scan(force)
    Sep 22 22:27:16 ninja SMGC: [1630] File "/opt/xensource/sm/cleanup.py", line 2357, in scan
    Sep 22 22:27:16 ninja SMGC: [1630] self._buildTree(force)
    Sep 22 22:27:16 ninja SMGC: [1630] File "/opt/xensource/sm/cleanup.py", line 2313, in _buildTree
    Sep 22 22:27:16 ninja SMGC: [1630] "found" % (vdi.parentUuid, vdi.uuid))
    Sep 22 22:27:16 ninja SMGC: [1630]
    Sep 22 22:27:16 ninja SMGC: [1630] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*
    Sep 22 22:27:16 ninja SMGC: [1630] * * * * * SR 35ecc7ae-4e98-58c1-5ed3-2c22c649bd32: ERROR

Maybe my problem is related to the host rather than XO? Back to troubleshooting, and any input is appreciated.
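For anyone hitting the same exception, a small Python sketch like the one below can pull the affected UUIDs out of the storage manager log instead of reading the trace by eye. The function name is hypothetical, and the sample is the exception line from the log above, with the stray space inside the second UUID removed on the assumption that it is a line-wrap artifact.

```python
import re

UUID = r"[0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12}"
MISSING_PARENT = re.compile(rf"Parent VDI ({UUID}) of ({UUID}) not found")


def find_missing_parents(log_text):
    """Return (parent_uuid, child_uuid) pairs the GC reported as broken links."""
    return [(m.group(1), m.group(2)) for m in MISSING_PARENT.finditer(log_text)]


# Exception line taken from the log in this post.
LOG = (
    "Sep 22 22:27:16 ninja SMGC: [1630] gc: EXCEPTION <class 'util.SMException'>, "
    "Parent VDI 6b13ce6a-b809-4a50-81b2-508be9dc606a of "
    "d36a5cb0-564a-4abb-920e-a6367741574a not found"
)

if __name__ == "__main__":
    for parent, child in find_missing_parents(LOG):
        print(f"VDI {child} references missing parent {parent}")
```

The second UUID in each pair is the VDI whose chain is broken, which is the one worth checking against the VM's disks.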

-

@MRisberg That's most likely the issue: apparently you lost a VDI from a chain there.

So the fact that the disk is now seen as a snapshot could come from there, yes.

But solving a coalesce issue is not easy. How much do you love this VM?

-

@Darkbeldin

I actually solved the coalesce issue yesterday. After removing a certain VDI (not related) and a coalesce lock file, SR Stor 15K managed to process the coalesce backlog. After that the backups started to process again.

Due to poor performance I chose to postpone exporting or backing up the million VM. When I do, I'll see if I can restore it with both XVDA and XVDB.

I'll copy the important contents from the million VM before I do anything. That way I can improvise and dare to move forward in any way I need to, even if I have to reinstall the VM. But first I always try to resolve the problem rather than just throwing the VM away and redoing it from the start. That way I learn more about the platform.