nVidia Tesla P4 for vgpu and Plex encoding

-

@splastunov said in nVidia Tesla P4 for vgpu and Plex encoding:

@mohammadm

I'm talking now about vGPU, not passthrough:

- old drivers

- no way to monitor GPU load

- Sometimes the GPU on Dom0 stops responding and the only thing that can be done to solve this problem is to reboot the entire server with all the virtual machines on it.

and so on... I don't remember all the troubles I had with it.

I installed the Firepro S7150x2 yesterday without any issues. It's been about 24 hours, so far no issues. I do agree I am missing the nvidia-smi command to get a better overview.

Why is the support regarding vGPU so bad and mostly outdated?

-

I will have the opportunity to discuss more with AMD (on a regular basis, for various reasons). I'll try to see if I can connect with their GPU division.

-

@splastunov Dom0 would need to have a vGPU in this scenario?

-

@olivierlambert As mentioned in another thread... Intel Flex GPUs seem primed for this. nVidia is closed and license greedy. AMD seems a little lost and wandering. Intel has said, "No licensing... Just use it," but they require some development.

It should be relatively easy to incorporate the Intel Flex GPUs, but I'm not sure if the newer kernels are required. That might be where the wheels fall off for now.

-

@JamesG This would indeed be awesome! I would prefer going the Intel route. Any contacts there @olivierlambert ?

-

From my perspective, there's literally money on the ground for any virtualization platform to pick up VDI with Intel. The GPUs are affordable and performant for VDI work. They currently work with OpenShift, and Proxmox is working on it.

-

@splastunov why do you extract the vgpu RPM rather than just installing the RPM directly?

-

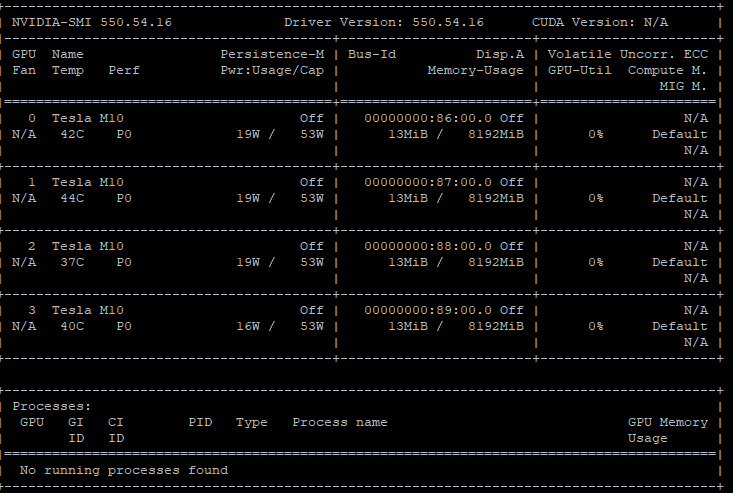

I am currently trying to get an NVIDIA Tesla M10 working with the latest updated XCP-ng 8.2.1 (build date 2024-07-17).

The drivers "NVIDIA-vGPU-xenserver-8-550.54.10.x86_64.rpm" are installed and the system was rebooted. It is now possible to select a vGPU for the VM via XOA.

In addition, the vgpu file extracted from CitrixHypervisor-8.2.0-install-cd.iso was copied to /usr/lib64/xen/bin/vgpu and is executable.

Starting the VM fails with:

FAILED_TO_START_EMULATOR(OpaqueRef:0cc388f0-b606-469d-b68c-b4713c7f4abb, vgpu, Daemon exited unexpectedly)

Has anyone solved this problem?

Platform:

HPE DL380 Gen10

-

Download the XenServer ISO file (https://www.xenserver.com/downloads | XenServer8_2024-06-03.iso).

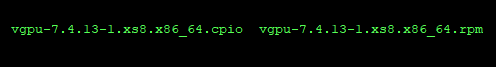

Copy the file vgpu-7.4.13-1.xs8.x86_64.rpm from the packages directory. Do not use the vgpu-7.4.8-1.x86_64 file from CitrixHypervisor-8.2.0-install-cd!

Unpack the file vgpu-7.4.13-1.xs8.x86_64.

Copy the file /usr/lib64/xen/bin/vgpu (size 129KB) to /usr/lib64/xen/bin/ on your XCP-ng host (chmod 755).

-

Why unpack the RPM and make manual changes to the filesystem instead of installing the RPM directly?

-

Thanks...

At first I tried the driver from above:

NVIDIA-GRID-CitrixHypervisor-8.2-550.54.10-550.54.14-551.61

which doesn't work.

After this I found the newer version:

NVIDIA-GRID-CitrixHypervisor-8.2-550.54.16-550.54.15-551.78

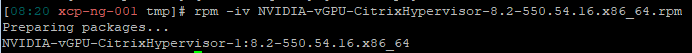

Now the error on VM startup no longer comes up, and the driver installation in the VM works. The driver packages built specifically for XenServer 8 are not installable on XCP-ng 8.2.1 because of the different base: https://xcp-ng.org/forum/post/80461

Now I have one VM working with a vGPU, but a second one does not detect the mapped vGPU and the Windows driver can't be installed.

I am currently not sure why this happens, because a copy of the working one with another vGPU config does work.

The Xentools and Windows versions are the same, and so is the template. The vGPU RPM is installable without any problems.

-

@high-voltages Hello, I'm stuck at the power-on issue. I can assign a vGPU profile, but the VM won't power on. It sounds like a mismatch in the binary file, but I've tried vgpu-7.4.8.x and currently 7.4.13.x. I also tried the host driver NVIDIA-GRID-CitrixHypervisor-8.2-550.54.10 and am now running 54.16. I also tried 8.3 with its respective drivers, but hit the same power-on issue, so I did a clean install of 8.2.

vm.start

{

"id": "1eadda44-c82d-69ad-5b83-514a8e421d65",

"bypassMacAddressesCheck": false,

"force": false

}

{

"code": "FAILED_TO_START_EMULATOR",

"params": [

"OpaqueRef:f44e209a-5d6f-4022-b9b0-263fddcc5f12",

"vgpu",

"Daemon exited unexpectedly"

],

"call": {

"method": "VM.start",

"params": [

"OpaqueRef:f44e209a-5d6f-4022-b9b0-263fddcc5f12",

false,

false

]

},

"message": "FAILED_TO_START_EMULATOR(OpaqueRef:f44e209a-5d6f-4022-b9b0-263fddcc5f12, vgpu, Daemon exited unexpectedly)",

"name": "XapiError",

"stack": "XapiError: FAILED_TO_START_EMULATOR(OpaqueRef:f44e209a-5d6f-4022-b9b0-263fddcc5f12, vgpu, Daemon exited unexpectedly)

at Function.wrap (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_XapiError.mjs:16:12)

at file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/transports/json-rpc.mjs:38:21

at runNextTicks (node:internal/process/task_queues:60:5)

at processImmediate (node:internal/timers:447:9)

at process.callbackTrampoline (node:internal/async_hooks:128:17)"

}

-
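
For anyone comparing their own failure against the one posted above, the error payload can be picked apart programmatically. This is only a sketch: the layout of `params` (VM reference, emulator name, reason) is inferred from this single payload, and `summarize_emulator_failure` is a hypothetical helper name, not part of xo-server or XAPI.

```python
import json

# Trimmed copy of the error payload posted above (call and stack omitted).
payload = json.loads("""
{
  "code": "FAILED_TO_START_EMULATOR",
  "params": [
    "OpaqueRef:f44e209a-5d6f-4022-b9b0-263fddcc5f12",
    "vgpu",
    "Daemon exited unexpectedly"
  ],
  "name": "XapiError"
}
""")


def summarize_emulator_failure(error):
    """Return (vm_ref, emulator, reason) for a FAILED_TO_START_EMULATOR
    error, or None for any other error code.

    Assumption: params are ordered as seen in the payload above.
    """
    if error.get("code") != "FAILED_TO_START_EMULATOR":
        return None
    vm_ref, emulator, reason = error["params"]
    return vm_ref, emulator, reason


summary = summarize_emulator_failure(payload)
print(summary)
```

Here the emulator field is `vgpu`, so it is the vgpu daemon that exited rather than another emulator process, which is consistent with the discussion in this thread about which vgpu binary is installed on the host.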

@mgformula i can take a look at my setup tomorrow, currently all runs fine.

8.2.1 with a Tesla M10 will work with the correct drivers and patches.

-

@high-voltages Thanks so much! I'm also testing with M10 cards. We are trying to find VMware alternatives and are a large vGPU shop.

Here is what I'm currently running:

xe host-param-get param-name=software-version uuid=$(xe host-list --minimal)

product_version: 8.2.1; product_version_text: 8.2; product_version_text_short: 8.2; platform_name: XCP; platform_version: 3.2.1; product_brand: XCP-ng; build_number: release/yangtze/master/58; hostname: localhost; date: 2024-07-17; dbv: 0.0.1; xapi: 1.20; xen: 4.13.5-9.44; linux: 4.19.0+1; xencenter_min: 2.16; xencenter_max: 2.16; network_backend: openvswitch; db_schema: 5.603

I installed the NVIDIA host driver using rpm -iv "nvidia.rpm". I've also tried other methods: copying the .iso and installing the supplemental pack. One thing to mention is that I grabbed the binaries from XenServer8_2024-10-03.
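
The software-version output is a flat "key: value; key: value" string, which is awkward to compare between hosts by eye. A minimal sketch of splitting it into a dictionary follows; `parse_software_version` is a hypothetical helper, and the sample is a shortened copy of the output posted above.

```python
def parse_software_version(text):
    """Split 'key: value; key: value' output into a dict of strings."""
    fields = {}
    for part in text.split(";"):
        # Split on the first colon only, so values may contain colons.
        key, sep, value = part.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


# Shortened copy of the output posted above.
sample = (
    "product_version: 8.2.1; product_brand: XCP-ng; "
    "build_number: release/yangtze/master/58; date: 2024-07-17; "
    "xen: 4.13.5-9.44; linux: 4.19.0+1"
)

info = parse_software_version(sample)
print(info["product_brand"], info["product_version"])
```

Having `product_version` as a separate field makes it easier to check which vgpu package and host driver a given host should be paired with.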

-

@mgformula

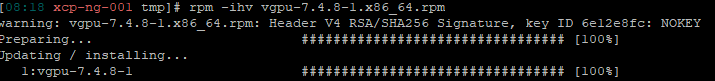

I used this driver package, "NVIDIA-vGPU-CitrixHypervisor-8.2-550.54.16.x86_64.iso", and installed the vgpu package with "rpm -ihv vgpu-7.4.8-1.x86_64.rpm", which I got from the Citrix installation ISO. The 7.4.13 binary should be used for the XCP-ng 8.3 release, not for 8.2.1.

The problem from my earlier post is also solved: a newly created VM works. Some copies from another host do not work. Perhaps there are some driver conflicts in those Windows VMs.
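
The version pairings reported in this thread can be written down as a small lookup, which may help when checking a host before installing anything. This is a sketch of what posters here reported, not an official compatibility matrix; `REPORTED_VGPU_PACKAGE` and `reported_vgpu_package` are hypothetical names.

```python
# Pairings as reported by posters in this thread (assumption: not verified
# against any vendor documentation).
REPORTED_VGPU_PACKAGE = {
    "8.2.1": "vgpu-7.4.8-1.x86_64.rpm",     # from the Citrix Hypervisor 8.2 ISO
    "8.3": "vgpu-7.4.13-1.xs8.x86_64.rpm",  # from the XenServer 8 ISO
}


def reported_vgpu_package(product_version):
    """Return the vgpu RPM this thread reports for an XCP-ng version,
    or None if the thread does not mention that version."""
    return REPORTED_VGPU_PACKAGE.get(product_version)


print(reported_vgpu_package("8.2.1"))
```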

-

Great news! Enjoy vGPU with XCP-ng

-

@high-voltages Thanks, I'm trying this now. Just to confirm: we are not downloading the NVIDIA host drivers from the NVIDIA site, we are using the ones from the Citrix .ISO?

And my install commands are just the binary RPM and the NVIDIA host driver, correct? I was basically just copying the binary and making it executable. Maybe that was the issue: I never installed it.

-

@high-voltages said in nVidia Tesla P4 for vgpu and Plex encoding:

rpm -ihv vgpu-7.4.8-1.x86_64.rpm

Here are my commands just to confirm I'm doing this right

Do I still need to copy the binary to the location others have mentioned and make it executable?


-

@high-voltages Just wanted to thank you again, it's working now with the commands I ran in the screenshot.

-

O olivierlambert marked this topic as a question on


-

O olivierlambert has marked this topic as solved on


-

N nathanael-h referenced this topic on
