VMware migration tool: we need your feedback!

-

@florent The import was successful, and the VM is up and running. I will try another with the "thin=true" option.

-

@brezlord said in VMware migration tool: we need your feedback!:

@florent The import was successful, and the VM is up and running. I will try another with the "thin=true" option.

yeah

thank you for your patience

-

\o/

\o/

-

@florent thank you for your work.

-

@florent Great news. I'm looking forward to testing when it has been pushed to the vmware channel.

-

This will land on XOA `latest` by the end of the month.

-

@olivierlambert Got this working myself as well, very seamless once everything is prepped for it!

I do have a question though: since I noticed you mentioned thin=true here, does doing this without that option create a thick-provisioned disk? Maybe I misunderstood that in the blog post.

I noticed mine took around 2.5 hours to complete a VM with 6 disks, but the total data usage on those disks was only around 20GB, so it seems very slow. If it's transferring the full thick-provisioned disks, though, that's a different story (in case it matters, everything is 10GbE here).

-

Yes, it's because we have no way to know in advance which blocks are really used. So we transfer even the empty blocks by default.

When using `thin=true`, it will read the file on VMware twice:

- once to see which blocks are really used
- then to actually send the used blocks

And finally, it will only create the used VHD blocks on XCP-ng.

So it's slower because you read twice, but you actually send less data to your XCP-ng storage.
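A minimal sketch of the two-pass idea, assuming the source disk can be read as a plain seekable file and that blocks are 2MiB (the VHD block size mentioned later in this thread). The function names `find_used_blocks` and `send_used_blocks` are hypothetical; this is not the migration tool's code, only an illustration of why `thin=true` reads the source twice but sends less data.

```python
import io
from typing import BinaryIO

BLOCK_SIZE = 2 * 1024 * 1024  # assumed 2MiB VHD block size


def find_used_blocks(source: BinaryIO, block_size: int = BLOCK_SIZE) -> list[int]:
    """First read: scan the whole disk and note which blocks hold any non-zero byte."""
    used = []
    source.seek(0)
    index = 0
    while chunk := source.read(block_size):
        if chunk != bytes(len(chunk)):  # bytes(n) is n zero bytes
            used.append(index)
        index += 1
    return used


def send_used_blocks(source: BinaryIO, used: list[int],
                     block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Second read: fetch only the used blocks again; 'sending' is simulated by a dict."""
    sent = {}
    for index in used:
        source.seek(index * block_size)
        sent[index] = source.read(block_size)
    return sent


# Hypothetical 8MiB disk (4 blocks) with data only in block 1.
disk = io.BytesIO(bytes(4 * BLOCK_SIZE))
disk.seek(BLOCK_SIZE)
disk.write(b"data")

used = find_used_blocks(disk)
sent = send_used_blocks(disk, used)
print(used, len(sent))  # [1] 1
```

A real tool would stream blocks to the destination instead of holding them in memory; the point is only that the second read skips empty blocks, so 1 of the 4 blocks is sent.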

Once the "warm" algorithm is added, the total transfer time will become largely irrelevant (or at least the part that counts will be a lot shorter), since what matters is the actual downtime, i.e. transferring the delta after the initial full copy, which was made while the VM was still running.
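A back-of-the-envelope sketch of why downtime, not total transfer time, is the number that matters once warm migration exists. Every figure below (disk size, delta size, throughput) is a hypothetical assumption, not a measurement from this thread, and `downtime_seconds` is an illustrative name.

```python
GiB = 1024 ** 3
MiB = 1024 ** 2


def downtime_seconds(bytes_copied_while_off: int, throughput_bytes_per_s: float) -> float:
    """Downtime is only the data copied while the VM is powered off, divided by throughput."""
    return bytes_copied_while_off / throughput_bytes_per_s


disk_size = 500 * GiB   # hypothetical disk size
delta_size = 2 * GiB    # hypothetical blocks changed during the initial (online) full copy
throughput = 100 * MiB  # hypothetical effective throughput, bytes per second

cold = downtime_seconds(disk_size, throughput)   # cold: VM is off for the whole copy
warm = downtime_seconds(delta_size, throughput)  # warm: VM is off only for the final delta
print(f"cold: {cold:.0f} s, warm: {warm:.1f} s")
```

The warm full copy still takes time, but the VM keeps running during it; the smaller the delta, the shorter the outage.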

-

@olivierlambert Got it, so this does bring up a couple of additional questions.

Does this mean the VHD that everything is transferred to is also thick? Or does it still only use the used space AFTER transferring all the blocks?

Forgive me if I'm misunderstanding, just trying to figure this all out since I have more VMs to transfer.

So, per my example, if I have a VM with 500GB of space on VMware (but only 20GB is used) and transfer it with this method, it'll transfer all 500GB, but does it also USE 500GB on the SR?

And does that mean this disk is "forever" thick provisioned so snapshots use way more space?

Asking all this because I have a VM with 13TB of available space to move, which only has 5TB of used space. After migration I don't want to end up with 13TB of space used on my SR since it only has 10TB remaining and we probably won't go over about 6TB used on this VM ever.

Hope I am making some sense.

Edit: also, is there a way to tell if a disk is thin or thick in XOA? The SR shows thin but I don't see anything indicating if the disk is thin or not. Would also be kinda nice to see how much ACTUAL space each disk uses up in here.

-

Without `thin=true`, the destination VHD will contain ALL blocks and will be the size of the virtual disk. So yes, in your example, 500GiB will be transferred and used on your SR. It will then be "forever" thick, until maybe you do an SR migration or copy, because I think (but I'm not 100% sure) that SR migration or VDI copy does a sparse copy (without empty blocks).
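A rough sketch of that arithmetic, assuming 2MiB VHD blocks and ignoring VHD metadata (header, block allocation table, per-block bitmaps). The `thin=true` figure is only a lower bound: a block is kept if it contains any non-zero byte, so scattered data or blocks that once held deleted files can add to it. The function name `vhd_data_size` is hypothetical.

```python
BLOCK = 2 * 1024 ** 2  # assumed 2MiB VHD block
GiB = 1024 ** 3
TiB = 1024 ** 4


def blocks_for(size: int) -> int:
    """Number of whole blocks needed to hold `size` bytes (integer ceiling)."""
    return (size + BLOCK - 1) // BLOCK


def vhd_data_size(virtual_size: int, used_bytes: int, thin: bool) -> int:
    """Approximate bytes of VHD data blocks written on the SR after import."""
    if not thin:
        # Without thin=true every block is created, empty or not.
        return blocks_for(virtual_size) * BLOCK
    # With thin=true only used blocks are created; assuming the used data is
    # packed into as few blocks as possible makes this a lower bound.
    return blocks_for(used_bytes) * BLOCK


# The 500GiB disk with 20GiB used, from the question above.
thick_500 = vhd_data_size(500 * GiB, 20 * GiB, thin=False)
thin_500 = vhd_data_size(500 * GiB, 20 * GiB, thin=True)
print(thick_500 // GiB, thin_500 // GiB)  # 500 20

# The 13TB / 5TB VM, treated as TiB for simplicity, against 10TiB of free space.
free = 10 * TiB
print(vhd_data_size(13 * TiB, 5 * TiB, thin=False) <= free)  # False
print(vhd_data_size(13 * TiB, 5 * TiB, thin=True) <= free)   # True
```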

So I would suggest using `thin=true` in any case.

Also, a disk isn't thin or thick per se; only a sparse copy will be able to tell you if there are a lot of empty blocks.

-

@olivierlambert OK this is all great to know, thanks so much, really appreciate it!

-

I think, in the end, reading twice is worth it. Especially once warm mode is enabled, the penalty of reading twice will be small compared to the space saved.

-

@olivierlambert Yes, I totally agree with this.

One more question: I just did some digging, and it seems like the snapshots aren't using the full amount of disk space even though the disks are thick. Is this normal?

As an example, I have a 100GB base copy which shows as 101GB with ls -lh in the CLI, but the actual disk attached to the VM shows 9GB (I have 1 snapshot).

I'll also test migrating the VDI to another SR and then back to see if it shrinks it like you think it will, just for test purposes. Will definitely use thin=true when I do the bigger VMs though.

-

When you take a snapshot, the current active disk is converted into a read-only base copy (so 100GiB, entirely full), and new blocks are then written to a new active disk. That disk started empty and is now probably 9GiB because you've written a diff worth 9GiB of 2MiB blocks.
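A toy model of that snapshot behavior, assuming whole 2MiB blocks as the unit of copy-on-write (real VHD differencing disks also track individual sectors with a per-block bitmap, and carry metadata this ignores). `VhdChain` is a hypothetical name, not an XCP-ng API, and the block contents are placeholders.

```python
BLOCK = 2 * 1024 ** 2  # assumed 2MiB VHD block
GiB = 1024 ** 3


class VhdChain:
    """Toy snapshot chain: a read-only base copy plus an active disk holding the diff."""

    def __init__(self, base_blocks: dict[int, bytes]):
        self.base = dict(base_blocks)       # frozen when the snapshot is taken
        self.active: dict[int, bytes] = {}  # starts empty; receives every new write

    def write(self, index: int, data: bytes) -> None:
        self.active[index] = data  # the base copy is never modified

    def read(self, index: int) -> bytes:
        # Newest data wins: check the active disk, then the base copy, else zeros.
        if index in self.active:
            return self.active[index]
        return self.base.get(index, bytes(BLOCK))

    def sizes(self) -> tuple[int, int]:
        """Bytes of allocated blocks in (base copy, active disk)."""
        return len(self.base) * BLOCK, len(self.active) * BLOCK


# A thick-imported 100GiB disk: every block is allocated, even empty ones.
chain = VhdChain({i: b"old" for i in range(100 * GiB // BLOCK)})
# After the snapshot, the guest rewrites 9GiB worth of blocks.
for i in range(9 * GiB // BLOCK):
    chain.write(i, b"new")

base_size, active_size = chain.sizes()
print(base_size // GiB, active_size // GiB)  # 100 9
```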

Indeed, moving the disk uses a sparse copy, and so it cleans out those empty blocks.

We'll keep looking for ways (if there are any) to improve this, but right now we are focused on getting the diff working from VMware to XCP-ng, which will greatly reduce the "shutdown" time during the migration process.

-

@olivierlambert Got it, I guess I misunderstood. I thought that snapshots of thick-provisioned disks used WAY more space, but it seems like it ends up similar?

I mean, the thin-provisioned base copy will only use the space the VM is actually using, so that still saves some there I suppose, but with thick you are using the space for empty blocks either way.

Thanks again for all the help, this is such a great tool and is just in time for my org to be leaving VMware!

-

Are you talking about thin or thick SR? In a thick SR, any active disk will use the total disk space (e.g. 500GiB even if 10GiB is used inside). In a thin SR, the active disk will only use the "real" diff from the base copy (10GiB from our previous example).
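A sketch of the rule just described, using the same 500GiB disk with a 10GiB diff. The function name and the `"thick"`/`"thin"` labels are assumptions for illustration; in XCP-ng terms, LVM-based SRs are the thick ones and file-based SRs such as ext or NFS are thin.

```python
GiB = 1024 ** 3


def active_disk_usage(sr_type: str, virtual_size: int, diff_size: int) -> int:
    """Space taken on the SR by the active disk sitting on top of a base copy."""
    if sr_type == "thick":
        return virtual_size  # thick SR: the active disk is allocated at full size
    if sr_type == "thin":
        return diff_size     # thin SR: only the diff against the base copy
    raise ValueError(f"unknown SR type: {sr_type!r}")


print(active_disk_usage("thick", 500 * GiB, 10 * GiB) // GiB)  # 500
print(active_disk_usage("thin", 500 * GiB, 10 * GiB) // GiB)   # 10
```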

I think it's more appropriate to use the term "dynamic image" for VHD files in general. Without the `thin=true` parameter, the image will be inflated to the total size of the disk with empty blocks, regardless of the SR type (thin or thick). With `thin=true`, we'll only create VHD blocks where there's actual data, so the VHD size will match the size of the actual content.

-

@olivierlambert OK I think I got it all now, my brain was totally confusing thin provisioned SRs and disk images, not enough coffee today! lol

This all makes a ton of sense now, thank you!

-

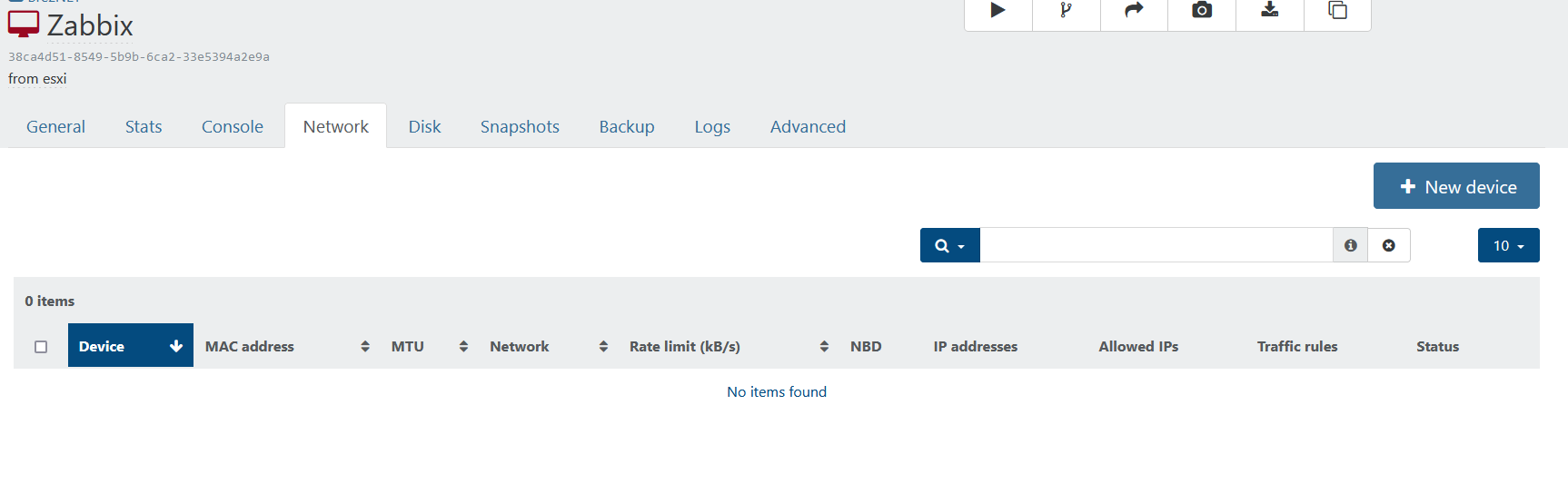

I've done 2 imports and the VMs are up and running. XO did not create the NIC, so I had to add it manually.

-

@olivierlambert Just as a quick update: I migrated a 32GB VDI to a different SR and then back, and it still uses 32GB, so it doesn't appear to have "deflated" it or anything like that.

Definitely think using thin=true is the way to go for anyone using this!

-

@planedrop this would only apply to block storage, I believe; NFS or ext is thin by default. When I imported a VM that was on an NFS share from ESXi to XCP-ng without thin=true set, the VM was imported with a thin disk.