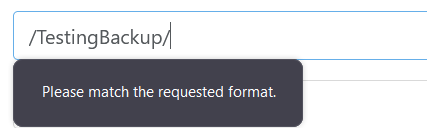

S3 Backup "Please Match The Requested Format"

-

@vincentp Interesting, I did try it without the leading slash but not with lowercase.

It's odd to me that entering something random that's not actually a bucket name made it work, then I could modify that entry to be whatever name I wanted (including caps) and it worked just fine.

It's working great for me now though too, going to do more testing with some very large VMs but with some smaller ones it was decent; not TrueNAS Cloudsync levels of fast (which can dump to B2 at gigabit plus) but fast enough that I think delta backups natively from XOA would work.

I don't recall exactly who was working on the S3 stuff but pinging @olivierlambert about it and maybe it was @florent who did some work on it? I don't remember but it does seem like a bug that things didn't work with capital letters (or maybe just something that isn't documented). I'd be happy to try and write up some documentation on this though, never done so before but I've got it working pretty well now.

-

@planedrop This is what I saw on the first full backup (the XOA vm)

Duration: 8 minutes

Size: 8.55 GiB

Speed: 18.46 MiB/s

Not particularly fast, but with differential backups it might be fast enough. The first differential backup:

Duration: a few seconds

Size: 76.22 MiB

Speed: 3.96 MiB/s

The real test will be when I migrate our production VMs to the host (this weekend), which are much larger - I will have to spend some time configuring the backups to stagger the full backups if that is the best speed I can get (the host has a 1Gbps connection).
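As a rough sanity check on the figures above, the sketch below recomputes the throughput of the first full backup from its size and duration, then estimates how long a larger full backup would take at the reported speed. The function names and the 500 GiB VM size are illustrative assumptions, not anything taken from Xen Orchestra, and the estimate assumes the speed stays constant.

```python
MIB_PER_GIB = 1024


def throughput_mib_s(size_gib: float, seconds: float) -> float:
    """Average transfer speed in MiB/s for a backup of size_gib taking seconds."""
    return size_gib * MIB_PER_GIB / seconds


def estimated_hours(size_gib: float, speed_mib_s: float) -> float:
    """Hours needed to move size_gib at a constant speed_mib_s."""
    return size_gib * MIB_PER_GIB / speed_mib_s / 3600


# First full backup from the post: 8.55 GiB in roughly 8 minutes.
print(round(throughput_mib_s(8.55, 8 * 60), 2))  # 18.24

# Hypothetical 500 GiB production VM at the reported 18.46 MiB/s.
print(round(estimated_hours(500, 18.46), 1))  # 7.7
```

The small gap between 18.24 and the reported 18.46 MiB/s is consistent with the duration being rounded to whole minutes.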

-

@vincentp Yeah for sure, with delta backups I imagine this speed being relatively ok. I saw similar results with my tests on a 50GB VM, going to test with much larger ones soon though.

I am also curious if multiple backups at the same time results in faster overall speeds? Not quite sure how parallel a single VM backup is, but maybe each can run at that 15 or so MiB/s so multiple backups can use more bandwidth, I'll have to test this to find out.

Just for your reference, currently the backup architecture I use is to backup things to a local SMB share on a NAS in house, I then use this to clone to B2 since it can be super fast (I have it set at a 70 megabyte limit and it uses that the entire time, makes backing up like 4TB overnight a possibility). This of course makes restoring more of a pain though if the local backup were to fail for some reason, since it would require me restoring the backup from B2 to the local NAS and then restoring that to XCP-ng.

As for migration, what are you migrating from? I've been doing that myself for a few VMs from ESXi in specific, and have also done it (couple years back) for Hyper-V, so happy to provide any feedback/answer questions if you've got any.

-

@planedrop I plan on spinning up a few more VMs this week to test the backup speed, I will post my results when done.

We are migrating from Hyper-V - where we had an ad hoc backup scheme at best. We have 2 identical servers, and each host backed up to an SMB share on the other host and then we periodically pulled those down locally (the hosts are in LA, I'm in Canberra, Australia).

One of the machines hung on reboot due to a failed raid array (hyper-v makes it hard to monitor things so probably failed weeks ago) - so I decided to migrate to xcp-ng as we did that recently with our dev servers in Sydney (we do have a truenas server and synology for shared storage/backups there) and it was a major upgrade on hyper-v!

In the LA DC we just have those two servers and no shared storage - which makes life difficult since you can't use the hosts for backup like we did with hyper-v. So I do need to use a cloud service for backups - it will be a while before I can afford another storage server.

-

@vincentp OK cool, would love to see those. Tag me if it's a different post haha!

And sounds like a fun project overall, migrations can be a pain but I for some reason always enjoy them lol. Glad XCP-ng has been solid for you so far though, that's also been my experience coming from Hyper-V a few years back.

Backup management has been super easy with it too, only reason I haven't been using B2 with it previously was because of speed (it's not uncommon for us to need to backup 100GB in a day) but I think it's finally fast enough to be usable for us. Restores have been super easy though too and the test restore feature has been a big deal, just takes the guess work out of testing backups.

-

@vincentp I am the one doing most of the work on S3. The remote and backup UX need a huge overhaul for XO 6, but we'll fix bugs until then

The pattern used to check seems strange, maybe it is too coupled to S3 bucket format, we'll try to relax it.

edit : it's because we took S3 naming convention from https://docs.aws.amazon.com/AmazonS3/latest/userguide/bucketnamingrules.html

Bucket names can consist only of lowercase letters, numbers, dots (.), and hyphens (-).

that's great news. Feel free to share your results, we always try to improve the backup speed. In the xo-server config file, there is a `writeBlockConcurrency` parameter; it's the number of blocks written to the remote in parallel.

Btw we are merging another code path to access backups using NBD, which should speed up delta backup and continuous replication when they are constrained by the speed of the XAPI (the Xen API used to read data from the host): https://github.com/vatesfr/xen-orchestra/pull/6716

Did you check the continuous replication? It could be an easy addition to your backup strategy if you have enough storage on your hosts. This way you can start a replicated VM immediately if there is an incident on the main host. It can even be in the same job as the backup to Backblaze (but in this case it will be constrained by the Backblaze backup speed).

-

@florent PR for bucket is here: https://github.com/vatesfr/xen-orchestra/pull/6757 It should be merged today and go live with the release at the end of the week.

-

@florent Sorry for my slow reply on this, ended up falling ill shortly after posting this, doing much better now though.

This makes sense to me why I was getting the format error, glad it's getting "fixed", thanks a ton!

As for the writeBlockConcurrency parameter, is this something that will eventually be tunable in the XOA GUI? I'll see if I can mess with this and see what speed improvements I can get though.

The NBD stuff looks very interesting to me, excited to test it.

Right now we aren't using Continuous Rep but will be in the future, we only have a single host currently in our stack for XCP-ng, but within a few months we should have 3 or 4 hosts for it (including one at a remote backup site of ours, which is where we will do the continuous rep to).

-

@planedrop said in S3 Backup "Please Match The Requested Format":

I am also curious if multiple backups at the same time results in faster overall speeds? Not quite sure how parallel a single VM backup is, but maybe each can run at that 15 or so MiB/s so multiple backups can use more bandwidth, I'll have to test this to find out.

So I did get to test this scenario out last night - finally got my production vms migrated over - that's another story - and did run 2 full backups at the same time.

Duration: 9 hours

Size: 512.03 GiB

Speed: 16.98 MiB/s

Duration: 7 hours

Size: 511.97 GiB

Speed: 20.25 MiB/s

I did monitor for a short while (it was 2am) with netdata and observed the outbound connection running between 600Mbps and 900Mbps - so I guess that's about as good as it's going to get (1Gbps connection). In the long term I really need to get a TrueNAS server at this site and run the backups to Backblaze from there.

-

@vincentp Interesting findings, odd that netdata showed those speeds though, considering 20MiB/s is only about 160Mbps. Seems like maybe it is a more bursty workload instead of a constant one?
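To make the mismatch concrete, here is a small sketch of the unit conversion. It assumes the backup report speeds are in MiB/s (1 MiB = 1,048,576 bytes) and that netdata shows decimal megabits per second; the function name is illustrative.

```python
BYTES_PER_MIB = 1024 * 1024
BITS_PER_BYTE = 8


def mib_s_to_mbit_s(mib_s: float) -> float:
    """Convert MiB/s (binary) to Mbit/s (decimal megabits)."""
    return mib_s * BYTES_PER_MIB * BITS_PER_BYTE / 1_000_000


# A single job at about 20 MiB/s.
print(round(mib_s_to_mbit_s(20), 1))  # 167.8

# Both full backups running together, using the two reported speeds.
print(round(mib_s_to_mbit_s(16.98 + 20.25), 1))  # 312.3
```

Even with both jobs combined, the average comes out well under the 600 to 900 Mbit/s seen on the link, which fits the idea that the traffic is bursty rather than constant.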

As for the TrueNAS, I'm actually trying to move away from that since it results in a double restore scenario, say something catastrophic does happen, ransomware, etc..... Then you have to both source and build a TrueNAS server, restore to it, then source and build an XCP-ng server and restore from the TrueNAS server. A much faster method would be just to install XCP-ng and XOA and then restore config and restore VMs direct from Backblaze.

-

@planedrop I'm about to give up on using Backblaze - the performance is really variable, and I am seeing full backups take > 24hrs at the moment. I'm not sure where the bottleneck is: the servers are co-located in LA, the Backblaze region is set to us.west, and there is a 1Gbps connection, but I just cannot get the backups done in a reasonable time. I'm having similar issues with continuous replication between the 2 servers (which have a 10G direct connection) - the incremental backups are fine, but the full backups just take too long.

For now I'm just going to focus on backing up the data inside the vm's (db, websites etc) as that will be quicker.

-

@vincentp Did you try activating the NBD option ?

Can you back one VM on a NFS on a separate job to check if it's really reading speed that is slow, or if it's the S3 and CR writer ?

-

@florent said in S3 Backup "Please Match The Requested Format":

@vincentp Did you try activating the NBD option ?

Can you back one VM on a NFS on a separate job to check if it's really reading speed that is slow, or if it's the S3 and CR writer ?

No, I don't have any shared storage at that site so cannot test NFS.

I do have NBD enabled on the direct 10G connection between the two hosts.

-

@vincentp did you also enable it in the xapioptions of xo-server / the relevant proxy ?

-

@vincentp Seems to me the full backups taking a long time isn't a huge issue considering you should mostly just have deltas from there on out, other than periodic fulls once in a long while.

I'm going to do more testing myself here soon to see if I can get things to speed up more, will test NBD.

Also @florent I am not seeing the `writeBlockConcurrency` option within the config files, is it one that I should add or should it already be there?

-

@planedrop you should add it in the `[backups]` section

-

@florent I'll give this a shot and see how performance is. Do you have a recommended number to set on this? 8?

-

@planedrop not really for now

-

@vincentp Wanted to get some more info from you regarding your backups that were taking a long time.

Was the > 24hrs mark the entire backup process or just the data transfer process?

If you were using Delta backups and then had the backup retention in the schedule set to 1, it is still honored (even if the schedule itself was disabled), so what might have been happening is that it was merging the delta chain every single time you did a backup which takes a long time.

I did some more testing (nothing with huge VMs yet, but with 4 100GB ish ones) and setting the backup retention to 7 (so it only merges things into the Delta every 7 days) made it so the deltas were much faster to complete, otherwise the backup process would get "stuck" while it's merging the deltas into the full, which is the part that takes the longest from my testing.

Of course, once you hit that 7 retention mark, then every backup from there on out has to merge 1 delta into the full so not sure this is a solution but was just curious.

I'm still doing testing but I think the merge process is going to be the largest issue, so much so that it might actually be faster to NOT use deltas and instead use full backups and just do them less frequently.
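The retention behaviour described above can be sketched as a toy model: each run adds one restore point, and once the chain is longer than the retention, the oldest delta gets merged into the full. This is a simplified illustration of the observation in this post, not Xen Orchestra's actual merge logic, and the function name is made up.

```python
def merges_per_run(retention: int, runs: int) -> list[int]:
    """Return how many merges each backup run triggers in the toy model."""
    chain_length = 0  # restore points currently kept (full plus deltas)
    merges = []
    for _ in range(runs):
        chain_length += 1  # every run adds one restore point
        merged = 0
        while chain_length > retention:
            chain_length -= 1  # oldest delta is folded into the full
            merged += 1
        merges.append(merged)
    return merges


# Retention 1: every run after the first has to merge.
print(merges_per_run(retention=1, runs=5))  # [0, 1, 1, 1, 1]

# Retention 7: the first 7 runs are merge-free, then one merge per run.
print(merges_per_run(retention=7, runs=10))  # [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]
```

In this model a higher retention only delays the merges; it does not reduce the steady-state cost of one merge per run.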

-

@florent any idea on a way to make the delta merges faster? I know there was some work in the pipeline for that a while back but don't recall what came of it. As of right now the actual uploads of the deltas are plenty fast but the merge process is super slow (for some VMs the upload happens in like 10 minutes but merge takes over an hour).

Would the `writeBlockConcurrency` setting help with merges at all?