Continuous Replication health check fails

-

Hi!

I've been testing normal and delta backups, as well as Disaster Recovery (DR) and Continuous Replication backups, with health checks enabled.

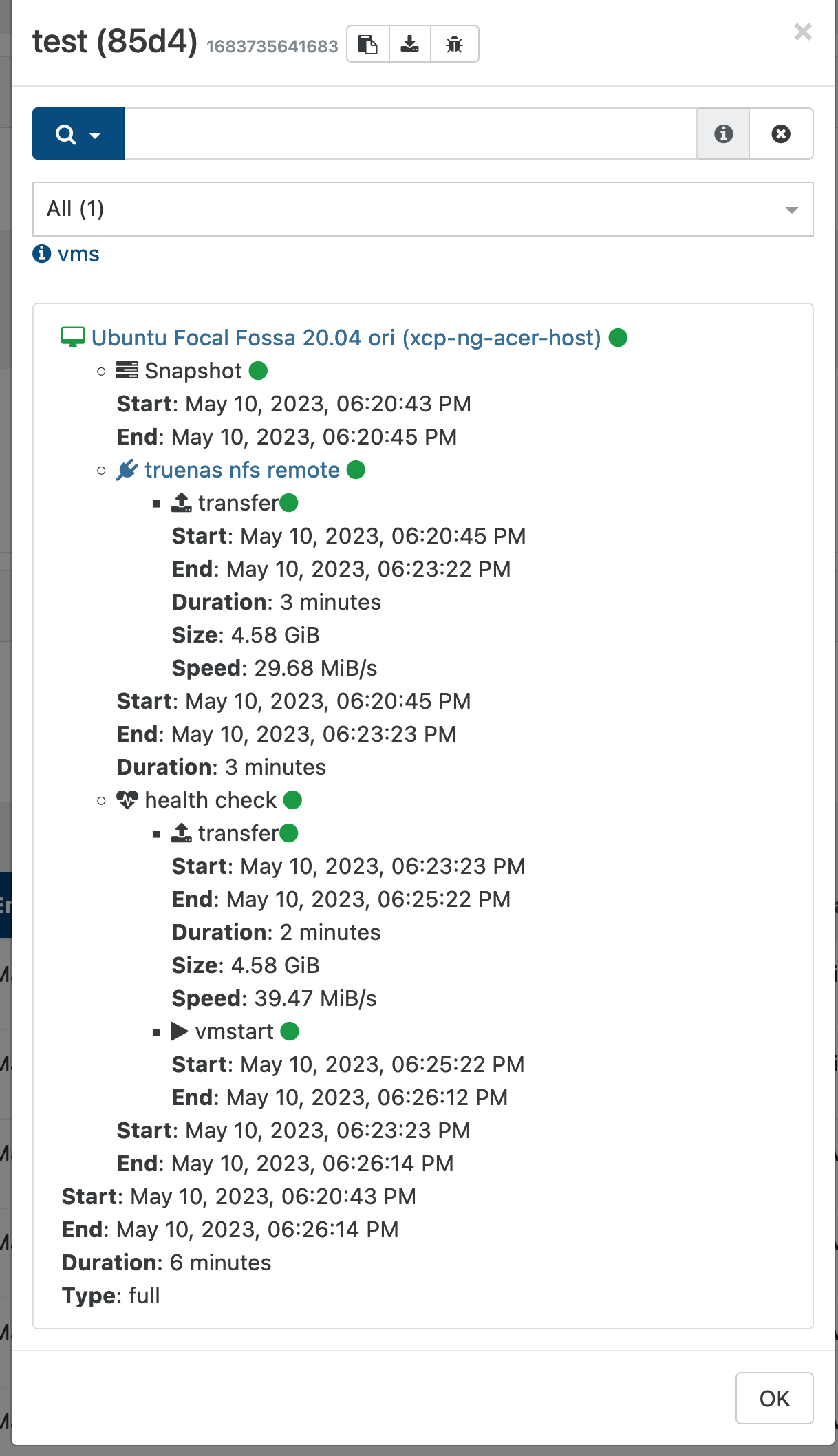

The "normal" backups work fine: the job creates a backup, and the health check boots a copy of the VM, waits for the management tools to load, and then destroys it. Works wonders:

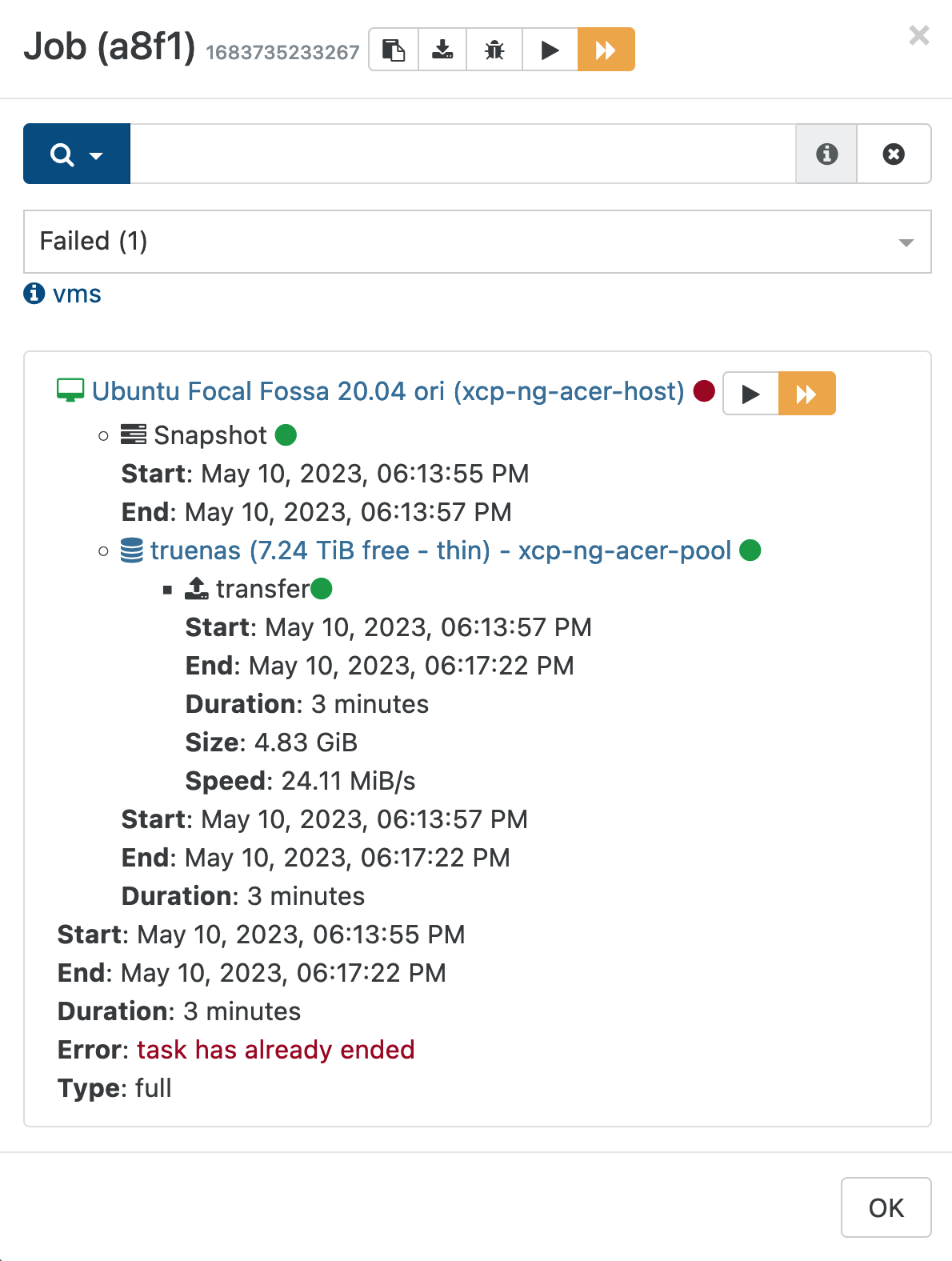

With the Continuous Replication method, however, everything works as long as I leave the "health check" option unchecked. With it checked, the job still partly works: it creates the replicated VM, but it never boots it. I can boot that VM manually afterwards, and it starts fine. Is this normal behavior?

The job fails with the error: `Error: task has already ended`

Full error log:

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1683735233267", "jobId": "0ae3a8f1-b21c-4302-a4f4-a175b3766380", "jobName": "ubuntuBackup", "message": "backup", "scheduleId": "fde39c31-a81a-4534-8265-3d63264cd629", "start": 1683735233267, "status": "failure", "infos": [ { "data": { "vms": [ "89cc6c0e-4a19-eaf7-edd2-c3189094e66e" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "89cc6c0e-4a19-eaf7-edd2-c3189094e66e", "name_label": "Ubuntu Focal Fossa 20.04 ori" }, "id": "1683735235212", "message": "backup VM", "start": 1683735235212, "status": "failure", "tasks": [ { "id": "1683735235906", "message": "snapshot", "start": 1683735235906, "status": "success", "end": 1683735237813, "result": "3504e7ab-240e-5d4b-d09b-97b59a4ae6a8" }, { "data": { "id": "340350a1-1198-edb3-b477-caaad85c02e3", "isFull": true, "name_label": "truenas", "type": "SR" }, "id": "1683735237814", "message": "export", "start": 1683735237814, "status": "success", "tasks": [ { "id": "1683735237848", "message": "transfer", "start": 1683735237848, "status": "success", "end": 1683735442805, "result": { "size": 5181273600 } } ], "end": 1683735442889 } ], "end": 1683735442907, "result": { "log": { "result": { "log": { "message": "health check", "parentId": "aw473bidagq", "event": "start", "taskId": "wzq5it9o9ip", "timestamp": 1683735442901 }, "message": "task has already ended", "name": "Error", "stack": "Error: task has already ended\n at Task.logAfterEnd (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:7:17)\n at Task.onLog (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:66:37)\n at #log (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:146:16)\n at new Task (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:82:14)\n at Task.run (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:40:12)\n at DeltaReplicationWriter.healthCheck (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/writers/_MixinReplicationWriter.js:26:19)\n at /opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:136:32\n at /opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:110:24\n at Zone.run (/opt/xo/xo-builds/xen-orchestra-202305091555/node_modules/node-zone/index.js:80:23)\n at Task.run (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:108:23)" }, "status": "failure", "event": "end", "taskId": "aw473bidagq", "timestamp": 1683735442901 }, "message": "task has already ended", "name": "Error", "stack": "Error: task has already ended\n at Task.logAfterEnd (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:7:17)\n at #log (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:146:16)\n at #end (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:141:14)\n at Task.failure (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:90:14)\n at /opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:119:14\n at Zone.run (/opt/xo/xo-builds/xen-orchestra-202305091555/node_modules/node-zone/index.js:80:23)\n at Task.run (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:108:23)\n at DeltaReplicationWriter.healthCheck (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/Task.js:136:19)\n at /opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/_VmBackup.js:458:46\n at callWriter (/opt/xo/xo-builds/xen-orchestra-202305091555/@xen-orchestra/backups/_VmBackup.js:148:15)" } } ], "end": 1683735442908 }

XO was built from source today.
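The log is a tree of tasks: each node carries a `message`, a `status`, an optional `result`, and optional nested `tasks`. Here is a minimal sketch, assuming only those field names as they appear in the log, that walks the tree and lists every failed task with its path and error message. The helper `findFailures` is hypothetical and is not part of Xen Orchestra; the sample object is a heavily trimmed excerpt of the log above.

```javascript
// Minimal sketch: list every failed task in an exported backup log.
// Assumes only the field names visible in the log above (message, status,
// result.message, tasks); `findFailures` is a hypothetical helper, not an XO API.
function findFailures(task, path = []) {
  const here = [...path, task.message]
  const failures = []
  if (task.status === 'failure') {
    failures.push({
      path: here.join(' > '),
      // Not every failed task carries its own result (the top-level job does not).
      error: task.result?.message ?? '(no error message)',
    })
  }
  // Recurse into subtasks, if any.
  for (const child of task.tasks ?? []) {
    failures.push(...findFailures(child, here))
  }
  return failures
}

// Trimmed excerpt of the log above: most fields are omitted.
const log = {
  message: 'backup',
  status: 'failure',
  tasks: [
    {
      message: 'backup VM',
      status: 'failure',
      tasks: [
        { message: 'snapshot', status: 'success' },
        {
          message: 'export',
          status: 'success',
          tasks: [{ message: 'transfer', status: 'success' }],
        },
      ],
      result: { message: 'task has already ended' },
    },
  ],
}

for (const { path, error } of findFailures(log)) {
  console.log(`${path}: ${error}`)
}
// backup: (no error message)
// backup > backup VM: task has already ended
```

On this excerpt, the snapshot and export/transfer subtasks all succeeded, so the failure is isolated to the `backup VM` task, whose result is the `task has already ended` error raised during the health check.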

-

Just updated to the latest commit: https://github.com/vatesfr/xen-orchestra/commit/4bd5b38aeb9d063e9666e3a174944cbbfcb92721

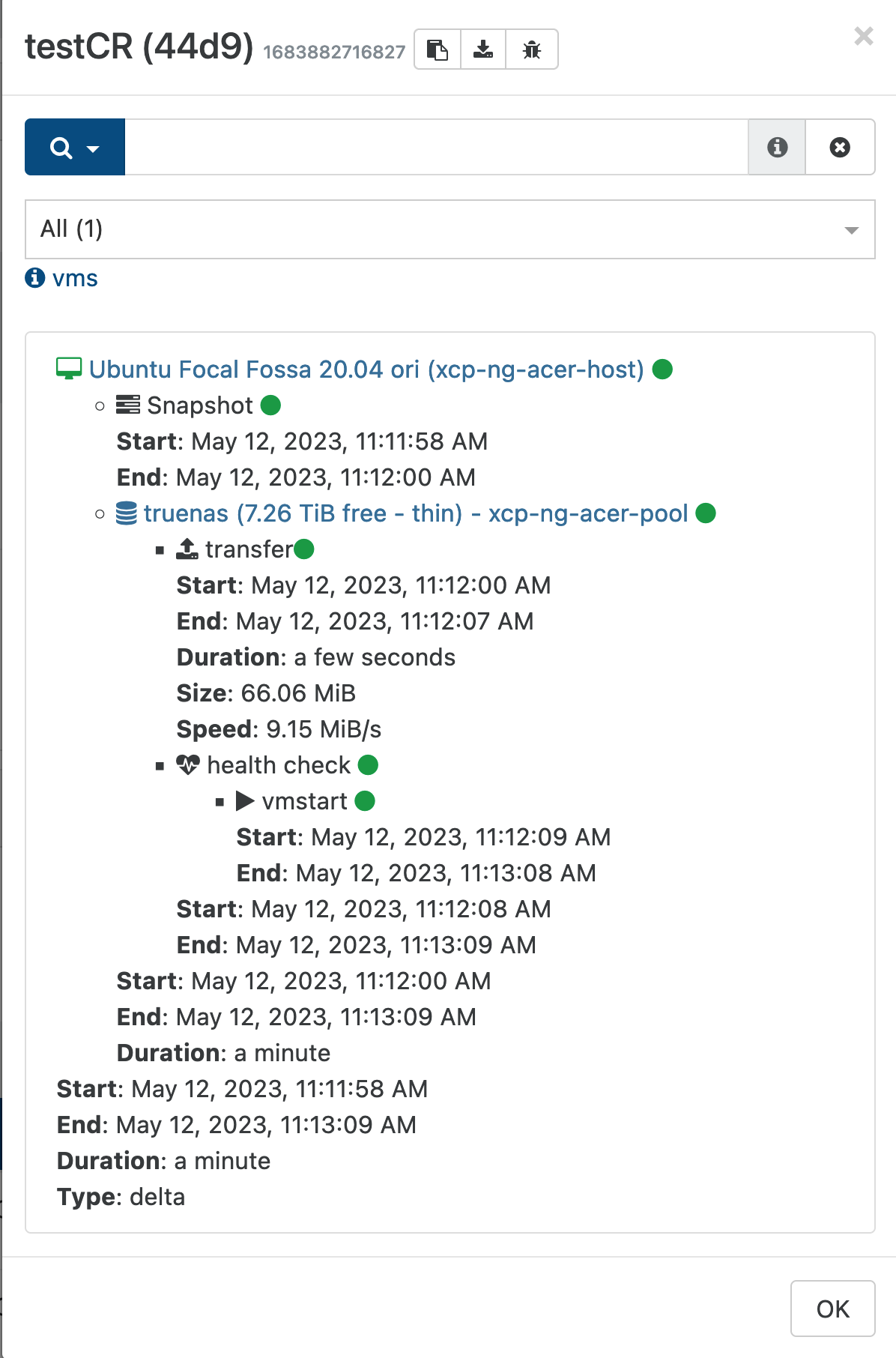

It's fixed now. Working wonderfully. Thanks!

-

Thanks for your report. Does this error ring a bell, @julien-f?

-

@bullerwins Hi, can you export the full JSON log (the button with the downward arrow in the title bar of the modal)?

-

@florent said in Continuous Replication health check fails:

> @bullerwins Hi, can you export the full JSON log (the button with the downward arrow in the title bar of the modal)?

Sure! I copied it to Pastebin, since I don't think I can upload .json files here.

-

@bullerwins Nice catch! With such a detailed error, the fix is trivial: https://github.com/vatesfr/xen-orchestra/pull/6830
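The stack trace in the error log shows the failing call chain: `DeltaReplicationWriter.healthCheck` → `Task.run` → `new Task` → `Task.onLog` → `Task.logAfterEnd`, which throws `task has already ended`. In other words, the "health check" subtask tried to report its `start` event to a parent task that had already finished. The sketch below is a hypothetical, simplified model of that failure mode: the `Task` class and both helper functions are illustrative names, not the actual `@xen-orchestra/backups` implementation, and the corrected ordering shows the general pattern rather than necessarily the change made in the PR.

```javascript
// Hypothetical, simplified model of the task lifecycle seen in the stack trace.
// This is NOT the real @xen-orchestra/backups Task class; names are illustrative.
class Task {
  constructor(name, parent) {
    this.name = name
    this.parent = parent
    this.ended = false
    this.logs = []
    // A new subtask reports its "start" event to its parent immediately,
    // mirroring the `new Task` -> `#log` -> `onLog` frames in the trace.
    this.log({ event: 'start', message: name })
  }

  // Forward an event to the parent task, if there is one.
  log(entry) {
    if (this.parent !== undefined) {
      this.parent.onLog(entry)
    }
  }

  // Receive an event from a subtask; rejected once this task has ended.
  onLog(entry) {
    if (this.ended) {
      throw new Error('task has already ended')
    }
    this.logs.push(entry)
  }

  end(status) {
    this.log({ event: 'end', status, message: this.name })
    this.ended = true
  }
}

// Failing ordering: the parent ends before the health check subtask starts.
function healthCheckAfterParentEnded() {
  const parent = new Task('backup VM')
  parent.end('success')
  try {
    new Task('health check', parent)
    return 'ok'
  } catch (error) {
    return error.message
  }
}

// Working ordering: the health check starts and ends while the parent is still open.
function healthCheckBeforeParentEnds() {
  const parent = new Task('backup VM')
  const healthCheck = new Task('health check', parent)
  healthCheck.end('success')
  parent.end('success')
  return parent.logs.map((entry) => `${entry.message}:${entry.event}`)
}

console.log(healthCheckAfterParentEnded()) // task has already ended
console.log(healthCheckBeforeParentEnds()) // [ 'health check:start', 'health check:end' ]
```

In this model, the only difference between the two functions is when the parent task ends relative to the subtask, which matches the symptom in this thread: the replication itself succeeded, and only the health check step failed.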

-

@florent said in Continuous Replication health check fails:

> @bullerwins Nice catch! With such a detailed error, the fix is trivial: https://github.com/vatesfr/xen-orchestra/pull/6830

Nice! As soon as it's merged, I'll update and check. I'm glad if this report helped you in any way!

-

olivierlambert marked this topic as a question.

-

olivierlambert marked this topic as solved.
