Patching and trying to Pool Hosts after they've been in production

-

Hey all,

Looking for some guidance on this. I have 3 hosts all running XCP-ng.

- XCP-ng 8.2.1 (GPLv2)

- XCP-ng 8.0.0 (GPLv2)

- XCP-ng 8.2.1 (GPLv2)

Respectively,

One host was recently running 7.6.0 and has been updated. I want to introduce this system back into my NFS shares that are attached to the other two hosts.

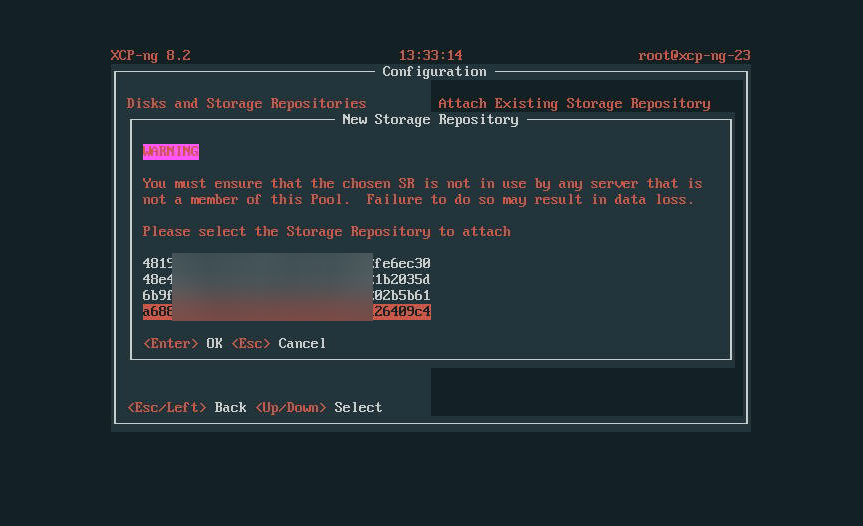

When I look to do that on Dom0 I get this warning message about potential data loss.

The 4th line item highlighted is the repo that I need to attach.

How worried should I be about this, as this storage is provided over NFS from a NAS?

What does this then do if I want to try and pool all of these hosts, which is the end goal?

-

Hi,

You can't share a storage between different pools. A shared storage is only shared with hosts inside the same pool. So first, you'll need to migrate (or warm migration, or CR) VMs from a single host to the destination pool with the "final" shared SR configured there.

Then, you'll remove the previous SR and join the destination pool.
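
The order of operations above (empty the host, remove its old SR, then join) can be pictured with a small readiness check. This is only a sketch: the `Host` class, its fields and `join_blockers` are hypothetical names for illustration, not XAPI objects or calls, and the version check is an assumption based on the thread's goal of pooling two up-to-date hosts.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Hypothetical, simplified view of a standalone host (not an XAPI record)."""
    name: str
    version: str
    resident_vms: list = field(default_factory=list)
    shared_srs: list = field(default_factory=list)


def join_blockers(host: Host, pool_version: str) -> list:
    """Return the reasons this host is not yet ready to join the destination pool."""
    blockers = []
    # Assumption: the joining host should run the same version as the pool.
    if host.version != pool_version:
        blockers.append(f"version {host.version} differs from pool version {pool_version}")
    # The host must be emptied first: migrate its VMs to the destination pool.
    if host.resident_vms:
        blockers.append(f"{len(host.resident_vms)} VM(s) still to migrate")
    # The previous shared SR has to be removed before joining.
    if host.shared_srs:
        blockers.append(f"{len(host.shared_srs)} shared SR(s) still attached")
    return blockers


old_host = Host("host-2", "8.0.0", resident_vms=["vm-a"], shared_srs=["nfs-old"])
print(join_blockers(old_host, "8.2.1"))

# After updating the host, migrating its VMs away and removing the old SR:
ready_host = Host("host-2", "8.2.1")
print(join_blockers(ready_host, "8.2.1"))  # prints []
```

An empty list here only means the three conditions named in this sketch are met; the real join can still be refused for other reasons.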

-

@olivierlambert said in Patching and trying to Pool Hosts after they've been in production:

> Hi,
>
> You can't share a storage between different pools. A shared storage is only shared with hosts inside the same pool. So first, you'll need to migrate (or warm migration, or CR) VMs from a single host to the destination pool with the "final" shared SR configured there.
>
> Then, you'll remove the previous SR and join the destination pool.

That is extremely odd, because it was at least set up like that before my time with this org.

-

Each host has its own pool (of itself), and has its VM storage on a NAS that it accesses over NFS.

-

You can connect to the same NAS, but each pool will have a dedicated folder named after the SR UUID. So you can't actually share a VM disk between 2 pools, by design (the pool is the only way to know which host gets the lock on the disk).
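
To make the folder layout concrete, here is a minimal sketch of how two pools pointed at the same NFS export end up in separate directories. The export path and all UUIDs are made up for illustration, and the `<vdi-uuid>.vhd` file naming is an assumption about how an NFS SR stores its disks.

```python
from pathlib import PurePosixPath


def vdi_path(export: str, sr_uuid: str, vdi_uuid: str) -> PurePosixPath:
    """Build the path of a disk file inside the SR's own subfolder on the export."""
    # Assumption: one folder per SR UUID, one <vdi-uuid>.vhd file per disk.
    return PurePosixPath(export) / sr_uuid / f"{vdi_uuid}.vhd"


EXPORT = "/export/xcp"  # hypothetical NFS export on the NAS

# Hypothetical SR UUIDs: each pool created its own SR on the same export.
pool_a_disk = vdi_path(EXPORT, "11111111-aaaa-aaaa-aaaa-111111111111", "vdi-0001")
pool_b_disk = vdi_path(EXPORT, "22222222-bbbb-bbbb-bbbb-222222222222", "vdi-0001")

print(pool_a_disk)
print(pool_b_disk)

# Same NAS, same export, but never the same folder.
print(pool_a_disk.parent == pool_b_disk.parent)  # prints False
```

The point of the sketch is only that the SR UUID is part of the path, so each pool's disks live in a folder that the other pool does not manage.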

-

@olivierlambert said in Patching and trying to Pool Hosts after they've been in production:

> You can connect to the same NAS, but each pool will have a dedicated folder named after the SR UUID. So you can't actually share a VM disk between 2 pools, by design (the pool is the only way to know which host gets the lock on the disk).

Yeah, and that is the challenge I'm trying to remedy. Ideally I want to vacate one more of the hosts, so that I have two up-to-date XCP-ng instances, and then pool those two systems.

My challenge is that these hosts were all set up separately from each other over a year or so, but they do have the same architecture.

-

As I said, you need to migrate the VMs to the target pool until the host is empty, and then you can add it to the target pool.

-

@olivierlambert said in Patching and trying to Pool Hosts after they've been in production:

> As I said, you need to migrate the VMs to the target pool until the host is empty, and then you can add it to the target pool.

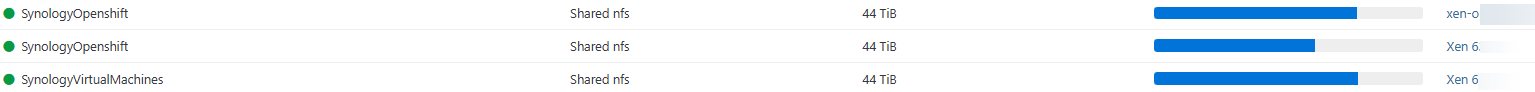

I think I got it sorted out and was able to add a new storage repo to the pool to use for migrating VMs.

I tested with one VM as a migration from the old host to the updated one and it failed with something similar to the below. I was able to restore from backup, but would like to know if this should be expected (cause I can see the headache now lol).

vm.migrate { "vm": "b735466c-08f4-2d1a-3778-7e17401ec822", "mapVifsNetworks": { "490fbf16-4951-2856-a8d8-0bb8ae8f67a6": "e7a40a11-d541-ce1f-f246-c1c3d855820b" }, "migrationNetwork": "e7a40a11-d541-ce1f-f246-c1c3d855820b", "sr": "3df813d4-0818-6b95-a15f-f704ae7858e0", "targetHost": "165ebeed-a67e-4b7c-8898-6a9656d105a2" } { "code": "VM_INCOMPATIBLE_WITH_THIS_HOST", "params": [ "OpaqueRef:7cac0ba3-d626-42ff-b2d2-eb55b627dff9", "OpaqueRef:705ea907-9ac3-43df-93c7-87fb85f9ba61", "VM last booted on a CPU with features this host's CPU does not have." ], "task": { "uuid": "254495fa-8950-2f36-5997-a7500c8bd1f3", "name_label": "Async.VM.assert_can_migrate", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20240111T20:55:19Z", "finished": "20240111T20:55:19Z", "status": "failure", "resident_on": "OpaqueRef:c065fa00-9e2d-47de-a5f2-bc7426b945cd", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "VM_INCOMPATIBLE_WITH_THIS_HOST", "OpaqueRef:7cac0ba3-d626-42ff-b2d2-eb55b627dff9", "OpaqueRef:705ea907-9ac3-43df-93c7-87fb85f9ba61", "VM last booted on a CPU with features this host's CPU does not have." ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi/rbac.ml)(line 231))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 103)))" }, "message": "VM_INCOMPATIBLE_WITH_THIS_HOST(OpaqueRef:7cac0ba3-d626-42ff-b2d2-eb55b627dff9, OpaqueRef:705ea907-9ac3-43df-93c7-87fb85f9ba61, VM last booted on a CPU with features this host's CPU does not have.)", "name": "XapiError", "stack": "XapiError: VM_INCOMPATIBLE_WITH_THIS_HOST(OpaqueRef:7cac0ba3-d626-42ff-b2d2-eb55b627dff9, OpaqueRef:705ea907-9ac3-43df-93c7-87fb85f9ba61, VM last booted on a CPU with features this host's CPU does not have.) 
at Function.wrap (file:///opt/xen-orchestra/packages/xen-api/_XapiError.mjs:16:12) at default (file:///opt/xen-orchestra/packages/xen-api/_getTaskResult.mjs:11:29) at Xapi._addRecordToCache (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1006:24) at file:///opt/xen-orchestra/packages/xen-api/index.mjs:1040:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1030:12) at Xapi._watchEvents (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1203:14) at runNextTicks (node:internal/process/task_queues:60:5) at processImmediate (node:internal/timers:447:9) at process.callbackTrampoline (node:internal/async_hooks:128:17)" }

Which, okay, I get it. I forced it anyway (should I cold migrate in this case?)

When I did force the migration anyway, this came out and the VM wasn't operable (I restored from backup just to get back to what I had, no biggie):

vm.migrate { "vm": "b735466c-08f4-2d1a-3778-7e17401ec822", "force": true, "mapVifsNetworks": { "490fbf16-4951-2856-a8d8-0bb8ae8f67a6": "e7a40a11-d541-ce1f-f246-c1c3d855820b" }, "migrationNetwork": "e7a40a11-d541-ce1f-f246-c1c3d855820b", "sr": "3df813d4-0818-6b95-a15f-f704ae7858e0", "targetHost": "165ebeed-a67e-4b7c-8898-6a9656d105a2" } { "code": "INTERNAL_ERROR", "params": [ "Xenops_interface.Xenopsd_error([S(Internal_error);S(Xenops_migrate.Remote_failed(\"unmarshalling error message from remote\"))])" ], "task": { "uuid": "5ed69757-c5da-cf5d-da8c-3197c7098262", "name_label": "Async.VM.migrate_send", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20240111T20:55:31Z", "finished": "20240111T21:07:37Z", "status": "failure", "resident_on": "OpaqueRef:c065fa00-9e2d-47de-a5f2-bc7426b945cd", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "INTERNAL_ERROR", "Xenops_interface.Xenopsd_error([S(Internal_error);S(Xenops_migrate.Remote_failed(\"unmarshalling error message from remote\"))])" ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xenopsd-xc)(filename lib/xenops_migrate.ml)(line 65))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2365))((process xenopsd-xc)(filename lib/open_uri.ml)(line 20))((process xenopsd-xc)(filename lib/open_uri.ml)(line 20))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xenopsd-xc)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2316))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2751))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2761))((process xenopsd-xc)(filename lib/xenops_server.ml)(line 2780))((process xenopsd-xc)(filename lib/task_server.ml)(line 162))((process xapi)(filename ocaml/xapi/xapi_xenops.ml)(line 3154))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 
24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/xapi_xenops.ml)(line 3319))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 200))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 206))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 230))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 1340))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/xapi_vm_migrate.ml)(line 1471))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 128))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 231))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 103)))" }, "message": "INTERNAL_ERROR(Xenops_interface.Xenopsd_error([S(Internal_error);S(Xenops_migrate.Remote_failed(\"unmarshalling error message from remote\"))]))", "name": "XapiError", "stack": "XapiError: INTERNAL_ERROR(Xenops_interface.Xenopsd_error([S(Internal_error);S(Xenops_migrate.Remote_failed(\"unmarshalling error message from remote\"))])) at Function.wrap (file:///opt/xen-orchestra/packages/xen-api/_XapiError.mjs:16:12) at default (file:///opt/xen-orchestra/packages/xen-api/_getTaskResult.mjs:11:29) at Xapi._addRecordToCache (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1006:24) at file:///opt/xen-orchestra/packages/xen-api/index.mjs:1040:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1030:12) at Xapi._watchEvents (file:///opt/xen-orchestra/packages/xen-api/index.mjs:1203:14) at runNextTicks (node:internal/process/task_queues:60:5) at processImmediate (node:internal/timers:447:9) at process.callbackTrampoline 
(node:internal/async_hooks:128:17)" }

-

Warm migration should work in this case because the VM is halted then restarted as part of the process. See here for more details.
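
As a rough illustration of that advice, the sketch below looks at the `code` field of a failed migration result and suggests a next step. The function name and the returned labels are made up for this example; only the error code, the OpaqueRefs and the message text come from the log posted above.

```python
def suggest_strategy(error: dict) -> str:
    """Map a failed vm.migrate error to a suggested next step (illustrative only)."""
    code = error.get("code")
    if code == "VM_INCOMPATIBLE_WITH_THIS_HOST":
        # The VM last booted on a CPU with features the destination lacks,
        # so it needs a halt and fresh start on the target rather than a forced live move.
        return "warm-or-cold-migration"
    # Anything else (for example INTERNAL_ERROR) needs a closer look at the logs.
    return "investigate"


# Trimmed copy of the first error from the thread.
first_failure = {
    "code": "VM_INCOMPATIBLE_WITH_THIS_HOST",
    "params": [
        "OpaqueRef:7cac0ba3-d626-42ff-b2d2-eb55b627dff9",
        "OpaqueRef:705ea907-9ac3-43df-93c7-87fb85f9ba61",
        "VM last booted on a CPU with features this host's CPU does not have.",
    ],
}

print(suggest_strategy(first_failure))  # prints warm-or-cold-migration
```

The design choice here is to treat the incompatibility error as a signal to change migration type instead of retrying with `force`, which is what led to the unusable VM earlier in the thread.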

-

@Danp said in Patching and trying to Pool Hosts after they've been in production:

> Warm migration should work in this case because the VM is halted then restarted as part of the process. See here for more details.

Sweet, I'll set up something small on the old host for testing and use the Warm Migration process.
