Maintenance mode auto disabled after some time

-

@nick-lloyd No HA.

So that's part of the rolling update logic. In that case, yes, maintenance mode should be disabled after the reboot.

For real hardware maintenance, the best way is to disable the plugin, migrate the VMs once, and then do what I need.

-

You need to disable the load balancer plugin and/or HA if you use either of them. That's what RPU (Rolling Pool Update) does behind the scenes.
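The ordering advised here (turn off anything that could move VMs back, then evacuate, then work on the host) can be written down as a small checklist. This is a minimal Python sketch, not an XO or XAPI API: `maintenance_plan` is a hypothetical helper that only orders the steps, and re-enabling HA and the plugin afterwards is an assumption rather than something stated in the thread.

```python
def maintenance_plan(host: str, load_balancer_on: bool, ha_on: bool) -> list[str]:
    """Hypothetical checklist for manual host maintenance.

    Nothing here calls XO or XAPI; it only returns the steps in order.
    """
    steps = []
    # Anything that might move VMs back onto the host must be off first.
    if load_balancer_on:
        steps.append("disable load balancer plugin")
    if ha_on:
        steps.append("disable HA")
    # In XO, enabling maintenance mode triggers a host evacuation.
    steps.append(f"enable maintenance mode on {host} (evacuates its VMs)")
    steps.append(f"confirm no VMs remain on {host}")
    steps.append(f"perform hardware maintenance on {host}")
    # Assumption: restore whatever was turned off, in reverse order.
    if ha_on:
        steps.append("re-enable HA")
    if load_balancer_on:
        steps.append("re-enable load balancer plugin")
    return steps


for step in maintenance_plan("host1", load_balancer_on=True, ha_on=False):
    print("-", step)
```

The point of the ordering is that the balancer or HA could otherwise start migrating VMs while the evacuation is still running.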

-

@olivierlambert I did more tests.

The load balancer plugin is disabled. In a pool with 3 hosts, I enabled maintenance mode on 2 of them. After 20-30 minutes, maintenance mode was disabled on the first host, and 5 minutes later on the second, right after one VM migration completed.

The VMs were not evacuated; only about 1-2 were moved off each host. A log entry was created for both hosts at the moment maintenance mode was disabled:

```
host.setMaintenanceMode { "id": "85630f99-fabf-4a3c-a75b-b6a7fea42c81", "maintenance": true } { "code": "VM_SUSPEND_TIMEOUT", "params": [ "OpaqueRef:7937dfa9-50d9-411e-a1de-f3b51d4763be", "1200." ], "task": { "uuid": "36aebea0-8422-68a2-cce9-e88f7e7fbf33", "name_label": "Async.host.evacuate", "name_description": "", "allowed_operations": [], "current_operations": {}, "created": "20240828T07:40:27Z", "finished": "20240828T08:02:05Z", "status": "failure", "resident_on": "OpaqueRef:f4ca6efc-e3c3-4eb2-92d7-e431a7376162", "progress": 1, "type": "<none/>", "result": "", "error_info": [ "VM_SUSPEND_TIMEOUT", "OpaqueRef:7937dfa9-50d9-411e-a1de-f3b51d4763be", "1200." ], "other_config": {}, "subtask_of": "OpaqueRef:NULL", "subtasks": [], "backtrace": "(((process xapi)(filename ocaml/xapi-client/client.ml)(line 7))((process xapi)(filename ocaml/xapi-client/client.ml)(line 19))((process xapi)(filename ocaml/xapi-client/client.ml)(line 6172))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/xapi_host.ml)(line 612))((process xapi)(filename ocaml/xapi/xapi_host.ml)(line 621))((process xapi)(filename hashtbl.ml)(line 266))((process xapi)(filename hashtbl.ml)(line 272))((process xapi)(filename hashtbl.ml)(line 277))((process xapi)(filename ocaml/xapi/xapi_host.ml)(line 629))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))" }, "message": "VM_SUSPEND_TIMEOUT(OpaqueRef:7937dfa9-50d9-411e-a1de-f3b51d4763be, 1200.)", "name": "XapiError", "stack": "XapiError: VM_SUSPEND_TIMEOUT(OpaqueRef:7937dfa9-50d9-411e-a1de-f3b51d4763be, 1200.)
    at Function.wrap (file:///opt/xo/xo-builds/xen-orchestra-202408271341/packages/xen-api/_XapiError.mjs:16:12)
    at default (file:///opt/xo/xo-builds/xen-orchestra-202408271341/packages/xen-api/_getTaskResult.mjs:13:29)
    at Xapi._addRecordToCache (file:///opt/xo/xo-builds/xen-orchestra-202408271341/packages/xen-api/index.mjs:1041:24)
    at file:///opt/xo/xo-builds/xen-orchestra-202408271341/packages/xen-api/index.mjs:1075:14
    at Array.forEach (<anonymous>)
    at Xapi._processEvents (file:///opt/xo/xo-builds/xen-orchestra-202408271341/packages/xen-api/index.mjs:1065:12)
    at Xapi._watchEvents (file:///opt/xo/xo-builds/xen-orchestra-202408271341/packages/xen-api/index.mjs:1238:14)" }
```

-

@Tristis-Oris said in Maintenance mode auto disabled after some time:

VM_SUSPEND_TIMEOUT

This means we couldn't migrate the VM, because the VM couldn't suspend. Fix that first and the rest should work.
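The `1200.` parameter in that error looks like the suspend timeout in seconds, i.e. 20 minutes, which would fit the 20-30 minute window reported above. A minimal Python sketch for pulling those numbers out of the error record; `parse_xapi_time` and `summarize_failure` are hypothetical helpers, the record is a trimmed copy of the one in the log, and reading the last `error_info` entry as a timeout in seconds is an assumption.

```python
import json
from datetime import datetime, timezone

# XAPI task timestamps in the pasted log look like "20240828T07:40:27Z".
XAPI_TIME_FORMAT = "%Y%m%dT%H:%M:%SZ"


def parse_xapi_time(value: str) -> datetime:
    """Parse a XAPI-style UTC timestamp such as '20240828T07:40:27Z'."""
    return datetime.strptime(value, XAPI_TIME_FORMAT).replace(tzinfo=timezone.utc)


def summarize_failure(error: dict) -> dict:
    """Hypothetical helper: extract the useful fields from an XO/XAPI error record."""
    task = error["task"]
    code, *params = task["error_info"]
    started = parse_xapi_time(task["created"])
    finished = parse_xapi_time(task["finished"])
    return {
        "code": code,
        "vm_ref": params[0],
        # Assumption: the last parameter is the timeout in seconds ("1200." -> 1200.0).
        "timeout_s": float(params[-1]),
        "task": task["name_label"],
        "task_duration_s": (finished - started).total_seconds(),
    }


# Trimmed-down copy of the error record from the log.
record = json.loads("""
{
  "code": "VM_SUSPEND_TIMEOUT",
  "task": {
    "name_label": "Async.host.evacuate",
    "created": "20240828T07:40:27Z",
    "finished": "20240828T08:02:05Z",
    "status": "failure",
    "error_info": ["VM_SUSPEND_TIMEOUT",
                   "OpaqueRef:7937dfa9-50d9-411e-a1de-f3b51d4763be", "1200."]
  }
}
""")

summary = summarize_failure(record)
print(summary["code"], summary["timeout_s"] / 60, summary["task_duration_s"] / 60)
```

On this record it reports a 20-minute timeout against an evacuate task that ran from 07:40:27 to 08:02:05 UTC, a little under 22 minutes.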

-

@olivierlambert Hmm. Why can't it suspend? As I understand it, that's the last step of the migration, once the data has already been transferred. I've never had this problem before.

It's a huge Docker VM, which is really heavy and needs a lot of time to shut down. The migration is still ongoing: the task wasn't interrupted, but maintenance mode has already been canceled. I'm still fairly sure it has the same 20-30 minute timeout as the rolling update.

-

Well, the migration isn't going very well, but the services are still working.

-

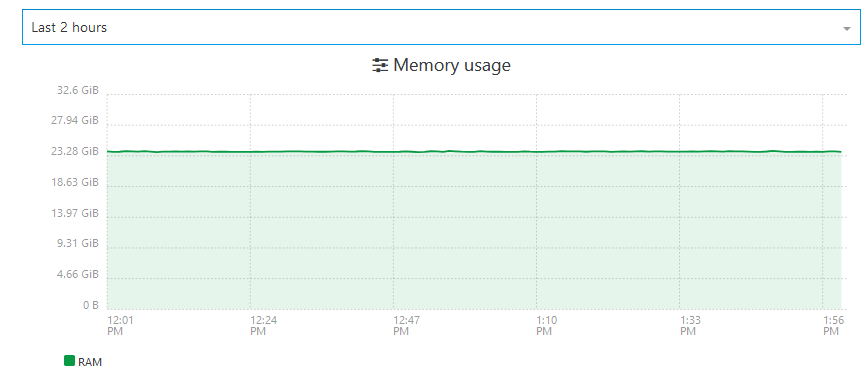

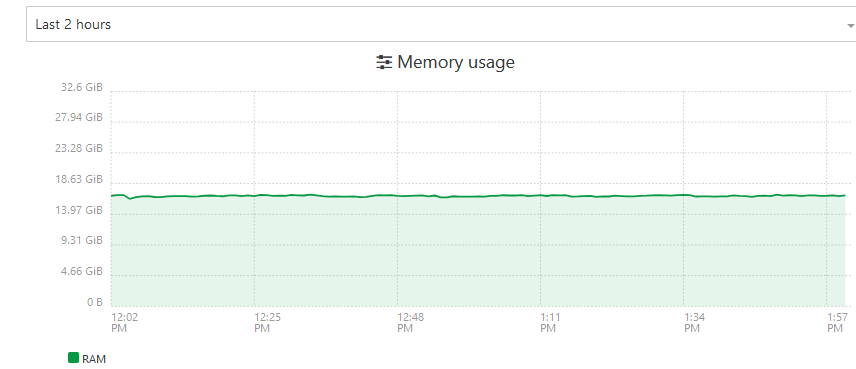

So your problem is the following: when you start a migration, Xen will "deflate" the VM by shrinking its memory to its "dynamic min" value. If your system doesn't have enough memory to run at dynamic min, the migration will fail. Raise your dynamic min to a higher value to solve this.
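To make the "deflate to dynamic min" point concrete, here is a minimal Python sketch of a pre-migration check. It assumes you already have a rough figure for how much memory the guest really uses (measured inside the VM); `MemorySettings`, `check_migration_memory` and the 10% headroom are hypothetical, not XO or XAPI features. The ordering rule it enforces (static min <= dynamic min <= dynamic max <= static max) is XAPI's own constraint on these four fields.

```python
from dataclasses import dataclass

GiB = 1024 ** 3


@dataclass
class MemorySettings:
    """VM memory limits in bytes, mirroring the four XAPI memory fields."""
    static_min: int
    dynamic_min: int
    dynamic_max: int
    static_max: int

    def is_valid(self) -> bool:
        # XAPI requires static_min <= dynamic_min <= dynamic_max <= static_max.
        return self.static_min <= self.dynamic_min <= self.dynamic_max <= self.static_max


def check_migration_memory(mem: MemorySettings, guest_needs: int, headroom_pct: int = 10):
    """Hypothetical check: does the guest still fit once shrunk to dynamic_min?

    guest_needs is an estimate of the memory the guest really uses;
    headroom_pct is an arbitrary safety margin, not an XCP-ng default.
    Returns (fits, suggested_dynamic_min).
    """
    if not mem.is_valid():
        raise ValueError("memory limits are not ordered as XAPI expects")
    target = guest_needs * (100 + headroom_pct) // 100
    fits = mem.dynamic_min >= target
    # dynamic_min cannot go above dynamic_max; if the suggestion is capped there,
    # raising dynamic_min is not enough and the VM itself needs more RAM.
    suggested = min(max(mem.dynamic_min, target), mem.dynamic_max)
    return fits, suggested


mem = MemorySettings(static_min=2 * GiB, dynamic_min=8 * GiB,
                     dynamic_max=32 * GiB, static_max=32 * GiB)
fits, suggested = check_migration_memory(mem, guest_needs=20 * GiB)
print(fits, suggested / GiB)
```

With a guest using about 20 GiB and dynamic min at 8 GiB, the check fails and suggests a dynamic min of roughly 22 GiB.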

-

@olivierlambert Hm. I never changed this value, but a database VM with a high memory load has always migrated fine.

So I just need to increase the dynamic min memory?

-

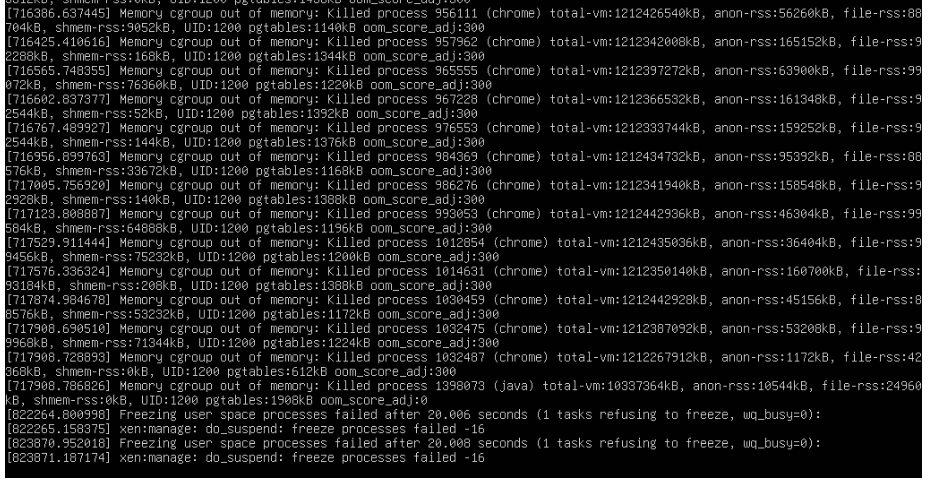

Well, if you are already at 32/32/32, it just means your guest doesn't have enough RAM at the moment; you need more. You can see the OOM killer trying to keep up. In short, your guest OS isn't sized properly.

-

@olivierlambert This is the DB VM. It has always migrated fine without any problem.

The Docker VM can't.

There's something I don't understand.

-

This is an issue inside your guest, so take time to read the logs and try to make sense of it. It's possible you don't have enough resources in the VM to make the migration, but from "outside" it's hard to tell.