@planedrop Not opposed to cloud of course, but it's a network with no internet!

Best posts made by DustyArmstrong

-

RE: XO Backups - Offline Storage Best Practices?

-

RE: Detached VM Snapshots after Warm Migration

Well, scratch the help, I have fixed it.

Bad VM:

xe vm-param-list uuid={uuid} | grep "other-config"

other-config (MRW): xo:backup:schedule: one-time; xo:backup:vm: {uuid}; xo:backup:datetime: 20260214T18:13:03Z; xo:backup:job: 97665b6d-1aff-43ba-8afb-11c7455c16ff; xo:backup:sr: {uuid}; auto_poweron: true; base_template_name: Debian Buster 10; [...]

Clean VM:

other-config (MRW): auto_poweron: true; base_template_name: Debian Buster 10; [...]

Stale references to my old backup job were causing the VM to become disassociated from the VDI chain. Removing the extra config keys resolved it. Simple when you know where to look.

xe vm-param-remove uuid={uuid} param-name=other-config param-key=xo:backup:job
xe vm-param-remove uuid={uuid} param-name=other-config param-key=xo:backup:sr
xe vm-param-remove uuid={uuid} param-name=other-config param-key=xo:backup:vm
xe vm-param-remove uuid={uuid} param-name=other-config param-key=xo:backup:schedule
xe vm-param-remove uuid={uuid} param-name=other-config param-key=xo:backup:datetime

The VMs now snapshot cleanly.
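If several VMs need the same treatment, the five xe vm-param-remove calls above can be folded into a loop. This is a minimal sketch, assuming that `xe vm-param-get ... param-name=other-config` prints the map as `key: value; key: value` (the same format vm-param-list shows) and that every stale key starts with `xo:backup:`. The function names are hypothetical, and by default it only prints the commands; set APPLY=1 to actually run them.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: strip stale xo:backup:* keys from a VM's other-config.

# Read an other-config map on stdin (optionally prefixed with
# "other-config (MRW): " as in vm-param-list output) and print
# one xo:backup:* key per line.
xo_backup_keys() {
  sed -e 's/^[[:space:]]*other-config ([A-Z]*):[[:space:]]*//' \
    | tr ';' '\n' \
    | sed -e 's/^[[:space:]]*//' -e 's/: .*$//' \
    | grep '^xo:backup:'
}

# Remove every xo:backup:* key from the given VM's other-config.
# Dry run by default: the xe commands are only printed unless APPLY=1.
strip_xo_backup_keys() {
  local uuid="$1" key
  xe vm-param-get uuid="$uuid" param-name=other-config \
    | xo_backup_keys \
    | while IFS= read -r key; do
        if [ "${APPLY:-0}" = "1" ]; then
          # </dev/null so xe cannot swallow the keys being read by the loop
          xe vm-param-remove uuid="$uuid" param-name=other-config param-key="$key" </dev/null
        else
          echo "xe vm-param-remove uuid=$uuid param-name=other-config param-key=$key"
        fi
      done
}
```

For example, `strip_xo_backup_keys {uuid}` shows what would be removed, and `APPLY=1 strip_xo_backup_keys {uuid}` removes it. Matching on the `xo:backup:` prefix, rather than hard-coding the five key names, also catches any other keys from that family, so it is worth reviewing the dry-run output first.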

-

RE: Has REST API changed (Cannot GET backup logs)?

@julien-f Thank you, and thanks for the quick resolution. You guys rock.

-

RE: Has REST API changed (Cannot GET backup logs)?

@olivierlambert Yes, but I didn't see any changes for backup/logs, which is where the issue seemed to arise from. I pull the status from each log entry, not from the job info itself.

@julien-f Awesome, thanks!

-

RE: New Rust Xen guest tools

I'm testing the agent out on Arch Linux (mainly due to the spotty 'support' in the AUR and generally), and it is working fine, better than what I had before (which did not report VM info properly). I've set it up as a systemd service to replace the previous one I had, and that is also working as expected.

This would be fun to contribute towards.
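For anyone wanting to do the same, a unit along these lines is one way to run the agent under systemd. This is a minimal sketch, not the project's own unit: the binary path /usr/bin/xen-guest-agent is an assumption (adjust it to wherever your build or package installs the agent), and the hypothetical helper only prints the unit so it can be reviewed before being written to disk.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a minimal systemd unit for the Rust Xen guest agent.
# The default binary path is an assumption; pass the real path as $1.
xen_agent_unit() {
  local bin="${1:-/usr/bin/xen-guest-agent}"
  cat <<EOF
[Unit]
Description=Xen guest agent

[Service]
ExecStart=${bin}
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}
```

For example: `xen_agent_unit | sudo tee /etc/systemd/system/xen-guest-agent.service`, then `sudo systemctl daemon-reload` and `sudo systemctl enable --now xen-guest-agent`. If the agent's repository or package already ships a unit file, that is likely the better choice.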

-

RE: Console keyboard problems using Firefox

For anyone who comes across this: you can just add an exception for your management page, and Shift will work on the console.

Settings > Privacy & Security > Enhanced Tracking Protection > Manage Exceptions > add the site URL, e.g. https://xo.fqdn.com

Latest posts made by DustyArmstrong

-

RE: Detached VM Snapshots after Warm Migration

@florent No problem, just thought it would be fun.

Thanks for your work anyway!

-

RE: Detached VM Snapshots after Warm Migration

@florent I'd be interested in giving it a shot if you accept PRs. But if you already have it planned and would rather do it yourselves at a later date (and it's too low a priority to review a PR anyway), that's fair enough. I'll be content just knowing it's something to be aware of under the hood.

-

RE: Detached VM Snapshots after Warm Migration

@florent Thanks, had to put the DFIR hat on.

May as well ask, as I thought about a PR for this: would it be feasible/practical/desirable to allow this to be done from XO's UI? I don't know how much of an edge case this was for me, but being able to remove "other-config" data following a migration (e.g. if you do what I did and want the VMs to start over independently on a new host) might benefit others.

Obviously, I imagine it could be quite destructive if used inappropriately. Even just reporting those ghostly associations would be nice. Again, I'm not sure of your overall design ethos, so there may be good reasons why it's not a solid idea.
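Until something like that exists in the UI, the report-only half can be approximated from the CLI. A minimal sketch, assuming that `xe vm-list ... --minimal` returns a comma-separated list of UUIDs, that `vm-param-get` prints other-config as `key: value; key: value`, and that the leftover keys all use the `xo:backup:` prefix. The function name is hypothetical, and nothing is modified.

```shell
#!/usr/bin/env bash
# Hypothetical report: list VMs whose other-config still carries xo:backup:* keys.
report_xo_backup_refs() {
  local uuids uuid keys
  # Regular VMs only: skip dom0, snapshots and templates
  uuids=$(xe vm-list is-control-domain=false is-a-snapshot=false \
            is-a-template=false --minimal)
  # --minimal separates UUIDs with commas
  for uuid in $(echo "$uuids" | tr ',' ' '); do
    # Keep only the key names, joined with commas
    keys=$(xe vm-param-get uuid="$uuid" param-name=other-config \
      | tr ';' '\n' \
      | sed -e 's/^[[:space:]]*//' -e 's/: .*$//' \
      | grep '^xo:backup:' \
      | paste -sd, -)
    # Print only VMs that still reference XO backup metadata
    [ -n "$keys" ] && echo "$uuid $keys"
  done
  return 0
}
```

Output is one line per affected VM, e.g. `{uuid} xo:backup:job,xo:backup:sr`. A VM legitimately covered by a current backup job may also carry these keys, so a hit is something to check rather than proof of a stale reference.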

-


RE: Detached VM Snapshots after Warm Migration

Looks like I unfortunately blew my chance to do this cleanly.

I decided to plug my old hosts in again, just to see whether XO felt the VM, despite being on a new host, was still associated with (or on) the old host. That turned out to be the right assumption: when I snapshotted a problem VM, it did not have the health warning. I took that to mean that if I removed the old VMs, the references would go with them, but alas, I should have snapshotted all of them before doing that.

Back to square one, though the one VM I did snapshot no longer has the health warning. I completely wiped XO and am still getting the same problem. Kind of at a loss now.

I would still really appreciate any assistance.

-

RE: Detached VM Snapshots after Warm Migration

@acebmxer My container is built from sources, fairly recently. I may try rebuilding it from the latest commit and deploying it completely fresh, if that would work. I'm just trying to avoid ending up with even more confusion in the database.

In the end, my ultimate goal is just to re-associate the VMs with XO and the wider XAPI database. Since I'm (mostly) starting from scratch anyway, any method that achieves that cleanly should work here. I'm just a bit unsure how best to do it: moving XO clearly caused me issues, and I need to understand how to rebuild the VM associations.

-

RE: Detached VM Snapshots after Warm Migration

@Pilow Yeah, I don't particularly want to, but in the absence of any alternatives! I'll give it a day in case anyone responds; otherwise I'll just wipe it, assuming that leaves the hosts untouched. I just need to do whatever is required to re-associate the VM UUIDs, and I would hope a total rebuild of XO would do that.

-

RE: Detached VM Snapshots after Warm Migration

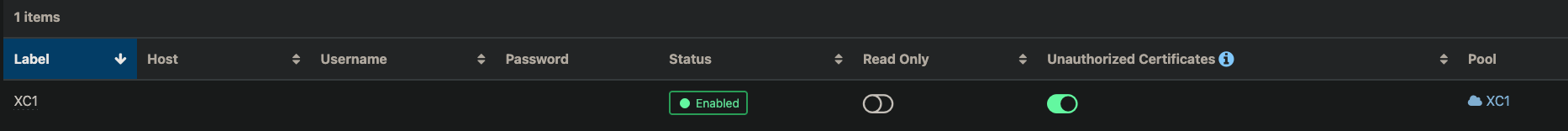

@Pilow Yes, one pool, two servers (a master and a secondary/slave).

As per my last post update, I think I've realised that when I warm migrated I didn't select "delete source VM", which has probably broken something since I retired the old hosts afterwards.

I mainly just want to know the best method to wipe XO and start over so it can rebuild the database.

-

RE: Detached VM Snapshots after Warm Migration

Still not working. I blew away my old backups and deleted the job, and I'm still getting detached snapshots. Interestingly, of my two hosts, the pool slave still isn't recording the snapshot properly. I tested "revert snapshot" from XO and it works; everything works properly except for the detached snapshot warning. Probably also worth noting that I did not select "delete source VM"; I deleted the source VMs manually later.

Snapshotting a VM on XC2, the slave in the pool. I don't know whether this is expected for a pool slave.

Pool master (displays the IP of XO):

HTTPS 123.456.789.6->|Async.VM.snapshot R:da375f8192ab|audit] ('trackid=2f566a5e238297cab4efbd4023dba8da' 'LOCAL_SUPERUSER' 'root' 'ALLOWED' 'OK' 'API' 'VM.snapshot' (('vm' 'VM_Name0' 'e0f029ec-f0b8-1c14-c654-80c6ecec582c' 'OpaqueRef:f0fae2b6-7729-b539-066f-16a795b0b22f')

Pool slave (displays the IP of the XCP host itself):

HTTPS 123.456.789.2->:::80|Async.VM.snapshot R:da375f8192ab|audit] ('trackid=f49a8b9396c939d4cd5335542cba3848' 'LOCAL_SUPERUSER' '' 'ALLOWED' 'OK' 'API' 'VM.snapshot' (('vm' '' '' 'OpaqueRef:f0fae2b6-7729-b539-066f-16a795b0b22f')

I don't know if this means anything. When I snapshot a VM on XC1 (warm migrated), it also shows in the logs of the pool master with the IP of XO, but the snapshot is still detached:

HTTPS 123.456.789.6->|Async.VM.snapshot R:cbd3f663c5d7|audit] ('trackid=2f566a5e238297cab4efbd4023dba8da' 'LOCAL_SUPERUSER' 'root' 'ALLOWED' 'OK' 'API' 'VM.snapshot' (('vm' 'VM_Name1' 'c7c63201-e25a-a7d5-7e39-394636538866' 'OpaqueRef:72f934a8-bfd6-f01c-5917-234cacdd49d5')

When I snapshot a VM I created natively (no warm migration), the log entry looks exactly the same, and the snapshot is not detached:

HTTPS 123.456.789.6->|Async.VM.snapshot R:3e4f5c3dd9f2|audit] ('trackid=2f566a5e238297cab4efbd4023dba8da' 'LOCAL_SUPERUSER' 'root' 'ALLOWED' 'OK' 'API' 'VM.snapshot' (('vm' 'VM_Name2' 'cd95df02-a907-bdaa-2c0e-ca503656460b' 'OpaqueRef:00c31a47-69d9-753d-5481-d5a10e881e13')

Looking at another thread, running xl list shows a mixture of VMs, some tagged with [XO warm migration Warm migration] and some not. My VM that wasn't migrated shows under its normal name (matching the output of xe vm-list), and this one snapshots fine. Notably, another VM that was migrated doesn't show the migration tags but still doesn't snapshot properly. I renamed the VMs after migrating; renaming again updates the output of xe vm-list but not the output of xl list.

If anyone in the know can help me understand what's going on, I'd be most appreciative; I doubt I'll be able to back up my VMs until it's resolved. Alternatively, could anyone confirm what steps I should take to completely rebuild XO so that the data is held correctly and this stops happening? To reiterate, this only seems to happen on warm migrated VMs.
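To check the xl list / xe vm-list naming mismatch across every running VM on a host at once, the Xen domain name can be compared with the XAPI name-label for the same domain ID. A minimal sketch, assuming it runs in dom0 on the host where the VMs are resident (xl only sees local domains), that `xl domname` is available, and that the local host UUID can be read from INSTALLATION_UUID in /etc/xensource-inventory when no host UUID is passed. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: compare Xen's domain name (xl) with XAPI's name-label (xe)
# for running VMs resident on this host.
compare_vm_names() {
  local host="$1" uuid domid label xlname
  if [ -z "$host" ]; then
    # Assumption: dom0 records the local host UUID in this inventory file
    host=$(. /etc/xensource-inventory && echo "$INSTALLATION_UUID")
  fi
  for uuid in $(xe vm-list resident-on="$host" power-state=running \
                  is-control-domain=false --minimal | tr ',' ' '); do
    domid=$(xe vm-param-get uuid="$uuid" param-name=dom-id)
    label=$(xe vm-param-get uuid="$uuid" param-name=name-label)
    xlname=$(xl domname "$domid")
    # Report only VMs whose two names disagree
    if [ "$label" != "$xlname" ]; then
      printf '%s dom%s: xe="%s" xl="%s"\n' "$uuid" "$domid" "$label" "$xlname"
    fi
  done
}
```

A mismatch on its own only shows that the VM was renamed (or tagged) after its current domain was created, which, per the post, also happens for VMs that snapshot fine, so this is a diagnostic aid rather than a test for the detached-snapshot problem.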