They are all connected and I see them connected to the NAS side also.

We are migrating them off the SR and are going to drop and re-add the SR to see if the issue is still there.

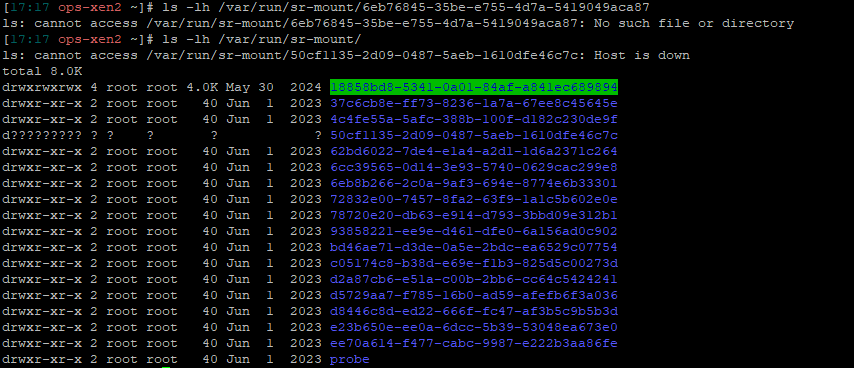

Maybe I am reading this wrong, but the SR does not show up as a mount? Yet it is viewable and working in XO, which lists its usage and everything else.

@tjkreidl said in Issue with SR and coalesce:

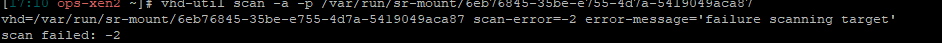

vhd-util scan -a -p /var/run/sr-mount/<UUID-of-the-SR>

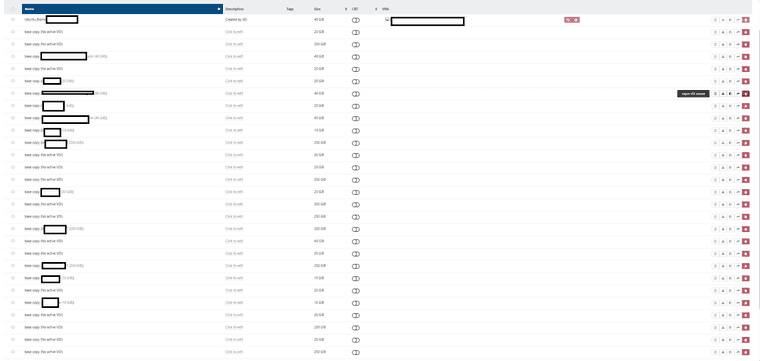

In the Orchestra interface there are 4 VDIs.

When removing all filters in the Storage view we get this, but you cannot delete them using Xen, and I am not sure base copies are supposed to be removed.

xe vdi-list sr-uuid=6eb76845-35be-e755-4d7a-5419049aca87 params=uuid,managed,sm-config

uuid ( RO) : 64ffcd3c-82a4-45c8-adbd-a38be5f1683e

managed ( RO): false

sm-config (MRO): vhd-blocks: Removed; import_task: OpaqueRef:57091129-8fb3-4746-b1a5-6d6032a6485f; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-parent: db977fb7-45f0-4f54-ba62-dcb3ef415244; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 2f77fac1-bd1d-47fb-a426-272df8fd7540

managed ( RO): false

sm-config (MRO): read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:8c981323-9b20-4678-81dc-cb7192e21686; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-blocks: eJxjYKAMxDBIkKu1hoA8BxALEDKkgCGDoQFqmAO5LsEDQGaKYZNggdlfBVYDtduAkHnMDBLsB6jhMChIYPAC0xJgNzBjyNf/P1IPtK/++IECMN3M/qH+/wH2/w0gIQb24wfAav7/Yag/wF7/v6H+D4muc2DwgTA4sEgimcWI0wT2BuziCANBxlQAXV3/v/7/n///GWqqP8DTTgKUlv8PAR8IphjqggS4CwYGVALT/0CCGgZrKAsz7dEDVDCYHkDiMu442ACieRsGwjGjYBSMglEwhAAArOQ9Bg==

uuid ( RO) : 15199577-db62-4a2a-ac5c-66ef9d3cceb9

managed ( RO): false

sm-config (MRO): read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:8c981323-9b20-4678-81dc-cb7192e21686; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-blocks: eJxjYKAMxDBIkKu1hoA8BxALEDKkgCGDoQFqmAO5LsEDQGaKYZNggdlfBVYDtduAkHnMDBLsB6jhMChIYPAC0xJgNzBjyNf/P1IPtK/++IECMN3M/qH+/wH2/w0gIQb24wfAav7/Yag/wF7/v6H+D4muc2DwgTA4sEgimcWI0wT2BuziCANBxlQAXV3/v/7/n///GWqqP8DTTgKUlv8PAR8IphjqggS4CwYGVALT/0CCGgZrKAsz7dEDVDCYHkDiMu442ACieRsGwjGjYBSMglEwhAAArOQ9Bg==; vhd-parent: f92a5dfe-e9f3-4876-89ff-80f05a8a861a

uuid ( RO) : 1bd814cf-ecff-4825-9d37-7573c61d94af

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:92db446c-4b44-425f-98cd-8fa65f2fbf1f; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: eJxjYEAB8g0MuIEACi+BgYHRAUizMSk0uDCA9DHi0QsGjA9gRnAgCW5AsMXkG2TBLpD/DwHPoNY+QKjB50IEOAA0mDiVKEB+tQEZuhiYMEQc0PhEmiohRFgh4wPizCIBsDck4FcgwGCPVdwBGuk8DAowoQa89mAVZoGHoAPQJGIAVHmFAzQlZeC3AKwWn2M4HIBcqEAFptJRMFwBAD8DHCI=; vhd-parent: 633547da-0308-422a-83ca-c60e375d3d5d; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : f6b60683-7245-4c49-b299-df796d5c64cb

managed ( RO): true

sm-config (MRO): read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:60915ab5-de0d-4768-978c-92aa8f5b4503; vdi_type: vhd; host_OpaqueRef:d1ef8654-1582-4fbf-bff9-a0c18af279a5: RW; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-parent: 3e88720e-592a-4aa1-995e-af62d0e2593f

uuid ( RO) : db13cef1-29b7-497a-811e-1fbd72a27068

managed ( RO): true

sm-config (MRO): import_task: OpaqueRef:92db446c-4b44-425f-98cd-8fa65f2fbf1f; vdi_type: vhd; host_OpaqueRef:ec4a67fd-9558-47cd-9c87-9be5aeb1601c: RW; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removed=; vhd-parent: 1bd814cf-ecff-4825-9d37-7573c61d94af; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 000e5169-0bc4-4966-96cd-8ba8c41105a6

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:261b88d6-f425-4b93-8d3f-36d42925ab31; vdi_type: vhd; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; vhd-blocks: Removed=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; vhd-parent: 1a591e58-c55a-47d2-b024-5233f9293c85

uuid ( RO) : 35567cc8-2328-4cb8-8ba6-d90264d17ea8

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:249a5d03-50ed-4a71-82a1-a5bf9d4c799d; vdi_type: vhd; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: e Removedk=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; vhd-parent: a8bd427a-1688-4d9c-abbe-0f5ad5828229; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 3e88720e-592a-4aa1-995e-af62d0e2593f

managed ( RO): false

sm-config (MRO): vhd-blocks: Removed1mAN1mANVoL/A1PY8sU=; read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:60915ab5-de0d-4768-978c-92aa8f5b4503; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-parent: 8fff0634-044f-444c-a5e2-a74a2e02fb34

uuid ( RO) : 84f62ecf-27e7-46fd-935f-6504747ab327

managed ( RO): false

sm-config (MRO): vhd-blocks: Removed03; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED

uuid ( RO) : b6af6f2f-8935-4386-a4fe-cdf2661a9c97

managed ( RO): false

sm-config (MRO): vhd-blocks: RemovedKrWA==; read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:57091129-8fb3-4746-b1a5-6d6032a6485f; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-parent: 64ffcd3c-82a4-45c8-adbd-a38be5f1683e

uuid ( RO) : a8bd427a-1688-4d9c-abbe-0f5ad5828229

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:249a5d03-50ed-4a71-82a1-a5bf9d4c799d; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: RemovedDk=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : db977fb7-45f0-4f54-ba62-dcb3ef415244

managed ( RO): false

sm-config (MRO): vhd-blocks: Removed; vhd-parent: 08b5e4b4-d428-4a8a-9884-ecd48c5ab295; import_task: OpaqueRef:57091129-8fb3-4746-b1a5-6d6032a6485f; vdi_type: vhd

uuid ( RO) : 7054e859-9f16-4b1d-afd6-a432be3df557

managed ( RO): false

sm-config (MRO): vhd-blocks: Removed8=; read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:57091129-8fb3-4746-b1a5-6d6032a6485f; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-parent: b6af6f2f-8935-4386-a4fe-cdf2661a9c97

uuid ( RO) : 8fff0634-044f-444c-a5e2-a74a2e02fb34

managed ( RO): false

sm-config (MRO): vhd-blocks: Removed/g++2jae; read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:60915ab5-de0d-4768-978c-92aa8f5b4503; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-parent: 84f62ecf-27e7-46fd-935f-6504747ab327

uuid ( RO) : 8580e5aa-8e59-4191-aaae-08b80101617b

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:6b756b4e-d573-4b69-bae3-2a5882b5660d; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removedg==; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : eee08599-f4d6-4ef3-ad3b-862c24658c2b

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:6b756b4e-d573-4b69-bae3-2a5882b5660d; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removedg==; vhd-parent: 8580e5aa-8e59-4191-aaae-08b80101617b; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : cd2634d6-219f-4925-b3ef-1c3ce8027e02

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:261b88d6-f425-4b93-8d3f-36d42925ab31; vdi_type: vhd; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removed66: false; vhd-parent: 496fdfc3-7b98-4658-8b25-25097c2e21b8; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 54ea774f-23d3-4600-9469-8ae2ccffc74a

managed ( RO): false

sm-config (MRO): vhd-blocks: Removed57; import_task: OpaqueRef:57091129-8fb3-4746-b1a5-6d6032a6485f; vdi_type: vhd

uuid ( RO) : 23407d9c-e626-4f2e-8f2d-e7fae316651c

managed ( RO): true

sm-config (MRO): read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:8c981323-9b20-4678-81dc-cb7192e21686; host_OpaqueRef:d1ef8654-1582-4fbf-bff9-a0c18af279a5: RW; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-blocks: Removedg==; vhd-parent: 15199577-db62-4a2a-ac5c-66ef9d3cceb9

uuid ( RO) : 2d7bea37-2b2c-46ab-898d-544bd7b18bfe

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:92db446c-4b44-425f-98cd-8fa65f2fbf1f; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: RemovedCI=; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 1a591e58-c55a-47d2-b024-5233f9293c85

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:261b88d6-f425-4b93-8d3f-36d42925ab31; vdi_type: vhd; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removed67Siw=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; vhd-parent: af10d4dd-b20b-4f6b-abcd-3fd2818a5a20; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : f92a5dfe-e9f3-4876-89ff-80f05a8a861a

managed ( RO): false

sm-config (MRO): read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:8c981323-9b20-4678-81dc-cb7192e21686; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-blocks: Removedg==; vhd-parent: 1c98b2f5-4147-4e41-9d41-2cf446199564

uuid ( RO) : af10d4dd-b20b-4f6b-abcd-3fd2818a5a20

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:261b88d6-f425-4b93-8d3f-36d42925ab31; vdi_type: vhd; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removedw=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; vhd-parent: cd2634d6-219f-4925-b3ef-1c3ce8027e02; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 44f96838-b2f0-4971-b1e8-610eadc94222

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:3def9239-87a3-4cdb-b99e-8e770e0454f1; vdi_type: vhd; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removedw=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; vhd-parent: a87215e0-2e39-4d57-9c58-7b36e634bcbd; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : a5c1e8d8-8eef-417f-a9ae-6a120f4b5461

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:249a5d03-50ed-4a71-82a1-a5bf9d4c799d; vdi_type: vhd; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removedk=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; vhd-parent: 35567cc8-2328-4cb8-8ba6-d90264d17ea8; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : a771d4d8-8780-4c6b-b906-77931323d2b8

managed ( RO): true

sm-config (MRO): import_task: OpaqueRef:249a5d03-50ed-4a71-82a1-a5bf9d4c799d; vdi_type: vhd; host_OpaqueRef:ec4a67fd-9558-47cd-9c87-9be5aeb1601c: RW; vhd-parent: a5c1e8d8-8eef-417f-a9ae-6a120f4b5461; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: RemovedDk=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 633547da-0308-422a-83ca-c60e375d3d5d

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:92db446c-4b44-425f-98cd-8fa65f2fbf1f; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removed ptJRMFwBAD8DHCI=; vhd-parent: 2d7bea37-2b2c-46ab-898d-544bd7b18bfe; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : a87215e0-2e39-4d57-9c58-7b36e634bcbd

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:3def9239-87a3-4cdb-b99e-8e770e0454f1; vdi_type: vhd; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removed Siw=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; vhd-parent: 8a544363-91c0-4194-8d8b-26699be2633a; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 8a544363-91c0-4194-8d8b-26699be2633a

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:3def9239-87a3-4cdb-b99e-8e770e0454f1; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: Removedw=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 08b5e4b4-d428-4a8a-9884-ecd48c5ab295

managed ( RO): false

sm-config (MRO): vhd-blocks: RemovedOd0A==; import_task: OpaqueRef:57091129-8fb3-4746-b1a5-6d6032a6485f; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED

uuid ( RO) : 1c98b2f5-4147-4e41-9d41-2cf446199564

managed ( RO): false

sm-config (MRO): read-caching-enabled-on-03b66886-2498-462b-b0bb-aa560c7f7105: false; import_task: OpaqueRef:8c981323-9b20-4678-81dc-cb7192e21686; vdi_type: vhd; read-caching-reason-03b66886-2498-462b-b0bb-aa560c7f7105: SR_NOT_SUPPORTED; vhd-blocks: Removed0CCGgZrKAsz7dEDVDCYHkDiMu442ACieRsGwjGjYBSMglEwhAAArOQ9Bg==; vhd-parent: 2f77fac1-bd1d-47fb-a426-272df8fd7540

uuid ( RO) : 496fdfc3-7b98-4658-8b25-25097c2e21b8

managed ( RO): false

sm-config (MRO): import_task: OpaqueRef:261b88d6-f425-4b93-8d3f-36d42925ab31; vdi_type: vhd; read-caching-enabled-on-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: false; vhd-blocks: RemovedSiw=; read-caching-enabled-on-1ab99770-8264-492d-91a3-b221118ac366: false; read-caching-reason-1ab99770-8264-492d-91a3-b221118ac366: SR_NOT_SUPPORTED; read-caching-reason-4b88e4da-8f32-447b-ac2d-d666ec9f34bc: SR_NOT_SUPPORTED
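
In case it helps anyone reading the list above, here is a minimal Python sketch for following the `vhd-parent` links from a managed VDI down to its base copy. It assumes the three-field output format shown above. The function names (`parse_vdi_list`, `parent_of`, `chain`) are made up for illustration, and the sample is trimmed to the `vdi_type` and `vhd-parent` keys of four VDIs from the list.

```python
import re

# Matches lines such as "uuid ( RO) : <value>" or "sm-config (MRO): <value>".
FIELD_RE = re.compile(r"^\s*([\w-]+)\s*\(\s*[A-Z]+\)\s*:\s*(.*)$")


def parse_vdi_list(text):
    """Turn `xe vdi-list params=uuid,managed,sm-config` output into dicts."""
    records, current = [], None
    for line in text.splitlines():
        match = FIELD_RE.match(line)
        if not match:
            continue  # blank lines or anything that is not a field
        key, value = match.group(1), match.group(2).strip()
        if key == "uuid":
            # Each record starts with its uuid line.
            current = {"uuid": value}
            records.append(current)
        elif current is not None:
            current[key] = value
    return records


def parent_of(record):
    """Return the vhd-parent UUID stored in sm-config, or None."""
    for item in record.get("sm-config", "").split(";"):
        item = item.strip()
        if item.startswith("vhd-parent:"):
            return item.split(":", 1)[1].strip()
    return None


def chain(records, leaf_uuid):
    """Walk vhd-parent links from leaf_uuid until no parent is listed."""
    by_uuid = {r["uuid"]: r for r in records}
    path, current = [], leaf_uuid
    while current and current not in path:  # guard against loops
        path.append(current)
        record = by_uuid.get(current)
        current = parent_of(record) if record else None
    return path


# Trimmed sample taken from the listing above (assumption: other keys omitted).
SAMPLE = """\
uuid ( RO) : f6b60683-7245-4c49-b299-df796d5c64cb
managed ( RO): true
sm-config (MRO): vdi_type: vhd; vhd-parent: 3e88720e-592a-4aa1-995e-af62d0e2593f
uuid ( RO) : 3e88720e-592a-4aa1-995e-af62d0e2593f
managed ( RO): false
sm-config (MRO): vdi_type: vhd; vhd-parent: 8fff0634-044f-444c-a5e2-a74a2e02fb34
uuid ( RO) : 8fff0634-044f-444c-a5e2-a74a2e02fb34
managed ( RO): false
sm-config (MRO): vdi_type: vhd; vhd-parent: 84f62ecf-27e7-46fd-935f-6504747ab327
uuid ( RO) : 84f62ecf-27e7-46fd-935f-6504747ab327
managed ( RO): false
sm-config (MRO): vdi_type: vhd
"""

for uuid in chain(parse_vdi_list(SAMPLE), "f6b60683-7245-4c49-b299-df796d5c64cb"):
    print(uuid)
```

Run against the full output, the same walk would show which unmanaged entries sit underneath each of the managed VDIs and which ones are not referenced by any of them.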

I feel like this may be counting local storage on the hosts? We don't use local storage on these systems.

We have several other VMs in the pool that are on a different SR. We were planning on moving them all over, but we ran into this backup issue, so we halted until we figure it out. Those VMs do have some snapshots.

I am very certain, just checked them.

These are backups that were restored to the new SR; in Orchestra they show no snapshots.

We do, however, see them when I hover over the VMs in the storage view.

I am in the Discord and can screen-share the logs if it helps at all.

I can share the log in its entirety if needed; I just need to make sure it does not contain any confidential details.

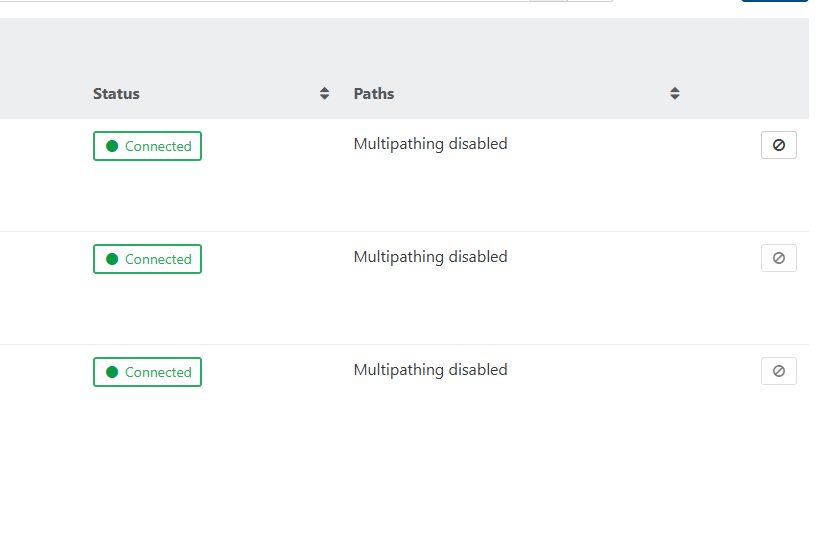

Multipathing is not enabled; the three connections are to each host in the pool.

The SR the issue seems to be on is 6eb76845-35be-e755-4d7a-5419049aca87

Mar 15 04:06:41 ops-xen2 SM: [19269] lock: tried lock /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr, acquired: True (exists: True)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] Found 23 VDIs for deletion:

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *9573449e[VHD](250.000G//8.000M|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *707d5286[VHD](20.000G//6.070G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *44f96838[VHD](20.000G//2.789G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *a87215e0[VHD](20.000G//1.258G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *8a544363[VHD](20.000G//19.680G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *b27b0d95[VHD](250.000G//66.812G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *54ea774f[VHD](250.000G//19.277G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *7054e859[VHD](250.000G//22.547G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *b6af6f2f[VHD](250.000G//51.547G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *64ffcd3c[VHD](250.000G//40.863G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *db977fb7[VHD](250.000G//11.977G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *08b5e4b4[VHD](250.000G//207.605G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *a8248b10[VHD](3.879G//3.023G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *4bbdc102[VHD](40.000G//4.586G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *eee08599[VHD](40.000G//6.500G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *8580e5aa[VHD](40.000G//20.414G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *29ad53b8[VHD](20.000G//812.000M|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *000e5169[VHD](20.000G//4.344G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *1a591e58[VHD](20.000G//6.000G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *af10d4dd[VHD](20.000G//3.355G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *cd2634d6[VHD](20.000G//4.727G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *496fdfc3[VHD](20.000G//19.672G|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] *b23fbb00[VHD](150.000G//150.297G|n)
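
To get a rough total of what the GC wants to free, the entries in the "Found 23 VDIs for deletion" list above can be summed with a small Python sketch like the one below. This is only a sketch under assumptions: I am assuming the first figure in the parentheses is the virtual size and the last one is the space allocated on the SR, and that the units are binary (K/M/G/T). The names `deletion_candidates` and `to_bytes` are made up for illustration.

```python
import re

# Matches only lines whose message is a bare VDI entry, e.g.
# "... SMGC: [19269] *9573449e[VHD](250.000G//8.000M|n)".
VDI_RE = re.compile(
    r"\]\s+\*(?P<uuid>[0-9a-f]{8})\[(?P<type>\w+)\]"
    r"\((?P<virtual>[\d.]+[KMGT]?)/(?P<middle>[^/]*)/"
    r"(?P<allocated>[\d.]+[KMGT]?)\|(?P<flag>\w)\)\s*$"
)

# Assumption: sizes are printed with binary multipliers.
UNITS = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(size):
    """Convert a string such as '8.000M' to a byte count."""
    if size[-1] in UNITS:
        number, unit = size[:-1], size[-1]
    else:
        number, unit = size, ""
    return int(float(number) * UNITS[unit])


def deletion_candidates(log_text):
    """Return (short uuid, virtual bytes, allocated bytes) per listed VDI."""
    found = []
    for line in log_text.splitlines():
        match = VDI_RE.search(line)
        if match:
            found.append((
                match.group("uuid"),
                to_bytes(match.group("virtual")),
                to_bytes(match.group("allocated")),
            ))
    return found


# Three lines copied from the log; only the two list entries should match.
SAMPLE_LOG = """\
Mar 15 04:06:41 ops-xen2 SMGC: [19269] Found 23 VDIs for deletion:
Mar 15 04:06:41 ops-xen2 SMGC: [19269] *9573449e[VHD](250.000G//8.000M|n)
Mar 15 04:06:41 ops-xen2 SMGC: [19269] *707d5286[VHD](20.000G//6.070G|n)
Mar 15 04:06:41 ops-xen2 SMGC: [19269] Deleting unlinked VDI *9573449e[VHD](250.000G//8.000M|n)
"""

candidates = deletion_candidates(SAMPLE_LOG)
total = sum(allocated for _, _, allocated in candidates)
print(len(candidates), "VDIs,", round(total / 1024**3, 3), "GiB allocated")
```

Feeding it the whole SMlog excerpt instead of the three sample lines would give the total for all 23 entries.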

Mar 15 04:06:41 ops-xen2 SMGC: [19269] Deleting unlinked VDI *9573449e[VHD](250.000G//8.000M|n)

Mar 15 04:06:41 ops-xen2 SMGC: [19269] Checking with slave OpaqueRef:0745579d-7eca-4dae-9502-68b645fd9957 (path /dev/VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87/VHD-9573449e-fed0-4d9d-ad5a-8ff3c0dd69d8)

Mar 15 04:06:42 ops-xen2 SMGC: [19269] call-plugin returned: 'True'

Mar 15 04:06:42 ops-xen2 SMGC: [19269] Checking with slave OpaqueRef:ec4a67fd-9558-47cd-9c87-9be5aeb1601c (path /dev/VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87/VHD-9573449e-fed0-4d9d-ad5a-8ff3c0dd69d8)

Mar 15 04:07:03 ops-xen2 SM: [28516] Matched SCSIid, updating 36e843b63e2d6a93dd5e7d4263d804bdf

Mar 15 04:07:03 ops-xen2 SM: [28516] Matched SCSIid, updating 36001405928bd167d3b1ed4351d8a50d8

Mar 15 04:07:03 ops-xen2 SM: [28516] Matched SCSIid, updating 36001405991b910dd5e91d4aaed9a5edf

Mar 15 04:07:03 ops-xen2 SM: [28516] MPATH: Update done

Mar 15 04:07:03 ops-xen2 SM: [28682] Matched SCSIid, updating 36e843b63e2d6a93dd5e7d4263d804bdf

Mar 15 04:07:03 ops-xen2 SM: [28682] Matched SCSIid, updating 36001405928bd167d3b1ed4351d8a50d8

Mar 15 04:07:03 ops-xen2 SM: [28682] Matched SCSIid, updating 36001405991b910dd5e91d4aaed9a5edf

Mar 15 04:07:03 ops-xen2 SM: [28682] MPATH: Update done

Mar 15 04:07:04 ops-xen2 SM: [29001] Matched SCSIid, updating 36e843b63e2d6a93dd5e7d4263d804bdf

Mar 15 04:07:04 ops-xen2 SM: [29001] Matched SCSIid, updating 36001405928bd167d3b1ed4351d8a50d8

Mar 15 04:07:04 ops-xen2 SM: [29001] Matched SCSIid, updating 36001405991b910dd5e91d4aaed9a5edf

Mar 15 04:07:04 ops-xen2 SM: [29001] MPATH: Update done

Mar 15 04:07:04 ops-xen2 SM: [29280] Matched SCSIid, updating 36e843b63e2d6a93dd5e7d4263d804bdf

Mar 15 04:07:04 ops-xen2 SM: [29280] Matched SCSIid, updating 36001405928bd167d3b1ed4351d8a50d8

Mar 15 04:07:04 ops-xen2 SM: [29280] Matched SCSIid, updating 36001405991b910dd5e91d4aaed9a5edf

Mar 15 04:07:04 ops-xen2 SM: [29280] MPATH: Update done

Mar 15 04:07:04 ops-xen2 SM: [29546] Matched SCSIid, updating 36e843b63e2d6a93dd5e7d4263d804bdf

Mar 15 04:07:04 ops-xen2 SM: [29546] Matched SCSIid, updating 36001405928bd167d3b1ed4351d8a50d8

Mar 15 04:07:04 ops-xen2 SM: [29546] Matched SCSIid, updating 36001405991b910dd5e91d4aaed9a5edf

Mar 15 04:07:04 ops-xen2 SM: [29546] MPATH: Update done

Mar 15 04:07:06 ops-xen2 SM: [29642] Setting LVM_DEVICE to /dev/disk/by-scsid/36e843b63e2d6a93dd5e7d4263d804bdf

Mar 15 04:07:06 ops-xen2 SM: [29642] Setting LVM_DEVICE to /dev/disk/by-scsid/36e843b63e2d6a93dd5e7d4263d804bdf

Mar 15 04:07:06 ops-xen2 SM: [29642] lock: opening lock file /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr

Mar 15 04:07:06 ops-xen2 SM: [29642] LVMCache created for VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87

Mar 15 04:07:06 ops-xen2 SM: [29642] ['/sbin/vgs', '--readonly', 'VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87']

Mar 15 04:07:06 ops-xen2 SM: [29642] pread SUCCESS

Mar 15 04:07:06 ops-xen2 SM: [29642] Failed to lock /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr on first attempt, blocked by PID 19269

Mar 15 04:07:06 ops-xen2 SM: [29673] sr_update {'sr_uuid': '18858bd8-5341-0a01-84af-a841ec689894', 'subtask_of': 'DummyRef:|aab5875a-ae37-4d0c-934b-bf39e06fa7de|SR.stat', 'args': [], 'host_ref': 'OpaqueRef:d1ef8654-1582-4fbf-bff9-a0c18af279a5', 'session_ref': 'OpaqueRef:c79da288-ef14-4c7c-9c64-fbc0581c2eee', 'dev$

Mar 15 04:07:31 ops-xen2 SM: [19269] lock: released /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr

Mar 15 04:07:31 ops-xen2 SM: [29642] lock: acquired /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr

Mar 15 04:07:31 ops-xen2 SM: [19269] lock: released /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/running

Mar 15 04:07:31 ops-xen2 SMGC: [19269] GC process exiting, no work left

Mar 15 04:07:31 ops-xen2 SM: [19269] lock: released /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/gc_active

Mar 15 04:07:31 ops-xen2 SM: [29642] LVMCache: will initialize now

Mar 15 04:07:31 ops-xen2 SM: [29642] LVMCache: refreshing

Mar 15 04:07:31 ops-xen2 SM: [29642] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87']

Mar 15 04:07:31 ops-xen2 SMGC: [19269] SR 6eb7 ('HyperionOps') (41 VDIs in 12 VHD trees): no changes

Mar 15 04:07:31 ops-xen2 SMGC: [19269] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*

Mar 15 04:07:31 ops-xen2 SMGC: [19269] ***********************

Mar 15 04:07:31 ops-xen2 SMGC: [19269] * E X C E P T I O N *

Mar 15 04:07:31 ops-xen2 SMGC: [19269] gc: EXCEPTION <class 'XenAPI.Failure'>, ['XENAPI_PLUGIN_FAILURE', 'multi', 'CommandException', 'Input/output error']

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/opt/xensource/sm/cleanup.py", line 2961, in gc

Mar 15 04:07:31 ops-xen2 SMGC: [19269] _gc(None, srUuid, dryRun)

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/opt/xensource/sm/cleanup.py", line 2846, in _gc

Mar 15 04:07:31 ops-xen2 SMGC: [19269] _gcLoop(sr, dryRun)

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/opt/xensource/sm/cleanup.py", line 2813, in _gcLoop

Mar 15 04:07:31 ops-xen2 SMGC: [19269] sr.garbageCollect(dryRun)

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/opt/xensource/sm/cleanup.py", line 1651, in garbageCollect

Mar 15 04:07:31 ops-xen2 SMGC: [19269] self.deleteVDIs(vdiList)

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/opt/xensource/sm/cleanup.py", line 1665, in deleteVDIs

Mar 15 04:07:31 ops-xen2 SMGC: [19269] self.deleteVDI(vdi)

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/opt/xensource/sm/cleanup.py", line 2426, in deleteVDI

Mar 15 04:07:31 ops-xen2 SMGC: [19269] self._checkSlaves(vdi)

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/opt/xensource/sm/cleanup.py", line 2650, in _checkSlaves

Mar 15 04:07:31 ops-xen2 SMGC: [19269] self.xapi.ensureInactive(hostRef, args)

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/opt/xensource/sm/cleanup.py", line 332, in ensureInactive

Mar 15 04:07:31 ops-xen2 SMGC: [19269] hostRef, self.PLUGIN_ON_SLAVE, "multi", args)

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 264, in __call__

Mar 15 04:07:31 ops-xen2 SMGC: [19269] return self.__send(self.__name, args)

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 160, in xenapi_request

Mar 15 04:07:31 ops-xen2 SMGC: [19269] result = _parse_result(getattr(self, methodname)(*full_params))

Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 238, in _parse_result

Mar 15 04:07:31 ops-xen2 SMGC: [19269] raise Failure(result['ErrorDescription'])

Mar 15 04:07:31 ops-xen2 SMGC: [19269]

Mar 15 04:07:31 ops-xen2 SMGC: [19269] *~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*~*

Mar 15 04:07:31 ops-xen2 SMGC: [19269] * * * * * SR 6eb76845-35be-e755-4d7a-5419049aca87: ERROR

Mar 15 04:07:31 ops-xen2 SMGC: [19269]

Mar 15 04:07:31 ops-xen2 SM: [29642] pread SUCCESS

Mar 15 04:07:31 ops-xen2 SM: [29642] lock: released /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr

Mar 15 04:07:31 ops-xen2 SM: [29642] Entering _checkMetadataVolume

Mar 15 04:07:31 ops-xen2 SM: [29642] lock: acquired /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr

Mar 15 04:07:31 ops-xen2 SM: [29642] sr_scan {'sr_uuid': '6eb76845-35be-e755-4d7a-5419049aca87', 'subtask_of': 'DummyRef:|5e97641e-9c29-4d5b-b42c-c9df3a794e69|SR.scan', 'args': [], 'host_ref': 'OpaqueRef:d1ef8654-1582-4fbf-bff9-a0c18af279a5', 'session_ref': 'OpaqueRef:2684e3de-0605-4442-9678-3d3c07da1b7d', 'devic$

Mar 15 04:07:31 ops-xen2 SM: [29642] LVHDSR.scan for 6eb76845-35be-e755-4d7a-5419049aca87

Mar 15 04:07:31 ops-xen2 SM: [29642] ['/sbin/vgs', '--noheadings', '--nosuffix', '--units', 'b', 'VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87']

Mar 15 04:07:31 ops-xen2 SM: [29642] pread SUCCESS

Mar 15 04:07:31 ops-xen2 SM: [29642] LVMCache: refreshing

Mar 15 04:07:31 ops-xen2 SM: [29642] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87']

Mar 15 04:07:31 ops-xen2 SM: [29642] pread SUCCESS

Mar 15 04:07:31 ops-xen2 SM: [29642] ['/usr/bin/vhd-util', 'scan', '-f', '-m', 'VHD-*', '-l', 'VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87']

Mar 15 04:07:31 ops-xen2 SM: [29642] pread SUCCESS

Mar 15 04:07:31 ops-xen2 SM: [29642] Scan found hidden leaf (4bbdc102-8a1d-4c8a-991f-8f1feee2740b), ignoring

Mar 15 04:07:31 ops-xen2 SM: [29642] Scan found hidden leaf (9573449e-fed0-4d9d-ad5a-8ff3c0dd69d8), ignoring

Mar 15 04:07:31 ops-xen2 SM: [29642] Scan found hidden leaf (b27b0d95-2911-4240-b510-f065212a6d22), ignoring

Mar 15 04:07:31 ops-xen2 SM: [29642] Scan found hidden leaf (29ad53b8-7e2d-4472-a8f1-410d362e84b8), ignoring

Mar 15 04:07:31 ops-xen2 SM: [29642] Scan found hidden leaf (a8248b10-9c14-4d88-bf63-3dcae5b81ce6), ignoring

Mar 15 04:07:31 ops-xen2 SM: [29642] Scan found hidden leaf (b23fbb00-ca47-4493-8058-50affdb2b348), ignoring

Mar 15 04:07:31 ops-xen2 SM: [29642] Scan found hidden leaf (707d5286-bc23-4e4a-8981-e6f772a193c3), ignoring

Mar 15 04:07:31 ops-xen2 SM: [29642] ['/sbin/vgs', '--noheadings', '--nosuffix', '--units', 'b', 'VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87']

Mar 15 04:07:32 ops-xen2 SM: [29642] pread SUCCESS

Mar 15 04:07:32 ops-xen2 SM: [29642] lock: opening lock file /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/running

Mar 15 04:07:32 ops-xen2 SM: [29642] lock: tried lock /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/running, acquired: True (exists: True)

Mar 15 04:07:32 ops-xen2 SM: [29642] lock: released /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/running

Mar 15 04:07:32 ops-xen2 SM: [29642] Kicking GC

Mar 15 04:07:32 ops-xen2 SMGC: [29642] === SR 6eb76845-35be-e755-4d7a-5419049aca87: gc ===

Mar 15 04:07:32 ops-xen2 SMGC: [29802] Will finish as PID [29803]

Mar 15 04:07:32 ops-xen2 SMGC: [29642] New PID [29802]

Mar 15 04:07:32 ops-xen2 SM: [29803] lock: opening lock file /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/running

Mar 15 04:07:32 ops-xen2 SM: [29803] lock: opening lock file /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/gc_active

Mar 15 04:07:32 ops-xen2 SM: [29642] lock: released /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr

Mar 15 04:07:32 ops-xen2 SM: [29803] lock: opening lock file /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr

Mar 15 04:07:32 ops-xen2 SM: [29803] LVMCache created for VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87

Mar 15 04:07:32 ops-xen2 SM: [29803] lock: tried lock /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/gc_active, acquired: True (exists: True)

Mar 15 04:07:32 ops-xen2 SM: [29803] lock: tried lock /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr, acquired: True (exists: True)

Mar 15 04:07:32 ops-xen2 SM: [29803] LVMCache: refreshing

Mar 15 04:07:32 ops-xen2 SM: [29803] ['/sbin/lvs', '--noheadings', '--units', 'b', '-o', '+lv_tags', '/dev/VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87']

Mar 15 04:07:32 ops-xen2 SM: [29803] pread SUCCESS

Mar 15 04:07:32 ops-xen2 SM: [29803] ['/usr/bin/vhd-util', 'scan', '-f', '-m', 'VHD-*', '-l', 'VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87']

Mar 15 04:07:32 ops-xen2 SM: [29820] Setting LVM_DEVICE to /dev/disk/by-scsid/36e843b63e2d6a93dd5e7d4263d804bdf

Mar 15 04:07:32 ops-xen2 SM: [29820] Setting LVM_DEVICE to /dev/disk/by-scsid/36e843b63e2d6a93dd5e7d4263d804bdf

Mar 15 04:07:32 ops-xen2 SM: [29820] lock: opening lock file /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr

Mar 15 04:07:32 ops-xen2 SM: [29820] LVMCache created for VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87

Mar 15 04:07:32 ops-xen2 SM: [29820] ['/sbin/vgs', '--readonly', 'VG_XenStorage-6eb76845-35be-e755-4d7a-5419049aca87']

Mar 15 04:07:32 ops-xen2 SM: [29820] pread SUCCESS

Mar 15 04:07:32 ops-xen2 SM: [29820] Failed to lock /var/lock/sm/6eb76845-35be-e755-4d7a-5419049aca87/sr on first attempt, blocked by PID 29803

Mar 15 04:07:32 ops-xen2 SM: [29803] pread SUCCESS

Mar 15 04:07:32 ops-xen2 SMGC: [29803] SR 6eb7 ('HyperionOps') (41 VDIs in 12 VHD trees):

Mar 15 04:07:32 ops-xen2 SMGC: [29803] *a8bd427a[VHD](10.000G//9.953G|n)

Mar 15 04:07:32 ops-xen2 SMGC: [29803] *35567cc8[VHD](10.000G//4.809G|n)

Mar 15 04:07:32 ops-xen2 SMGC: [29803] *a5c1e8d8[VHD](10.000G//3.547G|n)
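For anyone digging through a similar SMlog, the part that matters above is the `gc: EXCEPTION` line and the traceback under it: the GC fails in `_checkSlaves` / `ensureInactive`, where the `multi` plugin call to another host returns `Input/output error`. Below is a rough Python sketch for pulling those blocks out of a long log. The function name `gc_exceptions`, the regular expression, and the `SAMPLE` text are my own illustration and not part of the SM tooling. It assumes the syslog-style line layout shown in the paste.

```python
import re

# Assumed layout, taken from the paste above:
#   "Mar 15 04:07:31 ops-xen2 SMGC: [19269] <message>"
LINE_RE = re.compile(
    r"^(?P<ts>\w{3}\s+\d+\s[\d:]{8})\s(?P<host>\S+)\s"
    r"(?P<tag>SMGC?):\s\[(?P<pid>\d+)\]\s?(?P<msg>.*)$"
)
MARKER = "gc: EXCEPTION"


def gc_exceptions(log_text):
    """Return one dict per 'gc: EXCEPTION' line found in SMGC entries.

    Each dict holds the timestamp, the GC PID, the error text, and the
    'File "..."' traceback frames logged afterwards by the same PID.
    """
    found = []
    current = None
    for raw in log_text.splitlines():
        m = LINE_RE.match(raw.strip())
        if m is None or m.group("tag") != "SMGC":
            continue  # blank lines, SM lines, anything unparseable
        pid = int(m.group("pid"))
        msg = m.group("msg").strip()
        if msg.startswith(MARKER):
            current = {
                "time": m.group("ts"),
                "pid": pid,
                "error": msg[len(MARKER):].strip(),
                "frames": [],
            }
            found.append(current)
        elif current is not None and pid == current["pid"] and msg.startswith('File "'):
            current["frames"].append(msg)
    return found


# Hypothetical sample: a few lines copied from the log above.
SAMPLE = """\
Mar 15 04:07:31 ops-xen2 SMGC: [19269] gc: EXCEPTION <class 'XenAPI.Failure'>, ['XENAPI_PLUGIN_FAILURE', 'multi', 'CommandException', 'Input/output error']
Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/opt/xensource/sm/cleanup.py", line 2650, in _checkSlaves
Mar 15 04:07:31 ops-xen2 SMGC: [19269] self.xapi.ensureInactive(hostRef, args)
Mar 15 04:07:31 ops-xen2 SMGC: [19269] File "/usr/lib/python2.7/site-packages/XenAPI.py", line 238, in _parse_result
Mar 15 04:07:31 ops-xen2 SM: [29642] pread SUCCESS
"""

if __name__ == "__main__":
    for exc in gc_exceptions(SAMPLE):
        print(exc["time"], exc["pid"], exc["error"])
        for frame in exc["frames"]:
            print("   ", frame)
```

To run it against a real host you would read the log file (normally `/var/log/SMlog`) and pass its contents to `gc_exceptions`. The sketch does not detect where a traceback ends, so later `File "..."` lines from the same PID would be attached to the most recent exception.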

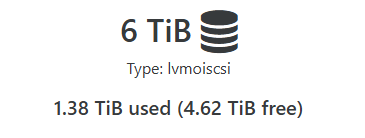

@tjkreidl I am not sure if you saw my earlier post, but I have several TB of free space.

@tjkreidl Would a disk that is not full still have this issue, or would that be a completely different issue?

Yeah, we are thinking that too. It would involve downtime on all 10 of the systems in the pool, so it would have to be a scheduled task.

@Byte_Smarter said in Issue with SR and coalesce:

@lucasljorge It's a brand new SR that we moved them to, with TBs of free space.

By "moved" I mean we used a backup restore instead of a migration.
