Any updates here? We're 8 months away and it's worrisome to install new nodes with less than a year of lifetime left.

Posts

-

RE: Centos 8 is EOL in 2021, what will xcp-ng do?

-

RE: S3 Remote with encryption: VHDFile implementation is not compatible

I also just tested this with AWS S3 instead of Wasabi and got the same error.

-

RE: S3 Remote with encryption: VHDFile implementation is not compatible

Unfortunately, I created the remote this morning after the update of XO.

Any other ideas I can try? Are these settings saved in a text file or db that I can go into and check if the UI saved the correct value?

-

RE: S3 Remote with encryption: VHDFile implementation is not compatible

@olivierlambert Apologies for the naming mishap. Thanks for clarifying.

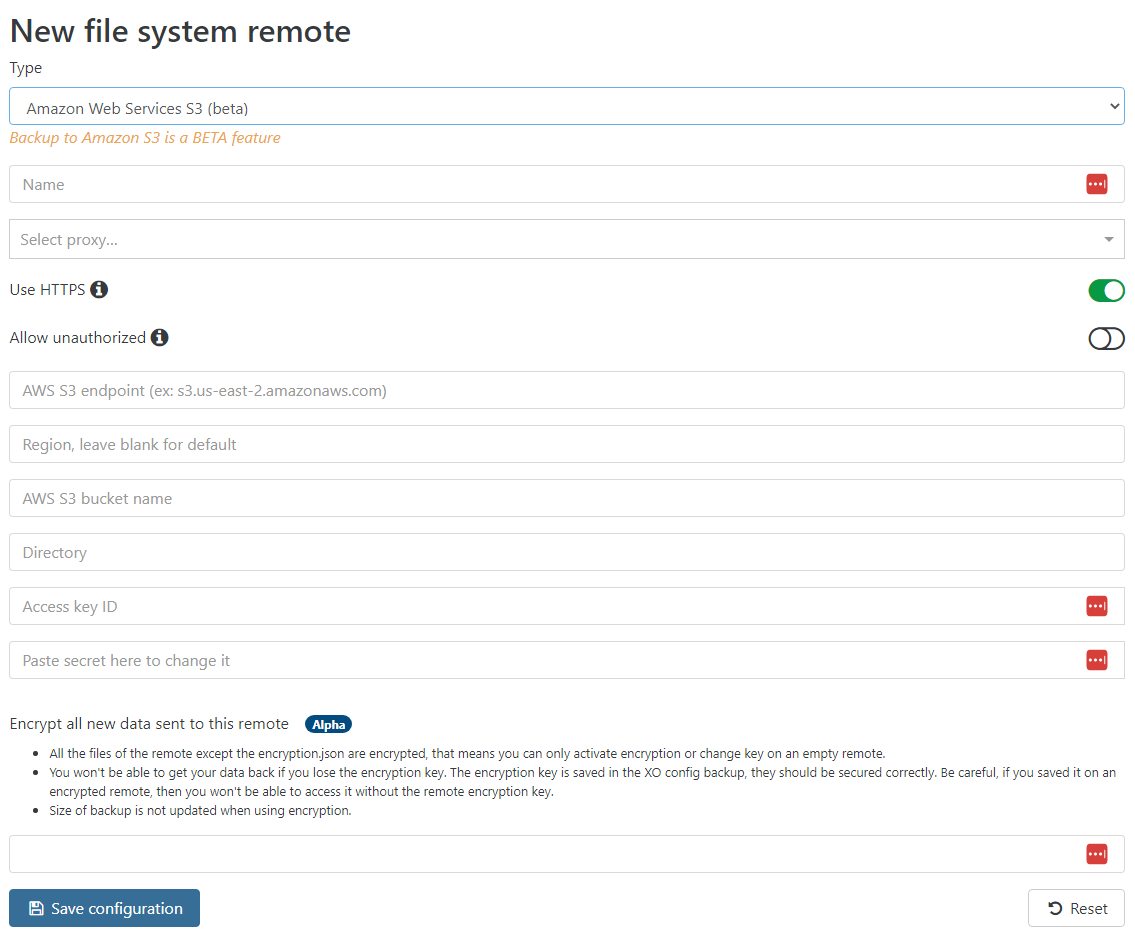

I went to create a new s3 remote and do not see any such option. Any idea what I am doing wrong?

-

S3 Remote with encryption: VHDFile implementation is not compatible

Hello,

We are trying the new S3 remote option with encryption and receive this error when we select one of our VMs (the XOA VM, actually):

"VHDFile implementation is not compatible with encrypted remote"

What does it mean? What can be changed to make it work?

We are running xoa on git commit 1702783cfb5c2701c3fa3 dated 12/21/2022

Thanks!

-

RE: S3 / Wasabi Backup

@nraynaud on my env I received the following error:

The request signature we calculated does not match the signature you provided. Check your key and signing method.

-

RE: S3 / Wasabi Backup

Hmm, failed.

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1599934093646", "jobId": "5811c71e-0332-44f1-8733-92e684850d60", "jobName": "Wasabis3", "message": "backup", "scheduleId": "74b902af-e0c1-409b-ba88-d1c874c2da5d", "start": 1599934093646, "status": "interrupted", "tasks": [
{ "data": { "type": "VM", "id": "1c6ff00c-862a-09a9-80bb-16c077982853" }, "id": "1599934093652", "message": "Starting backup of CDDev. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093652, "status": "interrupted", "tasks": [ { "id": "1599934093655", "message": "snapshot", "start": 1599934093655, "status": "success", "end": 1599934095529, "result": "695149ec-94c3-a8b6-4670-bcb8b1865487" }, { "id": "1599934095532", "message": "add metadata to snapshot", "start": 1599934095532, "status": "success", "end": 1599934095547 }, { "id": "1599934095728", "message": "waiting for uptodate snapshot record", "start": 1599934095728, "status": "success", "end": 1599934095935 }, { "id": "1599934096010", "message": "start snapshot export", "start": 1599934096010, "status": "success", "end": 1599934096010 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934096011", "message": "export", "start": 1599934096011, "status": "interrupted", "tasks": [ { "id": "1599934096104", "message": "transfer", "start": 1599934096104, "status": "interrupted" } ] } ] },
{ "data": { "type": "VM", "id": "413a67e3-b505-29d1-e1b7-a848df184431" }, "id": "1599934093655:0", "message": "Starting backup of APC Powerchute. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093655, "status": "interrupted", "tasks": [ { "id": "1599934093656", "message": "snapshot", "start": 1599934093656, "status": "success", "end": 1599934110591, "result": "ee4b2001-1324-fb02-07b4-bded6e5e19cb" }, { "id": "1599934110594", "message": "add metadata to snapshot", "start": 1599934110594, "status": "success", "end": 1599934111133 }, { "id": "1599934111997", "message": "waiting for uptodate snapshot record", "start": 1599934111997, "status": "success", "end": 1599934112783 }, { "id": "1599934118449", "message": "start snapshot export", "start": 1599934118449, "status": "success", "end": 1599934118449 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": false, "type": "remote" }, "id": "1599934118450", "message": "export", "start": 1599934118450, "status": "interrupted", "tasks": [ { "id": "1599934120299", "message": "transfer", "start": 1599934120299, "status": "interrupted" } ] } ] },
{ "data": { "type": "VM", "id": "e18cbbbb-9419-880c-8ac7-81c5449cf9bd" }, "id": "1599934093656:0", "message": "Starting backup of Brian-CDQB. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093656, "status": "interrupted", "tasks": [ { "id": "1599934093657", "message": "snapshot", "start": 1599934093657, "status": "success", "end": 1599934095234, "result": "98cdf926-55cc-7c2f-e728-96622bee7956" }, { "id": "1599934095237", "message": "add metadata to snapshot", "start": 1599934095237, "status": "success", "end": 1599934095255 }, { "id": "1599934095431", "message": "waiting for uptodate snapshot record", "start": 1599934095431, "status": "success", "end": 1599934095635 }, { "id": "1599934095762", "message": "start snapshot export", "start": 1599934095762, "status": "success", "end": 1599934095763 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934095763:0", "message": "export", "start": 1599934095763, "status": "interrupted", "tasks": [ { "id": "1599934095825", "message": "transfer", "start": 1599934095825, "status": "interrupted" } ] } ] },
{ "data": { "type": "VM", "id": "0c426b2b-c24c-9532-32f0-0ce81caa6aed" }, "id": "1599934093657:0", "message": "Starting backup of testspeed2. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093657, "status": "interrupted", "tasks": [ { "id": "1599934093658", "message": "snapshot", "start": 1599934093658, "status": "success", "end": 1599934096298, "result": "0ec43ca8-52b2-fdd3-5f1c-7182abac7cbe" }, { "id": "1599934096302", "message": "add metadata to snapshot", "start": 1599934096302, "status": "success", "end": 1599934096316 }, { "id": "1599934096528", "message": "waiting for uptodate snapshot record", "start": 1599934096528, "status": "success", "end": 1599934096737 }, { "id": "1599934096952", "message": "start snapshot export", "start": 1599934096952, "status": "success", "end": 1599934096952 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934096952:1", "message": "export", "start": 1599934096952, "status": "interrupted", "tasks": [ { "id": "1599934097196", "message": "transfer", "start": 1599934097196, "status": "interrupted" } ] } ] },
{ "data": { "type": "VM", "id": "dfb231b8-4c45-528a-0e1b-2e16e2624a40" }, "id": "1599934093658:0", "message": "Starting backup of AFGUpdateTest. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093658, "status": "interrupted", "tasks": [ { "id": "1599934093658:1", "message": "snapshot", "start": 1599934093658, "status": "success", "end": 1599934099064, "result": "1468a52f-a5a2-64d3-072e-5c35e9c70ec5" }, { "id": "1599934099068", "message": "add metadata to snapshot", "start": 1599934099068, "status": "success", "end": 1599934099453 }, { "id": "1599934100070", "message": "waiting for uptodate snapshot record", "start": 1599934100070, "status": "success", "end": 1599934100528 }, { "id": "1599934101178", "message": "start snapshot export", "start": 1599934101178, "status": "success", "end": 1599934101179 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934101188", "message": "export", "start": 1599934101188, "status": "interrupted", "tasks": [ { "id": "1599934101562", "message": "transfer", "start": 1599934101562, "status": "interrupted" } ] } ] },
{ "data": { "type": "VM", "id": "34cd5a50-61ab-c232-1254-93b52666a52c" }, "id": "1599934093658:2", "message": "Starting backup of CentOS8-Base. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093658, "status": "interrupted", "tasks": [ { "id": "1599934093659", "message": "snapshot", "start": 1599934093659, "status": "success", "end": 1599934101677, "result": "0a1287c8-a700-35e9-47dd-9a337d18719a" }, { "id": "1599934101681", "message": "add metadata to snapshot", "start": 1599934101681, "status": "success", "end": 1599934102030 }, { "id": "1599934102730", "message": "waiting for uptodate snapshot record", "start": 1599934102730, "status": "success", "end": 1599934103247 }, { "id": "1599934103759", "message": "start snapshot export", "start": 1599934103759, "status": "success", "end": 1599934103760 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934103760:0", "message": "export", "start": 1599934103760, "status": "interrupted", "tasks": [ { "id": "1599934104329", "message": "transfer", "start": 1599934104329, "status": "interrupted" } ] } ] },
{ "data": { "type": "VM", "id": "1c157c0c-e62c-d38a-e925-30b3cf914548" }, "id": "1599934093659:0", "message": "Starting backup of Oro. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093659, "status": "interrupted", "tasks": [ { "id": "1599934093659:1", "message": "snapshot", "start": 1599934093659, "status": "success", "end": 1599934106102, "result": "5b9054b8-24db-5dd8-378a-a667089e84d4" }, { "id": "1599934106109", "message": "add metadata to snapshot", "start": 1599934106109, "status": "success", "end": 1599934106638 }, { "id": "1599934107407", "message": "waiting for uptodate snapshot record", "start": 1599934107407, "status": "success", "end": 1599934108110 }, { "id": "1599934109020", "message": "start snapshot export", "start": 1599934109020, "status": "success", "end": 1599934109021 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934109022", "message": "export", "start": 1599934109022, "status": "interrupted", "tasks": [ { "id": "1599934109778", "message": "transfer", "start": 1599934109778, "status": "interrupted" } ] } ] },
{ "data": { "type": "VM", "id": "3719b724-306f-d1ee-36d1-ff7ce584e145" }, "id": "1599934093659:2", "message": "Starting backup of AFGDevQB (20191210T050003Z). (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093659, "status": "interrupted", "tasks": [ { "id": "1599934093659:3", "message": "snapshot", "start": 1599934093659, "status": "success", "end": 1599934112949, "result": "848a2fef-fc0c-8d3e-242a-7640b14d20e7" }, { "id": "1599934112953", "message": "add metadata to snapshot", "start": 1599934112953, "status": "success", "end": 1599934113706 }, { "id": "1599934114436", "message": "waiting for uptodate snapshot record", "start": 1599934114436, "status": "success", "end": 1599934115269 }, { "id": "1599934116362", "message": "start snapshot export", "start": 1599934116362, "status": "success", "end": 1599934116362 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934116362:1", "message": "export", "start": 1599934116362, "status": "interrupted", "tasks": [ { "id": "1599934117230", "message": "transfer", "start": 1599934117230, "status": "interrupted" } ] } ] },
{ "data": { "type": "VM", "id": "d2475530-a43d-8e70-2eff-fedfa96541e1" }, "id": "1599934093659:4", "message": "Starting backup of Wickermssql. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093659, "status": "interrupted", "tasks": [ { "id": "1599934093660", "message": "snapshot", "start": 1599934093660, "status": "success", "end": 1599934114621, "result": "e90b1a2d-8c22-2159-ec5e-2a4f39040562" }, { "id": "1599934114627", "message": "add metadata to snapshot", "start": 1599934114627, "status": "success", "end": 1599934115347 }, { "id": "1599934116269", "message": "waiting for uptodate snapshot record", "start": 1599934116269, "status": "success", "end": 1599934116954 }, { "id": "1599934118049", "message": "start snapshot export", "start": 1599934118049, "status": "success", "end": 1599934118049 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934118050", "message": "export", "start": 1599934118050, "status": "interrupted", "tasks": [ { "id": "1599934118988", "message": "transfer", "start": 1599934118988, "status": "interrupted" } ] } ] },
{ "data": { "type": "VM", "id": "e63d7aba-9c82-7278-c46f-a4863fd2914d" }, "id": "1599934093660:0", "message": "Starting backup of XOA-FULL. (5811c71e-0332-44f1-8733-92e684850d60)", "start": 1599934093660, "status": "interrupted", "tasks": [ { "id": "1599934093660:1", "message": "snapshot", "start": 1599934093660, "status": "success", "end": 1599934118205, "result": "1c542cd1-8a84-7aec-d0c3-3c7e25daf216" }, { "id": "1599934118210", "message": "add metadata to snapshot", "start": 1599934118210, "status": "success", "end": 1599934118914 }, { "id": "1599934119851", "message": "waiting for uptodate snapshot record", "start": 1599934119851, "status": "success", "end": 1599934120624 }, { "id": "1599934121964", "message": "start snapshot export", "start": 1599934121964, "status": "success", "end": 1599934121965 }, { "data": { "id": "9c6a4527-c2d6-4346-a504-4b0baaa18972", "isFull": true, "type": "remote" }, "id": "1599934121966", "message": "export", "start": 1599934121966, "status": "interrupted", "tasks": [ { "id": "1599934124045", "message": "transfer", "start": 1599934124045, "status": "interrupted" } ] } ] }
] }

-

RE: S3 / Wasabi Backup

@nraynaud First test of it with a small vm.....only issue I had was the folder section of the remote.

I tried to leave blank but it did not let me. So I put a value in, but did not create the folder inside the bucket. I assumed XOA would create it if it did not exist.

The backup failed because of this with a pretty lame (non descriptive) transfer interrupted message.

I created the folder and was then able to successfully back up a test VM.

I am currently trying a variety of VMs ranging from 20 GB to 1 TB.
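Since the "transfer interrupted" message says so little, it can help to walk the job log and list exactly which nested task did not finish. The following is only a rough sketch: it assumes the log uses the `message`, `status` and `tasks` fields seen in the job log posted elsewhere in this thread, and the sample data (including the VM name `test-vm`) is made up for illustration.

```python
import json


def find_unfinished(task, path=()):
    """Yield (path, status) for every leaf task whose status is not 'success'."""
    here = path + (task.get("message", "?"),)
    children = task.get("tasks", [])
    if not children and task.get("status") != "success":
        yield " > ".join(here), task.get("status")
    for child in children:
        # Recurse so the full chain (job > VM > export > transfer) is reported.
        yield from find_unfinished(child, here)


# Trimmed, hypothetical log with the same shape as a real XO job log.
sample = json.loads("""
{ "message": "backup", "status": "interrupted", "tasks": [
  { "message": "Starting backup of test-vm", "status": "interrupted", "tasks": [
    { "message": "snapshot", "status": "success" },
    { "message": "export", "status": "interrupted", "tasks": [
      { "message": "transfer", "status": "interrupted" } ] } ] } ] }
""")

for where, status in find_unfinished(sample):
    print(f"{status}: {where}")
```

This does not explain why a transfer stopped, it only narrows down which step to look at.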

-

RE: XO(A) + rclone

Did you get this working? I am interested in your setup!

-

RE: S3 / Wasabi Backup

Also, do you know of any other cloud service that does work? s3/backblaze, etc?

We were having luck with a fuse-mounted ssh folder on a machine offsite in another physical location, but for other reasons we can no longer use that particular server with xcp-ng (the disk IO was already too high, and adding this job was maxing it out).

So we'd like to use a cloud provider instead of having to invest in hardware and maintenance, but worst case, I guess we might have to build a storage server offsite for it.

-

RE: S3 / Wasabi Backup

Looking at the below I assume so. Would you concur or do you think differently?

| Filesystem | Size | Used | Avail | Use% | Mounted on |
|---|---|---|---|---|---|
| devtmpfs | 3.8G | 0 | 3.8G | 0% | /dev |
| tmpfs | 3.9G | 0 | 3.9G | 0% | /dev/shm |
| tmpfs | 3.9G | 18M | 3.8G | 1% | /run |
| tmpfs | 3.9G | 0 | 3.9G | 0% | /sys/fs/cgroup |
| /dev/xvda1 | 20G | 9.6G | 11G | 48% | / |
| s3fs | 256T | 0 | 256T | 0% | /mnt/backupwasabi |
| tmpfs | 780M | 0 | 780M | 0% | /run/user/0 |

-

RE: S3 / Wasabi Backup

Sure...

So on the xoa server instance, when I have wasabi mounted in fstab as such:

s3fs#xoa.mybucket.com /mnt/backupwasabi fuse _netdev,allow_other,use_path_request_style,url=https://s3.wasabisys.com 0 0

I often get

operation timed out

For example,

APC Powerchute (vault-1)
- Snapshot
  - Start: Apr 29, 2020, 01:07:35 PM
  - End: Apr 29, 2020, 01:07:48 PM
- Wasabi
  - transfer
    - Start: Apr 29, 2020, 01:07:49 PM
    - End: Apr 29, 2020, 02:22:40 PM
    - Duration: an hour
    - Error: operation timed out
  - Start: Apr 29, 2020, 01:07:49 PM
  - End: Apr 29, 2020, 02:22:40 PM
  - Duration: an hour
  - Error: operation timed out
- Start: Apr 29, 2020, 01:07:35 PM
- End: Apr 29, 2020, 02:22:40 PM
- Duration: an hour

Sometimes I get

ENOSPC: no space left on device, write

Like this:

APC Powerchute (vault-1)
- Snapshot
  - Start: Apr 29, 2020, 12:14:32 PM
  - End: Apr 29, 2020, 12:14:36 PM
- Wasabi
  - transfer
    - Start: Apr 29, 2020, 12:14:50 PM
    - End: Apr 29, 2020, 12:22:11 PM
    - Duration: 7 minutes
    - Error: ENOSPC: no space left on device, write
  - Start: Apr 29, 2020, 12:14:50 PM
  - End: Apr 29, 2020, 12:22:11 PM
  - Duration: 7 minutes
  - Error: ENOSPC: no space left on device, write
- Start: Apr 29, 2020, 12:14:32 PM
- End: Apr 29, 2020, 12:22:12 PM
- Duration: 8 minutes
- Type: full

Those are the two common errors I get when wasabi is mounted directly as a local mount point on ssh on the xoa server, and then in the remotes section I have it setup as a "local".

I have also tried a whole other Pandora's box approach: a VM called "WasabiProxy" has the bucket mounted locally via /etc/fstab as described above, and I then expose that as an NFS share and mount it into XOA as an NFS remote. I found a forum post where someone used this approach, so I gave it a try (https://mangolassi.it/topic/19264/how-to-use-wasabi-with-xen-orchestra).

However when I do that I get two different kinds of errors on different runs:

APC Powerchute (vault-1)
- Snapshot
  - Start: Apr 28, 2020, 05:56:41 PM
  - End: Apr 28, 2020, 05:56:58 PM
- WasabiNFS
  - transfer
    - Start: Apr 28, 2020, 05:56:58 PM
    - End: Apr 28, 2020, 05:58:23 PM
    - Duration: a minute
    - Error: ENOSPC: no space left on device, write
  - Start: Apr 28, 2020, 05:56:58 PM
  - End: Apr 28, 2020, 05:58:23 PM
  - Duration: a minute
  - Error: ENOSPC: no space left on device, write
- Start: Apr 28, 2020, 05:56:41 PM
- End: Apr 28, 2020, 05:58:23 PM
- Duration: 2 minutes
- Type: full

AND

APC Powerchute (vault-1)
- Snapshot
  - Start: Apr 28, 2020, 05:59:26 PM
  - End: Apr 28, 2020, 05:59:44 PM
- WasabiNFS
  - transfer
    - Start: Apr 28, 2020, 05:59:44 PM
    - End: Apr 28, 2020, 05:59:44 PM
    - Duration: a few seconds
    - Error: Unknown system error -116: Unknown system error -116, scandir '/run/xo-server/mounts/a0fbb865-b55d-466c-8933-b3c091e302ff/'
  - Start: Apr 28, 2020, 05:59:44 PM
  - End: Apr 28, 2020, 05:59:44 PM
  - Duration: a few seconds
  - Error: Unknown system error -116: Unknown system error -116, scandir '/run/xo-server/mounts/a0fbb865-b55d-466c-8933-b3c091e302ff/'
- Start: Apr 28, 2020, 05:59:26 PM
- End: Apr 28, 2020, 05:59:45 PM
- Duration: a few seconds
- Type: full

The proxy to me seems like a bit of a wonky workaround...I think it would be better to be mounted as local storage in the long run, but I don't understand what is causing it to timeout or provide the out of space error.

When the out of space error occurs, I check to ensure fuse still has the volume mounted and that something silly like an unmount and the local drive filling up didn't occur. That doesn't seem to be the case.
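The manual check described above could be scripted roughly like this. It is only a sketch: `/mnt/backupwasabi` is the mount point from the fstab entry, while looking at `/tmp` and `/` as well is an assumption on my part (a FUSE client may stage data on local disk before uploading, which has not been confirmed as the cause here).

```python
import os
import shutil


def check_path(path):
    """Return mount status and free space for path.

    free_gib stays None if the path is missing or cannot be queried.
    """
    info = {"path": path, "is_mount": os.path.ismount(path), "free_gib": None}
    if os.path.exists(path):
        try:
            info["free_gib"] = round(shutil.disk_usage(path).free / 2**30, 1)
        except OSError:
            # A broken FUSE mount can raise here; leave free_gib as None.
            pass
    return info


# The local paths besides the mount point are assumptions, adjust as needed.
for p in ("/mnt/backupwasabi", "/tmp", "/"):
    print(check_path(p))
```

Running it right after a failed job shows whether the mount point was still a mount and how much room the local filesystems had at that moment.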

-

S3 / Wasabi Backup

Has anyone successfully got s3/wasabi storage to work?

I have tried through s3fs as a local mount point on the xoa server and also tried faking it through a nfs mount as well.

I always get odd behavior and failures. Before I spin wheels too much, has anyone got this to work?

-

Backup Interrupted

Hey everyone,

I am running XO from source. (Sha: 2726045). I have a SMB remote and a backup process keeps getting interrupted.

I posted on github and since this might be a specific machine/vm issue and not an issue with the software someone suggested I move to the community forums for some help identifying the issue.

My XO vm has 2 cpu cores, 8GB ram and 20gb of disk space.

I am trying to back up 5 or so VMs. The largest is 150 GB.

I believe my xo-server is restarting through the process which is causing the "interrupted" state on the backup.

Does anyone have ideas on where to look, or things to consider?

Mar 11 09:40:38 xoa.garfield.securedatatransit.com systemd[1]: Started XO Server.
Mar 11 09:40:42 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:42.608Z xo:main INFO Configuration loaded.
Mar 11 09:40:42 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:42.619Z xo:main INFO Web server listening on http://[::]:80
Mar 11 09:40:43 xoa.garfield.securedatatransit.com xo-server[1145]: Warning: connect.session() MemoryStore is not
Mar 11 09:40:43 xoa.garfield.securedatatransit.com xo-server[1145]: designed for a production environment, as it will leak
Mar 11 09:40:43 xoa.garfield.securedatatransit.com xo-server[1145]: memory, and will not scale past a single process.
Mar 11 09:40:43 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:43.766Z xo:main INFO Setting up / → /etc/xo/xo-web/dist/
Mar 11 09:40:43 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:43.773Z xo:plugin INFO register audit
Mar 11 09:40:43 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:43.820Z xo:plugin INFO register auth-github
Mar 11 09:40:43 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:43.838Z xo:plugin INFO register auth-google
Mar 11 09:40:43 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:43.845Z xo:plugin INFO register auth-ldap
Mar 11 09:40:43 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:43.934Z xo:plugin INFO register auth-saml
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.062Z xo:plugin INFO register backup-reports
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.068Z xo:plugin INFO register load-balancer
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.080Z xo:plugin INFO register perf-alert
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.085Z xo:plugin INFO register sdn-controller
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.101Z xo:plugin INFO register test
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.103Z xo:plugin INFO register test-plugin
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.105Z xo:plugin INFO register transport-email
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.160Z xo:plugin INFO register transport-icinga2
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.162Z xo:plugin INFO register transport-nagios
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.164Z xo:plugin INFO register transport-slack
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.327Z xo:plugin INFO register transport-xmpp
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.407Z xo:plugin INFO register usage-report
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.566Z xo:plugin INFO register web-hooks
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.569Z xo:plugin INFO failed register test
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.569Z xo:plugin INFO Cannot find module '/etc/xo/xo-builds/xen-orchestra-202011030757/
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at Function.Module._resolveFilename (module.js:548:15)
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at Function.Module._load (module.js:475:25)
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at Module.require (module.js:597:17)
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at require (internal/module.js:11:18)
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at Xo.call (/etc/xo/xo-builds/xen-orchestra-202011030757/packages/xo-server/src/index.js:268:18)
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at Xo.call (/etc/xo/xo-builds/xen-orchestra-202011030757/packages/xo-server/src/index.js:324:25)
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at map (/etc/xo/xo-builds/xen-orchestra-202011030757/packages/xo-server/src/index.js:349:38)
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at Array.map (<anonymous>)
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at Xo.registerPluginsInPath (/etc/xo/xo-builds/xen-orchestra-202011030757/packages/xo-server/src/index.js
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at <anonymous>
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: at process._tickCallback (internal/process/next_tick.js:189:7) code: 'MODULE_NOT_FOUND' } }
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.774Z xo:plugin INFO successfully register auth-github
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.774Z xo:plugin INFO successfully register auth-google
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register auth-ldap
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register auth-saml
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register test-plugin
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register transport-icinga2
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register transport-nagios
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register transport-slack
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register transport-xmpp
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register web-hooks
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register backup-reports
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.775Z xo:plugin INFO successfully register transport-email
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.776Z xo:plugin INFO successfully register load-balancer
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.776Z xo:plugin INFO successfully register perf-alert
Mar 11 09:40:44 xoa.garfield.securedatatransit.com xo-server[1145]: 2020-03-11T13:40:44.838Z xo:plugin INFO successfully register usage-report
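
One way to test the suspicion that xo-server restarts in the middle of a backup is to scan the journal for systemd's "Started XO Server." entries and compare their timestamps with the backup window. A minimal sketch follows, assuming the syslog-style prefix seen in the log above; the host name `xoa.example.com` and the second start time in the sample are invented for illustration.

```python
import re

# Matches entries such as: "Mar 11 09:40:38 host systemd[1]: Started XO Server."
START_RE = re.compile(
    r"(\w{3} +\d{1,2} \d{2}:\d{2}:\d{2}) \S+ systemd\[\d+\]: Started XO Server\."
)


def server_starts(log_text):
    """Return the syslog timestamps of every 'Started XO Server.' entry."""
    return START_RE.findall(log_text)


# Hypothetical excerpt; the 10:15:02 restart is made up for the example.
sample = (
    "Mar 11 09:40:38 xoa.example.com systemd[1]: Started XO Server.\n"
    "Mar 11 09:40:42 xoa.example.com xo-server[1145]: xo:main INFO Configuration loaded.\n"
    "Mar 11 10:15:02 xoa.example.com systemd[1]: Started XO Server.\n"
)

starts = server_starts(sample)
print(len(starts), "start(s):", starts)
```

More than one start inside the time range of a single backup job would support the restart theory; a single start would point elsewhere.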