Posts

-

RE: [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached

Hi everyone,

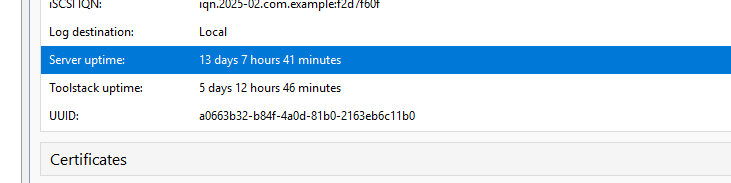

Just wanted to share a follow-up on the situation discussed earlier. The issues related to the Realtek NIC and stability seem to be resolved for now.

Changes Made:

- Replaced onboard Realtek NIC with Intel X520 dual-port SFP+ card

- Installed and verified Ubiquiti UACC-DAC-SFP10-3M cable (working fine)

- Updated BIOS to the latest version: 1820 (May 2025)

The system seems stable now, but if you'd like to review the full dmesg output, I've uploaded it here: https://pastebin.com/6Mgt7Nir

Now that we have a working Intel NIC, what do you recommend regarding the onboard Realtek NIC?

Would it be best to:

- Disable it in BIOS?

- Leave it enabled but unused (no cable)?

- Leave it connected to a disabled port on the managed switch?

We're currently leaning toward just removing it from all bridges and leaving it connected to a disabled port on the managed switch, but we'd appreciate your thoughts on the cleanest/safest long-term solution.

Thanks again to @olivier and @Andrew for all the help so far.

If you have any other suggestions for additional tuning or validation now that we're stable, please let us know.

-

RE: [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached

@Andrew said in [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached:

@dnikola Please make sure your motherboard firmware is up to date (BIOS F30e). There are a LOT of stability issues with Intel CPUs for that board and old BIOS.

If you still have r8125 crashes, then try a newer r8125 alt version (9.016.00) from my download page and see if it works better. I gave it a quick test and it installs and works, but YMMV... You can always uninstall it.

OK, that will be done and I will report back!

Is it possible to get a quick user guide on what has to be done, and in which order, for a correct install/uninstall process?

@Andrew said in [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached:

@dnikola As for the other card you listed, no, it's still a 8125 card. The single port 10G card (from the same site) is a AQC113 chipset, you'll need to install the atlantic-module-alt to support it. If you must have 2.5G then the Intel i225/i226 card is the other choice (not from that site).

I appreciate all your help so far, thank you. The only 2.5G NIC currently available locally is the one with the 8125 chipset, so it was meant as "first aid", but it didn't work. Since I'll likely need to order a replacement online (it can't be found locally in our country), could you kindly recommend a reliable source or a specific NIC model that you'd personally suggest for this purpose (eBay or wherever)? Our ISP link is 2.5 Gbps, so I'd prefer a 2.5G or faster card.

Of course, this would be just an informal recommendation — I fully respect your experience and advice, and I completely understand it wouldn’t imply any obligation or responsibility on your part for any potential purchase issues or problems later.

My second option is replacing the motherboard with one that has an Intel NIC (a local wholesaler has a few models in stock), which may be the fastest option:

- ASUS PRIME Z790-A WIFI

- MSI PRO Z790-P WIFI

- GIGABYTE Z790 AERO G rev. 1.x

- MSI Z790 GAMING PLUS WIFI

Thanks again in advance — any tip would be much appreciated.

-

RE: [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached

Hi, thanks for your kind reply.

Let me share what I have noticed.

Three servers have the same hardware, the same latest XCP-ng version, and the same motherboard BIOS.

One server has different hardware but the same latest XCP-ng version.

Server C

5 VMs: Server 2022 x3, Server 2019, Win 10 Pro

Crashes are not so frequent, but they do happen from time to time, roughly every 10 days, and the toolstack restarts a few times within those 10 days

Delta backup disabled, but connected to XO

Server D

2 VMs: Server 2019, Server 2022

Delta backup enabled; everything has been running fine from the first day, without a single problem, restart, or toolstack crash

Server K

5 VMs: Server 2019, Server 2022, Win 10 Pro, Win 7, Linux

Causes the most problems: it works for 10 days, then restarts 10 times in 2 days. The restarts were triggered after delta backups, so I have disabled delta backups and disabled sending metrics from the server

Server P

5 VMs: Server 2019, Server 2022 x2, Win 10 Pro, Win 7

Crashes are not so frequent, but they do happen from time to time, roughly every 10 days, and the toolstack restarts a few times within those 10 days

Delta backup enabled and works fine, but a few restarts occurred during that time

From reviewing dom0.log on server K, the most affected one, we have noticed:

Multiple segfaults in xcp-rrdd throughout runtime:

INFO: xcp-rrdd[xxx]: segfault at ...

The RRD polling is active and seems unstable on this host.

Frequent link down/up events from the r8125 driver:

INFO: r8125: eth0: link down

INFO: r8125: eth0: link up

(known issue on Xen hypervisors with Realtek drivers)

And eventually, identical kernel panics as before:

CRIT: kernel BUG at drivers/xen/events/events_base.c:1601!

Kernel panic - not syncing: Fatal exception in interrupt

Always the same stack trace, the same event channel handling failure.
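To compare hosts, the three log signatures above can be tallied per log file. This is only a rough sketch: the regular expressions are derived from the excerpts quoted in this post, the category names are made up for illustration, and real dom0.log lines may carry prefixes or variations that need adjusted patterns.

```python
import re
from collections import Counter

# Patterns derived from the log excerpts quoted in this post.
# The category names on the left are hypothetical labels, not official terms.
PATTERNS = {
    "rrdd_segfault": re.compile(r"xcp-rrdd\[[^\]]*\]: segfault"),
    "nic_link_down": re.compile(r"r8125: \S+: link down"),
    "nic_link_up": re.compile(r"r8125: \S+: link up"),
    "kernel_bug": re.compile(r"kernel BUG at \S+"),
    "kernel_panic": re.compile(r"Kernel panic - not syncing"),
}


def count_events(lines):
    """Count how many lines match each known signature."""
    counts = Counter()
    for line in lines:
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                counts[name] += 1
    return counts


def summarize(path):
    """Print a per-signature tally for one log file (the path is supplied by the caller)."""
    with open(path, errors="replace") as fh:
        for name, n in count_events(fh).most_common():
            print(f"{n:6d}  {name}")
```

Calling `summarize()` on the dom0.log copy from each server would give a quick side-by-side view of how often the r8125 link flaps and the xcp-rrdd segfaults occur relative to the panics.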

Actions planned:

- BIOS update on-site (currently on v1663 / Aug 2024; latest is 1854)

- Evaluate replacing the Realtek NIC with an Intel one

The problem is that the server is at a remote location, and we're organizing an on-site intervention ASAP.

In the meantime:

Can I safely disable the xcp-rrdd service to reduce polling activity?

I know it powers the RRD stats in XO and XenCenter, but we can live without the graphs for now.

Is there anything else advisable to disable or adjust until we get on-site?

(delta backups are already paused on this host)

The VM involved during the latest crash was a FreePBX virtual machine running management agent version 8.4.

Is there a newer agent package available for CentOS/AlmaLinux 8/9 guests that I should apply?

Questions:

- Would disabling xcp-rrdd mitigate dom0 instability short-term?

- Is there any way to tune RRD polling frequency instead of disabling entirely?

- Anything else to collect before the next crash (besides xen-bugtool -y) you’d recommend?

I also noticed that my FreePBX VM (UUID: 6c725208-c266-a106-da10-50e9ec66b41e) repeatedly triggers an event processing loop via xenopsd-xc and xapi, visible both in dom0.log and xapi.log.

Example from logs:

Received an event on managed VM 6c725208-c266-a106-da10-50e9ec66b41e

Queue.push ["VM_check_state","6c725208-c266-a106-da10-50e9ec66b41e"]

Queue.pop returned ["VM_check_state","6c725208-c266-a106-da10-50e9ec66b41e"]

VM 6c725208-c266-a106-da10-50e9ec66b41e is not requesting any attention

This repeats every minute, without an actual task being created (confirmed via xe task-list showing no pending tasks).
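To check whether this VM really stands out from the others, the `VM_check_state` pushes can be counted per VM UUID. This is a minimal sketch that assumes the log lines look like the excerpt above; the timestamp format is not shown in the excerpt, so it only counts occurrences and does not bucket them by time.

```python
import re
from collections import Counter

# UUID layout as seen in the excerpt: 8-4-4-4-12 lowercase hex digits.
UUID = r"[0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12}"

# Matches the Queue.push entries quoted above and captures the VM UUID.
PUSH = re.compile(r'Queue\.push \["VM_check_state","(' + UUID + r')"\]')


def check_state_counts(lines):
    """Return a Counter mapping VM UUID -> number of VM_check_state pushes."""
    counts = Counter()
    for line in lines:
        for match in PUSH.finditer(line):
            counts[match.group(1)] += 1
    return counts
```

Feeding it the lines of the xenopsd or xapi log and printing `check_state_counts(...).most_common(5)` would show whether the FreePBX VM generates far more checks than the other guests on the same host.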

Notably:

- This behavior persists even after disabling RRD polling and delta backups

- The VM shows an orange activity indicator in XCP-ng Admin Center, as if a task is ongoing

- Previously this has caused a dom0 crash and reboot

Given the log pattern and event storm, it seems likely that either:

- A stale or looping event is being triggered by the guest agent or hypervisor integration

- Or the xenopsd/xapi state machine isn't properly clearing or marking the VM state after these checks

I'd appreciate advice on:

- How to safely clear/reset the VM state without restarting dom0

- Whether updating the management agent inside the FreePBX guest (currently xcp-ng-agent 8.4) to a newer version might resolve this

(If a newer one is available for RHEL7/FreePBX)

Part of the log from the time this happened

Thanks in advance — we’re pushing for the hardware fixes but would appreciate advice for short-term stability in the meantime.

-

RE: [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached

@olivierlambert please let me know one or two NIC models. I will purchase them on eBay, because the local seller would not be able to deliver them, and keep them on hand just in case for future debugging.

For the last X years, until 8.3, we could put XCP-ng on any damn hardware and never had any problems... This is our experience.

-

RE: [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached

Thanks for letting me know something that I already know.

But from time to time the situation is what it is, and we need to adapt to it (lack of hardware, lack of budget, etc.).

-

RE: [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached

Your BIOS is also outdated.

Yes, same BIOS

What NIC are you using in there?

Same onboard motherboard NIC, and there is one more NIC used just for the SIP trunk.

There is one more server that has fewer problems (because of the slow ISP, backups have been temporarily disabled). Crashes are less frequent there, but they do happen, without crash log files, especially toolstack crashes.

Regarding the NIC, the local seller has this 2.5 Gbps card: https://www.cudy.com/en-eu/products/pe25-1-0

Would it be better?

-

RE: [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached

Hi, thanks for your kind reply.

Here is one more crash log from a different server: identical hardware, identical problems.

What I noticed before this crash: the server got stuck creating delta backups at 02:00 AM.

It had a few pending tasks returned by the xo task-list command and was not accessible from XO or XCP-ng Center. After I restarted the XAPI toolstack over SSH, the connection was restored, but I now see that the server rebooted after that.

-

RE: [HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached

Thank you both again for the detailed replies and suggestions. I'd like to provide a bit more context about our setup and situation:

Situation Summary:

We're currently running XCP-ng 8.3 with Xen 4.17.5-13 on a mix of servers, including some older, obsolete hardware.

Interestingly, XCP-ng 8.2 runs without issues on identical hardware configurations: no crashes, even under the same workloads.

On this particular host, we've experienced 10 crashes so far, and in almost every case the crash happened while performing delta backups from Xen Orchestra.

This seems to consistently trigger the issue under higher network load.

We've already performed full memory tests (memtest86+) on this host, and the results came back clean, with no memory errors found.

The servers are currently physically located at a remote site, which makes immediate hands-on intervention difficult.

We're organizing a visit to the site to update the BIOS and potentially replace the Realtek NIC with a supported Intel NIC as suggested. This intervention will happen as soon as logistically possible.

Question:

Is there anything else you would recommend we check or do remotely in the meantime before our on-site intervention?

And once we're physically on-site, aside from:

- Updating the BIOS

- Swapping NIC hardware

is there anything else you'd recommend we inspect or collect while we're there?

I appreciate your help and guidance — and thank you again for pointing us in the right direction so quickly.

One more important question: which guest tools do you recommend for Windows Server 2019, Windows Server 2022, and Windows 10?

Is 9.4.0 the right one?

-

[HELP] XCP-ng 4.17.5 dom0 kernel panic — page fault in TCP stack, crashdump attached

Hi everyone,

I'm reaching out to ask for help analyzing a recent crash on one of our XCP-ng hosts.

We experienced a dom0 kernel panic caused by a page fault in the TCP stack. I've collected and parsed the crash dumps and would appreciate your feedback, recommendations, or confirmation of whether this is a known issue.

Environment:

Xen hypervisor version: 4.17.5-13 (XCP-ng 8.3)

Dom0 kernel: 4.19.0+1

CPU: Intel

24 physical CPUs (PCPUs)

Crashkernel configured and working via kexec

Crash summary:

The crash occurred due to a page fault inside the TCP stack of the dom0 kernel at virtual address 0xffff8882b6ed0000 (level 1 page table not present).

This triggered an NMI (Non-Maskable Interrupt) and a crash dump via kexec.

dom0.log details:

WARN paging error for vaddr 0xffff8882b6ed0000 - level 1 not present

Call Trace:

[ffffffff81071fa5] panic+0x111/0x27c

[ffffffff8102796f] oops_end+0xcf/0xd0

[ffffffff8105da73] no_context+0x1b3/0x3c0

[ffffffff8169885c] tcp_check_space+0x4c/0xf0

[ffffffff8105e33a] __do_page_fault+0xaa/0x4f0

This indicates a page fault in tcp_check_space() leading to a kernel panic.
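For comparing this trace against later crashes, the frames can be pulled into a structured form. The sketch below assumes the `[address] symbol+0xoffset/0xsize` layout shown in the trace above; the `Frame` type and the function names are made up for illustration.

```python
import re
from typing import List, NamedTuple


class Frame(NamedTuple):
    address: int  # return address printed in brackets
    symbol: str   # kernel function name
    offset: int   # offset of the address inside the function
    size: int     # total size of the function


# Layout assumed from the trace above: [ffffffff8169885c] tcp_check_space+0x4c/0xf0
FRAME = re.compile(r"\[([0-9a-f]{16})\]\s+(\S+?)\+0x([0-9a-f]+)/0x([0-9a-f]+)")


def parse_call_trace(text: str) -> List[Frame]:
    """Extract every frame found in a block of log text, in order of appearance."""
    return [
        Frame(int(addr, 16), sym, int(off, 16), int(size, 16))
        for addr, sym, off, size in FRAME.findall(text)
    ]


def function_start(frame: Frame) -> int:
    """Start address of the function, derived as address minus offset."""
    return frame.address - frame.offset
```

Comparing the list of `symbol` values from each crash is a quick way to confirm whether the panics really share the same path through `tcp_check_space`.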

xen.log details:

The Xen hypervisor correctly triggered NMI crash handling across all PCPUs.

Key stack trace on all CPUs:

kexec_crash_save_cpu()

do_nmi_crash()

Most CPUs were in idle loops (xen_safe_halt), while dom0 VCPU0 crashed on PCPU20.

xen-crashdump-analyser output:

Several warnings like:

WARN Cannot get kernel page table address - VCPU assumed down

indicating missing page tables at crash time for multiple VCPUs.

Confirmed the same paging error on the same virtual address:

WARN paging error for vaddr 0xffff8882b6ed0000 - level 1 not present

Dom0 had VCPUs active on PCPU18, PCPU0, PCPU11, PCPU2 at crash time.

Several guest VMs had VCPUs active, suggesting moderate to high workload.
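As a side note on the faulting address, it can be split into its page-table indices to see which entry the walk failed on. This is a sketch under two assumptions that the logs do not confirm: standard x86-64 4-level paging with 4 KiB pages, and "level 1" meaning the lowest-level table (the one holding the final page entries).

```python
def split_vaddr(vaddr: int) -> dict:
    """Split a 64-bit virtual address into 4-level page-table indices.

    Assumes x86-64 4-level paging with 4 KiB pages:
    9 index bits per level and a 12-bit offset within the page.
    """
    return {
        "l4_index": (vaddr >> 39) & 0x1FF,
        "l3_index": (vaddr >> 30) & 0x1FF,
        "l2_index": (vaddr >> 21) & 0x1FF,
        "l1_index": (vaddr >> 12) & 0x1FF,
        "page_offset": vaddr & 0xFFF,
    }


def in_upper_half(vaddr: int) -> bool:
    """True when bits 47..63 are all set, i.e. a canonical kernel-space address."""
    return (vaddr >> 47) == 0x1FFFF


fault = 0xFFFF8882B6ED0000  # address reported in the paging error above
print(split_vaddr(fault), in_upper_half(fault))
```

The address is page-aligned and lies in the kernel half of the address space, so the fault was on a kernel mapping rather than a user pointer. That fits the possible causes listed in the summary but does not single out any one of them.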

Summary:

It seems to be a page fault on a virtual memory address inside the dom0 TCP stack that caused a panic.

The crashdump shows memory mapping inconsistencies (missing page tables) for various VCPUs after the crash was triggered.

This might suggest a kernel bug, an unstable network driver, or a potential hardware-related issue like sporadic memory corruption.

Questions:

- Has anyone experienced similar page faults in the dom0 TCP stack on 4.19 kernels or Xen 4.17.5 (XCP-ng 8.3)?

- Are there any known issues with network drivers on this kernel/hypervisor combo?

- Would you recommend moving to a newer dom0 kernel or hypervisor build?

- Could a memory issue cause this specific kind of page table inconsistency during a kernel panic?

- Any advice on additional debug steps or log files I should collect next time?

Thank you all in advance for your time and input — really appreciate it!