I meant the template you're cloning from, which is Windows.

Is it a Windows Server VM with Cloudbase-Init installed, then converted to a template?

I'm curious whether this is specific to Windows. Does it work on an Ubuntu VM with cloud-init?

@rochemike Based on the TF snippet you pasted earlier, you're declaring the pool's name but not its UUID. The pool_id in xenorchestra_network needs to be the UUID to work. I tried both on my end: it failed with the name but worked with the UUID.

data "xenorchestra_pool" "pool" {
  name_label = "b500-2555 Server Room"
}

...

data "xenorchestra_network" "network" {
  name_label = "VLAN113"
  pool_id    = data.xenorchestra_pool.pool.id
}

...

  network {
    network_id = data.xenorchestra_network.network.id
  }

  ...

}
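If you want to sanity-check the value before it goes into pool_id, here's a minimal Python sketch (not part of the Terraform provider; `looks_like_uuid` is a hypothetical helper) that tells a UUID apart from a name label like "b500-2555 Server Room":

```python
import uuid


def looks_like_uuid(value: str) -> bool:
    """Return True if value is a canonical, hyphenated UUID string.

    A name label such as "b500-2555 Server Room" returns False, which is
    the mix-up to catch before passing the value as pool_id.
    """
    try:
        parsed = uuid.UUID(value)
    except ValueError:
        return False
    # uuid.UUID also accepts braces, a "urn:uuid:" prefix, or no hyphens,
    # so insist on the canonical 8-4-4-4-12 form (case-insensitive).
    return str(parsed) == value.lower()
```

For example, `looks_like_uuid("b500-2555 Server Room")` returns False, while the UUID shown for your pool by `xe pool-list` should return True.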

You may be able to skip pool_id entirely, based on the Terraform docs. This seems to work on my end.

That would look like the below, assuming you don't have a VLAN113 network on another pool visible to Xen Orchestra.

data "xenorchestra_network" "net" {
  name_label = "VLAN113"
}

@rochemike Sorry, that's on me. You want to set the UUID as below: replace YOUR_POOL_UUID with the pool UUID and make sure it stays wrapped in quotes.

data "xenorchestra_network" "net" {
  name_label = var.network
  pool_id    = "YOUR_POOL_UUID"
}

I set mine as a variable: an Ansible playbook writes it to a terraform.tfvars file, providing var.pool_uuid alongside the others.
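The playbook itself isn't shown. As a rough stand-in for that step, here's a minimal Python sketch that renders string variables (pool_uuid, network, and so on) into a terraform.tfvars file. The helper names and values are hypothetical, and it only handles plain text values; anything containing `${` or `%{` is rejected because HCL would parse it as a template sequence rather than literal text:

```python
import json
from pathlib import Path


def render_tfvars(variables: dict[str, str]) -> str:
    """Render string variables as `name = "value"` lines, sorted by name."""
    lines = []
    for name, value in sorted(variables.items()):
        if "${" in value or "%{" in value:
            # HCL treats these as template sequences, not literal text.
            raise ValueError(f"{name}: template sequences are not supported")
        # json.dumps yields a double-quoted, escaped string, which matches
        # HCL's string literal syntax for ordinary text values.
        lines.append(f"{name} = {json.dumps(value)}")
    return "\n".join(lines) + "\n"


def write_tfvars(path: Path, variables: dict[str, str]) -> None:
    """Write the rendered variables to path, e.g. terraform.tfvars."""
    path.write_text(render_tfvars(variables))
```

Calling `write_tfvars(Path("terraform.tfvars"), {"pool_uuid": "YOUR_POOL_UUID", "network": "VLAN113"})` writes lines like `pool_uuid = "YOUR_POOL_UUID"`, and Terraform picks up terraform.tfvars from the working directory automatically.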

I'm wondering if it's because there is no pool UUID specified in your config.

Working bottom up, we have your network block, which references the xenorchestra_network data source:

network {
  network_id = data.xenorchestra_network.network.id
}

That data source specifies the network name and references xenorchestra_pool for the pool information:

data "xenorchestra_network" "network" {
  name_label = "VLAN113"
  pool_id    = data.xenorchestra_pool.pool.id
}

That one in turn specifies the name of the pool:

data "xenorchestra_pool" "pool" {
  name_label = "b500-2555 Server Room"
}

My config has the name of the network and the UUID of the pool that network resides on. When I swapped it to the pool name, my script errored out while applying the Terraform configuration; swapping it back to the pool UUID worked.

data "xenorchestra_network" "net" {
  name_label = var.network
  pool_id    = var.pool_uuid
}

What version of XO are you running? A few months back there was a breaking change that required a MAC address to be defined, but it has since been changed. It's fixed now, so updating to the latest version should fix it.

I can't find it now, but I remember having to roll back a version until it was fixed. I might actually be mixing this up with the affinity host issue...

In case it's useful, here is my Ubuntu TF:

data "xenorchestra_pool" "pool" {
  name_label = var.pool_name
}

data "xenorchestra_template" "template" {
  name_label = var.vmtemplate
}

data "xenorchestra_network" "net" {
  name_label = var.network
  pool_id    = var.pool_uuid
}

resource "xenorchestra_cloud_config" "bar" {
  name = "cloud config name"
  # Template the cloudinit if needed
  template = templatefile("cloud_config.tftpl", {
    hostname = var.hostname
  })
}

resource "xenorchestra_cloud_config" "net" {
  name = "cloud network config name"
  # Template the cloudinit if needed
  template = templatefile("cloud_network_config.tftpl", {})
}

resource "xenorchestra_vm" "bar" {
  memory_max           = var.ram
  cpus                 = var.cpus
  cloud_config         = xenorchestra_cloud_config.bar.template
  cloud_network_config = xenorchestra_cloud_config.net.template
  name_label           = var.name_label
  name_description     = var.name_description
  template             = data.xenorchestra_template.template.id

  # Prefer to run the VM on the primary pool instance
  affinity_host = data.xenorchestra_pool.pool.master

  network {
    network_id = data.xenorchestra_network.net.id
  }

  disk {
    sr_id      = var.disk_sr
    name_label = var.disk_name
    size       = var.disk_size
  }

  tags = [
    "Nightly Backup"
  ]

  wait_for_ip = true

  // Override the default create timeout from 5 mins to 20.
  timeouts {
    create = "20m"
  }
}

@psafont That's good to know. There were disturbingly few results when I searched for the error or the command.

This post is actually the top result for me now alongside a blog post from Oliver that wasn't there originally.

@jrc That's strange. I've been using apt to install xe-guest-utilities in my cloud-init configuration with 22.04 and haven't noticed anything odd.

What did you experience?

If you don't want to import GitHub keys at all, you can remove that portion and list the keys under ssh_authorized_keys for each user created by cloud-init.

#cloud-config
hostname: {name}
timezone: America/New_York
users:
  - default
  - name: YOURUSER
    passwd: YOURPASSWORDHASH
    sudo: ALL=(ALL) NOPASSWD:ALL
    groups: users, admin, sudo
    shell: /bin/bash
    lock_passwd: true
    ssh_authorized_keys:
      - ssh-rsa YOURKEY
package_update: true
packages:
  - xe-guest-utilities
  - htop
package_upgrade: true

If you want to be able to log in from the console instead of only over SSH with keys, change lock_passwd: true to lock_passwd: false.
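As I understand it, Xen Orchestra fills in the `{name}` placeholder with the VM name when it creates the VM (the XO docs list the supported placeholders). To preview a template locally, including the lock_passwd change just mentioned, here's a minimal Python sketch. `render_cloud_config` and its `console_login` flag are hypothetical, not part of XO or cloud-init:

```python
import re


def render_cloud_config(template: str, vm_name: str, console_login: bool = False) -> str:
    """Preview a cloud-config the way XO is assumed to render it.

    Only the {name} placeholder is handled; other XO placeholders are not.
    """
    # str.replace (not str.format) so other braces in the YAML are untouched.
    rendered = template.replace("{name}", vm_name)
    if console_login:
        # Unlock the password so the user can also log in on the console.
        rendered = re.sub(r"(\block_passwd:\s*)true\b", r"\1false", rendered)
    return rendered
```

For example, rendering the config above with `vm_name="web01"` and `console_login=True` yields `hostname: web01` and `lock_passwd: false`.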

No modifications at all. I download the OVA, import it into Xen Orchestra, usually change the CPU to 2 cores and RAM to 2 GB, then convert it to a template.

I select the template and the cloud configs when creating a new VM. It expands the file system, sets up the user, imports SSH keys, updates packages, installs the tools, then reboots.

You can expand it to add additional users, keys, packages, etc.

@stormi I was originally fairly confident it was a fresh 8.3 Alpha install, but I can't recall with 100% certainty that it wasn't an in-place upgrade from 8.2.

Is there anything in the file system I can check to see if it was a fresh install or an upgrade?

@gsrfan01 Follow-up to this:

It turns out the existing pool had it set to false while the new one was set to true.

New

[17:06 prod-hv-xcpng02]# xe host-param-list uuid=
...
tls-verification-enabled ( RO): true
[17:06 prod-hv-xcpng02]# xe host-param-list uuid=
tls-verification-enabled ( RO): true

Old

[17:06 prod-hv-xcpng02]# xe host-param-list uuid=
tls-verification-enabled ( RO): false
[17:07 prod-hv-xcpng01]# xe pool-param-list uuid=
tls-verification-enabled ( RO): false

I ended up running xe pool-enable-tls-verification and xe host-emergency-reenable-tls-verification on the existing server, and was then able to get the new server added to the pool.

I found very little documentation on these commands, so I'm crossing my fingers that host-emergency-reenable-tls-verification doesn't cause any issues down the line, but all seems good so far.
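To compare the setting across hosts before attempting a join, here's a small Python sketch that parses `xe host-param-list` / `xe pool-param-list` output you've already captured (it doesn't call xe itself) and flags a tls-verification-enabled mismatch like the one above. The helper names are hypothetical, and the `name ( RO): value` line format is assumed from the output shown here:

```python
def parse_param_list(output: str) -> dict[str, str]:
    """Parse xe *-param-list style output into {param: value}.

    Relevant lines look like "tls-verification-enabled ( RO): true".
    """
    params = {}
    for line in output.splitlines():
        if "):" not in line:
            continue  # skip shell prompts, "..." and other noise
        key_part, value = line.split("):", 1)
        key = key_part.split("(", 1)[0].strip()
        params[key] = value.strip()
    return params


def tls_mismatch(existing: str, joining: str) -> bool:
    """True if the two outputs disagree on tls-verification-enabled.

    A missing value compares as None on either side.
    """
    key = "tls-verification-enabled"
    return parse_param_list(existing).get(key) != parse_param_list(joining).get(key)
```

With the "Old" and "New" outputs above, `tls_mismatch` returns True, which lines up with the POOL_JOINING_HOST_TLS_VERIFICATION_MISMATCH error.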

I'm not sure yet whether this is related to 8.3, but I'm having trouble joining a newly installed 8.3 beta server to a pool that was started on 8.3 Alpha and since updated.

Attempting to add the new host generates the error POOL_JOINING_HOST_TLS_VERIFICATION_MISMATCH. I can only find a single other reference to it, which mentions CPU settings; as far as I can tell, those match between the two hosts.

My cloud-init configs are below. I don't bother building my own templates; I just download the pre-made Ubuntu ones: https://cloud-images.ubuntu.com/jammy/

#cloud-config
hostname: {name}
timezone: America/New_York
users:
  - default
  - name: netserv
    passwd: passwordhash
    ssh_import_id:
      - gh:github_account
    sudo: ALL=(ALL) NOPASSWD:ALL
    groups: users, admin, sudo
    shell: /bin/bash
    lock_passwd: true
    ssh_authorized_keys:
      - ssh-ed25519 yourkey
package_update: true
packages:
  - xe-guest-utilities
package_upgrade: true
runcmd:
  - sudo reboot

And the network config. This is no longer the correct way to handle it, but I've yet to update it:

version: 2
ethernets:
  eth0:
    dhcp4: false
    dhcp6: false
    addresses:
      - 172.16.100.185/24
    gateway4: 172.16.100.1
    nameservers:
      addresses:
        - 172.16.100.21
        - 172.16.100.22
        - 1.1.1.1
      search:
        - dev.themaw.tech
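Presumably the outdated part is gateway4, which newer netplan versions deprecate in favour of a default route under routes. As a sketch under that assumption, this Python function rewrites the config once it's loaded as a plain dict (loading and dumping the YAML, e.g. with PyYAML, is not shown). `migrate_gateway4` is a hypothetical helper:

```python
import copy


def migrate_gateway4(netplan: dict) -> dict:
    """Return a copy of a netplan v2 dict with gateway4 moved into routes."""
    migrated = copy.deepcopy(netplan)  # leave the caller's dict untouched
    for iface in migrated.get("ethernets", {}).values():
        gateway = iface.pop("gateway4", None)
        if gateway is None:
            continue
        # "to: default" is netplan's newer spelling of the default route;
        # for an IPv4 gateway it is equivalent to 0.0.0.0/0.
        iface.setdefault("routes", []).append({"to": "default", "via": gateway})
    return migrated
```

Applied to the config above, eth0 ends up with `routes: [{to: default, via: 172.16.100.1}]` and no gateway4 key; everything else is unchanged.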

Intel Xeon CPU E5-1620 v4 on a Supermicro SYS-5018R-WR

./xtf-runner selftest -q --host
Combined test results:
test-hvm32-selftest       SUCCESS
test-hvm32pae-selftest    SUCCESS
test-hvm32pse-selftest    SUCCESS
test-hvm64-selftest       SUCCESS
test-pv64-selftest        SUCCESS

./xtf-runner -aqq --host
Combined test results:
test-hvm32-umip           SKIP
test-hvm64-umip           SKIP
test-pv64-xsa-167         SKIP
test-pv64-xsa-182         SKIP

/usr/libexec/xen/bin/test-cpu-policy
CPU Policy unit tests
Testing CPU vendor identification:
Testing CPUID serialise success:
Testing CPUID deserialise failure:
Testing CPUID out-of-range clearing:
Testing MSR serialise success:
Testing MSR deserialise failure:
Testing policy compatibility success:
Testing policy compatibility failure:
Done: all ok

@olivierlambert is this going to be the suggestion going forward? I currently install xe-guest-utilities during deployment with cloud-init to reduce time to VM creation.

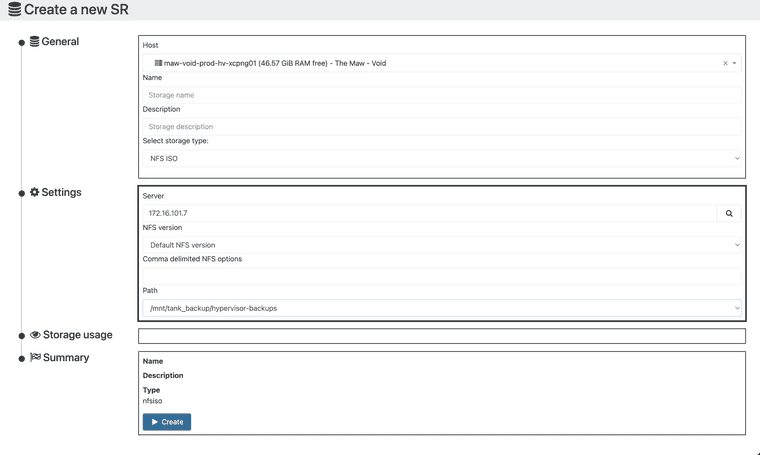

@Kajetan321 So you're looking for a cross-pool ISO store if I'm reading this right?

@Kajetan321 You should be able to create a new SR in the Xen Orchestra GUI.

New -> Storage -> NFS ISO

Even though you're attaching it to a single host, it'll be available to the whole pool, just like an NFS SR for VMs. You might need to go to the SR itself under Home -> Storage -> SR -> Hosts and then connect it manually.

You should be able to go to the Network tab for the VM, click the network it's currently connected to, and then change it to the new one. If you have the management tools installed, this can be done live; otherwise you'd need to shut the VM down.

@mauzilla My R720XD came with the rear drives. They were an option from Dell during configuration, so I try to get them included when possible. You can also grab the parts and install them yourself.

They used to be a bit more expensive; it looks like they're $50 now: https://www.ebay.com/itm/174894761780

Look for "R720xd rear flex bays" if you want to find them somewhere other than eBay, and make sure the listing comes with the cables. I believe the cables run from the backplane to the rear flex bay on the R720.