@olivierlambert Thanks a lot.

The VM's max vCPU setting was larger than the number of physical CPUs on the target host. Now it migrates successfully.
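A minimal sketch of the check behind `HOST_NOT_ENOUGH_PCPUS`, assuming (as the fix above suggests) that XAPI compares the VM's *maximum* vCPU count, not the number of vCPUs it currently runs with, against the target host's physical CPU count. The function name and arguments are hypothetical, the values 8 and 4 are illustrative, and this is not XAPI's own code.

```python
def host_has_enough_pcpus(vcpus_at_startup: int, vcpus_max: int, host_pcpus: int) -> bool:
    """Return True if a VM with these vCPU settings can be placed on the host.

    Hypothetical helper. Assumption: only vcpus_max is compared with the host's
    pCPU count, because a VM started with fewer vCPUs may hot-plug up to its max.
    """
    if not 1 <= vcpus_at_startup <= vcpus_max:
        raise ValueError("expected 1 <= vcpus_at_startup <= vcpus_max")
    return vcpus_max <= host_pcpus


# Running with 1 vCPU but allowed up to 8: rejected by a 4-pCPU host.
print(host_has_enough_pcpus(vcpus_at_startup=1, vcpus_max=8, host_pcpus=4))
# Lowering the max to 4 lets the same VM pass the check.
print(host_has_enough_pcpus(vcpus_at_startup=1, vcpus_max=4, host_pcpus=4))
```

This is why a VM that shows only 1 vCPU can still be refused by a host with 4 pCPUs: the current count is not what is being compared.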


-

RE: Migration failed

-

RE: Migration failed

Thanks for your quick response.

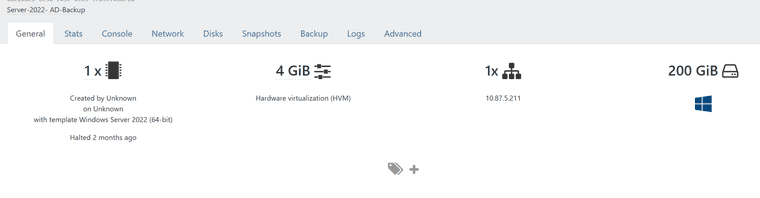

My host has 4 vCPUs and the VM has only 1 vCPU, as shown in the screenshot.

-

Migration failed

I tried to migrate a VM from host1 to host2, but it failed. The error message in the log is:

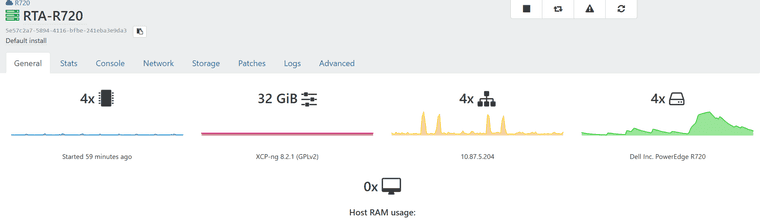

```
vm.migrate
{
  "vm": "08e2baeb-0e58-c037-0e63-97b3cf41871a",
  "mapVifsNetworks": {
    "cbc74384-2167-a72f-f707-52d7179b76c3": "fab39b68-c54e-d23a-2e21-9e905f6dbbe9"
  },
  "migrationNetwork": "b0123e39-89e2-0fd6-47dc-5509552d1615",
  "sr": "bf8ec472-f0d5-1a0f-946f-97b854c6f82d",
  "targetHost": "5e57c2a7-5894-4116-bfbe-241eba3e9da3"
}
{
  "code": 21,
  "data": {
    "objectId": "08e2baeb-0e58-c037-0e63-97b3cf41871a",
    "code": "HOST_NOT_ENOUGH_PCPUS"
  },
  "message": "operation failed",
  "name": "XoError",
  "stack": "XoError: operation failed at operationFailed (/usr/local/lib/node_modules/xo-server/node_modules/xo-common/src/api-errors.js:21:32) at file:///usr/local/lib/node_modules/xo-server/src/api/vm.mjs:578:15 at Xo.migrate (file:///usr/local/lib/node_modules/xo-server/src/api/vm.mjs:564:3) at Api.#callApiMethod (file:///usr/local/lib/node_modules/xo-server/src/xo-mixins/api.mjs:445:20)"
}
```
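The useful part of the log above is the nested code: xo-server reports a generic `"code": 21` / `"operation failed"`, while the actual XAPI reason (`HOST_NOT_ENOUGH_PCPUS`) sits in `data.code`. A minimal sketch for pulling that out when scanning logs, assuming the error object is valid JSON shaped like the one above (or, as in the `pool.mergeInto` log, with a string `code` at the top level). The helper name `failure_code` is hypothetical.

```python
import json
from typing import Optional


def failure_code(error_json: str) -> Optional[str]:
    """Return the most specific error code found in an XO error object.

    Hypothetical helper. Prefers a string data.code (the XAPI reason wrapped
    by XoError), falls back to a string top-level code (as in XapiError),
    and returns None if neither is present.
    """
    err = json.loads(error_json)
    data = err.get("data")
    if isinstance(data, dict) and isinstance(data.get("code"), str):
        return data["code"]
    code = err.get("code")
    return code if isinstance(code, str) else None


logged = (
    '{"code": 21, "data": {"objectId": "08e2baeb-0e58-c037-0e63-97b3cf41871a", '
    '"code": "HOST_NOT_ENOUGH_PCPUS"}, "message": "operation failed", "name": "XoError"}'
)
print(failure_code(logged))
```

Checking the nested code first matters because the numeric top-level code is the same generic value for many different XAPI failures.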

RE: Adding Master Host to Existing Pool

@olivierlambert

Thank you very much for your assistance. Unfortunately, I have run into another issue: I have forgotten the password for the XOA VM, but I do have the root password.


RE: Adding Master Host to Existing Pool

@Rashid @BenjiReis @olivierlambert

I appreciate your patience and guidance throughout the process. I am pleased to report that I have completed the task. However, I ran into two issues along the way. First, one of my SMB storage repositories was corrupted and would not disconnect properly. Second, the system was stuck in a restart loop. After resolving the SMB storage problem, I was able to restart the system and add the new host to the existing pool.

Thanks, all.

RE: Adding Master Host to Existing Pool

@BenjiReis @olivierlambert Thanks for your response. I have run `yum update` and `yum upgrade` on both servers; no packages are available.

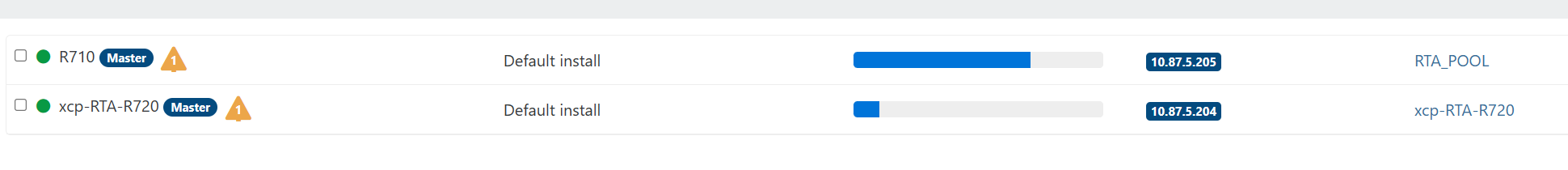

I think the problem is that both servers are masters. Am I correct?

RE: Adding Master Host to Existing Pool

@olivierlambert Thanks for your response. I have run `yum update` and `yum upgrade` on both servers; no packages are available.

I think the problem is that both servers are masters. Am I correct?

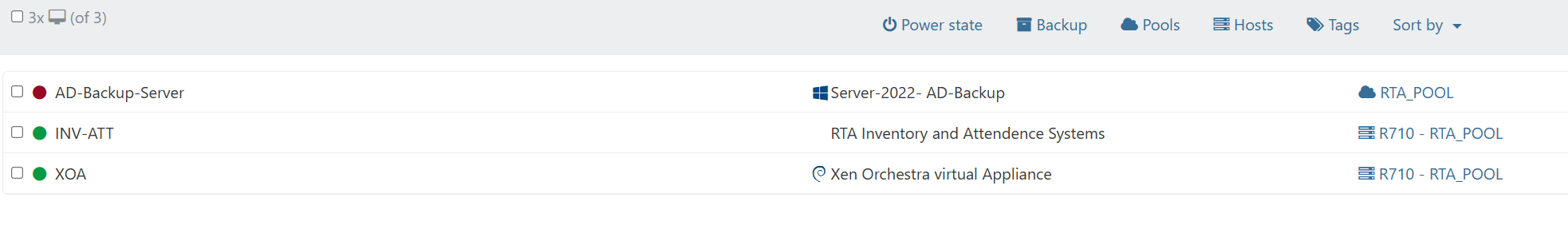

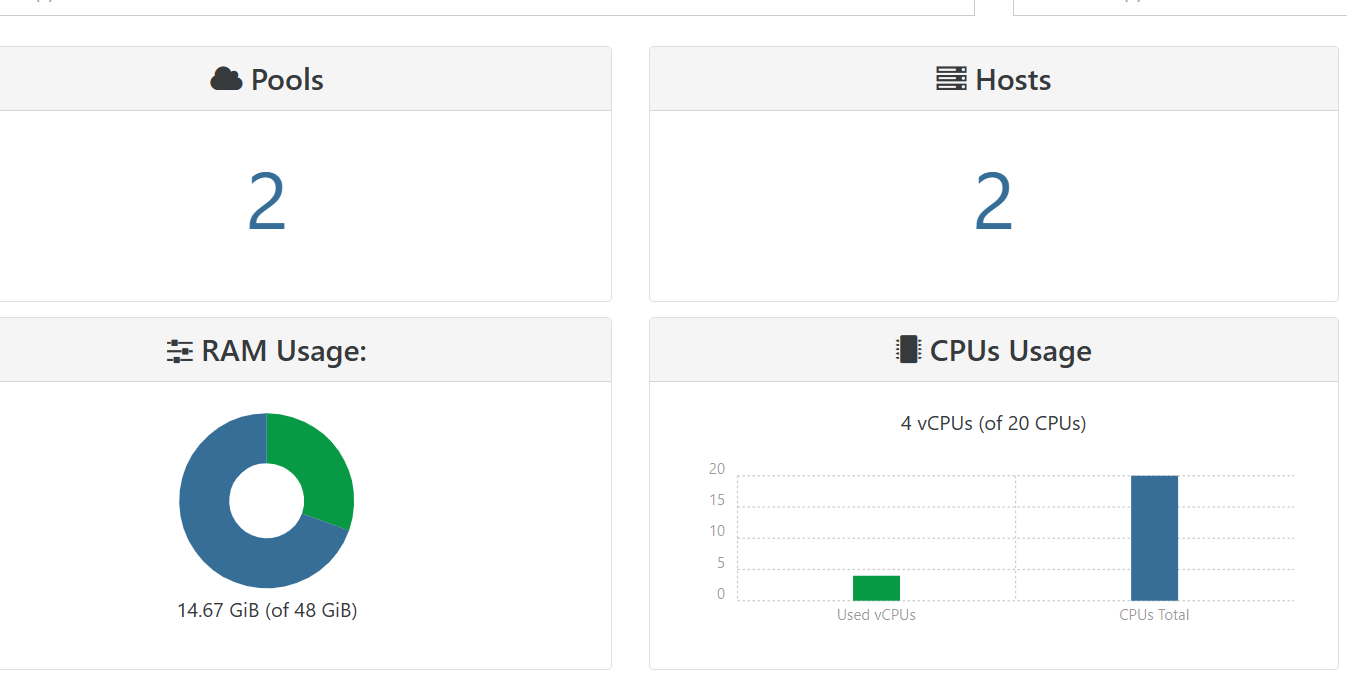

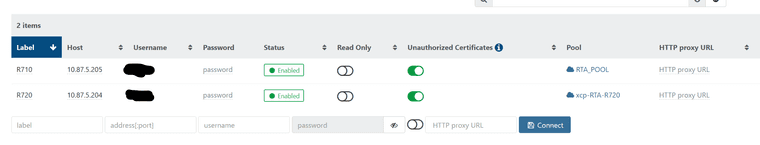

Adding Master Host to Existing Pool

I currently own two Dell servers: an R710 with dual CPUs and 16 GB of RAM, and an R20 with a single CPU and 32 GB of RAM. I recently installed XCP-ng 8.2.1 on both. After installation, each server became the master of its own pool by default, so I now have two separate pools. I would like to have a single pool with one master, with my R720 server acting as a slave in that pool. I have tried several approaches, but none have worked. Both XCP-ng installations are up to date. Please see the attached error log and screenshot for reference.

Thank you in advance.

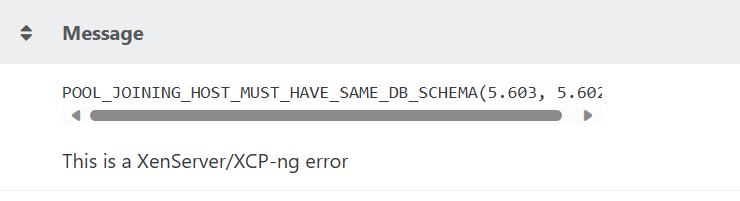

```
pool.mergeInto
{
  "sources": [
    "b341a66a-6ec2-2c61-300a-2675b0edb575"
  ],
  "target": "5b11bc18-bb1f-93b5-62f9-1d45a2c69179",
  "force": true
}
{
  "code": "POOL_JOINING_HOST_MUST_HAVE_SAME_DB_SCHEMA",
  "params": [
    "5.603",
    "5.602"
  ],
  "call": {
    "method": "pool.join_force",
    "params": [
      "10.87.5.205",
      "root",
      "* obfuscated *"
    ]
  },
  "message": "POOL_JOINING_HOST_MUST_HAVE_SAME_DB_SCHEMA(5.603, 5.602)",
  "name": "XapiError",
  "stack": "XapiError: POOL_JOINING_HOST_MUST_HAVE_SAME_DB_SCHEMA(5.603, 5.602) at Function.wrap (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_XapiError.mjs:16:12) at file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/transports/json-rpc.mjs:35:21 at runNextTicks (node:internal/process/task_queues:60:5) at processImmediate (node:internal/timers:447:9) at process.callbackTrampoline (node:internal/async_hooks:130:17)"
}
```
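The two params in this error (`5.603` and `5.602`) are the database schema versions of the two hosts, and the join is refused because they differ, which usually means the hosts are not at the same update level. A minimal sketch for reading such a pair, assuming the schema version is a major number and a minor number compared as integers (so `5.61` counts as older than `5.603`, unlike a float comparison). The helper names are hypothetical, and the sketch deliberately does not assume which param belongs to the joining host and which to the pool master.

```python
from typing import Tuple


def parse_schema(version: str) -> Tuple[int, int]:
    """Split a schema string such as "5.603" into (major, minor) integers."""
    major, minor = version.split(".")
    return int(major), int(minor)


def describe_mismatch(a: str, b: str) -> str:
    """Explain a POOL_JOINING_HOST_MUST_HAVE_SAME_DB_SCHEMA(a, b) failure.

    Hypothetical helper: compares the two params as (major, minor) tuples
    and names the older one, without assuming which host reported which.
    """
    va, vb = parse_schema(a), parse_schema(b)
    if va == vb:
        return "schemas match"
    older = a if va < vb else b
    return f"schemas differ; the host reporting {older} is behind and likely needs updating"


print(describe_mismatch("5.603", "5.602"))
```

Integer comparison is used because the minor part is a counter, not a decimal fraction.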