Update:

The cards only started working after I enabled "I/O memory mapping above 4G" in the BIOS. It was not possible to use XCP-ng's GPU passthrough because it seems to be limited to a single card. Instead, I enabled PCI passthrough for both PCI devices to one virtual machine. Inside that VM you can use the NVIDIA Container Toolkit to run a Docker environment in which the containers share the GPU(s). I have also already tested the latest Llama 3.3 70B model, which works very well.
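Before setting up the container toolkit, you can quickly confirm that both passed-through cards are visible inside the VM by parsing the output of `nvidia-smi -L`. This is a minimal sketch. It assumes the usual `GPU 0: Tesla P40 (UUID: GPU-...)` line format, which may differ between driver versions. The function names and the expected count of two P40s are illustrative assumptions.

```python
import re
import subprocess
from typing import List, Tuple

# Matches lines such as "GPU 0: Tesla P40 (UUID: GPU-...)" printed by `nvidia-smi -L`.
GPU_LINE = re.compile(r"^GPU (\d+): (.+?) \(UUID: (GPU-[0-9a-fA-F-]+)\)")


def parse_gpu_list(output: str) -> List[Tuple[int, str, str]]:
    """Return (index, model name, UUID) for every GPU line in the output."""
    gpus = []
    for line in output.splitlines():
        match = GPU_LINE.match(line.strip())
        if match:
            gpus.append((int(match.group(1)), match.group(2), match.group(3)))
    return gpus


def check_passthrough(expected: int = 2, model: str = "Tesla P40") -> List[Tuple[int, str, str]]:
    """Run `nvidia-smi -L` in the VM and fail unless `expected` cards of `model` are listed."""
    out = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True, check=True).stdout
    gpus = parse_gpu_list(out)
    found = [gpu for gpu in gpus if model in gpu[1]]
    if len(found) != expected:
        raise RuntimeError(f"expected {expected} x {model}, found {len(found)}: {gpus}")
    return found
```

After the NVIDIA Container Toolkit is installed, running `nvidia-smi -L` inside a container started with `docker run --gpus all ...` should list the same cards.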

Best posts made by Vinylrider

-

RE: Nvidia P40s with XCP-ng 8.3 for inference and light training

Latest posts made by Vinylrider

-


RE: Nvidia P40s with XCP-ng 8.3 for inference and light training

Thank you for your review. You are right: the end with 5x black is the riser side. I have corrected my post!

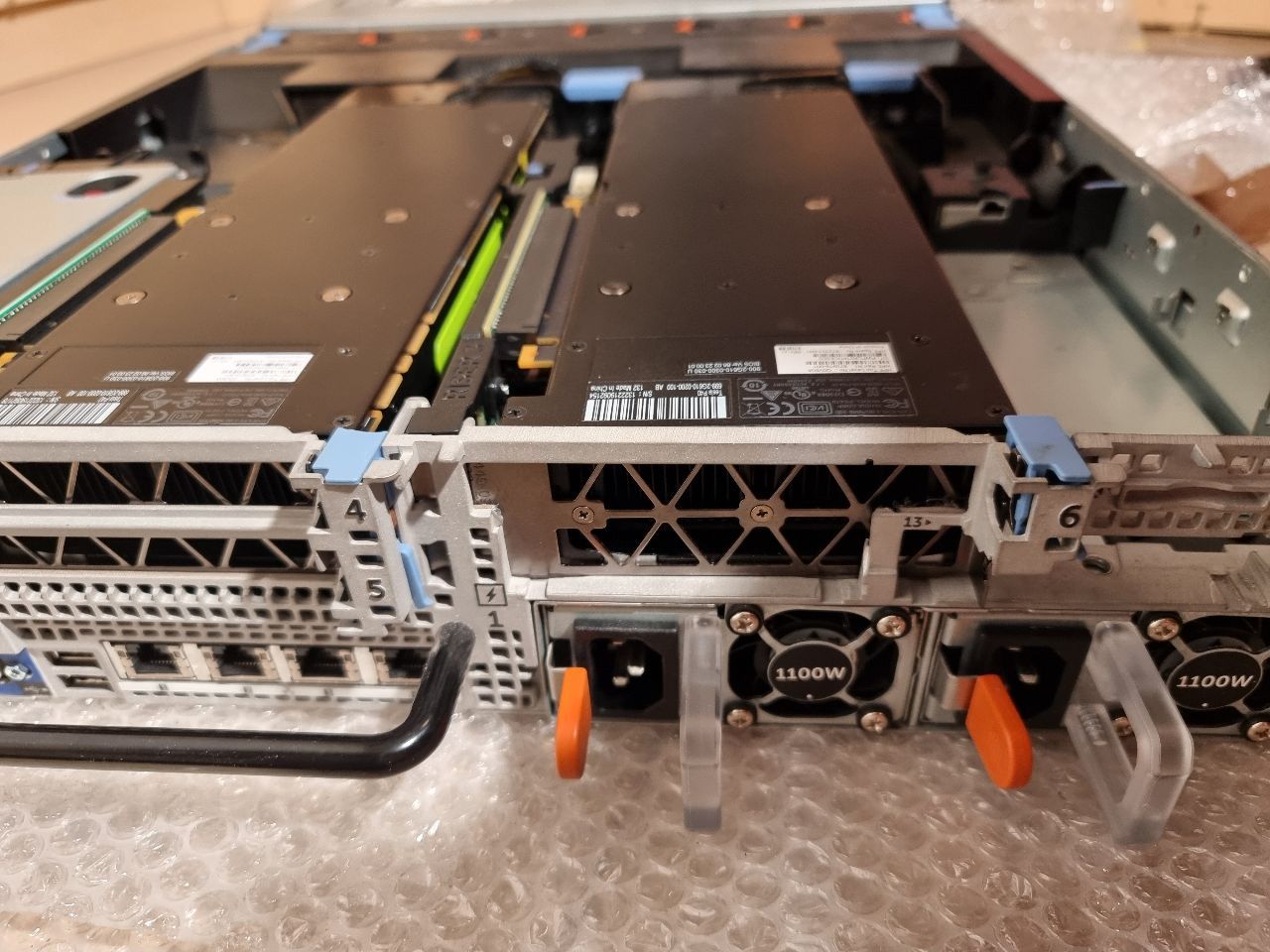

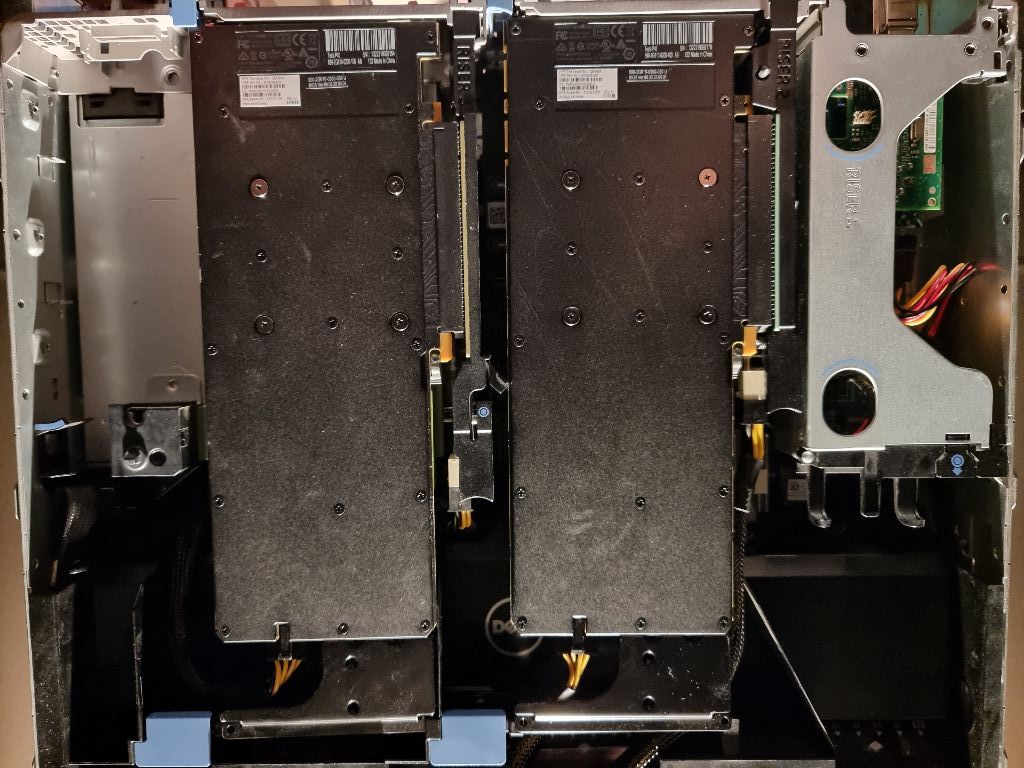

The modification was actually easier than expected. It took 3 hours to shut down the system, open everything, swap the full-size PCIe USB hub and LAN cards for low-profile ones, do the modification work, insert the Teslas, and start the system up again. My biggest worry was that I would have to remove the mainboard, but luckily I didn't. For the metal drive cage, you just need to knock out 3 rivets with a screwdriver (and a hammer). The metal part can then be bent up. Just move it left and right about 50 times until the metal weakens and finally breaks off the case completely. The Dremel work was just a few cuts and was done in seconds because the metal is not very hard. I only had to make sure the mainboard/server was protected with layers of foil and carpet, and keep a vacuum cleaner at hand to immediately remove all metal dust and prevent problems later on.

But even if something did go wrong, Dell parts are ridiculously cheap on eBay: a whole R720 or R720XD server goes for 150 EUR. I also replaced the 750W PSUs with 2x 1100W ones for just 42 EUR (including shipping) and added a surplus 32GB of RAM for 30 EUR. These servers truly give you the best bang for the buck.

Your temperature observations are very interesting. I have also already wondered how to make the fan speed depend on GPU temperature. Please publish your script once it is ready! I don't think there will be much of a heat problem under a standard daily workload. But what will happen if you train something with, e.g., PrivateGPT for several days at full load?
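One way to make the fan speed follow GPU temperature is to read the hottest GPU with `nvidia-smi` and then set the fan duty cycle through the iDRAC with `ipmitool`. This is a minimal sketch, not the script discussed in this thread. The fan curve breakpoints are made-up values. The `raw 0x30 0x30 ...` commands are undocumented Dell IPMI commands that community tools for the R720 generation commonly use, so verify them against your iDRAC firmware before relying on them. The sketch also assumes that the machine running it (for example the GPU VM) can reach the iDRAC over the network with IPMI over LAN enabled.

```python
import subprocess
from typing import List, Sequence, Tuple

# Hypothetical fan curve as (GPU temperature in degrees C, fan duty in %) breakpoints.
# The values are illustrative assumptions, not tested recommendations.
FAN_CURVE: Sequence[Tuple[int, int]] = [(40, 20), (60, 40), (75, 70), (85, 100)]


def parse_gpu_temps(output: str) -> List[int]:
    """Parse `nvidia-smi --query-gpu=temperature.gpu --format=csv,noheader,nounits` (one value per GPU)."""
    return [int(line.strip()) for line in output.splitlines() if line.strip()]


def fan_percent(temp_c: int, curve: Sequence[Tuple[int, int]] = FAN_CURVE) -> int:
    """Linearly interpolate between breakpoints, clamping below the first and above the last."""
    if temp_c <= curve[0][0]:
        return curve[0][1]
    for (t0, p0), (t1, p1) in zip(curve, curve[1:]):
        if temp_c <= t1:
            return round(p0 + (p1 - p0) * (temp_c - t0) / (t1 - t0))
    return curve[-1][1]


def ipmi_fan_commands(percent: int, host: str, user: str, password: str) -> List[List[str]]:
    """Build ipmitool calls using the undocumented Dell raw fan commands (assumption: iDRAC 7 era)."""
    percent = max(0, min(100, percent))
    base = ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-P", password,
            "raw", "0x30", "0x30"]
    return [
        base + ["0x01", "0x00"],                      # disable automatic fan control
        base + ["0x02", "0xff", f"0x{percent:02x}"],  # set all fans (0xff) to `percent`
    ]


def control_once(host: str, user: str, password: str, dry_run: bool = True) -> int:
    """Read all GPU temperatures, pick the hottest one and apply the matching fan speed."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=temperature.gpu", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    target = fan_percent(max(parse_gpu_temps(out)))
    for cmd in ipmi_fan_commands(target, host, user, password):
        if dry_run:
            # Only print the commands, masking the password.
            print(" ".join("****" if arg == password else arg for arg in cmd))
        else:
            subprocess.run(cmd, check=True)
    return target
```

Calling `control_once(...)` every 30 to 60 seconds from cron or a systemd timer would approximate a feedback loop. Keeping the hottest card as the input means a multi-day training run on one GPU still drives the fans. To hand control back to the iDRAC's automatic profile, community scripts send `raw 0x30 0x30 0x01 0x01`.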

-

RE: Nvidia P40s with XCP-ng 8.3 for inference and light training

Thank you gskger for this inspiring post.

With the help of a Dremel and some gentle force, the same setup is also possible on a Dell R720XD. You just need to remove the rear drive bays and "widen" the single PCI slot opening to a dual one, and all is good.

Two important notes: The pinout of the Dell riser card power connector differs from that of the Tesla P40 power input connector, yet the power cable has identical connectors on both ends. If you connect it the wrong way round, the system will refuse to start and the PSUs will blink yellow/amber because of a short circuit (thank you, Dell, for building in this protection). See the picture below. The end with 5x black (GND) wires goes to the riser card; the end with 4x black wires goes to the Tesla.

The next thing you might consider is a small Docker container for controlling the fans so there is no heat issue (see https://github.com/Gibletron/Docker-iDrac-Fan-Control).

The next thing I will try to set up is the NVIDIA Container Toolkit, so that the GPUs can be shared among Plex, Ollama and Frigate NVR.
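With the NVIDIA Container Toolkit installed, each container requests GPU access through Docker's `--gpus` flag. Several containers can be given the same GPUs at the same time. They then share the cards without any memory isolation between them. The sketch below only builds the `docker run` argument lists. The service names, image names and `NVIDIA_DRIVER_CAPABILITIES` values are assumptions to check against each project's documentation. Volumes, ports and other service-specific options are deliberately left out.

```python
import shlex
from typing import Dict, List, Sequence, Union

# Hypothetical service definitions: image names and env values are assumptions.
SERVICES: Dict[str, dict] = {
    "ollama": {"image": "ollama/ollama", "env": {}},
    "plex": {
        "image": "plexinc/pms-docker",
        # "video" exposes the driver's video codec libraries (NVENC/NVDEC) for transcoding.
        "env": {"NVIDIA_DRIVER_CAPABILITIES": "compute,video,utility"},
    },
    "frigate": {
        "image": "ghcr.io/blakeblackshear/frigate:stable",
        "env": {"NVIDIA_DRIVER_CAPABILITIES": "compute,video,utility"},
    },
}


def gpus_flag(gpus: Union[str, Sequence[int]]) -> str:
    """Value for `docker run --gpus`: "all", or a quoted device list such as "device=0,1"."""
    if gpus == "all":
        return "all"
    # The inner double quotes stop Docker from splitting the comma-separated device list.
    return '"device=' + ",".join(str(i) for i in gpus) + '"'


def docker_run_args(name: str, spec: dict, gpus: Union[str, Sequence[int]] = "all") -> List[str]:
    """Build the argument list for one GPU-enabled container."""
    args = ["docker", "run", "-d", "--name", name, "--gpus", gpus_flag(gpus)]
    for key, value in spec.get("env", {}).items():
        args += ["-e", f"{key}={value}"]
    args.append(spec["image"])
    return args


if __name__ == "__main__":
    # Print the commands for review instead of running them.
    for service_name, service_spec in SERVICES.items():
        print(shlex.join(docker_run_args(service_name, service_spec)))
```

Passing a list instead, for example `gpus=[1]`, would pin a service to the second card so that heavy LLM inference and video decoding do not compete for the same GPU.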

-

RE: CBT: the thread to centralize your feedback

We upgraded from commit f2188 to cb6cf. We have 3 hosts, and after the upgrade, backups stopped working on all of them. After reverting to f2188, everything works again.

We also deleted orphaned VDIs and let the garbage collector do its job, but it did not help.

Hosts/errors:

1.) XCP-ng 8.2.1: "VDI must be free or attached to exactly one VM"

2.) XCP-ng 8.2.1 (with latest updates): "VDI must be free or attached to exactly one VM"

3.) XenServer 7.1.0: "MESSAGE_METHOD_UNKNOWN(VDI.get_cbt_enabled)"

With XenServer 7.1.0, CBT is not enabled (and cannot be enabled).

-

Question: Delta backup retention vs. full backup interval

It is not fully clear to me whether a "full backup interval" is really needed (to prevent backups from becoming corrupted over time) with delta backups, which are supposed to be "continuous" and which merge new deltas automatically.

So is it really recommended to set a full backup interval with continuous delta backups?

And what does that mean in practice for disk usage? If, for example, we have a retention of 3 and a full backup interval of 5, will it mean:

1st backup: Full

2nd backup: Delta

3rd backup: Delta

4th backup: Delta (now the 2nd delta becomes the new "full")

5th backup: Full (now the 3rd delta becomes another "full", so we have 2 fulls?)

I am confused. Maybe you could help me understand this mechanism better.