Updated my HP DL360 G7 home lab with no problem at all.. Everything seems to work OK.. 8-)

Best posts made by Anonabhar

-

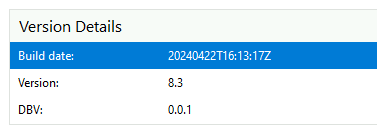

RE: XCP-ng 8.3 public alpha 🚀

-

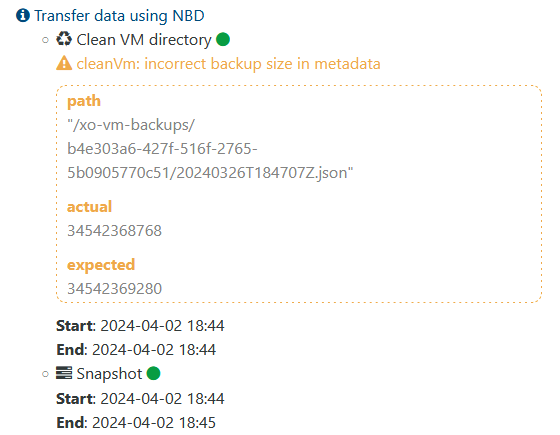

CleanVm incorrect backup size in metadata

Hi all,

I keep seeing this weird error in the logs when my incremental backups run every night. It doesn't appear to be causing a problem, but it is beginning to drive me a bit mental.

The backups complete without error, but the below is in my logs when I look through them.

I am using:

Any idea what this might be, or how I can clear them? I have about 40 VMs that are showing this problem LOL

-

RE: XCP-ng 8.3 betas and RCs feedback 🚀

OMG! I have just noticed that on the latest beta of 8.3 the Garbage Collector gives a percentage of completion in XO(a) "Tasks" and an estimated time to completion!

THANK YOU!! THANK YOU!! THANK YOU!!!

-

RE: XCP-ng Center and XCP-ng 8.3 beta

@borzel WooHoo! Success!! Thank you...

All my pools can be attached now.

-

RE: XCP-ng 8.1.0 beta now available!

@stormi - Thanks for the help.. Yup I removed both packages and now things are going as expected 8-)

Can't wait to try out the new build!

~Peg

-

RE: Remove a VM without Destroying the Disk

@JSylvia007 If you made the backup with a tool like XO / XOA then the backups should be unaffected, so long as they were proper backups and not just snapshots! LOL

If you are worried, just do a restore before you delete the original VM. It will have the date appended to the end of the VM name, so it will not overwrite the existing VM..

Latest posts made by Anonabhar

-

RE: XCP-ng 8.3 betas and RCs feedback 🚀

@marvine I don't know if that is true or not.. I am using 8.3 RC1 with GPU pass-through to NVIDIA Tesla cards with no problems (aside from the NVIDIA drivers being a pain in the backside to install under Linux).

-

RE: XCP-ng 8.3 betas and RCs feedback 🚀

@pierre-c I don't use iSCSI in my lab anymore, but yesterday when I did a "yum update" of 8.3 Beta, I got a script error while yum was running through all the updates. The script that failed was part of the sm update.

To be honest, I didn't bother doing anything about the error, as everything seemed to work OK for me afterwards, so I just ignored it. But.. Maybe I should have said something.. Sorry guys..

-

RE: CBT: the thread to centralize your feedback

For those who may be stuck, like I was: I have finally undone the coalesce nightmare that the previous CBT caused.

For the record: I am using XCP-ng 8.3 Beta, fully patched.

1. Shut down every VM and delete every snapshot.

2. Find every VDI that has CBT enabled and disable it. I did this with a simple bash loop (not the best, I know):

       # Pull the UUID out of each "uuid ( RO)" line and disable CBT on that VDI
       for i in $(xe vdi-list cbt-enabled=true | grep "^uuid ( RO)" | cut -d " " -f 20)
       do
           echo "$i"
           xe vdi-disable-cbt uuid="$i"
       done

3. Reboot the server.

4. Create a snapshot on any VM and immediately delete it. (If you just do a rescan, it says that the GC is running when it is not, but for whatever reason deleting a snapshot seems to kick in the GC regardless.)

5. Keep an eye on the SMLog and look for exceptions. I tend to do something like this (it will sleep for 5 minutes, so don't get anxious):

       tail -f /var/log/SMLog | grep SMGC

6. When it finishes, check XO to see if there are any remaining uncoalesced disks and repeat from step 4.
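For anyone repeating the CBT-disabling step above, here is a slightly more defensive sketch of the same loop. It assumes `xe vdi-list` is called with `params=uuid --minimal`, so the UUIDs come back as one comma-separated line and no column counting with `cut` is needed. The function names `list_cbt_vdis` and `disable_cbt_all` are made up for this example and are not part of XCP-ng.

```shell
# Sketch only: helper names are hypothetical, not part of XCP-ng or xe.

# Print one UUID per line for every VDI that has CBT enabled.
# Assumes --minimal returns a bare comma-separated list of UUIDs.
list_cbt_vdis() {
    xe vdi-list cbt-enabled=true params=uuid --minimal | tr ',' '\n' | sed '/^$/d'
}

# Disable CBT on each listed VDI; report a failure and carry on
# with the remaining VDIs instead of stopping at the first error.
disable_cbt_all() {
    list_cbt_vdis | while read -r uuid; do
        echo "Disabling CBT on $uuid"
        xe vdi-disable-cbt uuid="$uuid" </dev/null || echo "FAILED: $uuid" >&2
    done
}
```

After sourcing the snippet on the host, running `list_cbt_vdis` first shows what would be touched, and `disable_cbt_all` then does the work. As in the original post, do this with the VMs shut down.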

It took about 5 iterations of the above to finally clean up all the stuck coalesced leaves, but it eventually did it. The key, for me, was making sure the VMs were not running and turning CBT off.
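Since each iteration means watching SMLog for a while, a small filter can make exceptions easier to spot. This is only a sketch: `smgc_filter` is a hypothetical helper name, and it assumes nothing about the SMLog format beyond what the post relies on, namely that GC lines contain the string `SMGC` and that problems show up as lines mentioning "exception".

```shell
# Sketch only: smgc_filter is a hypothetical helper name.
# Reads log lines on stdin, keeps the ones mentioning SMGC,
# and prefixes any that mention an exception with "!! ".
smgc_filter() {
    grep --line-buffered 'SMGC' | awk '{
        if (tolower($0) ~ /exception/) print "!! " $0
        else print "   " $0
        fflush()
    }'
}
```

Typical use would be `tail -f /var/log/SMLog | smgc_filter`, the same pipeline as in the post with the flagged lines standing out.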

-

RE: CBT: the thread to centralize your feedback

I think I may have a bit of a similar problem here. About a week ago, I did an update to the broken version of XO and it threw the same error as is in the subject line here. I reverted and everything was OK, but then I started to get unhealthy VDI warnings on my backups.

I tried to rescan the SR and I would see in the SMLog that it believed another GC was running, so it would abort. Rebooting the host was the only way to force the coalesce to complete; however, as soon as the next incremental backup ran, it would hit the same problem (the GC thinking another one is running and not doing any work).

I then did a full power off of the host, rebooted, let all the VMs sit in a "powered off" state, rescanned the SR and let it coalesce. Once everything was idle, I deleted all snapshots and waited for the coalesce to finish. Only then did I restart the VMs. Now a few VMs have immediately come up as 'unhealthy' and once again the GC will not run, thinking there is another GC working..

I'm kind of running out of ideas 8-) Does anyone know what might be stuck or what I need to look for to find out?

Just a side note here. I noticed that all the VMs that I am having problems with have CBT enabled.

I have a VM that is a snapshot-only VM, and even when the coalesce is stuck, I can delete snapshots off this non-CBT VM and the coalesce process runs (then gives an exception when it gets to the VMs that have CBT enabled).

Is there a way to disable CBT?

-

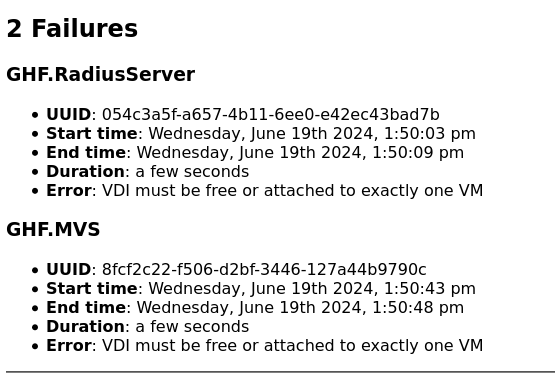

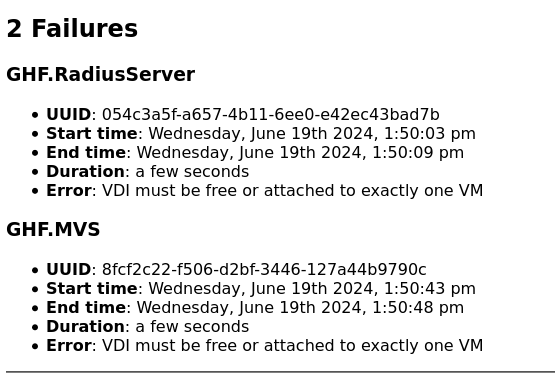

RE: starting getting again: ⚠️ Retry the VM backup due to an error Error: VDI must be free or attached to exactly one VM

Just a side note here. I noticed that all the VM's that I am having problems with have CBT enabled.

I have a VM that is a snapshot only VM and even when the coalesces is stuck, I can delete snapshots off this non-cbt VM and the coalesces process runs (then gives an exception when it gets to the VM's that have CBT enabled)

Is there a way to disable CBT?

-

RE: starting getting again: ⚠️ Retry the VM backup due to an error Error: VDI must be free or attached to exactly one VM

I think I may have a bit of a similar problem here. About a week ago, I did an update to the broken version of XO and it threw the same error as is in the subject line here. I reverted and everything was OK, but then I started to get unhealthy VDI warnings on my backups.

I tried to rescan the SR and I would see in the SMLog that it believed another GC was running, so it would abort. Rebooting the host was the only way to force the coalesce to complete; however as soon as the next inc-backup ran, it would go into the same problem (the GC thinking another is running and would no do any work).

I then did a full power off of the host, reboot and let all the VM's sit in a "powered off" state, rescanned the SR and let it coalesce. Once everything was idle, I then deleted all snapshots and waited for the coalesce to finish. Only then did I restart the VM's. Now a few VM's immediately have come up as 'unhealthy' and once again the GC will not run, thinking there is another GC working..

I'm kind of running out of idea's 8-) Does anyone know what might be stuck or what I need to look for to find out?

-

RE: XCP-ng 8.3 betas and RCs feedback 🚀

Actually, another cool feature I just noticed is that I am now able to take snapshots of my Windows 11 VMs with vTPM attached.. So.. The day is just getting better and better..

I haven't tried doing a backup / export yet.. I think that if it worked, I would be overwhelmed with too much good news for a single day and faint

LOL

-


RE: backups started failing: Error: VDI must be free or attached to exactly one VM

Oh.. Just a side note here: I went into the backup history and "restarted" the backups on the failed VMs, and both VMs backed up successfully. Huh...

-

RE: backups started failing: Error: VDI must be free or attached to exactly one VM

Hi guys, just chiming in that I am seeing the same problem.