XCP-ng 8.0.0 Beta now available!

-

Good morning, afternoon, evening or night to everybody.

The beta release of XCP-ng 8.0 is available right now.

What's new

- Based on Citrix Hypervisor 8.0 (see https://xen-orchestra.com/blog/xenserver-7-6/).

- New kernel (4.19), updated CentOS packages (7.2 => 7.5)

- Xen 4.11

- New hardware is supported thanks to the new kernel. Some older hardware is not supported anymore. It's still expected to work in most cases, but security cannot be guaranteed for it, especially against side-channel attacks on legacy Intel CPUs. See Citrix's Hardware Compatibility List. Note: in 7.6 we started providing alternate drivers for some hardware. That's something we intend to keep doing, so that we can extend the compatibility list whenever possible. See https://github.com/xcp-ng/xcp/wiki/Kernel-modules-policy.

- Experimental UEFI support for guests (not tested yet: have fun and report!)

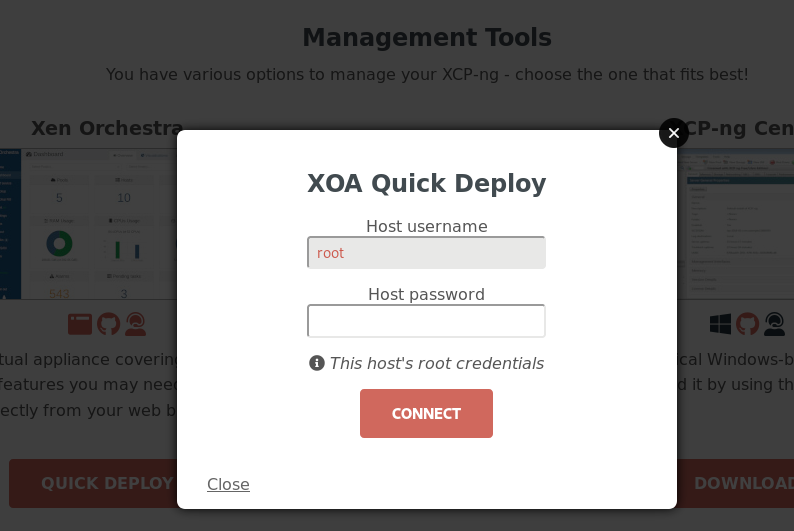

- An updated welcome HTML page with the ability to install Xen Orchestra Appliance directly from there (see below). Tell us how it works for you and what you think of it!

- A new implementation of the infamous emu-manager, rewritten in C. Test live migrations extensively!

- Mirrors: you can offer mirrors and yum now pulls from them: https://github.com/xcp-ng/xcp/wiki/Mirrors

- Already includes the latest patches for MDS attacks.

- The net-install installer now checks the signature of the downloaded RPMs against our GPG key, which is becoming more important now that we're delegating downloads to mirrors.

- Status of our experimental packages:

  - ext4 and xfs support for local SRs is still considered experimental, although no one has reported any issue with it. You still need to install an additional package for it to work: yum install sm-additional-drivers.

  - ZFS packages are now available in the main repositories, can be installed with a simple yum install zfs, and have been updated to version 0.8.1 (as of 2019-06-18), which removes the limitations we had with the previous version in XCP-ng 7.6.

  - No more modified qemu-dp for Ceph support, due to a stalled issue in 7.6 (the patches need to be updated). However, the newer kernel brings better support for Ceph and there's some documentation in the wiki: https://github.com/xcp-ng/xcp/wiki/Ceph-on-XCP-ng-7.5-or-later.

  - Alternate kernel: none available yet for 8.0, but it's likely that we'll provide one later.

- New repository structure: updates_testing, extras and extras_testing disappear, and there's now simply base, updates and testing. Extra packages are now in the same repos as the main packages, for simpler installation and upgrades.

- It's our first release with close to 100% of the packages rebuilt in our build infrastructure, which is Koji: https://koji.xcp-ng.org. Getting to this stage was a long path, so even if it changes nothing for users, it's a big step for us. For the curious, more about the build process at https://github.com/xcp-ng/xcp/wiki/Development-process-tour.
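To confirm which of the new repositories (base, updates, testing) a host actually has enabled, one option is to parse the yum .repo file. A minimal Python sketch using configparser; the file location (somewhere under /etc/yum.repos.d/) and the section names and URLs used in the test are hypothetical placeholders, not the real XCP-ng repo definitions. On a host, yum repolist enabled answers the same question directly.

```python
import configparser


def enabled_repos(repo_text: str) -> dict:
    """Return {section: baseurl (or mirrorlist)} for every enabled repo.

    yum treats a repo without an 'enabled' key as enabled, hence fallback=True.
    """
    # interpolation=None so '%' or '$releasever'-style values are kept verbatim
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(repo_text)
    result = {}
    for section in parser.sections():
        if parser.getboolean(section, "enabled", fallback=True):
            url = parser.get(section, "baseurl", fallback=None)
            if url is None:
                url = parser.get(section, "mirrorlist", fallback="")
            result[section] = url
    return result
```

Usage: pass it the text of the .repo file, e.g. enabled_repos(open(path).read()), where path is whichever file your host uses.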

Documentation

At this stage you should be aware that our main documentation is our wiki, and that you can all take part in completing it. It has improved a lot since the previous release, but there's still a lot to improve.

How to upgrade

As usual, there should be two different upgrade methods: classical upgrade via the installation ISO, or upgrade using yum. However, the yum-style upgrade is not ready yet. Since this is a major release, Citrix does not support updating using an update ISO, and the consequence for us is that the RPMs have not been carefully crafted for a clean update. So it's all on us, and we plan to have this ready for the RC (release candidate).

Before upgrading, remember that this is a pre-release, so the risks are higher than with a final release. But if you can take the risk, please do and tell us how it went and what method you used! That said, we've been testing it internally with success, and xcp-ng.org (which includes this forum, the main repository and the mirror redirector) already runs on XCP-ng 8.0!

Although not updated yet, the Upgrade Howto remains mostly valid (except the part about the yum-style upgrade, as said above).

- Standard ISO: http://mirrors.xcp-ng.org/isos/8.0/xcp-ng-8.0.0-beta.iso

- Net-install ISO: http://mirrors.xcp-ng.org/isos/8.0/xcp-ng-8.0.0-netinstall-beta.iso

- Do not use the pre-filled URL which still points at XCP-ng 7.6. Use this instead: http://mirrors.xcp-ng.org/netinstall/8.0

- SHA256SUMS: http://mirrors.xcp-ng.org/isos/8.0/SHA256SUMS

- Signatures of the sums: http://mirrors.xcp-ng.org/isos/8.0/SHA256SUMS.asc

It's a good habit to check your downloaded ISO against the SHA256 sum and, for better security, to also check the signature of those sums. Although our mirror redirector does try to detect file changes on mirrors, you should always assume that a mirror (or, in the worst case, our source mirror) could get hacked and serve both fake ISOs and fake SHA256 sums. But attackers can't fake the signature.
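The SHA256 part of that check is easy to script. A minimal Python sketch, assuming SHA256SUMS uses the usual sha256sum layout (hex digest, whitespace, file name). It does not replace the signature check, which with GnuPG is typically gpg --verify SHA256SUMS.asc SHA256SUMS once our public key has been imported.

```python
import hashlib
from pathlib import Path


def parse_sha256sums(text: str) -> dict:
    """Map file name -> expected hex digest from sha256sum-style lines."""
    sums = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        digest, name = line.split(maxsplit=1)
        # sha256sum prefixes the name with '*' for binary-mode entries
        if name.startswith("*"):
            name = name[1:]
        sums[name] = digest.lower()
    return sums


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so a large ISO is never fully loaded in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_iso(iso_path: Path, sums_path: Path) -> bool:
    """True if iso_path's digest matches its entry in sums_path."""
    sums = parse_sha256sums(sums_path.read_text())
    expected = sums.get(iso_path.name)
    if expected is None:
        raise KeyError(f"{iso_path.name} is not listed in {sums_path}")
    return sha256_of(iso_path) == expected
```

For example, verify_iso(Path("xcp-ng-8.0.0-beta.iso"), Path("SHA256SUMS")) returns True only when the downloaded file matches its listed digest.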

Stay up to date

Run yum update regularly. We'll keep fixing detected bugs until the release candidate. Subscribe to this thread (and make sure to activate mail notifications in the forum parameters if you need them): we'll announce every update to the beta here.

What to test

Everything!

Report or ask anything as replies to this thread.

A community effort to list things to be tested has been started at https://github.com/xcp-ng/xcp/wiki/Test-XCP

-

Installing Xen Orchestra Appliance directly from the browser

The Xen Orchestra team has provided us with a way to install XOA directly from your browser by simply visiting the IP address of your freshly installed host.

Try it!

-

Niiicce

Especially the new quick XOA deployment, that is more turnkey than ever


I will migrate to XCP-ng 8.0 in my lab this weekend and start providing some tests.

-

I'll do whatever testing I can with the limited resources I / my team has at the moment.

I'll update this comment with any tests I perform.

Hardware

- HPE BL460c Gen9, Xeon E5-2690v4, 256GB, Latest firmware (22/05/19), HT disabled

- Local storage: 2x Crucial MX300 SSDs in hardware RAID 1

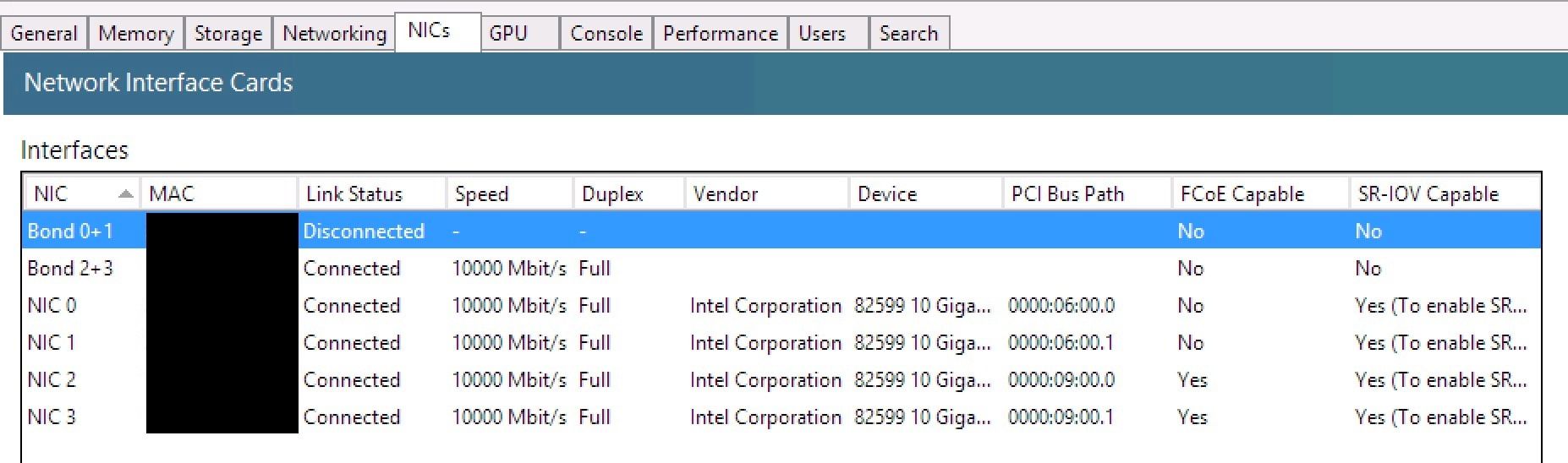

- Network cards: Dual port HPE 560M, Dual port HPE 560FLB (both Intel 82599)

Software

- XCP-ng Centre 8.0 (stable) was used throughout testing.

- Client VM is CentOS 7.6

Test 1: ISO upgrade from fresh XCP-ng 7.6 install

- Fresh Install XCP-ng 7.6.

- Configured only the management interface, left as DHCP.

- Install XCP-ng 7.6 updates and reboot.

- Reboot off XCP-ng 8 Beta ISO and select upgrade from 7.6.

- Upgrade completed, rebooted server.

- Connected with XCP-ng Centre.

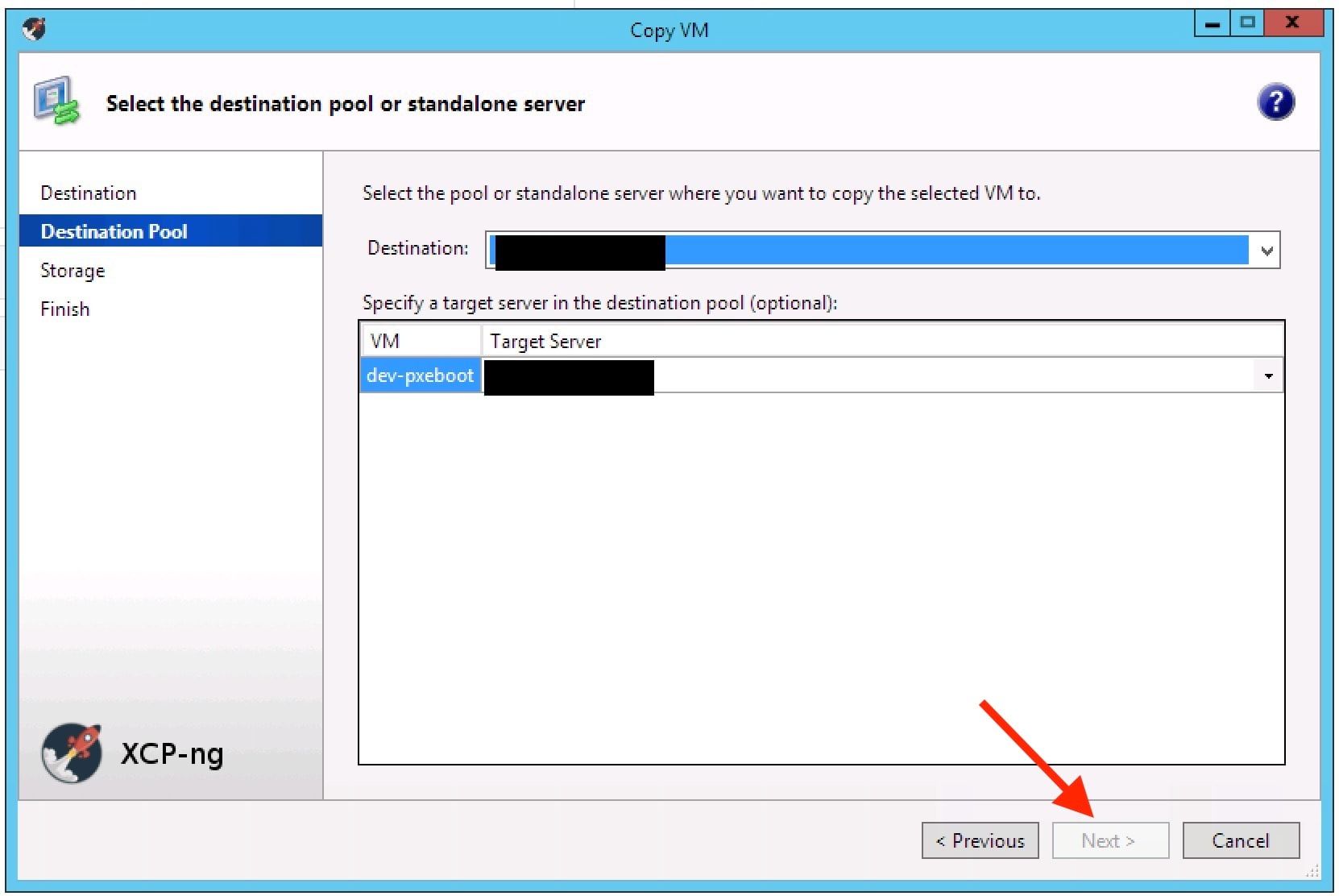

- Tried to copy VM cross-pool from XCP-ng 7.6 to the new standalone XCP-ng 8 beta host

- Looked like it was going to work, but I wanted to set up network bonding and VLANs first, so I cancelled.

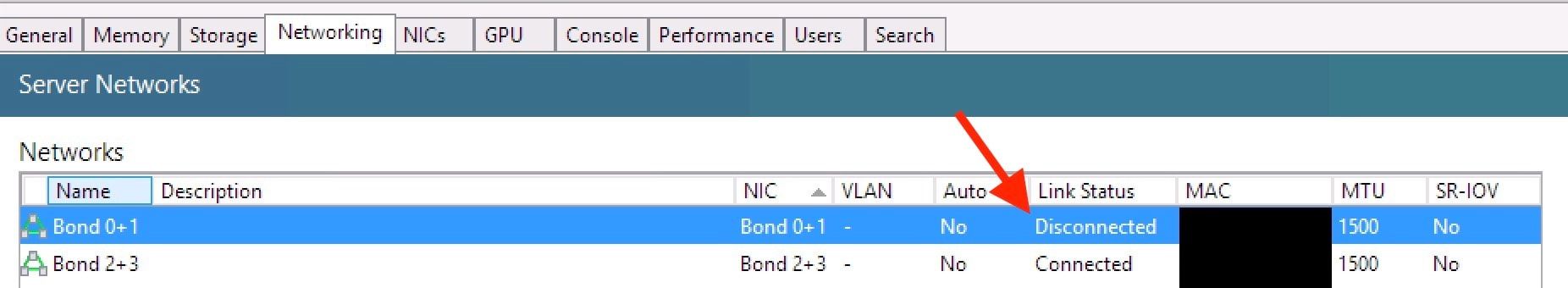

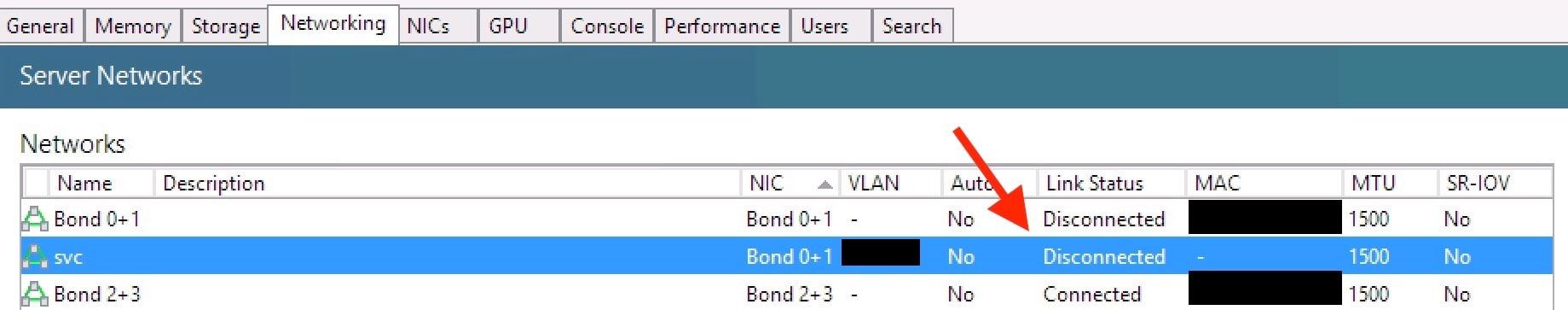

- Created a 2x 10Gbit (20Gbit) LACP bond on the non-management NICs. (Failed Test)

- After 1-3 minutes the bond showed up, but in a disconnected state.

- Waited 10 minutes, no change, nothing in xe task-list.

- Rebooted host.

- Tried deleting and re-creating the bond.

- Bond still showing as disconnected (Failed Test):

    # ovs-appctl bond/show bond0
    ---- bond0 ----
    bond_mode: balance-slb
    bond may use recirculation: no, Recirc-ID : -1
    bond-hash-basis: 0
    updelay: 31000 ms
    downdelay: 200 ms
    next rebalance: 6113 ms
    lacp_status: negotiated
    active slave mac: 00:00:00:00:00:00(none)

    slave eth0: disabled
      may_enable: false

    slave eth1: disabled
      may_enable: false

- Switches (Juniper EX4550) show:

  - Physical links: up
  - Bond: down (this should be up)
  - Received state: defaulted (this should be current), which means that the switches "did not receive an aggregation control PDU within the LACP timeout * 2".
  - MUX state: detached (should be collecting distributing).

- Note: this is the same config as on our 7.6 pools, and as it was when those pools ran XenServer.

- Completely powered off the server, then powered it on again: strangely enough, this fixed the issue!

- Leaving for now, continuing other testing.

- Created a 2x 10Gbit, Active/Passive bond on the management NICs.

- Bond created and connected within 30 seconds.

- Tried to copy VM cross-pool from XCP-ng 7.6 to the new standalone XCP-ng 8 beta host (Failed Test)

- The Next button was greyed out this time, but I couldn't see any issues. (Failed Test)

- Closed and re-opened XCP-ng Centre and tried again: this time Next was available when trying to copy cross-pool, but I selected Cancel to test again.

- This time the Next button was greyed out again, but I couldn't see any issues. (Failed Test)

- Leaving for now, continuing other testing.

- Added a SMB based ISO SR.

- Created a CentOS 7 VM, installed from the latest CentOS 7 ISO on the ISO SR to local storage.

- VM booted; installed Xen tools and rebooted, all fine.

- No networking in the CentOS 7 VM due to the XCP-ng bond0 not functioning, so could not test in-VM functions any further.

- Suspending the VM failed. (Failed Test)

- Started VM successfully.

- Took a VM snapshot successfully.

- Deleted the snapshot successfully.

- Shut down VM successfully.
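Reading the ovs-appctl bond/show output by eye gets tedious when repeating bond tests. A minimal Python sketch that parses that text and lists what looks wrong; the parsing rules are inferred from the output pasted in this report (key: value lines, then one "slave NAME: STATE" block per NIC), and it also accepts "member", which newer Open vSwitch releases are assumed to print instead of "slave". It only parses text you give it; it does not talk to Open vSwitch.

```python
import re

# "slave eth0: disabled" (or "member eth0: disabled" on newer OVS, assumed)
NIC_RE = re.compile(r"^(?:slave|member) (\S+): (\w+)$")


def parse_bond_show(text: str) -> dict:
    """Split `ovs-appctl bond/show` text into bond-level fields and per-NIC info."""
    fields, nics = {}, {}
    current = None  # NIC whose block we are in; None while in the bond header
    for raw in text.splitlines():
        line = raw.strip()
        match = NIC_RE.match(line)
        if match:
            current = match.group(1)
            nics[current] = {"state": match.group(2)}
        elif ":" in line and not line.startswith("#"):  # skip the shell prompt line
            key, _, value = line.partition(":")
            target = nics[current] if current else fields
            target[key.strip()] = value.strip()
    return {"fields": fields, "nics": nics}


def bond_problems(parsed: dict) -> list:
    """Human-readable reasons why the bond looks unhealthy (empty list if none)."""
    problems = []
    for name, info in parsed["nics"].items():
        if info["state"] != "enabled":
            problems.append(
                f"{name} is {info['state']} (may_enable: {info.get('may_enable', '?')})"
            )
    fields = parsed["fields"]
    mac = fields.get("active slave mac") or fields.get("active member mac", "")
    if mac.startswith("00:00:00:00:00:00"):
        problems.append("no active NIC (active MAC is all zeros)")
    return problems
```

Fed the bond0 output from this test, bond_problems reports both NICs as disabled plus the missing active NIC, which matches what the switches saw.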

From https://github.com/xcp-ng/xcp/wiki/Test-XCP

Test XCP - Results

- Verify installation.

- Verify connectivity with your interfaces. - Pass, but the server had to be powered off and on again (a reboot was not enough); will test again.

- Verify connectivity to shared storage. - Cannot test: there is no spare SAN LUN I can use at the test location at present.

- Verify creation of a new Linux VM (install guest tools).

- Verify creation of a new Windows VM (install guest tools). - We don't run Windows.

- Verify basic VM functionality (start, reboot, suspend, shutdown).

- Verify migration of a VM from a host to another. - Cannot test: there is no second XCP-ng 8.0 host available at the test location at present.

- Verify migration of a VM from an old host to this release. - FAIL

- Verify migration of a VM from a newer host to an old one (this test should fail).

- Check your logs for uncommon info or warnings. - Yet to test.

Performance Test

- Compare speed of write/read of disks in the old and in the new release - Yet to test.

- Compare speed of interfaces in the old and in the new release - Yet to test.
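For the disk comparison, a rough first number can be obtained without extra tools. A minimal Python sketch, assuming it runs inside a VM whose disk sits on the SR being compared, and that the target path is a scratch file you can overwrite; a dedicated tool such as fio gives far more controlled results. Read figures taken right after a write mostly measure the page cache unless caches are dropped first (as root: echo 3 > /proc/sys/vm/drop_caches).

```python
import os
import time
from pathlib import Path


def sequential_write_mib_s(path: Path, size_mib: int = 256, block_kib: int = 1024) -> float:
    """Write about size_mib MiB in block_kib KiB blocks, fsync, and return MiB/s."""
    block = os.urandom(block_kib * 1024)  # random data, so compression can't inflate the result
    blocks = max(1, (size_mib * 1024) // block_kib)
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # count the time to reach the disk, not just the page cache
    elapsed = time.perf_counter() - start
    return (blocks * block_kib / 1024) / elapsed


def sequential_read_mib_s(path: Path, block_kib: int = 1024) -> float:
    """Read the whole file in block_kib KiB blocks and return MiB/s."""
    size_mib = path.stat().st_size / (1024 * 1024)
    start = time.perf_counter()
    with open(path, "rb") as f:
        while f.read(block_kib * 1024):
            pass
    elapsed = time.perf_counter() - start
    return size_mib / elapsed
```

Running the same script with the same sizes on a 7.6 host and an 8.0 host gives comparable, if rough, numbers.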

-

Some great news: with the following setup, my XCP-ng VM is passing spectre-meltdown-checker, including the latest ZombieLoad etc.

I have never seen any XenServer or XCP-ng VM pass all these tests before.

- XCP-ng 8.0 beta

- Xeon E5-2690v4

- Hyperthreading Disabled (In BIOS)

- VM: CentOS 7.6

- VM: All updates installed

- VM: Kernel 5.1.4-1 (elrepo kernel-ml)
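spectre-meltdown-checker reports several checks as "Mitigated according to the /sys interface". A minimal Python sketch that reads that same kernel interface, /sys/devices/system/cpu/vulnerabilities, on a recent Linux kernel. It only shows what the guest kernel believes about itself, not what the hypervisor does, so it is a quick complement to the checker rather than a replacement.

```python
from pathlib import Path

VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def read_vulnerabilities(base: Path = VULN_DIR) -> dict:
    """Map each file name (meltdown, mds, l1tf, ...) to the kernel's status line."""
    if not base.is_dir():
        return {}  # older kernels do not provide this directory
    return {
        entry.name: entry.read_text().strip()
        for entry in sorted(base.iterdir())
        if entry.is_file()
    }


def unmitigated(status: dict) -> list:
    """Names whose status starts with 'Vulnerable' (neither mitigated nor unaffected)."""
    return [name for name, text in status.items() if text.startswith("Vulnerable")]
```

On a VM like the one above, unmitigated(read_vulnerabilities()) should come back empty.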

spectre-meltdown-checker output:

    [root@pm-samm-dev-01 ~]# ./spectre-meltdown-checker.sh
    Spectre and Meltdown mitigation detection tool v0.41

    Checking for vulnerabilities on current system
    Kernel is Linux 5.1.4-1.el7.elrepo.x86_64 #1 SMP Wed May 22 08:12:02 EDT 2019 x86_64
    CPU is Intel(R) Xeon(R) CPU E5-2690 v4 @ 2.60GHz

    Hardware check
    * Hardware support (CPU microcode) for mitigation techniques
      * Indirect Branch Restricted Speculation (IBRS)
        * SPEC_CTRL MSR is available: YES
        * CPU indicates IBRS capability: YES (SPEC_CTRL feature bit)
      * Indirect Branch Prediction Barrier (IBPB)
        * PRED_CMD MSR is available: YES
        * CPU indicates IBPB capability: YES (SPEC_CTRL feature bit)
      * Single Thread Indirect Branch Predictors (STIBP)
        * SPEC_CTRL MSR is available: YES
        * CPU indicates STIBP capability: YES (Intel STIBP feature bit)
      * Speculative Store Bypass Disable (SSBD)
        * CPU indicates SSBD capability: YES (Intel SSBD)
      * L1 data cache invalidation
        * FLUSH_CMD MSR is available: YES
        * CPU indicates L1D flush capability: YES (L1D flush feature bit)
      * Microarchitecture Data Sampling
        * VERW instruction is available: YES (MD_CLEAR feature bit)
      * Enhanced IBRS (IBRS_ALL)
        * CPU indicates ARCH_CAPABILITIES MSR availability: NO
        * ARCH_CAPABILITIES MSR advertises IBRS_ALL capability: NO
      * CPU explicitly indicates not being vulnerable to Meltdown (RDCL_NO): NO
      * CPU explicitly indicates not being vulnerable to Variant 4 (SSB_NO): NO
      * CPU/Hypervisor indicates L1D flushing is not necessary on this system: NO
      * Hypervisor indicates host CPU might be vulnerable to RSB underflow (RSBA): NO
      * CPU explicitly indicates not being vulnerable to Microarchitectural Data Sampling (MDC_NO): NO
      * CPU supports Software Guard Extensions (SGX): NO
      * CPU microcode is known to cause stability problems: NO (model 0x4f family 0x6 stepping 0x1 ucode 0xb000036 cpuid 0x406f1)
      * CPU microcode is the latest known available version: YES (latest version is 0xb000036 dated 2019/03/02 according to builtin MCExtractor DB v110 - 2019/05/11)
    * CPU vulnerability to the speculative execution attack variants
      * Vulnerable to CVE-2017-5753 (Spectre Variant 1, bounds check bypass): YES
      * Vulnerable to CVE-2017-5715 (Spectre Variant 2, branch target injection): YES
      * Vulnerable to CVE-2017-5754 (Variant 3, Meltdown, rogue data cache load): YES
      * Vulnerable to CVE-2018-3640 (Variant 3a, rogue system register read): YES
      * Vulnerable to CVE-2018-3639 (Variant 4, speculative store bypass): YES
      * Vulnerable to CVE-2018-3615 (Foreshadow (SGX), L1 terminal fault): NO
      * Vulnerable to CVE-2018-3620 (Foreshadow-NG (OS), L1 terminal fault): YES
      * Vulnerable to CVE-2018-3646 (Foreshadow-NG (VMM), L1 terminal fault): YES
      * Vulnerable to CVE-2018-12126 (Fallout, microarchitectural store buffer data sampling (MSBDS)): YES
      * Vulnerable to CVE-2018-12130 (ZombieLoad, microarchitectural fill buffer data sampling (MFBDS)): YES
      * Vulnerable to CVE-2018-12127 (RIDL, microarchitectural load port data sampling (MLPDS)): YES
      * Vulnerable to CVE-2019-11091 (RIDL, microarchitectural data sampling uncacheable memory (MDSUM)): YES

    CVE-2017-5753 aka 'Spectre Variant 1, bounds check bypass'
    * Mitigated according to the /sys interface: YES (Mitigation: __user pointer sanitization)
    * Kernel has array_index_mask_nospec: UNKNOWN (missing 'perl' binary, please install it)
    * Kernel has the Red Hat/Ubuntu patch: NO
    * Kernel has mask_nospec64 (arm64): UNKNOWN (missing 'perl' binary, please install it)
    * Checking count of LFENCE instructions following a jump in kernel... NO (only 5 jump-then-lfence instructions found, should be >= 30 (heuristic))
    > STATUS: NOT VULNERABLE (Mitigation: __user pointer sanitization)

    CVE-2017-5715 aka 'Spectre Variant 2, branch target injection'
    * Mitigated according to the /sys interface: YES (Mitigation: Full generic retpoline, IBPB: conditional, IBRS_FW, STIBP: disabled, RSB filling)
    * Mitigation 1
      * Kernel is compiled with IBRS support: YES
        * IBRS enabled and active: YES (for firmware code only)
      * Kernel is compiled with IBPB support: YES
        * IBPB enabled and active: YES
    * Mitigation 2
      * Kernel has branch predictor hardening (arm): NO
      * Kernel compiled with retpoline option: YES
        * Kernel compiled with a retpoline-aware compiler: YES (kernel reports full retpoline compilation)
    > STATUS: NOT VULNERABLE (Full retpoline + IBPB are mitigating the vulnerability)

    CVE-2017-5754 aka 'Variant 3, Meltdown, rogue data cache load'
    * Mitigated according to the /sys interface: YES (Mitigation: PTI)
    * Kernel supports Page Table Isolation (PTI): YES
      * PTI enabled and active: YES
      * Reduced performance impact of PTI: YES (CPU supports INVPCID, performance impact of PTI will be greatly reduced)
    * Running as a Xen PV DomU: NO
    > STATUS: NOT VULNERABLE (Mitigation: PTI)

    CVE-2018-3640 aka 'Variant 3a, rogue system register read'
    * CPU microcode mitigates the vulnerability: YES
    > STATUS: NOT VULNERABLE (your CPU microcode mitigates the vulnerability)

    CVE-2018-3639 aka 'Variant 4, speculative store bypass'
    * Mitigated according to the /sys interface: YES (Mitigation: Speculative Store Bypass disabled via prctl and seccomp)
    * Kernel supports disabling speculative store bypass (SSB): YES (found in /proc/self/status)
    * SSB mitigation is enabled and active: YES (per-thread through prctl)
    * SSB mitigation currently active for selected processes: NO (no process found using SSB mitigation through prctl)
    > STATUS: NOT VULNERABLE (Mitigation: Speculative Store Bypass disabled via prctl and seccomp)

    CVE-2018-3615 aka 'Foreshadow (SGX), L1 terminal fault'
    * CPU microcode mitigates the vulnerability: N/A
    > STATUS: NOT VULNERABLE (your CPU vendor reported your CPU model as not vulnerable)

    CVE-2018-3620 aka 'Foreshadow-NG (OS), L1 terminal fault'
    * Mitigated according to the /sys interface: YES (Mitigation: PTE Inversion)
    * Kernel supports PTE inversion: YES (found in kernel image)
    * PTE inversion enabled and active: YES
    > STATUS: NOT VULNERABLE (Mitigation: PTE Inversion)

    CVE-2018-3646 aka 'Foreshadow-NG (VMM), L1 terminal fault'
    * Information from the /sys interface:
    * This system is a host running a hypervisor: NO
    * Mitigation 1 (KVM)
      * EPT is disabled: N/A (the kvm_intel module is not loaded)
    * Mitigation 2
      * L1D flush is supported by kernel: YES (found flush_l1d in /proc/cpuinfo)
      * L1D flush enabled: UNKNOWN (unrecognized mode)
      * Hardware-backed L1D flush supported: YES (performance impact of the mitigation will be greatly reduced)
      * Hyper-Threading (SMT) is enabled: NO
    > STATUS: NOT VULNERABLE (this system is not running a hypervisor)

    CVE-2018-12126 aka 'Fallout, microarchitectural store buffer data sampling (MSBDS)'
    * Mitigated according to the /sys interface: YES (Mitigation: Clear CPU buffers; SMT Host state unknown)
    * CPU supports the MD_CLEAR functionality: YES
    * Kernel supports using MD_CLEAR mitigation: YES (md_clear found in /proc/cpuinfo)
    * Kernel mitigation is enabled and active: YES
    * SMT is either mitigated or disabled: NO
    > STATUS: NOT VULNERABLE (Mitigation: Clear CPU buffers; SMT Host state unknown)

    CVE-2018-12130 aka 'ZombieLoad, microarchitectural fill buffer data sampling (MFBDS)'
    * Mitigated according to the /sys interface: YES (Mitigation: Clear CPU buffers; SMT Host state unknown)
    * CPU supports the MD_CLEAR functionality: YES
    * Kernel supports using MD_CLEAR mitigation: YES (md_clear found in /proc/cpuinfo)
    * Kernel mitigation is enabled and active: YES
    * SMT is either mitigated or disabled: NO
    > STATUS: NOT VULNERABLE (Mitigation: Clear CPU buffers; SMT Host state unknown)

    CVE-2018-12127 aka 'RIDL, microarchitectural load port data sampling (MLPDS)'
    * Mitigated according to the /sys interface: YES (Mitigation: Clear CPU buffers; SMT Host state unknown)
    * CPU supports the MD_CLEAR functionality: YES
    * Kernel supports using MD_CLEAR mitigation: YES (md_clear found in /proc/cpuinfo)
    * Kernel mitigation is enabled and active: YES
    * SMT is either mitigated or disabled: NO
    > STATUS: NOT VULNERABLE (Mitigation: Clear CPU buffers; SMT Host state unknown)

    CVE-2019-11091 aka 'RIDL, microarchitectural data sampling uncacheable memory (MDSUM)'
    * Mitigated according to the /sys interface: YES (Mitigation: Clear CPU buffers; SMT Host state unknown)
    * CPU supports the MD_CLEAR functionality: YES
    * Kernel supports using MD_CLEAR mitigation: YES (md_clear found in /proc/cpuinfo)
    * Kernel mitigation is enabled and active: YES
    * SMT is either mitigated or disabled: NO
    > STATUS: NOT VULNERABLE (Mitigation: Clear CPU buffers; SMT Host state unknown)

    > SUMMARY: CVE-2017-5753:OK CVE-2017-5715:OK CVE-2017-5754:OK CVE-2018-3640:OK CVE-2018-3639:OK CVE-2018-3615:OK CVE-2018-3620:OK CVE-2018-3646:OK CVE-2018-12126:OK CVE-2018-12130:OK CVE-2018-12127:OK CVE-2019-11091:OK

-

As per https://xcp-ng.org/forum/post/12028

It looks like vDSO support (available from Xen 4.8 onwards, and able to greatly improve the performance of highly multithreaded VM workloads):

- Isn't enabled under XCP-ng 8.0 by default

- When you enable it, it doesn't seem to work (see https://github.com/sammcj/vdso-test-results/blob/master/xeon/Xeon E5-2690-v4/XCP-ng-8.0-beta1-vDSO-tsc-2019-05-23-vm-kernel-5.1.4.el7.md)

- The default clocksource for VMs is still xen, although it's recommended to use tsc under Xen virtualisation these days. [1] [2]
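To see which clocksource a Linux VM is using and whether tsc is offered at all, a minimal Python sketch that reads the standard sysfs files under /sys/devices/system/clocksource/clocksource0. Switching at runtime is done as root by writing the name into that directory's current_clocksource file (for example echo tsc > /sys/devices/system/clocksource/clocksource0/current_clocksource), and persistently with the clocksource=tsc kernel parameter. Whether tsc is the right choice on a given host is what references [1] and [2] discuss; this sketch only reports the state.

```python
from pathlib import Path

CLOCKSOURCE_DIR = Path("/sys/devices/system/clocksource/clocksource0")


def clocksource_info(base: Path = CLOCKSOURCE_DIR) -> tuple:
    """Return (current clocksource, list of available clocksources)."""
    current = (base / "current_clocksource").read_text().strip()
    available = (base / "available_clocksource").read_text().split()
    return current, available


def can_switch_to_tsc(base: Path = CLOCKSOURCE_DIR) -> bool:
    """True if the VM is not on tsc yet but the kernel offers it."""
    current, available = clocksource_info(base)
    return current != "tsc" and "tsc" in available
```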

[1] https://news.ycombinator.com/item?id=13813936

[2] https://aws.amazon.com/premiumsupport/knowledge-center/manage-ec2-linux-clock-source/

-

@s_mcleod the issue with the Next button is an upstream bug and will be fixed in XCP-ng Center 8.0.1.

-

@borzel good catch!

Thanks,

-

So VM.suspend is failing, right? I wonder if it's reproducible in CH 8.0; I think we saw the same thing here in XCP-ng.

I'll update our few hosts running XS 7.6 to CH 8.0 next week, so we'll be able to compare.

-

Thanks for your hard work!

Just did my first upgrade with the ISO from XCP-NG 7.6 (VirtualBox 6.08 VM). The upgrade went without a problem. My ext4 datastore was detached after the upgrade and could not be attached, because the "sm-additional-drivers" package is not installed by default (as written in your What's new section).

After "yum install sm-additional-drivers" and a toolstack restart it was working again.

Installing the container package with "yum install xscontainer" also went without problems. I imported a coreos.vhd with XCP-NG Center 8, converted the VM from HVM to PV with XCP-NG Center, and could start it.

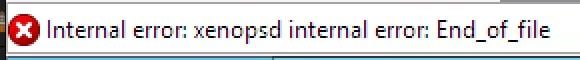

I can confirm the problem with suspending the VM; I get the same error (xenopsd End_of_file).

-

It's a little strange to see older but capable CPUs dropped from the list. The old Opteron 2356 I have didn't make the cut, even though it works just fine in 7.6. I'm still going to try out 8.0 anyways.

I understand that vendors don't want to "extend support forever", but it's silly when you have 10+ year old hardware that runs fine, and the only limitation really comes down to "hardware feature XYZ is a requirement". But so far I haven't seen the actual minimum CPU requirement, which makes me a bit suspicious about "it won't run".

-

@apayne said in XCP-ng 8.0.0 Beta now available!:

Opteron 2356

That's a 12 year old CPU so I don't see any issue with dropping it.

-

@DustinB said in XCP-ng 8.0.0 Beta now available!:

That's a 12 year old CPU so I don't see any issue with dropping it.

As I said, 10+ year old hardware; and I also said, I get that vendors want to draw lines in the sand so they don't end up supporting everything under the sun. It's good business sense to limit expenditures to equipment that is commonly used.

But my (poorly articulated from the last posting) point remains: there isn't a known or posted reason why the software forces me to drop the CPU. Citrix just waved their hands and said "these don't work anymore". Well, I suspect it really does work, and this is just the side-effect of a vendor cost-cutting decision for support that has nothing to do with XCP-ng, but unfortunately impacts it anyways. So it's worth a try, and if it fails, so be it - at least there will be a known reason why, instead of the Citrix response of "nothing to see here, move along..."

XCP-ng is a killer deal, probably THE killer deal when viewed through the lens of a home lab.

That makes it hard to justify shelling out money for Windows Hyper-V or VMware when there is a family to feed and rent to pay. Maybe that explains why I am so keen on seeing if Citrix really did make changes that prevent it from running.

Fail or succeed, either way, it'll be more information to be contributed back to the community here, and something will be learned. That's a positive outcome all the way around.

-

Regarding Citrix's choice: I think they can't really communicate publicly about the reasons. It might be related to security issues and some CPU vendors not updating microcode anymore.

That's why it should technically still work, but Citrix won't be liable for any security breach due to an "old" CPU.

Regarding XCP-ng: as long as it just works, and you don't pay for support, I think you'll be fine for your home lab.

Please report if you have any issue with 8.0 on your old hardware; we'll try to help as far as we can.

-

My experience with "XOA Quick Deploy"

It failed, because I need to use a proxy. I opened an issue here:

https://github.com/xcp-ng/xcp/issues/193

-

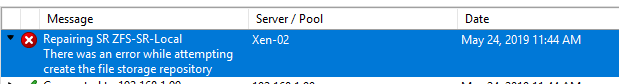

Installed fine on one of my Intel X56XX-based systems, and the system boots into XCP-ng 8.0, although the ZFS local storage wasn't mounted and a repair fails as well.

modprobe zfs fails with "module zfs not found"

Is there any updated ZFS documentation for 8.0 that would help?

-

zfs is not part of the default installation, so you need to install it manually using yum from our repositories. Documentation on the wiki has not been updated yet for 8.0: https://github.com/xcp-ng/xcp/wiki/ZFS-on-XCP-ng

Now the zfs packages are directly in our main repositories, so there's no need to add extra --enablerepo options. Just yum install zfs.

I've just built an updated zfs package (new major version 0.8.0 instead of the 0.7.3 that was initially available in the repos for the beta) in the hope that it solves some of the issues we had with previous versions (such as VDI export, or the need to patch some packages to remove the use of O_DIRECT). I'm just waiting for the main mirror to sync to test that it installs fine.

-

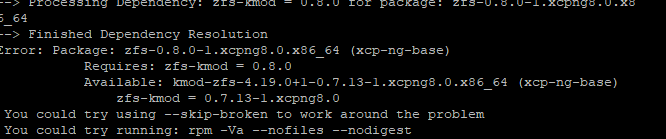

@stormi Thanks for the quick reply. I tried yum install zfs and it errors with the following:

-

That's why I said I'm waiting for the main mirror to sync.

And the build machine is having an "I'm feeling all slow" moment.

-

@stormi OK... silly me. I'll wait for you to give the A-OK.
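When modprobe zfs reports "module zfs not found", the module is simply not installed for the running kernel. A minimal Python sketch for checking that after the install, assuming the standard Linux layout (module files under /lib/modules/<running kernel release>, loaded modules listed in /proc/modules). It is only a diagnostic; the fix is still yum install zfs once the mirror has synced.

```python
import os
from pathlib import Path
from typing import Optional


def module_files(name: str, release: Optional[str] = None,
                 root: Path = Path("/lib/modules")) -> list:
    """Module files (name.ko, name.ko.xz, ...) for the given or running kernel."""
    release = release or os.uname().release
    base = root / release
    if not base.is_dir():
        return []
    # rglob also covers extra/ and updates/ subdirectories used by add-on packages
    return sorted(p for p in base.rglob(f"{name}.ko*") if p.is_file())


def module_loaded(name: str, proc_modules: Path = Path("/proc/modules")) -> bool:
    """True if name appears as a loaded module (first column of /proc/modules)."""
    try:
        lines = proc_modules.read_text().splitlines()
    except FileNotFoundError:
        return False
    return any(line.split()[0] == name for line in lines if line.strip())
```

If module_files("zfs") is empty after installing, the package was built for a different kernel than the one running; if it is non-empty but module_loaded("zfs") is False, modprobe zfs should now succeed.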