Problem with file-level restore of a delta backup from an LVM partition

-

Hi

Also not working on XO Server:

    $ df -Th
    Filesystem                        Type      Size  Used Avail Use% Mounted on
    udev                              devtmpfs  3,9G     0  3,9G   0% /dev
    tmpfs                             tmpfs     795M 1020K  794M   1% /run
    /dev/mapper/ubuntu--vg-ubuntu--lv ext4      3,9G  3,6G   74M  99% /
    tmpfs                             tmpfs     3,9G     0  3,9G   0% /dev/shm
    tmpfs                             tmpfs     5,0M     0  5,0M   0% /run/lock
    tmpfs                             tmpfs     3,9G     0  3,9G   0% /sys/fs/cgroup
    /dev/loop0                        squashfs   90M   90M     0 100% /snap/core/8268
    /dev/loop1                        squashfs   89M   89M     0 100% /snap/core/7270
    /dev/xvda2                        ext4      976M   77M  832M   9% /boot
    tmpfs                             tmpfs     795M     0  795M   0% /run/user/1000

      PV         VG        Fmt  Attr PSize    PFree
      /dev/loop2 mysqldata lvm2 a--  <120,00g       0
      /dev/xvda3 ubuntu-vg lvm2 a--   <19,00g <15,00g

      VG        #PV #LV #SN Attr   VSize    VFree
      mysqldata   1   1   0 wz--n- <120,00g       0
      ubuntu-vg   1   1   0 wz--n-  <19,00g <15,00g

      LV        VG        Attr       LSize    Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
      mysqldata mysqldata -wi-a----- <120,00g
      ubuntu-lv ubuntu-vg -wi-ao----    4,00g

There is no XFS here.

-

@badrAZ or someone else from the XO team will try to reproduce the issue. They are under a heavy load with a bunch of exciting new features, so testing might take some time.

-

    $ lvscan
      ACTIVE            '/dev/ftp/ftp' [100.00 GiB] inherit

Mounted as ext4:

    /dev/mapper/ftp-ftp on /home/ftp type ext4 (rw,relatime,data=ordered)

The system is Debian 8.11.
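The same LV appears as `/dev/ftp/ftp` in `lvscan` and as `/dev/mapper/ftp-ftp` in `mount`. Here is a minimal Python sketch of how the two names relate. It assumes the usual device-mapper convention, also visible in the `df` output earlier in the thread: the VG and LV names are joined with a single hyphen, and any hyphen inside either name is doubled. `dm_name` is a hypothetical helper, not an LVM or XO API.

```python
def dm_name(vg: str, lv: str) -> str:
    """Build the /dev/mapper path for an LVM logical volume (hypothetical helper).

    Device-mapper joins VG and LV with a single hyphen, so any hyphen that is
    part of either name is doubled to keep the result unambiguous.
    """

    def escape(name: str) -> str:
        return name.replace("-", "--")

    return f"/dev/mapper/{escape(vg)}-{escape(lv)}"


print(dm_name("ftp", "ftp"))              # /dev/mapper/ftp-ftp
print(dm_name("ubuntu-vg", "ubuntu-lv"))  # /dev/mapper/ubuntu--vg-ubuntu--lv
```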

-

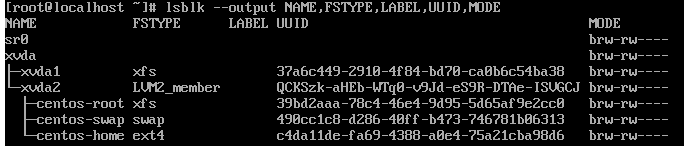

    $ lvscan
      ACTIVE            '/dev/ubuntu-vg/ubuntu-lv' [4,00 GiB] inherit
      ACTIVE            '/dev/mysqldata/mysqldata' [<120,00 GiB] inherit

    $ pvscan
      PV /dev/xvda3   VG ubuntu-vg   lvm2 [<19,00 GiB / <15,00 GiB free]
      PV /dev/loop2   VG mysqldata   lvm2 [<120,00 GiB / 0    free]
      Total: 2 [138,99 GiB] / in use: 2 [138,99 GiB] / in no VG: 0 [0   ]

    $ fdisk -l
    Disk /dev/loop0: 89,1 MiB, 93417472 bytes, 182456 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/loop1: 88,5 MiB, 92778496 bytes, 181208 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/loop2: 120 GiB, 128849018880 bytes, 251658240 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/xvda: 20 GiB, 21474836480 bytes, 41943040 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: gpt
    Disk identifier: 4EBCD4D4-BC5A-4F08-971C-AFB438AC5912

    Device       Start      End  Sectors Size Type
    /dev/xvda1    2048     4095     2048   1M BIOS boot
    /dev/xvda2    4096  2101247  2097152   1G Linux filesystem
    /dev/xvda3 2101248 41940991 39839744  19G Linux filesystem

    Disk /dev/mapper/ubuntu--vg-ubuntu--lv: 4 GiB, 4294967296 bytes, 8388608 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    Disk /dev/mapper/mysqldata-mysqldata: 120 GiB, 128844824576 bytes, 251650048 sectors
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes

    $ sudo lsblk --output NAME,FSTYPE,LABEL,UUID,MODE
    NAME                      FSTYPE      LABEL UUID                                   MODE
    loop0                     squashfs                                                 brw-rw----
    loop1                     squashfs                                                 brw-rw----
    loop2                     LVM2_member       lBivVu-YQOo-du2R-8DvU-3Igy-Kbk6-7MLtZU brw-rw----
    └─mysqldata-mysqldata     xfs               c0875768-6bc8-49e5-85e9-fed64b469344   brw-rw----
    sr0                                                                                brw-rw----
    xvda                                                                               brw-rw----
    ├─xvda1                                                                            brw-rw----
    ├─xvda2                   ext4              e79dd49b-ed47-41b0-9b8a-720875dbdaf0   brw-rw----
    └─xvda3                   LVM2_member       qNEetu-q1qF-AO2t-Eufa-OuoK-9Cy4-hjAstI brw-rw----
      └─ubuntu--vg-ubuntu--lv ext4              c1b5b0fa-42d1-494a-b2d0-4125c579a660   brw-rw----
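`df` only lists mounted filesystems. That is why the earlier `df -Th` output shows no XFS, even though `lsblk` reports `mysqldata-mysqldata` as `xfs`. Here is a minimal Python sketch that lists the filesystem type of every block device, mounted or not, by parsing `lsblk` JSON output. The sample is trimmed from the `lsblk` output above, and `fstypes` is a hypothetical helper.

```python
from __future__ import annotations

import json

# Trimmed from the lsblk output above, in the shape produced by
# `lsblk --json --output NAME,FSTYPE`.
SAMPLE = """{"blockdevices": [
  {"name": "loop2", "fstype": "LVM2_member",
   "children": [{"name": "mysqldata-mysqldata", "fstype": "xfs"}]},
  {"name": "xvda", "fstype": null, "children": [
    {"name": "xvda2", "fstype": "ext4"},
    {"name": "xvda3", "fstype": "LVM2_member",
     "children": [{"name": "ubuntu--vg-ubuntu--lv", "fstype": "ext4"}]}]}
]}"""


def fstypes(lsblk_json: str) -> dict[str, str | None]:
    """Map every device name in lsblk JSON output to its filesystem type."""
    result: dict[str, str | None] = {}

    def walk(devices: list[dict]) -> None:
        for dev in devices:
            result[dev["name"]] = dev.get("fstype")
            # LVs are listed as children of the PV that holds them.
            walk(dev.get("children", []))

    walk(json.loads(lsblk_json)["blockdevices"])
    return result


for name, fstype in fstypes(SAMPLE).items():
    print(f"{name:24} {fstype or '-'}")
```

On a live host, the JSON would come from running `lsblk --json --output NAME,FSTYPE`. This requires a util-linux version recent enough to support `--json`.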

-

I tested this functionality on `ext4` and `xfs`, and it works for me.

Unfortunately, I need to reproduce this issue in our lab to investigate further.

-

Same problem with XOA, @othmar?

-

I don't have XOA; I have the xo-server 5.54.0 community edition on Ubuntu Server.

-

@othmar Install XOA in trial mode and then retest to see if the issue is present with it.

-

@Danp

Yes, it's the same on XOA in trial mode with the latest patches.

-

It's the same here. The problem occurs in both the community version and the XOA trial.

-

There's obviously something different between your VMs and the ones we use for testing. Would you be able to provide a VM export so we can deploy it on our side, back it up, and see why file restore doesn't work?

-

I am seeing the same issue with LVM restores. It occurs in both the Trial and Community editions.
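In the error below, the failing `mount` call passes `offset=1048576`, which is a byte offset into the disk image given as `--source`. Assuming 512-byte sectors, as in the `fdisk -l` output earlier in the thread, that offset is 2048 × 512 bytes. In other words, it points at a partition starting at sector 2048, the common 1 MiB-aligned start of a first partition.

Exit code 32 is the generic "mount failure" status of mount(8). This arithmetic therefore only identifies which partition XO tried to mount; it does not explain why the loop device setup failed. Here is a minimal Python sketch; the helper names are hypothetical.

```python
SECTOR_SIZE = 512  # assumption: logical sector size, as reported by fdisk in this thread


def partition_offset(start_sector: int, sector_size: int = SECTOR_SIZE) -> int:
    """Byte offset of a partition inside a whole-disk image.

    This is the kind of value passed to `mount -o loop,offset=...`.
    """
    return start_sector * sector_size


def start_sector(offset: int, sector_size: int = SECTOR_SIZE) -> int:
    """Inverse: the start sector that a byte offset corresponds to."""
    if offset % sector_size:
        raise ValueError(f"offset {offset} is not sector-aligned")
    return offset // sector_size


print(partition_offset(2048))   # 1048576
print(start_sector(608174080))  # 1187840
```

The later log in this thread shows `offset=608174080`. Under the same 512-byte assumption, `start_sector` maps that offset to a partition starting at sector 1187840.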

    backupNg.listFiles
    {
      "remote": "c6f2b11a-4065-4a8b-b75f-e16bf2aeb5f5",
      "disk": "xo-vm-backups/8a0d70df-9659-b307-43f4-fc37133d9d66/vdis/2dcbfc0b-5d0a-4f84-b7ec-e89b1747e0b4/47f611b1-7c83-4319-9fcf-aad09a025edc/20200319T145807Z.vhd",
      "path": "/",
      "partition": "ea853d6d-01"
    }
    {
      "command": "mount --options=loop,ro,offset=1048576 --source=/tmp/tmp-458p174rEvrE3fU/vhdi1 --target=/tmp/tmp-458llR4TibV7F12",
      "exitCode": 32,
      "stdout": "",
      "stderr": "mount: /tmp/tmp-458llR4TibV7F12: failed to setup loop device for /tmp/tmp-458p174rEvrE3fU/vhdi1.",
      "failed": true,
      "timedOut": false,
      "isCanceled": false,
      "killed": false,
      "message": "Command failed with exit code 32: mount --options=loop,ro,offset=1048576 --source=/tmp/tmp-458p174rEvrE3fU/vhdi1 --target=/tmp/tmp-458llR4TibV7F12",
      "name": "Error",
      "stack": "Error: Command failed with exit code 32: mount --options=loop,ro,offset=1048576 --source=/tmp/tmp-458p174rEvrE3fU/vhdi1 --target=/tmp/tmp-458llR4TibV7F12 at makeError (/opt/xen-orchestra/node_modules/execa/lib/error.js:56:11) at handlePromise (/opt/xen-orchestra/node_modules/execa/index.js:114:26) at <anonymous>"
    }

-

Do you have a VG using multiple PVs?
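One way to answer this on the VM itself is to ask `vgs` for the number of PVs in each VG; this is the `#PV` column in the output earlier in the thread. Here is a minimal Python sketch that parses `vgs --noheadings -o vg_name,pv_count` and returns the VGs built from more than one PV. The helper names are hypothetical, and running `vgs` requires root and the LVM2 tools.

```python
from __future__ import annotations

import subprocess


def vgs_with_multiple_pvs(vgs_output: str) -> list[str]:
    """Return the names of VGs whose PV count is greater than one.

    Expects `vgs --noheadings -o vg_name,pv_count` output: one VG per line,
    name and PV count separated by whitespace.
    """
    multi = []
    for line in vgs_output.splitlines():
        fields = line.split()
        if len(fields) != 2:
            continue  # skip blank or unexpected lines
        name, count = fields
        if int(count) > 1:
            multi.append(name)
    return multi


def run_vgs() -> str:
    """Run vgs on the current host (not called below; needs root and LVM2)."""
    return subprocess.run(
        ["vgs", "--noheadings", "-o", "vg_name,pv_count"],
        capture_output=True, text=True, check=True,
    ).stdout


# Shape of the vgs output for the VM shown earlier in the thread.
print(vgs_with_multiple_pvs("  mysqldata   1\n  ubuntu-vg   1\n"))  # []
```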

-

That one does not. I assumed that was the case for another VM that had the issue, and I'm working on moving the VMs to VHDs that are not on LVM.

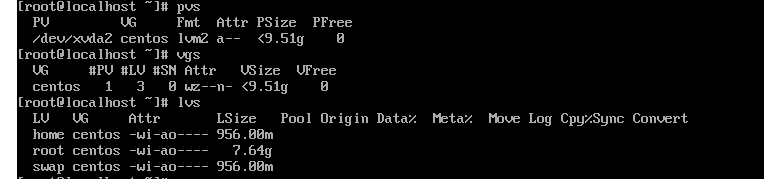

    # pvdisplay
      --- Physical volume ---
      PV Name               /dev/xvda2
      VG Name               centos
      PV Size               79.51 GiB / not usable 3.00 MiB
      Allocatable           yes
      PE Size               4.00 MiB
      Total PE              20354
      Free PE               16
      Allocated PE          20338
      PV UUID               zkCwDd-03ZP-LhLO-drIw-5fpY-Bf7f-J99g0U

-

Both the built community edition and the XOA appliance seem to have this issue.

-

A little more info: I'm also having this problem, but on my side it seems only non-Linux file systems are affected. Let me know if there's anything I can run or supply on this side that might be useful.

File recoveries from Debian, CentOS, and Ubuntu VMs all work, but Windows Server, Windows 10, and pfSense drives throw the same error others have posted. However, the error seems to start one step earlier than others have described.

The server is XO Community on Debian, with an NFS remote (Synology NAS). The storage is all LVM, I think.

Selecting the backup date works. Selecting a drive from the backup gives this error:

    Mar 25 06:05:32 BKP01 xo-server[431]: { Error: spawn fusermount ENOENT
    Mar 25 06:05:32 BKP01 xo-server[431]: at Process.ChildProcess._handle.onexit (internal/child_process.js:190:19)
    Mar 25 06:05:32 BKP01 xo-server[431]: at onErrorNT (internal/child_process.js:362:16)
    Mar 25 06:05:32 BKP01 xo-server[431]: at _combinedTickCallback (internal/process/next_tick.js:139:11)
    Mar 25 06:05:32 BKP01 xo-server[431]: at process._tickCallback (internal/process/next_tick.js:181:9)
    Mar 25 06:05:32 BKP01 xo-server[431]: errno: 'ENOENT',
    Mar 25 06:05:32 BKP01 xo-server[431]: code: 'ENOENT',
    Mar 25 06:05:32 BKP01 xo-server[431]: syscall: 'spawn fusermount',
    Mar 25 06:05:32 BKP01 xo-server[431]: path: 'fusermount',
    Mar 25 06:05:32 BKP01 xo-server[431]: spawnargs: [ '-uz', '/tmp/tmp-431GWsDT1lOqXyY' ],
    Mar 25 06:05:32 BKP01 xo-server[431]: originalMessage: 'spawn fusermount ENOENT',
    Mar 25 06:05:32 BKP01 xo-server[431]: command: 'fusermount -uz /tmp/tmp-431GWsDT1lOqXyY',
    Mar 25 06:05:32 BKP01 xo-server[431]: exitCode: undefined,
    Mar 25 06:05:32 BKP01 xo-server[431]: signal: undefined,
    Mar 25 06:05:32 BKP01 xo-server[431]: signalDescription: undefined,
    Mar 25 06:05:32 BKP01 xo-server[431]: stdout: '',
    Mar 25 06:05:32 BKP01 xo-server[431]: stderr: '',
    Mar 25 06:05:32 BKP01 xo-server[431]: failed: true,
    Mar 25 06:05:32 BKP01 xo-server[431]: timedOut: false,
    Mar 25 06:05:32 BKP01 xo-server[431]: isCanceled: false,
    Mar 25 06:05:32 BKP01 xo-server[431]: killed: false }

Partitions still load, but then this error occurs when selecting a partition:

    Mar 25 06:07:51 BKP01 xo-server[431]: { Error: spawn fusermount ENOENT
    Mar 25 06:07:51 BKP01 xo-server[431]: at Process.ChildProcess._handle.onexit (internal/child_process.js:190:19)
    Mar 25 06:07:51 BKP01 xo-server[431]: at onErrorNT (internal/child_process.js:362:16)
    Mar 25 06:07:51 BKP01 xo-server[431]: at _combinedTickCallback (internal/process/next_tick.js:139:11)
    Mar 25 06:07:51 BKP01 xo-server[431]: at process._tickCallback (internal/process/next_tick.js:181:9)
    Mar 25 06:07:51 BKP01 xo-server[431]: errno: 'ENOENT',
    Mar 25 06:07:51 BKP01 xo-server[431]: code: 'ENOENT',
    Mar 25 06:07:51 BKP01 xo-server[431]: syscall: 'spawn fusermount',
    Mar 25 06:07:51 BKP01 xo-server[431]: path: 'fusermount',
    Mar 25 06:07:51 BKP01 xo-server[431]: spawnargs: [ '-uz', '/tmp/tmp-431yl3BiFVbSmWB' ],
    Mar 25 06:07:51 BKP01 xo-server[431]: originalMessage: 'spawn fusermount ENOENT',
    Mar 25 06:07:51 BKP01 xo-server[431]: command: 'fusermount -uz /tmp/tmp-431yl3BiFVbSmWB',
    Mar 25 06:07:51 BKP01 xo-server[431]: exitCode: undefined,
    Mar 25 06:07:51 BKP01 xo-server[431]: signal: undefined,
    Mar 25 06:07:51 BKP01 xo-server[431]: signalDescription: undefined,
    Mar 25 06:07:51 BKP01 xo-server[431]: stdout: '',
    Mar 25 06:07:51 BKP01 xo-server[431]: stderr: '',
    Mar 25 06:07:51 BKP01 xo-server[431]: failed: true,
    Mar 25 06:07:51 BKP01 xo-server[431]: timedOut: false,
    Mar 25 06:07:51 BKP01 xo-server[431]: isCanceled: false,
    Mar 25 06:07:51 BKP01 xo-server[431]: killed: false }
    Mar 25 06:07:51 BKP01 xo-server[431]: 2020-03-24T20:07:51.178Z xo:api WARN admin@admin.net | backupNg.listFiles(...) [135ms] =!> Error: Command failed with exit code 32: mount --options=loop,ro,offset=608174080 --source=/tmp/tmp-431yl3BiFVbSmWB/vhdi15 --target=/tmp/tmp-431ieOQYw52Cr6a

-

@sccf, can you confirm the same issue on XOA?

-

OK, so XOA is working fine on my side except for the pfSense/FreeBSD VM. To be honest, though, I'm not sure I have ever tried a file-level restore on it before, and I can't see any reason why I would ever need to in the future.

I guess I'm looking for an environment issue, then; perhaps a missing dependency? I've got two independent sites (one at home, one at our local church) that are configured the same way and have the same problems. I'll try setting up on Ubuntu and see if that resolves it.
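On the dependency question: in the Community logs above, `spawn fusermount ENOENT` is how Node.js reports that it could not find the `fusermount` executable at all. At least that binary therefore appears to be missing on that host; on Debian it is typically provided by the `fuse` package.

Here is a minimal Python sketch for comparing the two sites and the XOA appliance. It assumes the only commands worth checking are the two named in the logs in this thread, which is an illustrative list and not Xen Orchestra's official dependency list.

```python
from __future__ import annotations

import shutil

# Commands that appear in the xo-server errors in this thread (illustrative
# list only, not an official XO dependency list).
COMMANDS_FROM_LOGS = ["fusermount", "mount"]


def missing_commands(names: list[str]) -> list[str]:
    """Return the commands that cannot be resolved on PATH.

    A command that cannot be found is what Node.js reports as
    `spawn <name> ENOENT`.
    """
    return [name for name in names if shutil.which(name) is None]


missing = missing_commands(COMMANDS_FROM_LOGS)
print("missing:", ", ".join(missing) if missing else "none")
```

Run it as the same user and with the same PATH as xo-server. A service can see a different PATH than an interactive shell.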