backblaze b2 / amazon s3 as remote in xoa

-

Hi

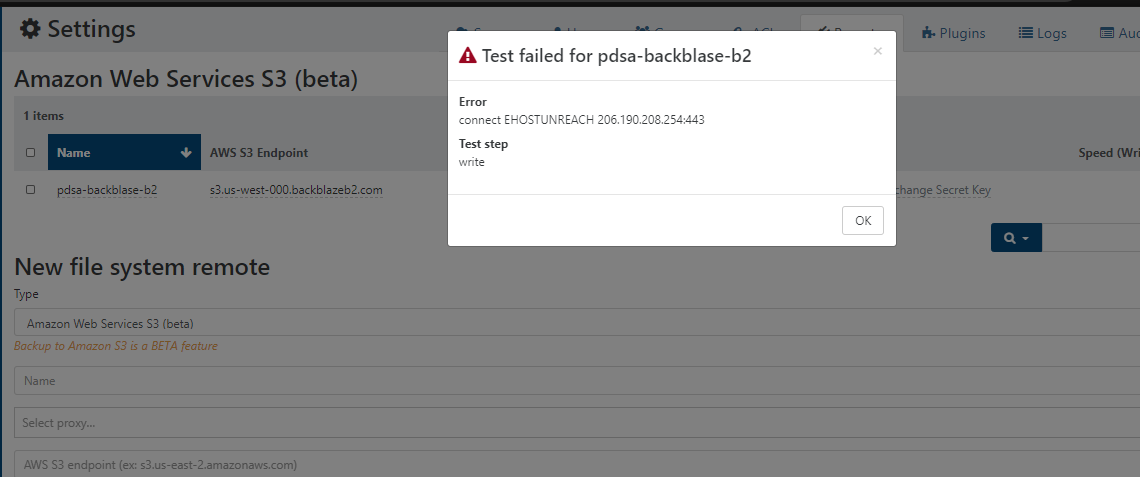

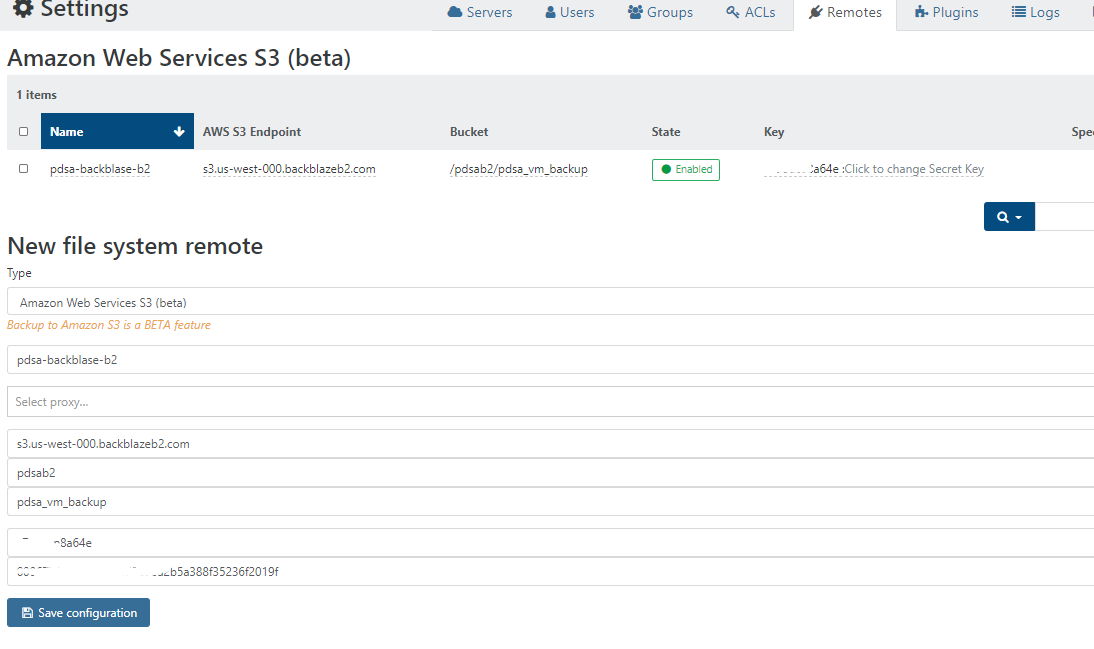

have anyone done proper configuration of s3 backup and can confirm that it works correctly? I'm trying to config remote for backblaze b2 but all I get is an error

-

@akurzawa s3 backups/delta backups are not working great for big vms

but I never had this problem! check the bucket and directory -

We've got a similar but different problem with B2. The test of the S3 config (little doctor's bag) comes back fine, but once we start a backup running it fails with "TimeoutError: Connection timed out after 120000ms".

Have tried upping the memory on the XO machine, but in all honesty it doesn't hit that too badly, it's just the upload to B2 that has this 2min timeout issue. The test works fine and I can see on B2 that the test file has uploaded ok, but can't seem to get a backup to work

Can PM a full log of the above if it helps, key data is here:

"result": { "message": "Connection timed out after 120000ms", "code": "TimeoutError", "time": "2020-12-10T09:53:06.757Z", "region": "us-east-1", "hostname": "s3.us-west-001.backblazeb2.com", "retryable": true, "name": "TimeoutError", "stack": "TimeoutError: Connection timed out after 120000ms\n at ClientRequest.<anonymous> (/home/node/xen-orchestra/node_modules/aws-sdk/lib/http/node.js:86:34)\n at Object.onceWrapper (events.js:420:28)\n at ClientRequest.apply (events.js:314:20)\n at ClientRequest.patchedEmit (/home/node/xen-orchestra/@xen-orchestra/log/src/configure.js:92:17)\n at ClientRequest.EventEmitter.emit (domain.js:486:12)\n at TLSSocket.emitRequestTimeout (_http_client.js:769:9)\n at Object.onceWrapper (events.js:420:28)\n at TLSSocket.apply (events.js:326:22)\n at TLSSocket.patchedEmit (/home/node/xen-orchestra/@xen-orchestra/log/src/configure.js:92:17)\n at TLSSocket.EventEmitter.emit (domain.js:486:12)\n at TLSSocket.Socket._onTimeout (net.js:483:8)\n at listOnTimeout (internal/timers.js:551:17)\n at processTimers (internal/timers.js:494:7)" }XO: 5.71.2

Any ideas? Thanks

-

@shorian yap me too but not always.

sometimes says Error: Your socket connection to the server was not read from or written to within the timeout period. Idle connections will be closed. -

Update to my earlier post - We found the connection timeout issue was solved by allocating more memory to XO. Even though above I said that the memory didn't appear to be a problem, it turned out that Debian was swapping out so as to keep a chunk of free memory available, so we mistakenly assumed that not using all the memory meant we had sufficient. However being memory restricted combined with a slow disk meant that the swap was growing faster than it was being processed.

Substantially increasing the XO VM memory (4->16gb) seems to have solved the timeout issues (so yes, root cause was user error), and we're now finding that the S3 api to B2 (a lot cheaper than Amazon) is working really well for us.

Well done to the XO dev team - the S3 api has completely changed how we use backups and freed up a lot infrastructure that we previously had had to dedicate to this; thank you

-

@nraynaud will be happy to hear that

-

@shorian Thank you.

I will look into the memory issue, that seems to be the clue into a hidden issue, thank you.

-

@shorian I think I found the memory hog in S3 thanks to your message: https://github.com/vatesfr/xen-orchestra/pull/5456

-

@akurzawa @DodgeThis @shorian Any of you able to test this branch to see whether it fixes the issue?

-

@julien-f No problem. glad to test it.

-

@julien-f

Still no sucess,

This vm that i'm trying to backups is about 290 GbXen Orchestra is on a VM with:

Debian 10

4 Gb Ram

85 GB free hd

1CPUxo-server 5.72.1

xo-web 5.75.0{ "data": { "mode": "full", "reportWhen": "failure" }, "id": "1608035511007", "jobId": "695f3dfb-783f-4cb9-85ef-6805d75c7d26", "jobName": "AmazonTest", "message": "backup", "scheduleId": "75c619c3-8b6d-4c72-b110-131b01992b3e", "start": 1608035511007, "status": "failure", "tasks": [ { "data": { "type": "VM", "id": "93b88999-8b61-7207-138b-1ef389491e71" }, "id": "1608035511027", "message": "Starting backup of MysqlDB. (695f3dfb-783f-4cb9-85ef-6805d75c7d26)", "start": 1608035511027, "status": "failure", "tasks": [ { "id": "1608035511031", "message": "snapshot", "start": 1608035511031, "status": "success", "end": 1608035512857, "result": "e612bb3b-9141-45ef-a901-ac8e4f1927c2" }, { "id": "1608035512859", "message": "add metadata to snapshot", "start": 1608035512859, "status": "success", "end": 1608035512869 }, { "id": "1608035513055", "message": "waiting for uptodate snapshot record", "start": 1608035513055, "status": "success", "end": 1608035513259 }, { "id": "1608035513261", "message": "start VM export", "start": 1608035513261, "status": "success", "end": 1608035513302 }, { "data": { "id": "72341e61-56db-43f5-8389-78baf364516f", "type": "remote" }, "id": "1608035513304", "message": "export", "start": 1608035513304, "status": "failure", "tasks": [ { "id": "1608035514389", "message": "transfer", "start": 1608035514389, "status": "failure", "end": 1608035839218, "result": { "message": "write EPIPE", "errno": "EPIPE", "code": "NetworkingError", "syscall": "write", "region": "eu-central-1", "hostname": "s3.eu-central-1.amazonaws.com", "retryable": true, "time": "2020-12-15T12:37:18.736Z", "statusCode": 400, "name": "NetworkingError", "stack": "Error: write EPIPE\n at WriteWrap.onWriteComplete [as oncomplete] (internal/stream_base_commons.js:94:16)" } } ], "end": 1608035839357, "result": { "message": "write EPIPE", "errno": "EPIPE", "code": "NetworkingError", "syscall": "write", "region": "eu-central-1", "hostname": "s3.eu-central-1.amazonaws.com", "retryable": true, "time": "2020-12-15T12:37:18.736Z", "statusCode": 400, "name": "NetworkingError", "stack": "Error: write EPIPE\n at WriteWrap.onWriteComplete [as oncomplete] (internal/stream_base_commons.js:94:16)" } }, { "id": "1608035839607", "message": "set snapshot.other_config[xo:backup:exported]", "start": 1608035839607, "status": "success", "end": 1608035839831 } ], "end": 1608039896381 } ], "end": 1608039896383 } -

Another one with a VM with +/-600 Gb

{ "data": { "mode": "full", "reportWhen": "failure" }, "id": "1608043681979", "jobId": "abfed8aa-b445-4c94-be5c-b6ed723792b8", "jobName": "amazontest", "message": "backup", "scheduleId": "44840d13-2302-45ae-9460-53ed451183ed", "start": 1608043681979, "status": "failure", "infos": [ { "data": { "vms": [ "fbea839a-72ac-ca3d-51e5-d4248e363d72" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "fbea839a-72ac-ca3d-51e5-d4248e363d72" }, "id": "1608043681986:0", "message": "Starting backup of DESKTOPGREEN. (abfed8aa-b445-4c94-be5c-b6ed723792b8)", "start": 1608043681986, "status": "failure", "tasks": [ { "id": "1608043681990", "message": "snapshot", "start": 1608043681990, "status": "success", "end": 1608043683927, "result": "42f38a1a-5037-107d-1ceb-921fed6910fa" }, { "id": "1608043683929", "message": "add metadata to snapshot", "start": 1608043683929, "status": "success", "end": 1608043683938 }, { "id": "1608043684127", "message": "waiting for uptodate snapshot record", "start": 1608043684127, "status": "success", "end": 1608043684330 }, { "id": "1608043684332", "message": "start VM export", "start": 1608043684332, "status": "success", "end": 1608043684348 }, { "data": { "id": "7847ac06-d46f-476a-84c1-608f9302cb67", "type": "remote" }, "id": "1608043684352", "message": "export", "start": 1608043684352, "status": "failure", "tasks": [ { "id": "1608043684783", "message": "transfer", "start": 1608043684783, "status": "failure", "end": 1608043987240, "result": { "message": "Your socket connection to the server was not read from or written to within the timeout period. Idle connections will be closed.", "code": "RequestTimeout", "region": null, "time": "2020-12-15T14:53:06.821Z", "requestId": "E47562F2C7A82C45", "extendedRequestId": "5nM1888rfrbLHHgUrlsuF5cISTd2kj6oVpWfz5Yhtpj4GtfW7wkX9HnTq/Tj8uU37Opp8FNjlFU=", "statusCode": 400, "retryable": true, "name": "RequestTimeout", "stack": "RequestTimeout: Your socket connection to the server was not read from or written to within the timeout period. Idle connections will be closed.\n at Request.extractError (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/services/s3.js:700:35)\n at Request.callListeners (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:106:20)\n at Request.emit (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:688:14)\n at Request.transition (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:38:9)\n at Request.<anonymous> (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:690:12)\n at Request.callListeners (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:116:18)\n at Request.emit (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:688:14)\n at Request.transition (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:38:9)\n at Request.<anonymous> (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:690:12)\n at Request.callListeners (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:116:18)\n at callNextListener (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:96:12)\n at IncomingMessage.onEnd (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/event_listeners.js:313:13)\n at IncomingMessage.apply (events.js:326:22)\n at IncomingMessage.patchedEmit (/opt/xo/xo-builds/xen-orchestra-202012150908/@xen-orchestra/log/src/configure.js:92:17)" } } ], "end": 1608043987241, "result": { "message": "Your socket connection to the server was not read from or written to within the timeout period. Idle connections will be closed.", "code": "RequestTimeout", "region": null, "time": "2020-12-15T14:53:06.821Z", "requestId": "E47562F2C7A82C45", "extendedRequestId": "5nM1888rfrbLHHgUrlsuF5cISTd2kj6oVpWfz5Yhtpj4GtfW7wkX9HnTq/Tj8uU37Opp8FNjlFU=", "statusCode": 400, "retryable": true, "name": "RequestTimeout", "stack": "RequestTimeout: Your socket connection to the server was not read from or written to within the timeout period. Idle connections will be closed.\n at Request.extractError (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/services/s3.js:700:35)\n at Request.callListeners (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:106:20)\n at Request.emit (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:688:14)\n at Request.transition (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:38:9)\n at Request.<anonymous> (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:690:12)\n at Request.callListeners (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:116:18)\n at Request.emit (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:78:10)\n at Request.emit (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:688:14)\n at Request.transition (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:22:10)\n at AcceptorStateMachine.runTo (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/state_machine.js:14:12)\n at /opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/state_machine.js:26:10\n at Request.<anonymous> (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:38:9)\n at Request.<anonymous> (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/request.js:690:12)\n at Request.callListeners (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:116:18)\n at callNextListener (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/sequential_executor.js:96:12)\n at IncomingMessage.onEnd (/opt/xo/xo-builds/xen-orchestra-202012150908/node_modules/aws-sdk/lib/event_listeners.js:313:13)\n at IncomingMessage.apply (events.js:326:22)\n at IncomingMessage.patchedEmit (/opt/xo/xo-builds/xen-orchestra-202012150908/@xen-orchestra/log/src/configure.js:92:17)" } }, { "id": "1608043987670", "message": "set snapshot.other_config[xo:backup:exported]", "start": 1608043987670, "status": "success", "end": 1608043989451 } ], "end": 1608051207208 } ], "end": 1608051207238 } -

-

@nraynaud ping

-

@dodgethis thank you, I will look harder into this.

-

@nraynaud glad to help somehow

-

Confirmed bumping to 12 Gb RAM allowed my "larger" VM's (60 GB+) to complete.

xo-server 5.73.0

xo-web 5.76.0 -

Can someone please test the issue with the current master branch please?

-

@nraynaud Will aim to do this over the weekend

-

I cannot get the backup to run; I have now tried about 12 times to run a backup that should complete in under 2 hours; without a timeout it runs on (stopped it after 10 hours), with a timeout of 3 hours it consistently fails with 'interrupted' without explanation.

Looking through the logs, we've got a lot of buffer allocation errors, it starts with this:

xo:xo-server ERROR uncaught exception { error: Error [RangeError]: Array buffer allocation failed at new ArrayBuffer (<anonymous>) at new Uint8Array (<anonymous>) at new FastBuffer (internal/buffer.js:950:1) at createUnsafeBuffer (buffer.js:149:12) at allocate (buffer.js:419:10) at Function.allocUnsafe (buffer.js:384:10) at BufferList._getBuffer (/opt/xen-orchestra/@xen-orchestra/fs/node_modules/readable-stream/lib/internal/streams/buffer_list.js:166:24) at BufferList.consume (/opt/xen-orchestra/@xen-orchestra/fs/node_modules/readable-stream/lib/internal/streams/buffer_list.js:119:54) at fromList (/opt/xen-orchestra/@xen-orchestra/fs/node_modules/readable-stream/lib/_stream_readable.js:1073:24) at Transform.Readable.read (/opt/xen-orchestra/@xen-orchestra/fs/node_modules/readable-stream/lib/_stream_readable.js:465:20) at ManagedUpload.fillStream (/opt/xen-orchestra/node_modules/aws-sdk/lib/s3/managed_upload.js:422:25) at Response.<anonymous> (/opt/xen-orchestra/node_modules/aws-sdk/lib/s3/managed_upload.js:574:24) at Request.<anonymous> (/opt/xen-orchestra/node_modules/aws-sdk/lib/request.js:369:18) at Request.callListeners (/opt/xen-orchestra/node_modules/aws-sdk/lib/sequential_executor.js:106:20) at Request.emit (/opt/xen-orchestra/node_modules/aws-sdk/lib/sequential_executor.js:78:10) at Request.emit (/opt/xen-orchestra/node_modules/aws-sdk/lib/request.js:688:14) at Request.transition (/opt/xen-orchestra/node_modules/aws-sdk/lib/request.js:22:10) at AcceptorStateMachine.runTo (/opt/xen-orchestra/node_modules/aws-sdk/lib/state_machine.js:14:12) at /opt/xen-orchestra/node_modules/aws-sdk/lib/state_machine.js:26:10 at Request.<anonymous> (/opt/xen-orchestra/node_modules/aws-sdk/lib/request.js:38:9) at Request.<anonymous> (/opt/xen-orchestra/node_modules/aws-sdk/lib/request.js:690:12) at Request.callListeners (/opt/xen-orchestra/node_modules/aws-sdk/lib/sequential_executor.js:116:18) { code: 'RangeError', time: 2021-01-16T22:07:11.590Z } }...followed by this repeating numerous times:

xo:xo-server ERROR uncaught exception { error: RangeError: Array buffer allocation failed at new ArrayBuffer (<anonymous>) at new Uint8Array (<anonymous>) at new FastBuffer (internal/buffer.js:950:1) at createUnsafeBuffer (buffer.js:149:12) at allocate (buffer.js:419:10) at Function.allocUnsafe (buffer.js:384:10) at Function.concat (buffer.js:564:25) at ManagedUpload.fillStream (/opt/xen-orchestra/node_modules/aws-sdk/lib/s3/managed_upload.js:433:38) at Transform.<anonymous> (/opt/xen-orchestra/node_modules/aws-sdk/lib/s3/managed_upload.js:188:44) at Transform.apply (events.js:314:20) at Transform.patchedEmit (/opt/xen-orchestra/@xen-orchestra/log/src/configure.js:92:17) at Transform.EventEmitter.emit (domain.js:483:12) at emitReadable_ (/opt/xen-orchestra/@xen-orchestra/fs/node_modules/readable-stream/lib/_stream_readable.js:538:12) at processTicksAndRejections (internal/process/task_queues.js:83:21) }Going to try whacking the memory right up ; will report back later today.