Migrating VM fails with DUPLICATE_VM error (part 2)

-

@olivierlambert Thanks, this confirms that there is no MAC address clash.

The fact that they were both imported the same way from the same source system strongly suggests that something is clashing, especially after the MAC address issue found last year. Suggestions are welcome; otherwise I might entertain myself by recreating a Windows 10 VM on the destination and simply copying over and attaching the disks.

Let me know if you want me to provide details or create a ticket somewhere.

-

Well, I'd love it if we could find the culprit.

`xe vm-list params=all` will give you all the params for all VMs on a given host. Then, try to compare between 2 hosts and see if there are similar fields that shouldn't be similar (I know, it's vague).
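
Comparing these dumps by eye is tedious. As a minimal sketch (plain Python, not an XO or xe feature), assuming each VM's dump has been saved as text with one `name ( RO): value` field per line, the way `xe vm-param-list uuid=<uuid>` prints it, the helper below lists the fields two VMs share with identical, non-empty values. That shortlist is where a value that ought to be unique per VM would show up.

```python
import re

# One field line of an xe param dump, e.g. "  name-label ( RW): Win10vm" or
# "  ha-always-run ( RW) [DEPRECATED]: false". The access tag (RO, RW, MRO,
# MRW, SRO, SRW) is matched but not kept.
FIELD_RE = re.compile(
    r"^\s*(?P<name>[\w.-]+)\s*\(\s*(?:RO|RW|MRO|MRW|SRO|SRW)\)"
    r"(?:\s*\[DEPRECATED\])?\s*:\s?(?P<value>.*)$"
)


def parse_params(text):
    """Turn one VM's param dump into a {field: value} dict."""
    params = {}
    for line in text.splitlines():
        match = FIELD_RE.match(line)
        if match:
            params[match.group("name")] = match.group("value").strip()
    return params


def shared_fields(dump_a, dump_b):
    """Fields that both dumps contain with the same non-empty value."""
    a, b = parse_params(dump_a), parse_params(dump_b)
    return {name: value for name, value in a.items() if value and b.get(name) == value}
```

Map fields such as `other-config` are compared as one string here, so they only match when every key matches; splitting them into keys is a natural next step if a single shared key is suspected.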

In the meantime, let me ask around among some XAPI devs.

-

@olivierlambert Appreciated. I will go through the fields to see if anything looks odd.

-

@olivierlambert Nothing odd that stands out for me when comparing the data.

Additionally, I tried migrating the (most likely) offending VM from the other host and I get the same error.

I also tried migrating two other VMs (created on XCP-ng). Those fail with VDI_COPY_FAILED instead, even though the migration starts properly; I only get this error at the end:

vm.migrate { "vm": "0ab24395-becb-71f4-4f93-066c2d660cff", "mapVifsNetworks": { "651db47e-129a-7152-f988-cc4408abce0a": "a014b230-2db6-adb4-ba4f-0b1cc07fdcae" }, "migrationNetwork": "a014b230-2db6-adb4-ba4f-0b1cc07fdcae", "sr": "270f8f4a-a24c-ced6-99c7-9bc2ba5f5008", "targetHost": "3b57d90b-983f-46bb-8f52-4319025d1182" } { "code": 21, "data": { "objectId": "0ab24395-becb-71f4-4f93-066c2d660cff", "code": "VDI_COPY_FAILED" }, "message": "operation failed", "name": "XoError", "stack": "XoError: operation failed at operationFailed (/opt/xo/xo-builds/xen-orchestra-202111061645/packages/xo-common/src/api-errors.js:21:32) at file:///opt/xo/xo-builds/xen-orchestra-202111061645/packages/xo-server/src/api/vm.mjs:482:15 at Object.migrate (file:///opt/xo/xo-builds/xen-orchestra-202111061645/packages/xo-server/src/api/vm.mjs:469:3) at Api.callApiMethod (file:///opt/xo/xo-builds/xen-orchestra-202111061645/packages/xo-server/src/xo-mixins/api.mjs:304:20)" }

Now I need to figure out what the real issue is.
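
The XO log entries pasted in this thread have a `method {params} {error}` shape. Assuming that shape (inferred from these pastes, not from XO documentation), a small Python helper can pull out the VM and the most specific error code, which makes it easier to compare many failed attempts side by side. The two usage entries in the test are shortened copies of entries from this thread.

```python
import json


def parse_xo_log_entry(entry):
    """Split 'method {params} {error}' into (method, params, error)."""
    method, _, rest = entry.strip().partition(" ")
    decoder = json.JSONDecoder()
    rest = rest.lstrip()
    # raw_decode returns the parsed object plus the index where it ended,
    # which lets us read the second JSON object that follows the first.
    params, end = decoder.raw_decode(rest)
    error, _ = decoder.raw_decode(rest[end:].lstrip())
    return method, params, error


def summarize(entry):
    """Return (method, VM id, error code) for a failed XO call."""
    method, params, error = parse_xo_log_entry(entry)
    # An XoError carries the specific code under "data"; a XapiError has it at top level.
    code = error.get("data", {}).get("code") or error.get("code")
    vm = params.get("vm") or params.get("id")
    return method, vm, code
```

The fallback from `data.code` to the top-level `code` is there because the two error types in this thread (XoError and XapiError) put the meaningful code in different places.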

-

Can you also try with `xe` too? Maybe the error message will be clearer.

-

@olivierlambert Life happened; I just spent some time working through scenarios.

Using `xe vm-migrate` I can move VMs from one host to the other (this is across pools) and back. For me this rules out a host-level issue. When I try to move the VMs that won't migrate, in both cases I get the error:

Cannot restore this VM because it would create a duplicate

This clearly points at the two VMs that were imported from ESXi last year.

I reviewed the output of `xe vm-list params=all uuid=<relevant vm uuid>` again and finally noticed that they both have the same `mac_seed` value under `other-config (MRW)`:

other-config (MRW): auto_poweron: true; vgpu_pci: ; base_template_name: Other install media; mac_seed: 5e88eb6a-d680-c47f-a94a-028886971ba4; install-methods: cdrom

and

other-config (MRW): auto_poweron: true; import_task: OpaqueRef:680ed8f5-22a5-4a4b-af3b-6988f7734441; install-repository: cdrom; vgpu_pci: ; base_template_name: Other install media; mac_seed: 5e88eb6a-d680-c47f-a94a-028886971ba4; install-methods: cdrom

Could that cause a clash/duplicate error?
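
A shared seed like this is easy to miss across many VMs. A minimal Python sketch, assuming you have collected each VM's `other-config` value as text (for example with `xe vm-param-get uuid=<uuid> param-name=other-config`), that parses the `key: value; key: value` map format shown above and reports any `mac_seed` carried by more than one VM. The VM labels in the usage are placeholders; the map values are the two posted above.

```python
from collections import defaultdict


def parse_map(value):
    """Parse an xe map value such as 'k1: v1; k2: ; k3: v3' into a dict."""
    result = {}
    for item in value.split(";"):
        # Split on the first colon only, so values like 'OpaqueRef:...' survive.
        key, sep, val = item.partition(":")
        if sep:
            result[key.strip()] = val.strip()
    return result


def duplicate_mac_seeds(other_configs):
    """Map each mac_seed carried by two or more VMs to the VMs that carry it."""
    by_seed = defaultdict(list)
    for vm, raw in other_configs.items():
        seed = parse_map(raw).get("mac_seed")
        if seed:
            by_seed[seed].append(vm)
    return {seed: vms for seed, vms in by_seed.items() if len(vms) > 1}


# other-config values of the two imported VMs; the dict keys are placeholder labels.
configs = {
    "imported-vm-1": "auto_poweron: true; vgpu_pci: ; base_template_name: Other install media; "
                     "mac_seed: 5e88eb6a-d680-c47f-a94a-028886971ba4; install-methods: cdrom",
    "imported-vm-2": "auto_poweron: true; import_task: OpaqueRef:680ed8f5-22a5-4a4b-af3b-6988f7734441; "
                     "install-repository: cdrom; vgpu_pci: ; base_template_name: Other install media; "
                     "mac_seed: 5e88eb6a-d680-c47f-a94a-028886971ba4; install-methods: cdrom",
}
print(duplicate_mac_seeds(configs))
# {'5e88eb6a-d680-c47f-a94a-028886971ba4': ['imported-vm-1', 'imported-vm-2']}
```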

-

Please modify the MAC seed and see if it still triggers the error.
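
From the CLI, one way to change a single `other-config` key is `xe vm-param-set` with the `other-config:<key>=<value>` map syntax. The Python sketch below only builds that command (it runs nothing) and, as an assumption, uses a fresh random UUID as the new seed so it keeps the same format as the existing seeds. The VM UUID in the usage is the Win10vm one that appears later in this thread.

```python
import shlex
import uuid
from typing import List, Optional


def set_mac_seed_command(vm_uuid: str, new_seed: Optional[str] = None) -> List[str]:
    """Build an `xe vm-param-set` argv that replaces the VM's mac_seed.

    Nothing is executed here: print the result and run it where you normally
    use xe, or hand the list to subprocess.run once you are happy with it.
    """
    uuid.UUID(vm_uuid)  # raises ValueError early on a malformed VM UUID
    seed = new_seed if new_seed is not None else str(uuid.uuid4())
    return ["xe", "vm-param-set", f"uuid={vm_uuid}", f"other-config:mac_seed={seed}"]


cmd = set_mac_seed_command("afe623be-5451-fd48-3f24-60120e53f5ab")
print(shlex.join(cmd))
```

Returning an argument list rather than a shell string avoids quoting issues if it is later passed to `subprocess.run`; `shlex.join` is only used for display.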

-

@olivierlambert Thanks. Done that.

Next stop is:

`xe vm-migrate remote-master=172.25.10.11 remote-username=root remote-password=xxxxxx vif:f4b175c2-0082-212c-b9d9-bd616cd83d2c=a014b230-2db6-adb4-ba4f-0b1cc07fdcae vm=Win10vm`

Performing a Storage XenMotion migration. Your VM's VDIs will be migrated with the VM.
Will migrate to remote host: xen1, using remote network: Pool-wide network associated with eth0. Here is the VDI mapping:
VDI 4d4a809d-6801-4462-8e52-811882106821 -> SR 270f8f4a-a24c-ced6-99c7-9bc2ba5f5008
VDI 6a30ca10-a386-4e00-91aa-89c3e5bd43de -> SR 270f8f4a-a24c-ced6-99c7-9bc2ba5f5008
There were no servers available to complete the specified operation.

It doesn't matter what I set the `mac_seed` to: empty, just a few characters, or the original with one character changed. Setting it back to the 'original' value reproduces the earlier error about creating a duplicate, so the `mac_seed` value is making a difference. But there seems to be more.

I couldn't find anything specific on "There were no servers available to complete the specified operation." on the forum, just one hit: someone asking what it means. A wider internet search showed some Citrix/Xen-related posts, one of them about a DVD image no longer being available, so I disabled the DVD, but that also made no difference. There is more than enough storage available on the receiving SR: more than double what is needed.

But now it gets interesting. When I try to start the VM again, I get this:

vm.start { "id": "afe623be-5451-fd48-3f24-60120e53f5ab", "bypassMacAddressesCheck": false, "force": false } { "code": "NO_HOSTS_AVAILABLE", "params": [], "call": { "method": "VM.start", "params": [ "OpaqueRef:3947721f-7307-4560-aa59-dec8a8e26bfb", false, false ] }, "message": "NO_HOSTS_AVAILABLE()", "name": "XapiError", "stack": "XapiError: NO_HOSTS_AVAILABLE() at Function.wrap (/opt/xo/xo-builds/xen-orchestra-202111061645/packages/xen-api/src/_XapiError.js:16:12) at /opt/xo/xo-builds/xen-orchestra-202111061645/packages/xen-api/src/transports/json-rpc.js:41:27 at AsyncResource.runInAsyncScope (async_hooks.js:197:9) at cb (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/util.js:355:42) at tryCatcher (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/util.js:16:23) at Promise._settlePromiseFromHandler (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/promise.js:547:31) at Promise._settlePromise (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/promise.js:604:18) at Promise._settlePromise0 (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/promise.js:649:10) at Promise._settlePromises (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/promise.js:729:18) at _drainQueueStep (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/async.js:93:12) at _drainQueue (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/async.js:86:9) at Async._drainQueues (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/async.js:102:5) at Immediate.Async.drainQueues [as _onImmediate] (/opt/xo/xo-builds/xen-orchestra-202111061645/node_modules/bluebird/js/release/async.js:15:14) at processImmediate (internal/timers.js:464:21) at process.topLevelDomainCallback (domain.js:152:15) at process.callbackTrampoline (internal/async_hooks.js:128:24)" }

Setting it back to the 'original' `mac_seed` value does not let me start the VM either, although with that value I do get the 'duplicate' error on VM migration. When I start it via xe I get:

`xe vm-start vm=Win10vm`

There are no suitable hosts to start this VM on.
The following table provides per-host reasons for why the VM could not be started:
xen2 : Cannot start here [Not enough free memory]
There were no servers available to complete the specified operation.

There is enough free memory (9 GB available, 6 GB requested). Even freeing up 15 GB of memory does not make a difference. Same errors in XOA and via xe.

Any other suggestions?

-

- So it seems that the MAC seed was the initial issue?
- Can you display the output of `xe vm-param-list uuid=<VM UUID>`?

-

@olivierlambert Here you go

snapshot-time ( RO): 19700101T00:00:00Z snapshot-info ( RO): parent ( RO): <not in database> children ( RO): is-control-domain ( RO): false power-state ( RO): halted memory-actual ( RO): 6442455040 memory-target ( RO): 0 memory-overhead ( RO): 54525952 memory-static-max ( RW): 6442450944 memory-dynamic-max ( RW): 6442450944 memory-dynamic-min ( RW): 6442450944 memory-static-min ( RW): 6442450944 suspend-VDI-uuid ( RW): <not in database> suspend-SR-uuid ( RW): <not in database> VCPUs-params (MRW): VCPUs-max ( RW): 2 VCPUs-at-startup ( RW): 2 actions-after-shutdown ( RW): Destroy actions-after-reboot ( RW): Restart actions-after-crash ( RW): Restart console-uuids (SRO): hvm ( RO): false platform (MRW): timeoffset: 3570; device-model: qemu-upstream-compat; nx: true; acpi: 1; apic: true; pae: true; hpet: true; viridian: true allowed-operations (SRO): changing_NVRAM; changing_dynamic_range; changing_shadow_memory; changing_static_range; make_into_template; migrate_send; destroy; export; start_on; start; clone; copy; snapshot current-operations (SRO): blocked-operations (MRW): allowed-VBD-devices (SRO): 2; 4; 5; 6; 7; 8; 9; 10; 11; 12; 13; 14; 15; 16; 17; 18; 19; 20; 21; 22; 23; 24; 25; 26; 27; 28; 29; 30; 31; 32; 33; 34; 35; 36; 37; 38; 39; 40; 41; 42; 43; 44; 45; 46; 47; 48; 49; 50; 51; 52; 53; 54; 55; 56; 57; 58; 59; 60; 61; 62; 63; 64; 65; 66; 67; 68; 69; 70; 71; 72; 73; 74; 75; 76; 77; 78; 79; 80; 81; 82; 83; 84; 85; 86; 87; 88; 89; 90; 91; 92; 93; 94; 95; 96; 97; 98; 99; 100; 101; 102; 103; 104; 105; 106; 107; 108; 109; 110; 111; 112; 113; 114; 115; 116; 117; 118; 119; 120; 121; 122; 123; 124; 125; 126; 127; 128; 129; 130; 131; 132; 133; 134; 135; 136; 137; 138; 139; 140; 141; 142; 143; 144; 145; 146; 147; 148; 149; 150; 151; 152; 153; 154; 155; 156; 157; 158; 159; 160; 161; 162; 163; 164; 165; 166; 167; 168; 169; 170; 171; 172; 173; 174; 175; 176; 177; 178; 179; 180; 181; 182; 183; 184; 185; 186; 187; 188; 189; 190; 191; 192; 193; 194; 195; 196; 197; 198; 199; 
200; 201; 202; 203; 204; 205; 206; 207; 208; 209; 210; 211; 212; 213; 214; 215; 216; 217; 218; 219; 220; 221; 222; 223; 224; 225; 226; 227; 228; 229; 230; 231; 232; 233; 234; 235; 236; 237; 238; 239; 240; 241; 242; 243; 244; 245; 246; 247; 248; 249; 250; 251; 252; 253; 254 allowed-VIF-devices (SRO): 1; 2; 3; 4; 5; 6 possible-hosts ( RO): domain-type ( RW): hvm current-domain-type ( RO): unspecified HVM-boot-policy ( RW): BIOS order HVM-boot-params (MRW): order: cnd HVM-shadow-multiplier ( RW): 1.000 PV-kernel ( RW): PV-ramdisk ( RW): PV-args ( RW): PV-legacy-args ( RW): PV-bootloader ( RW): PV-bootloader-args ( RW): last-boot-CPU-flags ( RO): vendor: GenuineIntel; features: 1fcbfbff-f7fa3223-2d93fbff-00000423-00000001-000007ab-00000000-00000000-00001000-9c000400-00000000-00000000-00000000-00000000-00000000 last-boot-record ( RO): '{"xen_platform":[1,1],"pv_drivers_detected":true,"pci_power_mgmt":false,"pci_msitranslate":true,"qemu_vifs":[],"qemu_vbds":[],"suspend_memory_bytes":8589934592,"original_profile":"Qemu_upstream_compat","profile":"Qemu_upstream_compat","nested_virt":false,"nomigrate":false,"domain_config":["X86",{"emulation_flags":["X86_EMU_LAPIC","X86_EMU_HPET","X86_EMU_PM","X86_EMU_RTC","X86_EMU_IOAPIC","X86_EMU_PIC","X86_EMU_VGA","X86_EMU_IOMMU","X86_EMU_PIT","X86_EMU_USE_PIRQ"]}],"last_start_time":1603780055.119536,"ty":["HVM",{"firmware":"Bios","qemu_stubdom":false,"qemu_disk_cmdline":false,"boot_order":"cdn","pci_passthrough":false,"pci_emulations":[],"serial":"pty","acpi":true,"video":"Cirrus","video_mib":4,"timeoffset":"3585","shadow_multiplier":1.0,"hap":true}],"build_info":{"has_hard_affinity":false,"priv":["BuildHVM",{"video_mib":4,"shadow_multiplier":1.0}],"vcpus":2,"kernel":"/usr/libexec/xen/boot/hvmloader","memory_target":8388608,"memory_max":8388608},"version":2}' resident-on ( RO): <not in database> affinity ( RW): <not in database> other-config (MRW): mac_seed: 5e88eb6a-d680-c47f-a94a-028886971ba4; auto_poweron: true; vgpu_pci: ; 
base_template_name: Other install media; install-methods: cdrom dom-id ( RO): -1 recommendations ( RO): <restrictions><restriction field="memory-static-max" max="137438953472" /><restriction field="vcpus-max" max="32" /><restriction property="number-of-vbds" max="255" /><restriction property="number-of-vifs" max="7" /><restriction field="has-vendor-device" value="false" /></restrictions> xenstore-data (MRW): vm-data/mmio-hole-size: 268435456; vm-data: ha-always-run ( RW) [DEPRECATED]: false ha-restart-priority ( RW): blobs ( RO): start-time ( RO): 19700101T00:00:00Z install-time ( RO): 19700101T00:00:00Z VCPUs-number ( RO): 0 VCPUs-utilisation (MRO): os-version (MRO): name: Microsoft Windows 10 Pro|C:\WINDOWS|\Device\Harddisk0\Partition2; distro: windows; major: 6; minor: 2; spmajor: 0; spminor: 0 PV-drivers-version (MRO): major: 0; minor: 0; micro: 0; build: 0 PV-drivers-up-to-date ( RO) [DEPRECATED]: true memory (MRO): disks (MRO): VBDs (SRO): ac9e1b28-f576-b83d-c7ca-8ce097e3b30e; 9177269c-442b-24be-174e-25ba7ba20846; 3adc01b3-e9a7-42d8-8375-7380ef9c2791 networks (MRO): 0/ip: 172.25.10.211; 0/ipv4/0: 172.25.10.211 PV-drivers-detected ( RO): true other (MRO): feature-static-ip-setting: 1; feature-ts: 1; feature-ts2: 1; feature-xs-batcmd: 1; feature-setcomputername: 1; error: CreateProcessAsUser : 2 failed.; feature-s4: 1; feature-s3: 1; feature-reboot: 1; feature-poweroff: 1; feature-balloon: 1; feature-suspend: 1; has-vendor-device: 0; platform-feature-xs_reset_watches: 1; platform-feature-multiprocessor-suspend: 1; data-ts: 1 live ( RO): true guest-metrics-last-updated ( RO): 20211114T22:16:45Z can-use-hotplug-vbd ( RO): unspecified can-use-hotplug-vif ( RO): true cooperative ( RO) [DEPRECATED]: true tags (SRW): appliance ( RW): <not in database> snapshot-schedule ( RW): <not in database> is-vmss-snapshot ( RO): false start-delay ( RW): 0 shutdown-delay ( RW): 0 order ( RW): 0 version ( RO): 0 generation-id ( RO): 2464683622889442979:3979947479560168523 
hardware-platform-version ( RO): 0 has-vendor-device ( RW): false requires-reboot ( RO): false reference-label ( RO): bios-strings (MRO): bios-vendor: Xen; bios-version: ; system-manufacturer: Xen; system-product-name: HVM domU; system-version: ; system-serial-number: ; baseboard-manufacturer: ; baseboard-product-name: ; baseboard-version: ; baseboard-serial-number: ; baseboard-asset-tag: ; baseboard-location-in-chassis: ; enclosure-asset-tag: ; hp-rombios: ; oem-1: Xen; oem-2: MS_VM_CERT/SHA1/bdbeb6e0a816d43fa6d3fe8aaef04c2bad9d3e3d

-

Okay, and on the host in question, can you post the output of `xe host-param-list`?

-

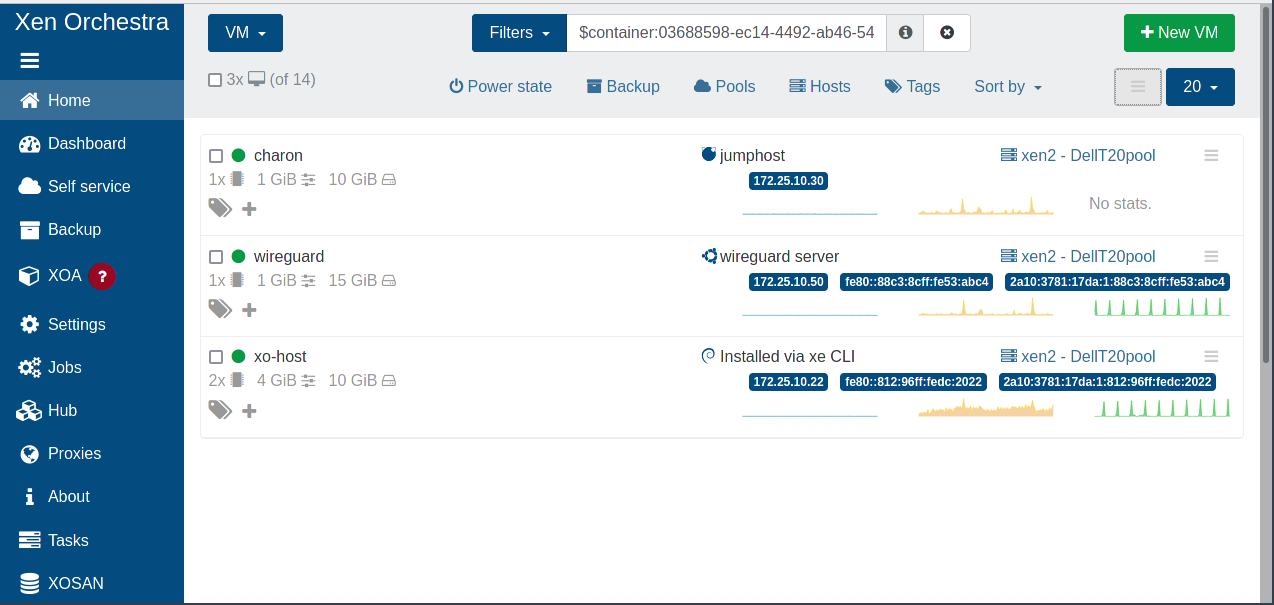

uid ( RO) : 03688598-ec14-4492-ab46-5424dcee8e9f name-label ( RW): xen2 name-description ( RW): Dell T20 allowed-operations (SRO): VM.migrate; provision; VM.resume; evacuate; VM.start current-operations (SRO): enabled ( RO): true display ( RO): enabled API-version-major ( RO): 2 API-version-minor ( RO): 16 API-version-vendor ( RO): XenSource API-version-vendor-implementation (MRO): logging (MRW): suspend-image-sr-uuid ( RW): a610de27-9c64-ee06-a8fd-4e1d1c7768ab crash-dump-sr-uuid ( RW): a610de27-9c64-ee06-a8fd-4e1d1c7768ab software-version (MRO): product_version: 8.2.0; product_version_text: 8.2; product_version_text_short: 8.2; platform_name: XCP; platform_version: 3.2.0; product_brand: XCP-ng; build_number: release/stockholm/master/7; hostname: localhost; date: 2021-05-20; dbv: 0.0.1; xapi: 1.20; xen: 4.13.1-9.12.1; linux: 4.19.0+1; xencenter_min: 2.16; xencenter_max: 2.16; network_backend: openvswitch; db_schema: 5.602 capabilities (SRO): xen-3.0-x86_64; xen-3.0-x86_32p; hvm-3.0-x86_32; hvm-3.0-x86_32p; hvm-3.0-x86_64; other-config (MRW): agent_start_time: 1636223713.; boot_time: 1632048708.; rpm_patch_installation_time: 1632048285.199; iscsi_iqn: iqn.2020-07.com.example:67db4a3c cpu_info (MRO): cpu_count: 4; socket_count: 1; vendor: GenuineIntel; speed: 3192.841; modelname: Intel(R) Xeon(R) CPU E3-1225 v3 @ 3.20GHz; family: 6; model: 60; stepping: 3; flags: fpu de tsc msr pae mce cx8 apic sep mca cmov pat clflush acpi mmx fxsr sse sse2 ss ht syscall nx rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid pn -00000000-00000000-00000000-00000000; features_hvm: 1fcbfbff-f7fa3223-2d93fbff-00000423-00000001-000007ab-00000000-00000000-00001000-9c000400-00000000-00000000-00000000-00000000-00000000; features_hvm_host: 1fcbfbff-f7fa3223-2d93fbff-00000423-00000001-000007ab-00000000-00000000-00001000-9c000400-00000000-00000000-00000000-00000000-00000000; features_pv_host: 
1fc9cbf5-f6f83203-2991cbf5-00000023-00000001-00000329-00000000-00000000-00001000-8c000400-00000000-00000000-00000000-00000000-00000000 chipset-info (MRO): iommu: true hostname ( RO): xen2 address ( RO): 172.25.10.12 supported-bootloaders (SRO): pygrub; eliloader blobs ( RO): memory-overhead ( RO): 621060096 memory-total ( RO): 25673416704 memory-free ( RO): 16197537792 memory-free-computed ( RO): 3970736128 host-metrics-live ( RO): true patches (SRO) [DEPRECATED]: updates (SRO): ha-statefiles ( RO): ha-network-peers ( RO): external-auth-type ( RO): external-auth-service-name ( RO): external-auth-configuration (MRO): edition ( RO): xcp-ng license-server (MRO): address: localhost; port: 27000 power-on-mode ( RO): power-on-config (MRO): local-cache-sr ( RO): <not in database> tags (SRW): ssl-legacy ( RW): false guest_VCPUs_params (MRW): virtual-hardware-platform-versions (SRO): 0; 1; 2 control-domain-uuid ( RO): 66c5258b-6429-4658-b31c-06ccd0f1896d resident-vms (SRO): 90ee64f7-9a07-fd83-033a-10183b98a9a6; c318a4e3-a14d-01ca-b2c4-df11c1f9d9b8; 66c5258b-6429-4658-b31c-06ccd0f1896d; 49c86f89-eabb-bd68-dc79-387434bdb899 updates-requiring-reboot (SRO): features (SRO): iscsi_iqn ( RW): iqn.2020-07.com.example:67db4a3c multipathing ( RW): false

-

That's weird: you have a big difference between `memory-free` and `memory-free-computed`. In other words, you don't have enough free memory (as computed) to boot the VM right now.

Edit: maybe you have dynamic memory on some VMs, using more than you think.
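
The gap can be checked with a little arithmetic on the values posted above. This Python sketch assumes a simplified start check, namely that the VM's `memory-static-max` plus its `memory-overhead` must fit in the host's free memory; xapi's real accounting is more detailed, so treat this as an approximation.

```python
GIB = 1024 ** 3

# Byte values copied from the xen2 host-param-list and the VM param list above.
HOST_MEMORY_FREE = 16197537792          # memory-free
HOST_MEMORY_FREE_COMPUTED = 3970736128  # memory-free-computed
VM_STATIC_MAX = 6442450944              # memory-static-max (6 GiB, static = dynamic)
VM_OVERHEAD = 54525952                  # memory-overhead


def fits(free_bytes, static_max, overhead):
    """Simplified start check: the VM's whole footprint must fit in free memory."""
    return static_max + overhead <= free_bytes


needed = VM_STATIC_MAX + VM_OVERHEAD
shortfall = needed - HOST_MEMORY_FREE_COMPUTED

print(f"needed:               {needed / GIB:.2f} GiB")
print(f"memory-free:          {HOST_MEMORY_FREE / GIB:.2f} GiB, fits: "
      f"{fits(HOST_MEMORY_FREE, VM_STATIC_MAX, VM_OVERHEAD)}")
print(f"memory-free-computed: {HOST_MEMORY_FREE_COMPUTED / GIB:.2f} GiB, fits: "
      f"{fits(HOST_MEMORY_FREE_COMPUTED, VM_STATIC_MAX, VM_OVERHEAD)}")
print(f"shortfall:            {shortfall / GIB:.2f} GiB")
```

With these numbers the VM needs about 6.05 GiB. `memory-free` (about 15.09 GiB) would hold it, but `memory-free-computed` (about 3.70 GiB) is roughly 2.35 GiB short, which is consistent with the "Not enough free memory" reason xe reported for xen2.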

-

Dynamic, yes, but the total is still very low. Everywhere I look, memory usage is just under 9 GB, including 2.2 GB for XCP-ng. The host has 24 GB. Should I just reboot to have a clean start?

-

Let's try a reboot, with no VMs up, except the one you want to boot.

-

@olivierlambert

I stopped all other VMs and then I was able to start the Win10vm again. Memory settings for this VM:

- Static: 6 GiB/6 GiB
- Dynamic: 6 GiB/6 GiB

I'll do a reboot anyway because I have never had this before; normally I have 4-5 VMs running with these dynamic memory settings and still a few GB of memory free. I can only guess that the migrations used memory that was not freed up.

I will reboot both hosts just to be sure, then check the `mac_seed`, set it to something different, and try again.

-

Thanks for your feedback

-

@olivierlambert Rebooted both hosts, everything came up as expected.

I stopped the Win10vm VM, changed the `mac_seed`, and started the migration.

Initially it looked OK, but it finished with an error:

`xe vm-migrate remote-master=172.25.10.11 remote-username=root remote-password=xxxxxx vif:f4b175c2-0082-212c-b9d9-bd616cd83d2c=a014b230-2db6-adb4-ba4f-0b1cc07fdcae vm=Win10vm`

Performing a Storage XenMotion migration. Your VM's VDIs will be migrated with the VM.
Will migrate to remote host: xen1, using remote network: Pool-wide network associated with eth0. Here is the VDI mapping:
VDI 4d4a809d-6801-4462-8e52-811882106821 -> SR 270f8f4a-a24c-ced6-99c7-9bc2ba5f5008
VDI 6a30ca10-a386-4e00-91aa-89c3e5bd43de -> SR 270f8f4a-a24c-ced6-99c7-9bc2ba5f5008
The VDI copy action has failed <extra>: End_of_file

Via XO:

vm.migrate { "vm": "afe623be-5451-fd48-3f24-60120e53f5ab", "mapVifsNetworks": { "f4b175c2-0082-212c-b9d9-bd616cd83d2c": "a014b230-2db6-adb4-ba4f-0b1cc07fdcae" }, "migrationNetwork": "a014b230-2db6-adb4-ba4f-0b1cc07fdcae", "sr": "270f8f4a-a24c-ced6-99c7-9bc2ba5f5008", "targetHost": "3b57d90b-983f-46bb-8f52-4319025d1182" } { "code": 21, "data": { "objectId": "afe623be-5451-fd48-3f24-60120e53f5ab", "code": "VDI_COPY_FAILED" }, "message": "operation failed", "name": "XoError", "stack": "XoError: operation failed at operationFailed (/opt/xo/xo-builds/xen-orchestra-202111061638/packages/xo-common/src/api-errors.js:21:32) at file:///opt/xo/xo-builds/xen-orchestra-202111061638/packages/xo-server/src/api/vm.mjs:482:15 at Object.migrate (file:///opt/xo/xo-builds/xen-orchestra-202111061638/packages/xo-server/src/api/vm.mjs:469:3) at Api.callApiMethod (file:///opt/xo/xo-builds/xen-orchestra-202111061638/packages/xo-server/src/xo-mixins/api.mjs:304:20)" }

However, I have successfully migrated the 'other' VM that was imported (and had the duplicate `mac_seed`), so both are now running on the same host. So besides the `mac_seed`, there seems to be something wrong with the imported Windows VM; it does indeed start horribly slowly.

-

OK, the underlying disk has problems. I found the kernel.log and it is filling up with read errors. That part is clear now.
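
When a failing disk is the suspect, a per-device count of kernel I/O errors helps confirm which one. A minimal Python sketch, assuming kernel messages that contain "I/O error" followed by "dev <name>" or "device <name>" (the exact wording varies between kernel versions, so the pattern is an assumption to adjust) and assuming the log lives at `/var/log/kern.log` in dom0. The sample lines in the usage are made up for illustration, not taken from the poster's host.

```python
import re
from collections import Counter

# Assumed message shapes, e.g. "... I/O error, dev sda, sector 123456" or
# "Buffer I/O error on device sda1, logical block 42". Adjust to your kernel.
IO_ERROR_RE = re.compile(r"I/O error.*?\bdev(?:ice)? (?P<dev>[\w-]+)")


def count_io_errors(lines):
    """Count kernel I/O error messages per block device."""
    counts = Counter()
    for line in lines:
        match = IO_ERROR_RE.search(line)
        if match:
            counts[match.group("dev")] += 1
    return counts


def scan_log(path="/var/log/kern.log"):  # assumed dom0 log location
    """Read a kernel log file and count I/O errors per device."""
    with open(path, errors="replace") as log:
        return count_io_errors(log)


# Hypothetical sample lines, for illustration only.
sample = [
    "kernel: print_req_error: I/O error, dev sda, sector 123456",
    "kernel: Buffer I/O error on device sda1, logical block 42, async page read",
    "kernel: e1000e: eth0 NIC Link is Up",
]
print(count_io_errors(sample))
```

A steadily growing count for one device is a stronger signal than any single message, since isolated errors can also come from transient events.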

So what led me to try to migrate the VM (slow boot/response) uncovered a `mac_seed` duplication, and we fixed that. I'm not sure if this was already fixed in the code last year. On to this problem: could I have seen the disk issue somewhere in the XO interface? In the logs I only see the higher-level issue of failed migrations. It's a learning experience anyway; maybe a mirrored disk could be an answer. I need to investigate.

-

Interesting to discover that `mac_seed` could cause this cryptic issue.

XO doesn't have any knowledge of the "state" of the virtual disk; there's no API to expose that (if we can even imagine a way to know that the virtual disk has a problem in the first place).