Migrating VM fails with DUPLICATE_VM error part2

-

Okay, and on the host in question, can you post the output of xe host-param-list?

-

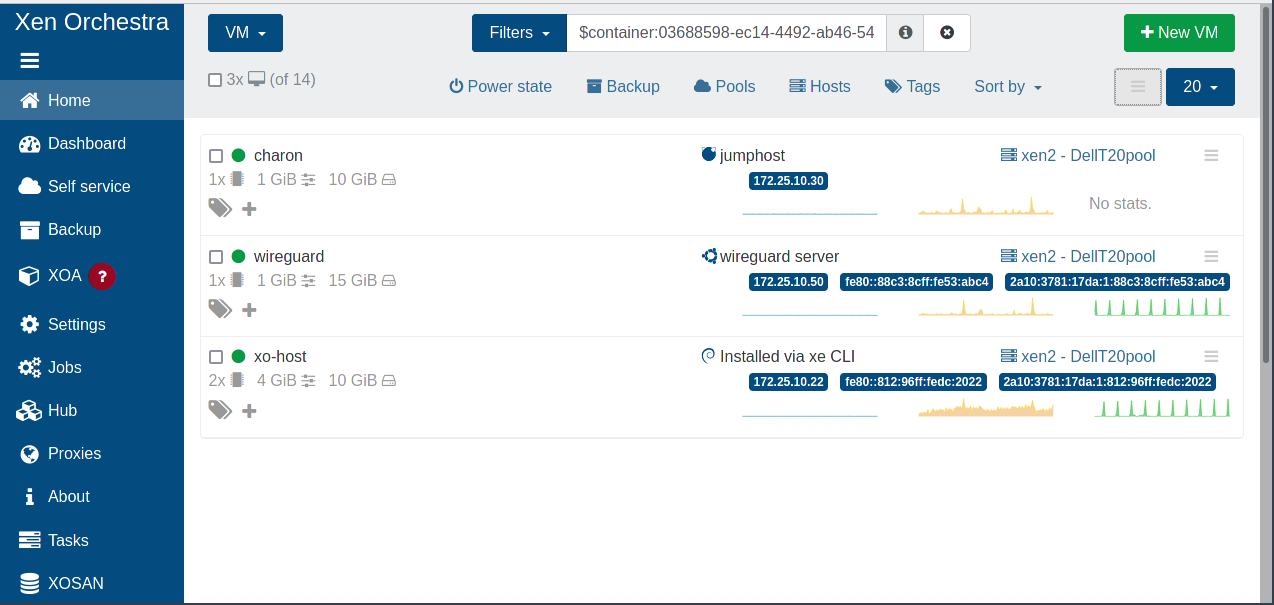

uuid ( RO): 03688598-ec14-4492-ab46-5424dcee8e9f
name-label ( RW): xen2
name-description ( RW): Dell T20
allowed-operations (SRO): VM.migrate; provision; VM.resume; evacuate; VM.start
current-operations (SRO):
enabled ( RO): true
display ( RO): enabled
API-version-major ( RO): 2
API-version-minor ( RO): 16
API-version-vendor ( RO): XenSource
API-version-vendor-implementation (MRO):
logging (MRW):
suspend-image-sr-uuid ( RW): a610de27-9c64-ee06-a8fd-4e1d1c7768ab
crash-dump-sr-uuid ( RW): a610de27-9c64-ee06-a8fd-4e1d1c7768ab
software-version (MRO): product_version: 8.2.0; product_version_text: 8.2; product_version_text_short: 8.2; platform_name: XCP; platform_version: 3.2.0; product_brand: XCP-ng; build_number: release/stockholm/master/7; hostname: localhost; date: 2021-05-20; dbv: 0.0.1; xapi: 1.20; xen: 4.13.1-9.12.1; linux: 4.19.0+1; xencenter_min: 2.16; xencenter_max: 2.16; network_backend: openvswitch; db_schema: 5.602
capabilities (SRO): xen-3.0-x86_64; xen-3.0-x86_32p; hvm-3.0-x86_32; hvm-3.0-x86_32p; hvm-3.0-x86_64;
other-config (MRW): agent_start_time: 1636223713.; boot_time: 1632048708.; rpm_patch_installation_time: 1632048285.199; iscsi_iqn: iqn.2020-07.com.example:67db4a3c
cpu_info (MRO): cpu_count: 4; socket_count: 1; vendor: GenuineIntel; speed: 3192.841; modelname: Intel(R) Xeon(R) CPU E3-1225 v3 @ 3.20GHz; family: 6; model: 60; stepping: 3; flags: fpu de tsc msr pae mce cx8 apic sep mca cmov pat clflush acpi mmx fxsr sse sse2 ss ht syscall nx rdtscp lm constant_tsc rep_good nopl nonstop_tsc cpuid pn -00000000-00000000-00000000-00000000; features_hvm: 1fcbfbff-f7fa3223-2d93fbff-00000423-00000001-000007ab-00000000-00000000-00001000-9c000400-00000000-00000000-00000000-00000000-00000000; features_hvm_host: 1fcbfbff-f7fa3223-2d93fbff-00000423-00000001-000007ab-00000000-00000000-00001000-9c000400-00000000-00000000-00000000-00000000-00000000; features_pv_host: 1fc9cbf5-f6f83203-2991cbf5-00000023-00000001-00000329-00000000-00000000-00001000-8c000400-00000000-00000000-00000000-00000000-00000000
chipset-info (MRO): iommu: true
hostname ( RO): xen2
address ( RO): 172.25.10.12
supported-bootloaders (SRO): pygrub; eliloader
blobs ( RO):
memory-overhead ( RO): 621060096
memory-total ( RO): 25673416704
memory-free ( RO): 16197537792
memory-free-computed ( RO): 3970736128
host-metrics-live ( RO): true
patches (SRO) [DEPRECATED]:
updates (SRO):
ha-statefiles ( RO):
ha-network-peers ( RO):
external-auth-type ( RO):
external-auth-service-name ( RO):
external-auth-configuration (MRO):
edition ( RO): xcp-ng
license-server (MRO): address: localhost; port: 27000
power-on-mode ( RO):
power-on-config (MRO):
local-cache-sr ( RO): <not in database>
tags (SRW):
ssl-legacy ( RW): false
guest_VCPUs_params (MRW):
virtual-hardware-platform-versions (SRO): 0; 1; 2
control-domain-uuid ( RO): 66c5258b-6429-4658-b31c-06ccd0f1896d
resident-vms (SRO): 90ee64f7-9a07-fd83-033a-10183b98a9a6; c318a4e3-a14d-01ca-b2c4-df11c1f9d9b8; 66c5258b-6429-4658-b31c-06ccd0f1896d; 49c86f89-eabb-bd68-dc79-387434bdb899
updates-requiring-reboot (SRO):
features (SRO):
iscsi_iqn ( RW): iqn.2020-07.com.example:67db4a3c
multipathing ( RW): false

-

That's weird: you have a big difference between memory-free and memory-free-computed, i.e. you don't have enough free memory (computed) to boot the VM right now.

edit: maybe you have dynamic memory for some VMs, using more than you think.
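For anyone reading along, here is a rough Python sketch of the arithmetic behind that remark. The three byte values are copied from the host-param-list output above, and the 6 GiB figure is the static maximum the Win10vm is reported to have later in this thread. The helper names (`to_gib`, `can_start`) are made up for illustration, and the check is deliberately simplified: it ignores per-VM overhead and whatever else XAPI takes into account.

```python
GIB = 1024 ** 3  # bytes per GiB


def to_gib(n_bytes: int) -> float:
    """Convert a byte count to GiB for display."""
    return n_bytes / GIB


def can_start(free_bytes: int, vm_static_max_bytes: int) -> bool:
    """Simplified check: is there at least as much free memory as the VM asks for?"""
    return free_bytes >= vm_static_max_bytes


# Values copied from the xe host-param-list output in this thread.
memory_total = 25673416704
memory_free = 16197537792
memory_free_computed = 3970736128

# Static maximum of the VM, as reported later in the thread (6 GiB).
vm_static_max = 6 * GIB

print(f"memory-total:         {to_gib(memory_total):.2f} GiB")
print(f"memory-free:          {to_gib(memory_free):.2f} GiB")
print(f"memory-free-computed: {to_gib(memory_free_computed):.2f} GiB")
print("fits by memory-free:         ", can_start(memory_free, vm_static_max))
print("fits by memory-free-computed:", can_start(memory_free_computed, vm_static_max))
```

Going by memory-free (roughly 15.1 GiB) the VM would fit, but going by memory-free-computed (roughly 3.7 GiB) it would not, which matches the failed start.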

-

Dynamic, yes, but the total is still very low. Everywhere I look, memory usage is just under 9 GB, including 2.2 GB for xcp-ng. The host has 24 GB. Should I just reboot to have a clean start?

-

Let's try a reboot, with no VMs up, except the one you want to boot.

-

@olivierlambert

I stopped all other VMs and then I was able to start the Win10vm again. Memory settings for this VM:

Static: 6 GiB/6 GiB

Dynamic: 6 GiB/6 GiB

I'll do a reboot anyway because I have never had this before; normally I have 4-5 VMs running and a few GB of memory free with the dynamic memory settings. I can only guess that the migrations used memory that was not freed up.

I will reboot both hosts, just to be sure; check the mac_seed and set it to something different and try again.
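A small Python sketch of the "check the mac_seed and set it to something different" step, in case it helps someone else. It only covers the logic: grouping VMs by seed and generating a fresh value. The VM names and seed values below are hypothetical, and so are the function names. As far as I know the seed lives in the VM's other-config map, so it could be read with something like xe vm-param-get uuid=<vm-uuid> param-name=other-config param-key=mac_seed and written with xe vm-param-set uuid=<vm-uuid> other-config:mac_seed=<new-value>, but please treat those commands as an assumption and check them on your own pool.

```python
import uuid
from collections import defaultdict


def find_duplicate_mac_seeds(seeds_by_vm: dict[str, str]) -> dict[str, list[str]]:
    """Return every mac_seed shared by more than one VM, with the VM names using it."""
    vms_by_seed = defaultdict(list)
    for vm_name, seed in seeds_by_vm.items():
        vms_by_seed[seed].append(vm_name)
    return {
        seed: sorted(names)
        for seed, names in vms_by_seed.items()
        if len(names) > 1
    }


def new_mac_seed() -> str:
    """Generate a fresh random UUID string to use as a replacement seed."""
    return str(uuid.uuid4())


# Hypothetical inventory: VM name -> mac_seed (values invented for the example).
example_seeds = {
    "Win10vm": "11111111-1111-1111-1111-111111111111",
    "other-imported-vm": "11111111-1111-1111-1111-111111111111",
    "unrelated-vm": "22222222-2222-2222-2222-222222222222",
}

duplicates = find_duplicate_mac_seeds(example_seeds)
for seed, names in duplicates.items():
    print(f"mac_seed {seed} is shared by: {', '.join(names)}")
    print(f"  suggested replacement for one of them: {new_mac_seed()}")
```

Only one VM of each duplicate group needs a new seed; the others can keep theirs.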

-

Thanks for your feedback

-

@olivierlambert Rebooted both hosts, everything came up as expected.

Stopped the Win10vm VM, changed the mac_seed and started the migration.

Initially it looked OK, but it finished with an error:

xe vm-migrate remote-master=172.25.10.11 remote-username=root remote-password=xxxxxx vif:f4b175c2-0082-212c-b9d9-bd616cd83d2c=a014b230-2db6-adb4-ba4f-0b1cc07fdcae vm=Win10vm

Performing a Storage XenMotion migration. Your VM's VDIs will be migrated with the VM.

Will migrate to remote host: xen1, using remote network: Pool-wide network associated with eth0.

Here is the VDI mapping:

VDI 4d4a809d-6801-4462-8e52-811882106821 -> SR 270f8f4a-a24c-ced6-99c7-9bc2ba5f5008

VDI 6a30ca10-a386-4e00-91aa-89c3e5bd43de -> SR 270f8f4a-a24c-ced6-99c7-9bc2ba5f5008

The VDI copy action has failed

<extra>: End_of_file

Via XO:

vm.migrate

{ "vm": "afe623be-5451-fd48-3f24-60120e53f5ab", "mapVifsNetworks": { "f4b175c2-0082-212c-b9d9-bd616cd83d2c": "a014b230-2db6-adb4-ba4f-0b1cc07fdcae" }, "migrationNetwork": "a014b230-2db6-adb4-ba4f-0b1cc07fdcae", "sr": "270f8f4a-a24c-ced6-99c7-9bc2ba5f5008", "targetHost": "3b57d90b-983f-46bb-8f52-4319025d1182" }

{ "code": 21, "data": { "objectId": "afe623be-5451-fd48-3f24-60120e53f5ab", "code": "VDI_COPY_FAILED" }, "message": "operation failed", "name": "XoError", "stack": "XoError: operation failed at operationFailed (/opt/xo/xo-builds/xen-orchestra-202111061638/packages/xo-common/src/api-errors.js:21:32) at file:///opt/xo/xo-builds/xen-orchestra-202111061638/packages/xo-server/src/api/vm.mjs:482:15 at Object.migrate (file:///opt/xo/xo-builds/xen-orchestra-202111061638/packages/xo-server/src/api/vm.mjs:469:3) at Api.callApiMethod (file:///opt/xo/xo-builds/xen-orchestra-202111061638/packages/xo-server/src/xo-mixins/api.mjs:304:20)" }

I have, however, successfully migrated the 'other' VM that was imported (and had the duplicate mac_seed), so both are now running on the same host.

So, next to the mac_seed, there seems to be something wrong with the imported Windows VM; it starts horribly slow indeed.

-

OK, the underlying disk has problems. I found the kernel.log and it is filling up with read errors. That part is clear now.

So what led me to try to migrate the VM (slow boot/response) uncovered a mac_seed duplication, and we fixed that. Not sure if this was already fixed in the code last year.

On to this problem: could I have seen the disk issue somewhere in the XO interface? In the logs I only see the higher-level issue of failed migrations. It's a learning experience anyway; maybe a mirrored disk could be an answer. Need to investigate.
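Since the read errors only showed up in the kernel log on the host, a crude way to spot them earlier is to count I/O error lines per device. The Python sketch below assumes the log lines contain the text "I/O error, dev <name>", which is what Linux block-layer messages commonly look like, but I have not checked it against the exact lines in this log. The function names and the `log_path` argument are hypothetical; point it at wherever the kernel log lives on your host.

```python
import re
from collections import Counter
from typing import Iterable

# Assumed message shape: "... I/O error, dev sdb, sector 12345 ..."
IO_ERROR = re.compile(r"I/O error, dev (\w+)")


def count_io_errors(lines: Iterable[str]) -> Counter:
    """Count lines that look like block-device I/O errors, grouped by device name."""
    counts = Counter()
    for line in lines:
        match = IO_ERROR.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def scan_log(log_path: str) -> Counter:
    """Read a log file line by line and count I/O errors per device."""
    with open(log_path, errors="replace") as handle:
        return count_io_errors(handle)
```

Calling `scan_log` on the kernel log and printing the result gives a per-device error count; anything above zero on the disk backing the SR would have been a hint long before the migration failed.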

-

Interesting to discover that mac_seed could cause this cryptic issue.

XO doesn't have any knowledge of the "state" of the virtual disk; there's no API to expose that (if we can even imagine a way to know that the virtual disk has a problem in the first place).

-

@olivierlambert Indeed. Learned a lot this week. Thanks for walking me through this.

Should I register an issue for the mac_seed duplication that is obviously caused by the export from esxi/import into xcp-ng? The MAC address duplication issue was fixed earlier, if I am not mistaken.

-

I'd like to see if we can reproduce this. If yes, then we need to be careful with this parameter on OVA import, indeed. Ping @Darkbeldin so we can discuss how to reproduce the problem first.

-

@olivierlambert Will take a look at it tomorrow morning

-

@darkbeldin Thanks! If I can test anything, let me know. Do note that I have removed the degraded disk from the host and said goodbye to three VMs that I could not copy/migrate/clone anymore. Not a big loss, this is a home lab, but I could not even copy them away to preserve them for later. I do have the other VM (I imported two last year from esxi).

-

@andres Hi Andres,

Still working on it at the moment.

I'll keep you updated when I have more info.

-

@andres OK, after discussing this with the XAPI team, the behavior is apparently intended: you should not have VMs with the same MAC_SEED on the same host.

The dev team will take a deeper look at this to see if the error could at least be reported clearly.

To go further, could you please tell us how you migrated your VMs from VMware?

Did you do it manually? Did you export from VMware and then import into XCP-ng?

-

@darkbeldin We are talking 15-18 months ago ... What I remember is a fairly standard export from esxi into (I believe) an OVA format and I imported that directly into xcp-ng. I don't remember anything complex other than having to redo the export a few times to get properly rid of vmware tools and some drivers.

Edit: I checked a few articles and it was probably the OVF format.

-

@andres Thanks will do some testing on my side.

-

@darkbeldin For the record, both vm's were running on the same esxi host when exported. This may or may not be a factor (I suspect it is).

-
