Delta Backup Showing Success No Delta Saved

-

I am running xo-server 5.98.0 & xo-web 5.99.0 built from sources and I have a bug in the Delta backups that I can reproduce. I have deleted the job and the all the related files & directories under /xo-vm-backups, created the job with the same parameters to try to rule out the job itself being the factor but the problem persists.

The backup job is a Delta backup that runs every 10 9,11,13,15,17,19 * * 1,2,3,4,5 and has a retention of 12, no health check, Full backup interval of 20 and a time out of 2 hrs but the job runs fast enough so that the timeout is not an issue. The remote target is a samba share.

When I purge the job & the folder, the create it again it works for a while then at some point the backup job still shows success but stops putting any data in xo-vmbackups/221c4e75-a85f-1810-3a04-b9ea61455b27

My last backup job delete and recreate of this delta backup was on July 5th 2022. Because the job shows success it's hard to know when it stopped but so I did a daily check to make sure the delta backups were there and up until this morning on July 28th they were there and working. They are now missing and there are no files in the 221c4e75-a85f-1810-3a04-b9ea61455b27 directory, no json files, just a sub directory called vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7 with nothing in it.

Here is the one one of success messages that does not really offer me much insight because it does not have an error and indicates the jobs working fine, says it transfered data, but there are no deltas of any other data in the directory.

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1658963400005", "jobId": "38c3b817-7e3a-4002-9a6a-c6114def4fc5", "jobName": "InvoiceNinja V4", "message": "backup", "scheduleId": "1cc8a8ad-44a6-46a2-8bab-313efb09f5a3", "start": 1658963400005, "status": "success", "infos": [ { "data": { "vms": [ "221c4e75-a85f-1810-3a04-b9ea61455b27" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "221c4e75-a85f-1810-3a04-b9ea61455b27" }, "id": "1658963400835", "message": "backup VM", "start": 1658963400835, "status": "success", "tasks": [ { "id": "1658963402162", "message": "clean-vm", "start": 1658963402162, "status": "success", "end": 1658963402301, "result": { "merge": false } }, { "id": "1658963403482", "message": "snapshot", "start": 1658963403482, "status": "success", "end": 1658963407616, "result": "af119325-bc04-de2d-6e25-cc83449ba14b" }, { "data": { "id": "a7420f96-4fe0-4dc5-943b-e09dd1fb06d1", "isFull": false, "type": "remote" }, "id": "1658963407617", "message": "export", "start": 1658963407617, "status": "success", "tasks": [ { "id": "1658963408744", "message": "transfer", "start": 1658963408744, "status": "success", "end": 1658963426470, "result": { "size": 722123264 } }, { "id": "1658963427334", "message": "clean-vm", "start": 1658963427334, "status": "success", "end": 1658963427442, "result": { "merge": true } } ], "end": 1658963427461 } ], "end": 1658963427462 } ], "end": 1658963427462 }All the other back ups that go to this same remote are working fine, both full and delta. But none of the other backups are set to retail more than 3 Delta copies so I am thinking that is where the issue may be. Let me know what other logs I can provide that might be helpful and now that I can reproduce this I will be doing some more frequent deltas in the lab to see if I can trigger this issue sooner than the few weeks it took to show up.

-

Hi @lawrencesystems ,

Do you use the new vhd directory format or the older vhd file for your remote ?

Can you send us the syslog of the last two days, especially the part concerning the xo-server service ?

Regards,

Florent

-

@florent

I am using the old VHD director format, I was not sure if I should or could switch an existing remote to the new format.Here is the complete syslog file https://www.dropbox.com/s/48jns9f1j9k02k1/XO syslog?dl=0

Here is a partial dump and I see the error messages in there, but not clear on the cause.

Jul 25 00:00:00 xo-pool-xen kernel: [55042.585675] Key type cifs.idmap registered Jul 25 00:00:00 xo-pool-xen kernel: [55042.586311] CIFS: No dialect specified on mount. Default has changed to a more secure dialect, SMB2.1 or later (e.g. SMB3.1.1), from CIFS (SMB1). To use the less secure SMB1 dialect to access old servers which do not support SMB3.1.1 (or even SMB3 or SMB2.1) specify vers=1.0 on mount. Jul 25 00:00:00 xo-pool-xen kernel: [55042.586315] CIFS: Attempting to mount \\192.168.10.225\production_backups Jul 25 00:00:01 xo-pool-xen systemd[1]: logrotate.service: Deactivated successfully. Jul 25 00:00:01 xo-pool-xen systemd[1]: Finished Rotate log files. Jul 25 00:00:01 xo-pool-xen systemd[1]: dpkg-db-backup.service: Deactivated successfully. Jul 25 00:00:01 xo-pool-xen systemd[1]: Finished Daily dpkg database backup service. Jul 25 05:52:31 xo-pool-xen systemd[1]: Starting Daily man-db regeneration... Jul 25 05:52:33 xo-pool-xen systemd[1]: man-db.service: Deactivated successfully. Jul 25 05:52:33 xo-pool-xen systemd[1]: Finished Daily man-db regeneration. Jul 25 06:06:31 xo-pool-xen systemd[1]: Starting Daily apt upgrade and clean activities... Jul 25 06:06:34 xo-pool-xen systemd[1]: apt-daily-upgrade.service: Deactivated successfully. Jul 25 06:06:34 xo-pool-xen systemd[1]: Finished Daily apt upgrade and clean activities. Jul 25 06:06:34 xo-pool-xen systemd[1]: apt-daily-upgrade.service: Consumed 1.907s CPU time. Jul 25 06:09:01 xo-pool-xen CRON[241482]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 06:17:01 xo-pool-xen CRON[242903]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 06:25:01 xo-pool-xen CRON[244330]: (root) CMD (if [ ! -d /run/systemd/system ] && [ -x /usr/libexec/ntpsec/rotate-stats ] ; then /usr/libexec/ntpsec/rotate-stats ; fi) Jul 25 06:25:01 xo-pool-xen CRON[244331]: (root) CMD (test -x /usr/sbin/anacron || { cd / && run-parts --report /etc/cron.daily; }) Jul 25 06:25:01 xo-pool-xen systemd[1]: Started Rotate ntpd stats. Jul 25 06:25:01 xo-pool-xen systemd[1]: ntpsec-rotate-stats.service: Deactivated successfully. Jul 25 06:39:01 xo-pool-xen CRON[246667]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 07:09:01 xo-pool-xen CRON[251986]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 07:17:01 xo-pool-xen CRON[253537]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 07:39:01 xo-pool-xen CRON[257453]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 08:09:01 xo-pool-xen CRON[262785]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 08:17:01 xo-pool-xen CRON[264307]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 08:24:00 xo-pool-xen kernel: [85281.249088] CIFS: Attempting to mount \\192.168.3.225\xen_lab_backups Jul 25 08:25:12 xo-pool-xen xo-server[673]: 2022-07-25T12:25:12.152Z xo:main INFO + Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:25:45 xo-pool-xen xo-server[673]: 2022-07-25T12:25:45.786Z xo:main INFO - Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:25:55 xo-pool-xen xo-server[673]: 2022-07-25T12:25:55.713Z xo:main INFO + Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:26:12 xo-pool-xen xo-server[673]: 2022-07-25T12:26:12.473Z xo:main INFO - Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:39:01 xo-pool-xen CRON[268271]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 08:45:48 xo-pool-xen xo-server[673]: 2022-07-25T12:45:48.539Z xo:api WARN redacted@lawrencesystems.com | host.set(...) [6s] =!> XapiError: SR_BACKEND_FAILURE_74(, NFS unmount error [opterr=error is 32], ) Jul 25 08:47:40 xo-pool-xen xo-server[673]: 2022-07-25T12:47:40.473Z xo:api WARN redacted@lawrencesystems.com | host.set(...) [7s] =!> XapiError: SR_BACKEND_FAILURE_74(, NFS unmount error [opterr=error is 32], ) Jul 25 08:49:24 xo-pool-xen xo-server[673]: _watchEvents Error: socket hang up Jul 25 08:49:24 xo-pool-xen xo-server[673]: at connResetException (node:internal/errors:692:14) Jul 25 08:49:24 xo-pool-xen xo-server[673]: at TLSSocket.socketOnEnd (node:_http_client:478:23) Jul 25 08:49:24 xo-pool-xen xo-server[673]: at TLSSocket.emit (node:events:539:35) Jul 25 08:49:24 xo-pool-xen xo-server[673]: at TLSSocket.patchedEmit [as emit] (/etc/xo/xo-builds/xen-orchestra-202207010714/@xen-orchestra/log/configure.js:120:17) Jul 25 08:49:24 xo-pool-xen xo-server[673]: at endReadableNT (node:internal/streams/readable:1345:12) Jul 25 08:49:24 xo-pool-xen xo-server[673]: at processTicksAndRejections (node:internal/process/task_queues:83:21) { Jul 25 08:49:24 xo-pool-xen xo-server[673]: code: 'ECONNRESET', Jul 25 08:49:24 xo-pool-xen xo-server[673]: url: 'https://192.168.3.27/jsonrpc', Jul 25 08:49:24 xo-pool-xen xo-server[673]: call: { Jul 25 08:49:24 xo-pool-xen xo-server[673]: method: 'event.from', Jul 25 08:49:24 xo-pool-xen xo-server[673]: params: [ [Array], '00000000000011465031,00000000000011460820', 60.1 ] Jul 25 08:49:24 xo-pool-xen xo-server[673]: } Jul 25 08:49:24 xo-pool-xen xo-server[673]: } Jul 25 08:50:43 xo-pool-xen xo-server[673]: _watchEvents Error: connect EHOSTUNREACH 192.168.3.27:443 Jul 25 08:50:43 xo-pool-xen xo-server[673]: at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1187:16) Jul 25 08:50:43 xo-pool-xen xo-server[673]: at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) { Jul 25 08:50:43 xo-pool-xen xo-server[673]: errno: -113, Jul 25 08:50:43 xo-pool-xen xo-server[673]: code: 'EHOSTUNREACH', Jul 25 08:50:43 xo-pool-xen xo-server[673]: syscall: 'connect', Jul 25 08:50:43 xo-pool-xen xo-server[673]: address: '192.168.3.27', Jul 25 08:50:43 xo-pool-xen xo-server[673]: port: 443, Jul 25 08:50:43 xo-pool-xen xo-server[673]: url: 'https://192.168.3.27/jsonrpc', Jul 25 08:50:43 xo-pool-xen xo-server[673]: call: { Jul 25 08:50:43 xo-pool-xen xo-server[673]: method: 'event.from', Jul 25 08:50:43 xo-pool-xen xo-server[673]: params: [ [Array], '00000000000011465031,00000000000011460820', 60.1 ] Jul 25 08:50:43 xo-pool-xen xo-server[673]: } Jul 25 08:50:43 xo-pool-xen xo-server[673]: } Jul 25 08:51:46 xo-pool-xen xo-server[673]: _watchEvents Error: connect EHOSTUNREACH 192.168.3.27:443 Jul 25 08:51:46 xo-pool-xen xo-server[673]: at TCPConnectWrap.afterConnect [as oncomplete] (node:net:1187:16) Jul 25 08:51:46 xo-pool-xen xo-server[673]: at TCPConnectWrap.callbackTrampoline (node:internal/async_hooks:130:17) { Jul 25 08:51:46 xo-pool-xen xo-server[673]: errno: -113, Jul 25 08:51:46 xo-pool-xen xo-server[673]: code: 'EHOSTUNREACH', Jul 25 08:51:46 xo-pool-xen xo-server[673]: syscall: 'connect', Jul 25 08:51:46 xo-pool-xen xo-server[673]: address: '192.168.3.27', Jul 25 08:51:46 xo-pool-xen xo-server[673]: port: 443, Jul 25 08:51:46 xo-pool-xen xo-server[673]: url: 'https://192.168.3.27/jsonrpc', Jul 25 08:51:46 xo-pool-xen xo-server[673]: call: { Jul 25 08:51:46 xo-pool-xen xo-server[673]: method: 'event.from', Jul 25 08:51:46 xo-pool-xen xo-server[673]: params: [ [Array], '00000000000011465031,00000000000011460820', 60.1 ] Jul 25 08:51:46 xo-pool-xen xo-server[673]: } Jul 25 08:51:46 xo-pool-xen xo-server[673]: } Jul 25 08:52:47 xo-pool-xen xo-server[673]: _watchEvents Error: write ECONNRESET Jul 25 08:52:47 xo-pool-xen xo-server[673]: at WriteWrap.onWriteComplete [as oncomplete] (node:internal/stream_base_commons:94:16) Jul 25 08:52:47 xo-pool-xen xo-server[673]: at WriteWrap.callbackTrampoline (node:internal/async_hooks:130:17) { Jul 25 08:52:47 xo-pool-xen xo-server[673]: errno: -104, Jul 25 08:52:47 xo-pool-xen xo-server[673]: code: 'ECONNRESET', Jul 25 08:52:47 xo-pool-xen xo-server[673]: syscall: 'write', Jul 25 08:52:47 xo-pool-xen xo-server[673]: url: 'https://192.168.3.27/jsonrpc', Jul 25 08:52:47 xo-pool-xen xo-server[673]: call: { Jul 25 08:52:47 xo-pool-xen xo-server[673]: method: 'event.from', Jul 25 08:52:47 xo-pool-xen xo-server[673]: params: [ [Array], '00000000000011465031,00000000000011460820', 60.1 ] Jul 25 08:52:47 xo-pool-xen xo-server[673]: } Jul 25 08:52:47 xo-pool-xen xo-server[673]: } Jul 25 08:53:35 xo-pool-xen xo-server[673]: 2022-07-25T12:53:35.136Z xo:api WARN redacted@lawrencesystems.com | host.getSchedulerGranularity(...) [11ms] =!> XapiError: HOST_OFFLINE(OpaqueRef:1e0985e9-fc80-451b-ac44-11499c3432f5) Jul 25 08:53:35 xo-pool-xen xo-server[673]: 2022-07-25T12:53:35.205Z xo:api WARN redacted@lawrencesystems.com | host.isHyperThreadingEnabled(...) [7ms] =!> XapiError: HOST_OFFLINE(OpaqueRef:1e0985e9-fc80-451b-ac44-11499c3432f5) Jul 25 08:54:02 xo-pool-xen xo-server[673]: 2022-07-25T12:54:02.938Z xo:main INFO + Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:54:03 xo-pool-xen xo-server[673]: 2022-07-25T12:54:03.842Z xo:main INFO - Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:55:03 xo-pool-xen xo-server[673]: 2022-07-25T12:55:03.789Z xo:main INFO + Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:55:05 xo-pool-xen xo-server[673]: 2022-07-25T12:55:05.636Z xo:main INFO - Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:55:16 xo-pool-xen xo-server[673]: 2022-07-25T12:55:16.718Z xo:main INFO + Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:55:22 xo-pool-xen xo-server[673]: 2022-07-25T12:55:22.455Z xo:main INFO - Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:55:26 xo-pool-xen xo-server[673]: 2022-07-25T12:55:26.423Z xo:main INFO + Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:55:56 xo-pool-xen xo-server[673]: 2022-07-25T12:55:56.139Z xo:main INFO - Console proxy (redacted@lawrencesystems.com - ::ffff:192.168.3.1) Jul 25 08:59:09 xo-pool-xen systemd[1]: Starting Cleanup of Temporary Directories... Jul 25 08:59:09 xo-pool-xen systemd[1]: systemd-tmpfiles-clean.service: Deactivated successfully. Jul 25 08:59:09 xo-pool-xen systemd[1]: Finished Cleanup of Temporary Directories. Jul 25 09:09:01 xo-pool-xen CRON[273208]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 09:10:00 xo-pool-xen kernel: [88041.713187] CIFS: Attempting to mount \\192.168.10.225\production_backups Jul 25 09:17:01 xo-pool-xen CRON[274578]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 09:39:01 xo-pool-xen CRON[278433]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 10:09:01 xo-pool-xen CRON[283466]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 10:17:01 xo-pool-xen CRON[284691]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 10:39:01 xo-pool-xen CRON[288197]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 10:57:01 xo-pool-xen CRON[291081]: (root) CMD ( test -x /etc/cron.daily/popularity-contest && /etc/cron.daily/popularity-contest --crond) Jul 25 11:09:01 xo-pool-xen CRON[293029]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 11:10:01 xo-pool-xen kernel: [95241.714544] CIFS: Attempting to mount \\192.168.10.225\production_backups Jul 25 11:17:01 xo-pool-xen CRON[294269]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 11:39:01 xo-pool-xen CRON[297627]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 12:09:01 xo-pool-xen CRON[302778]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 12:17:01 xo-pool-xen CRON[304032]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 12:39:01 xo-pool-xen CRON[307529]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 13:09:01 xo-pool-xen CRON[312724]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 13:10:00 xo-pool-xen kernel: [102441.270693] CIFS: Attempting to mount \\192.168.10.225\production_backups Jul 25 13:17:01 xo-pool-xen CRON[314003]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 13:39:01 xo-pool-xen CRON[317403]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 14:09:01 xo-pool-xen CRON[322228]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 14:17:01 xo-pool-xen CRON[323473]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 14:39:01 xo-pool-xen CRON[326974]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 15:08:09 xo-pool-xen systemd[1]: Starting Daily apt download activities... Jul 25 15:08:40 xo-pool-xen systemd[1]: apt-daily.service: Deactivated successfully. Jul 25 15:08:40 xo-pool-xen systemd[1]: Finished Daily apt download activities. Jul 25 15:08:40 xo-pool-xen systemd[1]: apt-daily.service: Consumed 36.407s CPU time. Jul 25 15:09:01 xo-pool-xen CRON[332394]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 15:10:00 xo-pool-xen kernel: [109641.083009] CIFS: Attempting to mount \\192.168.10.225\production_backups Jul 25 15:17:01 xo-pool-xen CRON[333670]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 15:39:01 xo-pool-xen CRON[336995]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 16:09:01 xo-pool-xen CRON[341866]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 16:17:01 xo-pool-xen CRON[343113]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 16:39:01 xo-pool-xen CRON[346539]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 17:09:01 xo-pool-xen CRON[351494]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 17:10:00 xo-pool-xen kernel: [116840.897246] CIFS: Attempting to mount \\192.168.10.225\production_backups Jul 25 17:17:01 xo-pool-xen CRON[352779]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 17:39:01 xo-pool-xen CRON[356321]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 18:09:01 xo-pool-xen CRON[361020]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 18:17:01 xo-pool-xen CRON[362480]: (root) CMD (cd / && run-parts --report /etc/cron.hourly) Jul 25 18:39:01 xo-pool-xen CRON[366289]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 19:09:01 xo-pool-xen CRON[371461]: (root) CMD ( [ -x /usr/lib/php/sessionclean ] && if [ ! -d /run/systemd/system ]; then /usr/lib/php/sessionclean; fi) Jul 25 19:10:00 xo-pool-xen kernel: [124040.716694] CIFS: Attempting to mount \\192.168.10.225\production_backups Jul 25 19:10:28 xo-pool-xen xo-server[371693]: 2022-07-25T23:10:28.450Z xo:backups:mergeWorker INFO starting Jul 25 19:10:42 xo-pool-xen xo-server[371693]: 2022-07-25T23:10:42.398Z xo:backups:mergeWorker WARN failure handling task { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: error: [Error: EACCES: permission denied, rename '/run/xo-server/mounts/a7420f96-4fe0-4dc5-943b-e09dd1fb06d1/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220721T231008Z.vhd' -> '/run/xo-server/mounts/a7420f96-4fe0-4dc5-943b-e09dd1fb06d1/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T131008Z.vhd'] { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: errno: -13, Jul 25 19:10:42 xo-pool-xen xo-server[371693]: code: 'EACCES', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: syscall: 'rename', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: path: '/run/xo-server/mounts/a7420f96-4fe0-4dc5-943b-e09dd1fb06d1/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220721T231008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: dest: '/run/xo-server/mounts/a7420f96-4fe0-4dc5-943b-e09dd1fb06d1/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T131008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T131008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T151008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T151008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T171008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T171008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T191008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T191008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T211008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T211008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T231008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T231008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T131008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T131008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T151008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T151008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T171008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T171008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T191008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T191008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T211008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent VHD is missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: parent: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T211008Z.vhd', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhdPath: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T231008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220722T131008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T131008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220722T171008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T171008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220722T151008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T151008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220722T191008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T191008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220722T211008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T211008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220722T231008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220722T231008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220725T131008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T131008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220725T151008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T151008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220725T191008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T191008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220725T171008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T171008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220725T211008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T211008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: some metadata VHDs are missing { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: json: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/20220725T231008Z.json', Jul 25 19:10:42 xo-pool-xen xo-server[371693]: missingVhds: [ Jul 25 19:10:42 xo-pool-xen xo-server[371693]: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220725T231008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: ] Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:10:42 xo-pool-xen xo-server[371693]: unused VHD { Jul 25 19:10:42 xo-pool-xen xo-server[371693]: vhd: '/xo-vm-backups/221c4e75-a85f-1810-3a04-b9ea61455b27/vdis/38c3b817-7e3a-4002-9a6a-c6114def4fc5/dfb7d40b-d8cf-4082-a9a4-1638eb67d9a7/20220721T231008Z.vhd' Jul 25 19:10:42 xo-pool-xen xo-server[371693]: } Jul 25 19:12:23 xo-pool-xen xo-server[371693]: 2022-07-25T23:12:23.782Z xo:backups:mergeWorker INFO bye :-) -

@lawrencesystems thank you for the file, I will dive in

-

@lawrencesystems Hi,

Do you have any idea why the renaming failed due to lack of permission? Is there a concurrent access to this file or a specific configuration of the remote ?

-

Not that I can think of, there are several other backup jobs all going to this same remote, some full, some delta & some are metadata but none of them seem to be having an issues. I only have this one instance pointed to this remote with nothing else mounting it. The remote is a TrueNAS Core 13 server, but I have not found any errors coming from TrueNAS that would give me a clue, but I can try turning up the SAMBA log level and see if that helps.

-

@lawrencesystems Yes, it would be great if we could find the root cause of this EACCESS .

in the mean time we hardened our code for the next release (today), fixing error reporting from a merge worker, and stopping the merge if the rename fails

-

@florent

I am still sorting out the permissions issues trying to figure why they occur inconsistently which may be a TrueNAS bug, I might setup another destination such as a Synology as a test. Also searching for "Error: EACCES: permission denied rename samba" is showing a lot of results, not sure if they are helpful yet.My bigger concern is not knowing there was a problem because the delta job reported success which sounds like you are going to address in this next update.

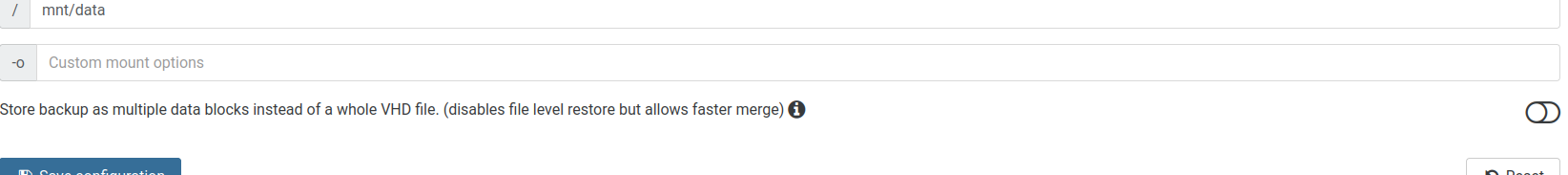

Can an existing remote with deltas be changed to the new "Store backup as multiple data blocks" option? I am curious if the issue would persist in that mode.

-

@lawrencesystems yes the logs weren't sent back to the main process and it doesn't help the troubleshooting.

that is fixed in today's release ( thanks to @julien-f )activating and deactivating the directory mode is on the remote settings page :

It will force a full backup and after that it will continue the delta chain.

I would like if you didn't change it on the problematic remote/job/vm , since I'm eager to fix the cause and couldn't reproduce it in local

-

@florent

I updated to xo-server 5.100.0 and xo-web 5.101.0 and the issue has changed a bit with this version.I created a new delta backup job to that same remote. It does the first backup as full and the files are there. The next run does the delta, transfers, backup job says success, I do get the Error: EACCES: permission denied, rename in the XO syslog and still no denied error in TrueNAS, then all the files in that directory are gone. When the job runs again it does not see any deltas so does a full and the file for the full is there again.

I am going to setup another share on a different device to see if this is just something weird with SMB in TrueNAS and I might try NFS as well. We have a few other remotes so I have a few scenarios to test.

-

Good news, so far I am unable to reproduce the issue on the Synology but that still does not really explain why TrueNAS does not have a failure error on rename in the samba log or why that Error: EACCES: permission denied, rename does not cause XO to report that there is an issue.

Now that I have job that can quickly reproduce the issue I am going to dig deeper into what parameters might need to be passed to TrueNAS on the remote settings or if a setting in TrueNAS needs to be changed from default to make the work. Still odd that I can only make this happen with Delta backups and not full.

-

Another update: it does happen on Synology via a SMB share, it just does not happen as often. Going to setup a test with NFS and with the new "Store backup as multiple data blocks" option.

-

Sounds like a client side error if it happens with two different remotes on different systems, which probably explains why there’s nothing in server side logs.

Which OS is XO running? Could this be related: https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=949394

Could you try

mvon smb share without XO involved to verify it’s not related? Sure it should report other than success if such renaming fails. -

That would be indeed interesting to see if it happens with NFS or not

-

@ronivay Good point, I am running Debian Bookworm but I could rebuild it on Ubuntu and see if that changes anything. I have tried the mv command but I am unable to reproduce the error that way. The inconsistency of the issue is what is making this harder to solve because it works most of the time.

-

@lawrencesystems only the delta backup make rename ( when merging older backup together)

I am also very interested of your findings, and by anything need to be done on XO side

-

I set it up on NFS and had it running 4 time per hour for the last couple days and there has been no rename error, but I do have a new message. On Jul 31, 2022 at 03:00:00 PM about 25 hrs after the job started I get "incorrect backup size in metadata." and this message is in every backup since then as the job is still running. The backup job still does report success and I did a test restore of the delta and they seem to restore just fine. This issue seems to only occur when using SMB. The last test was thinking of doing is changing the job to "Delete First" as I think that would skip the rename process, but I am not sure.

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1659186900001", "jobId": "bfbc352f-faef-437c-9de3-335a7a043aef", "jobName": "Blumira", "message": "backup", "scheduleId": "554d14f2-3613-407d-bdc1-85f9e64e3b3f", "start": 1659186900001, "status": "success", "infos": [ { "data": { "vms": [ "a66859dd-45e8-fae5-8830-ffd918780375" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "a66859dd-45e8-fae5-8830-ffd918780375" }, "id": "1659186901009", "message": "backup VM", "start": 1659186901009, "status": "success", "tasks": [ { "id": "1659186902384", "message": "clean-vm", "start": 1659186902384, "status": "success", "warnings": [ { "data": { "path": "/xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/20220730T111523Z.json", "actual": 109490688, "expected": 43619237376 }, "message": "incorrect backup size in metadata" } ], "end": 1659186902488, "result": { "merge": false } }, { "id": "1659186902662", "message": "snapshot", "start": 1659186902662, "status": "success", "end": 1659186905027, "result": "76c7bb45-f70e-8009-5581-faaf2e2d1eea" }, { "data": { "id": "f1318c7a-5691-409e-baa2-2535f8e8a458", "isFull": false, "type": "remote" }, "id": "1659186905028", "message": "export", "start": 1659186905028, "status": "success", "tasks": [ { "id": "1659186905057", "message": "transfer", "start": 1659186905057, "status": "success", "end": 1659186909884, "result": { "size": 117881344 } }, { "id": "1659186910269", "message": "clean-vm", "start": 1659186910269, "status": "success", "end": 1659186910336, "result": { "merge": true } } ], "end": 1659186910369 } ], "end": 1659186910369 } ], "end": 1659186910370 } -

@lawrencesystems this is a known bug, the PR is nearly finalized

https://github.com/vatesfr/xen-orchestra/pull/6331, it will be merged soon -

So we are back on a potential weird behaviour in SMB mount from Debian itself

-

Yes, beside the known bug listed above the NFS mount never had an issue and I had it running four backups per hour for a few days. The Error: EACCES: permission denied, rename so far it seems to be exclusively an error that occurs on when using an SMB mount (I have not tested S3 yet). I had talked to @tekwendell who had some suggestions such as making sure server multi channel support = no was set but the error still occurs. The error does occur on both Synology and TrueNAS SMB shares.

Below are some logs of the event happening with logs from both XO & TrueNAS (trinity in the logs). There is not really any errors that I can find on the TrueNAS side as it keep reporting that the files were open and closed with status OK. Because this error does not occur at every run it's not making it easy to troubleshoot.

trinity 1 2022-08-02T02:00:11.976688-04:00 trinity.local smbd 85507 - - xcpng opened file xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/vdis/bfbc352f-faef-437c-9de3-335a7a043aef/52506089-c186-4f0f-93ea-d7649fa7107b/20220802T040005Z.vhd read=Yes write=Yes (numopen=10) trinity 1 2022-08-02T02:00:11.959478-04:00 trinity.local smbd 85507 - - smbd_dirptr_get_entry mask=[*] found xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/vdis/bfbc352f-faef-437c-9de3-335a7a043aef/52506089-c186-4f0f-93ea-d7649fa7107b/20220802T040005Z.vhd fname=20220802T040005Z.vhd (20220802T040005Z.vhd) xo-pool-xen xo-server[875460]: path: '/run/xo-server/mounts/b0323f4d-1828-4ad1-b9bd-550f38ff6cfa/xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/vdis/bfbc352f-faef-437c-9de3-335a7a043aef/52506089-c186-4f0f-93ea-d7649fa7107b/20220802T040005Z.vhd', xo-pool-xen xo-server[875460]: error: [Error: EACCES: permission denied, rename '/run/xo-server/mounts/b0323f4d-1828-4ad1-b9bd-550f38ff6cfa/xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/vdis/bfbc352f-faef-437c-9de3-335a7a043aef/52506089-c186-4f0f-93ea-d7649fa7107b/20220802T040005Z.vhd' -> '/run/xo-server/mounts/b0323f4d-1828-4ad1-b9bd-550f38ff6cfa/xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/vdis/bfbc352f-faef-437c-9de3-335a7a043aef/52506089-c186-4f0f-93ea-d7649fa7107b/20220802T041522Z.vhd'] { trinity 1 2022-08-02T02:00:11.933092-04:00 trinity.local smbd 85507 - - xcpng closed file xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/vdis/bfbc352f-faef-437c-9de3-335a7a043aef/52506089-c186-4f0f-93ea-d7649fa7107b/20220802T040005Z.vhd (numopen=2) NT_STATUS_OK trinity 1 2022-08-02T02:00:11.936665-04:00 trinity.local smbd 85507 - - xcpng closed file xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/vdis/bfbc352f-faef-437c-9de3-335a7a043aef/52506089-c186-4f0f-93ea-d7649fa7107b/20220802T040005Z.vhd (numopen=0) NT_STATUS_OK trinity 1 2022-08-02T02:00:11.932835-04:00 trinity.local smbd 85507 - - xcpng opened file xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/vdis/bfbc352f-faef-437c-9de3-335a7a043aef/52506089-c186-4f0f-93ea-d7649fa7107b/20220802T040005Z.vhd read=No write=No (numopen=4) trinity 1 2022-08-02T02:00:11.932929-04:00 trinity.local smbd 85507 - - smbd_do_setfilepathinfo: xo-vm-backups/a66859dd-45e8-fae5-8830-ffd918780375/vdis/bfbc352f-faef-437c-9de3-335a7a043aef/52506089-c186-4f0f-93ea-d7649fa7107b/20220802T040005Z.vhd (fnum 1820097187) info_level=65290 totdata=322