Epyc VM to VM networking slow

-

Heya!

Just chiming in that we (WDMAB) are keeping tabs on this thread as well as on our ongoing support ticket with you guys.

Saw our result up on the list.

If we can do ANYTHING further to assist, please do tell us. We are available 24/7 to solve this issue, since it is heavily impacting our new production deployment.

Regards,

Mathias W.

-

@bleader I've been investigating this issue on my own system and came across this discussion. I know this is a somewhat old thread, so I hope it's OK to contribute more data here!

Host:

- CPU: EPYC 7302p

- Number of sockets: 1

- CPU pinning: no

- XCP-NG version: 8.3 beta 2, Xen 4.17 (everything current as of the time of writing)

- Output of xl info -n:

    host : xcp-ng
    release : 4.19.0+1
    version : #1 SMP Wed Jan 24 17:19:11 CET 2024
    machine : x86_64
    nr_cpus : 32
    max_cpu_id : 31
    nr_nodes : 1
    cores_per_socket : 16
    threads_per_core : 2
    cpu_mhz : 3000.001
    hw_caps : 178bf3ff:7ed8320b:2e500800:244037ff:0000000f:219c91a9:00400004:00000780
    virt_caps : pv hvm hvm_directio pv_directio hap gnttab-v1 gnttab-v2
    total_memory : 130931
    free_memory : 24740
    sharing_freed_memory : 0
    sharing_used_memory : 0
    outstanding_claims : 0
    free_cpus : 0
    cpu_topology :
    cpu: core socket node
      0: 0 0 0
      1: 0 0 0
      2: 1 0 0
      3: 1 0 0
      4: 4 0 0
      5: 4 0 0
      6: 5 0 0
      7: 5 0 0
      8: 8 0 0
      9: 8 0 0
     10: 9 0 0
     11: 9 0 0
     12: 12 0 0
     13: 12 0 0
     14: 13 0 0
     15: 13 0 0
     16: 16 0 0
     17: 16 0 0
     18: 17 0 0
     19: 17 0 0
     20: 20 0 0
     21: 20 0 0
     22: 21 0 0
     23: 21 0 0
     24: 24 0 0
     25: 24 0 0
     26: 25 0 0
     27: 25 0 0
     28: 28 0 0
     29: 28 0 0
     30: 29 0 0
     31: 29 0 0
    device topology :
    device node
    No device topology data available
    numa_info :
    node: memsize memfree distances
      0: 132338 24740 10
    xen_major : 4
    xen_minor : 17
    xen_extra : .3-3
    xen_version : 4.17.3-3
    xen_caps : xen-3.0-x86_64 hvm-3.0-x86_32 hvm-3.0-x86_32p hvm-3.0-x86_64
    xen_scheduler : credit
    xen_pagesize : 4096
    platform_params : virt_start=0xffff800000000000
    xen_changeset : $Format:%H$, pq ???
    xen_commandline : dom0_mem=7568M,max:7568M watchdog ucode=scan dom0_max_vcpus=1-16 crashkernel=256M,below=4G console=vga vga=mode-0x0311
    cc_compiler : gcc (GCC) 11.2.1 20210728 (Red Hat 11.2.1-1)
    cc_compile_by : mockbuild
    cc_compile_domain : [unknown]
    cc_compile_date : Wed Feb 28 10:12:19 CET 2024
    build_id : 9a011a28e29a21a7643376b36aec959253587d42
    xend_config_format : 4

Test set 1:

Server and client were both Debian 12 (Linux 6.1.0-18-amd64 #1 SMP PREEMPT_DYNAMIC Debian 6.1.76-1 (2024-02-01) x86_64) with 4 cores.

VM to VM (1 thread):

    iperf -c 192.168.1.66 -t 60
    ------------------------------------------------------------
    Client connecting to 192.168.1.66, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.1.69 port 55530 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/551)
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.0000-60.0213 sec 38.2 GBytes 5.47 Gbits/sec

xentop: 100 / 150 / 250

VM to VM (4 threads):

    iperf -c 192.168.1.66 -t 60 -P4
    ------------------------------------------------------------
    Client connecting to 192.168.1.66, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 2] local 192.168.1.69 port 35702 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/531)
    [ 4] local 192.168.1.69 port 35708 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/576)
    [ 1] local 192.168.1.69 port 35714 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/458)
    [ 3] local 192.168.1.69 port 35692 connected with 192.168.1.66 port 5001 (icwnd/mss/irtt=14/1448/744)
    [ ID] Interval Transfer Bandwidth
    [ 2] 0.0000-60.0141 sec 12.4 GBytes 1.77 Gbits/sec
    [ 1] 0.0000-60.0129 sec 13.9 GBytes 1.99 Gbits/sec
    [ 3] 0.0000-60.0141 sec 14.5 GBytes 2.07 Gbits/sec
    [ 4] 0.0000-60.0301 sec 12.2 GBytes 1.75 Gbits/sec
    [SUM] 0.0000-60.0071 sec 53.0 GBytes 7.58 Gbits/sec

xentop: 165 / 200 / 380

Host to VM (1 thread):

    iperf -c 192.168.1.66 -t 60
    ------------------------------------------------------------
    Client connecting to 192.168.1.66, TCP port 5001
    TCP window size: 297 KByte (default)
    ------------------------------------------------------------
    [ 3] local 192.168.1.1 port 37804 connected with 192.168.1.66 port 5001
    [ ID] Interval Transfer Bandwidth
    [ 3] 0.0-60.0 sec 6.58 GBytes 942 Mbits/sec

xentop: N/A / 135 / 145

Host to VM (4 threads):

    iperf -c 192.168.1.66 -t 60 -P4
    ------------------------------------------------------------
    Client connecting to 192.168.1.66, TCP port 5001
    TCP window size: 112 KByte (default)
    ------------------------------------------------------------
    [ 5] local 192.168.1.1 port 37812 connected with 192.168.1.66 port 5001
    [ 3] local 192.168.1.1 port 37808 connected with 192.168.1.66 port 5001
    [ 6] local 192.168.1.1 port 37814 connected with 192.168.1.66 port 5001
    [ 4] local 192.168.1.1 port 37810 connected with 192.168.1.66 port 5001
    [ ID] Interval Transfer Bandwidth
    [ 3] 0.0-60.0 sec 1.63 GBytes 233 Mbits/sec
    [ 6] 0.0-60.0 sec 2.08 GBytes 298 Mbits/sec
    [ 4] 0.0-60.0 sec 1.07 GBytes 154 Mbits/sec
    [ 5] 0.0-60.0 sec 1.80 GBytes 257 Mbits/sec
    [SUM] 0.0-60.0 sec 6.58 GBytes 942 Mbits/sec

xentop: N/A / 155 / 155

Test set 2:

Server:

FreeBSD 14 (FreeBSD 14.0-RELEASE (GENERIC) #0 releng/14.0-n265380-f9716eee8ab4: Fri Nov 10 05:57:23 UTC 2023) with 4 cores.

Client: Debian 12 (Linux 6.1.0-18-amd64 #1 SMP PREEMPT_DYNAMIC Debian 6.1.76-1 (2024-02-01) x86_64) with 4 cores.

VM to VM (1 thread):

    iperf -c 192.168.1.64 -t 60
    ------------------------------------------------------------
    Client connecting to 192.168.1.64, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 1] local 192.168.1.69 port 38572 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/905)
    [ ID] Interval Transfer Bandwidth
    [ 1] 0.0000-60.0089 sec 21.3 GBytes 3.04 Gbits/sec

xentop: 125 / 355 / 325

VM to VM (4 threads):

    iperf -c 192.168.1.64 -t 60 -P4
    ------------------------------------------------------------
    Client connecting to 192.168.1.64, TCP port 5001
    TCP window size: 16.0 KByte (default)
    ------------------------------------------------------------
    [ 3] local 192.168.1.69 port 50068 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/753)
    [ 1] local 192.168.1.69 port 50078 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/513)
    [ 4] local 192.168.1.69 port 50088 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/411)
    [ 2] local 192.168.1.69 port 50070 connected with 192.168.1.64 port 5001 (icwnd/mss/irtt=14/1448/676)
    [ ID] Interval Transfer Bandwidth
    [ 4] 0.0000-60.0299 sec 9.48 GBytes 1.36 Gbits/sec
    [ 1] 0.0000-60.0299 sec 6.56 GBytes 938 Mbits/sec
    [ 3] 0.0000-60.0301 sec 11.2 GBytes 1.60 Gbits/sec
    [ 2] 0.0000-60.0293 sec 6.61 GBytes 947 Mbits/sec
    [SUM] 0.0000-60.0146 sec 33.8 GBytes 4.84 Gbits/sec

xentop: 220 / 400 / 730

Host to VM (1 thread):

    iperf -c 192.168.1.64 -t 60
    ------------------------------------------------------------
    Client connecting to 192.168.1.64, TCP port 5001
    TCP window size: 212 KByte (default)
    ------------------------------------------------------------
    [ 3] local 192.168.1.1 port 58464 connected with 192.168.1.64 port 5001
    [ ID] Interval Transfer Bandwidth
    [ 3] 0.0-60.0 sec 6.58 GBytes 941 Mbits/sec

xentop: N/A / 295 / 205

Host to VM (4 threads):

    iperf -c 192.168.1.64 -t 60 -P4
    ------------------------------------------------------------
    Client connecting to 192.168.1.64, TCP port 5001
    TCP window size: 130 KByte (default)
    ------------------------------------------------------------
    [ 5] local 192.168.1.1 port 58470 connected with 192.168.1.64 port 5001
    [ 3] local 192.168.1.1 port 58468 connected with 192.168.1.64 port 5001
    [ 4] local 192.168.1.1 port 58472 connected with 192.168.1.64 port 5001
    [ 6] local 192.168.1.1 port 58474 connected with 192.168.1.64 port 5001
    [ ID] Interval Transfer Bandwidth
    [ 5] 0.0-60.0 sec 1.73 GBytes 247 Mbits/sec
    [ 3] 0.0-60.0 sec 1.56 GBytes 224 Mbits/sec
    [ 4] 0.0-60.0 sec 1.73 GBytes 247 Mbits/sec
    [ 6] 0.0-60.0 sec 1.56 GBytes 224 Mbits/sec
    [SUM] 0.0-60.0 sec 6.58 GBytes 942 Mbits/sec

xentop: N/A / 280 / 205

Conclusion:

No special tuning on any of the VMs, just a fresh install from the netboot ISO for each respective OS.

I also don't fully understand why my host seems to be limited to 1 Gbit/s. The management interface is 1 Gbit/s, but that shouldn't matter, should it? The other physical NIC is 10 Gbit/s SFP+, just for the sake of completeness.
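The host-to-VM runs in both test sets report 941-942 Mbits/sec whether 1 or 4 streams are used, which lines up with the usual TCP goodput ceiling of a single 1 Gbit/s Ethernet hop. A minimal sketch of that arithmetic, assuming iperf2 prints transfer in binary units (1 GByte = 2^30 bytes) and bandwidth in decimal units, standard Ethernet framing, and the same MSS of 1448 bytes seen in the VM-to-VM output (1500-byte MTU with TCP timestamps). The function names are hypothetical helpers for illustration only.

```python
# Sketch: do the host-to-VM results match a 1 GbE TCP ceiling?
# Assumptions (not stated in the thread): iperf2 prints transfer in binary
# units (1 GByte = 2**30 bytes) and bandwidth in decimal units (1 Mbit = 10**6 bits);
# standard Ethernet framing; IPv4 + TCP with the 12-byte timestamp option.

LINK_BPS = 1_000_000_000  # 1 GbE line rate in bits per second

MTU = 1500
IPV4_HDR, TCP_HDR, TCP_TS_OPT = 20, 20, 12
MSS = MTU - IPV4_HDR - TCP_HDR - TCP_TS_OPT  # 1448, same as "mss=1448" in the output

# Bytes one full-size frame occupies on the wire: MTU plus Ethernet header (14),
# FCS (4), preamble + start-of-frame delimiter (8) and inter-frame gap (12).
WIRE_BYTES = MTU + 14 + 4 + 8 + 12  # 1538


def goodput_ceiling_mbps(link_bps: int = LINK_BPS) -> float:
    """Highest TCP payload rate in Mbit/s when every segment is full-size."""
    return link_bps * MSS / WIRE_BYTES / 1e6


def iperf2_rate_mbps(transfer_gbytes: float, seconds: float) -> float:
    """Turn an iperf2 'GBytes' transfer over 'seconds' into Mbit/s."""
    return transfer_gbytes * 2**30 * 8 / seconds / 1e6


if __name__ == "__main__":
    print(f"1 GbE TCP goodput ceiling: {goodput_ceiling_mbps():7.1f} Mbit/s")
    # Host to VM, test set 1: 6.58 GBytes in 60.0 s (same for 1 and 4 threads)
    print(f"host to VM:                {iperf2_rate_mbps(6.58, 60.0):7.1f} Mbit/s")
    # VM to VM, test set 1, 1 thread: 38.2 GBytes in 60.0213 s
    print(f"VM to VM (1 thread):       {iperf2_rate_mbps(38.2, 60.0213):7.1f} Mbit/s")
```

Under these assumptions the ceiling comes out at about 941.5 Mbit/s and 6.58 GBytes in 60 s at about 942.0 Mbit/s; the transfer figure is rounded to three digits, so the small overshoot is just rounding. The VM-to-VM run converts back to the reported 5.47 Gbits/sec, which is a sanity check on the unit assumption. The arithmetic only says the host-to-VM traffic sits right at what one 1 Gbit/s hop can carry; it cannot tell which link on the path imposes that limit.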

Please let me know if there's anything at all that I can do to help with this!

-

FYI, we are discussing with AMD and another external company to find the culprit; active work is in the pipeline.

-

@timewasted Thanks for sharing, as long as we haven't found a solution, it is always good to have more feedback, so thanks for that.

FreeBSD uses the same network driver principle, but it seems to have lower performance in general, not only on EPYC systems. That could be another investigation for later.

I am indeed surprised by your VM/host results; I generally get much better performance there in my tests. I agree the management NIC speed should not impact it at all… You said no tuning, so I guess no pinning or anything, and I don't see an explanation for it right now.

-

@bleader I stumbled upon this thread and this issue kept me wondering, so I did a quick test on our systems:

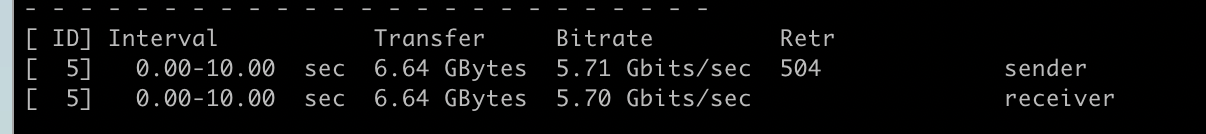

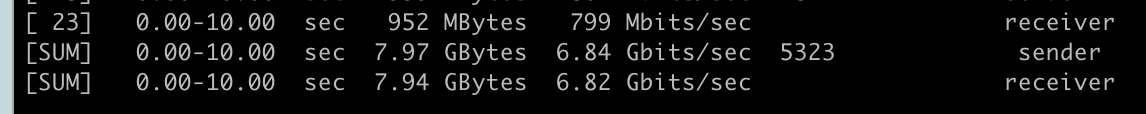

Running iperf3 on our HPs with AMD EPYC 7543P CPUs, Debian 12 VM to Debian 12 VM, I get:

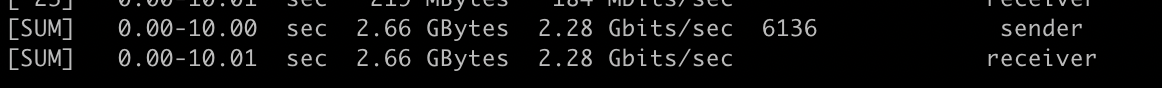

iperf3 -c 192.168.1.19 -P 10

iperf3 -c 192.168.1.19

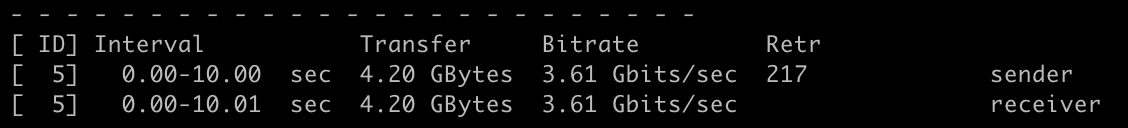

The same test on an HP with an Intel Xeon E5-2667:

iperf3 -c 192.168.1.113 -P 10

iperf3 -c 192.168.1.113

FREAKY!

It doesn't affect us because we don't have inter-VM traffic to speak of.

-

@manilx thanks for the additional results. And yes, it is a pretty big issue, but even with multiple people looking at it or trying to help out, we have not been able to pinpoint the root cause yet.

-

This is over 4 months old, and is affecting a LOT of my customers.

It is a BIG problem for my company. Is there anything we can do to help resolve this?

-

@nicols Hello, you are not alone in this. We have delayed the deployment of our core ISP routing as virtual routers due to this.

We have an XOA premium and xcp-ng enterprise subscription and an open case on this.

I cannot go into detail about what is being said in there, but I can say that the approach @olivierlambert and Vates have taken is very good, and they have my full confidence on this one.

@manilx I can say this affects all VM traffic: we have tested from a VM out to the switch, over to a second hardware host, and into a VM there, and we see the limit there as well. The ceiling is just twice as high as inside the same hardware box.

Either way, Vates is VERY well aware of how serious this is and is making good efforts to fix it.

-

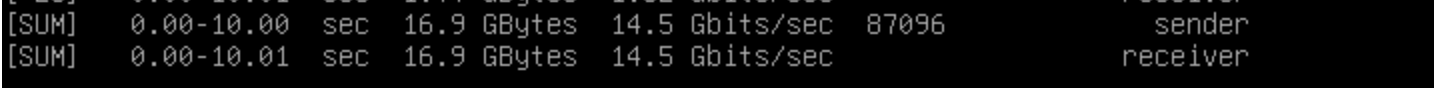

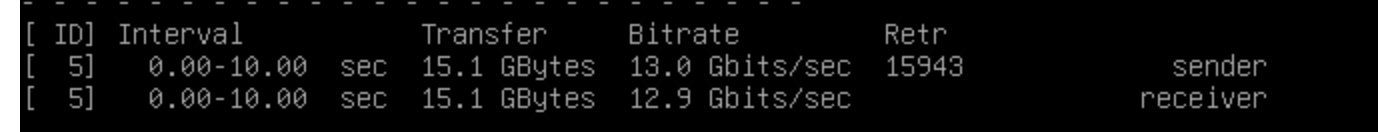

@Seneram True. I just tested 2 Debian 12 VMs sitting on 2 EPYC hosts:

10 parallel tests:

One:

Bummer! Worse than I thought. But Vates will certainly get to the bottom of this.

-

Just for additional insight: have tests been made with different BIOS/firmware settings? EPYC firmware in particular has a lot of settings that trade internal latency against throughput for different workloads. I recently deployed an EPYC 2x48c/96t system for simulation software, and the firmware changes could make a 20% difference in rendering time for that application. I'm not saying it is the root cause, but it could possibly improve the situation here. My guess is that much of the issue comes from poor latency and erroneous scheduling adding further latency.

-

@Forza We have tried all the settings available to tweak on our hardware. We have a full big twin chassis dedicated to Vates for testing this issue, and roughly the first month was spent going over settings together with Vates and making sure everything was tweaked properly.

AMD is involved themselves, and if anyone knows AMD firmware settings and tweaking, it would be AMD.

In all seriousness, it is a very good suggestion, but it has been looked into and unfortunately makes minimal or no difference.

-

@Seneram thanks, I was guessing that was the case. I hope the issue is resolved soon.

-

Please note that we are actively investigating it, and believe me, it's costly (25k€ already invested to track this down). So as you can see, it's a priority and something we are actively working on. AMD is also aware of it.

-

@bleader I had some time to test this on an AMD system (HP T740 Thin Client). Hopefully it helps a bit, even if it is not an EPYC CPU.

Host:

- CPU: AMD Ryzen Embedded V1756B

- Number of sockets: 1

- CPU pinning: no

- XCP-NG version: 8.3 beta 1 (updated with yum update as of today)

- Output of xl info -n:

    [22:05 hpt740 ~]# xl info -n
    host : hpt740
    release : 4.19.0+1
    version : #1 SMP Wed Jan 24 17:19:11 CET 2024
    machine : x86_64
    nr_cpus : 8
    max_cpu_id : 15
    nr_nodes : 1
    cores_per_socket : 4
    threads_per_core : 2
    cpu_mhz : 3244.038
    hw_caps : 178bf3ff:7ed8320b:2e500800:244033ff:0000000f:209c01a9:00000000:00000500
    virt_caps : pv hvm hvm_directio pv_directio hap shadow
    total_memory : 30636
    free_memory : 17610
    sharing_freed_memory : 0
    sharing_used_memory : 0
    outstanding_claims : 0
    free_cpus : 0
    cpu_topology :
    cpu: core socket node
      0: 0 0 0
      1: 0 0 0
      2: 1 0 0
      3: 1 0 0
      4: 2 0 0
      5: 2 0 0
      6: 3 0 0
      7: 3 0 0
    device topology :
    device node
    No device topology data available
    numa_info :
    node: memsize memfree distances
      0: 34803 17610 10
    xen_major : 4
    xen_minor : 13
    xen_extra : .5-10.58
    xen_version : 4.13.5-10.58
    xen_caps : xen-3.0-x86_64 hvm-3.0-x86_32 hvm-3.0-x86_32p hvm-3.0-x86_64
    xen_scheduler : credit
    xen_pagesize : 4096
    platform_params : virt_start=0xffff800000000000
    xen_changeset : 708e83f0e7d1, pq 8e58b4872724
    xen_commandline : watchdog ucode=scan dom0_max_vcpus=1-8 crashkernel=256M,below=4G console=vga vga=mode-0x0311 dom0_mem=4294967296B,max:4294967296B
    cc_compiler : gcc (GCC) 11.2.1 20210728 (Red Hat 11.2.1-1)
    cc_compile_by : mockbuild
    cc_compile_domain : [unknown]
    cc_compile_date : Thu Jan 25 10:20:16 CET 2024
    build_id : ae0904d024e04d4daf2ecdfddc37ea146f48d7e1
    xend_config_format : 4

Server and client VMs are both Debian 12 (Linux 6.1.0-18-amd64) with 4 cores and 4G RAM.

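xentop is client/server/dom0 (V2V = VM to VM, H2V = host to VM, 1T/4T = 1 or 4 threads).

| Test | xentop (client/server/dom0) | Throughput |
|---|---|---|
| V2V 1T | 90/140/210 | 5.1 Gbits/sec |
| V2V 4T | 150/220/260 | 8.1 Gbits/sec |
| H2V 1T | 0/170/210 | 10.2 Gbits/sec* |
| H2V 4T | 0/310/340 | 11.9 Gbits/sec* |

*: with some spread of CPU utilization and throughput.

Minimum of three runs per test scenario.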

-

We made tests on Ryzen CPUs and we couldn't really reproduce the problem: it seems to be EPYC specific.

-

@olivierlambert We did talk in DMs before; I told him any data is always welcome, especially as I didn't even know this range of CPUs.

-

@gskger It does seem quite a bit lower than the 5950X and the 7600 we tested, but:

- it is Zen 1, if I'm not mistaken

- in the 4 threads case, with 8 threads on the physical CPU, the VMs and dom0 are actually sharing resources

- for single thread I guess the generation and memory speed could explain the difference.

I would say that this confirms these Ryzen CPUs are not really impacted either.

Thanks for sharing, I'll update the table tomorrow.

-

@bleader you are correct, the V1756B is a low-power (45 W TDP) desktop CPU of the AMD Ryzen Embedded V1000 series, based on the Zen microarchitecture, with 4 cores and 8 threads. It operates at a base frequency of 3.25 GHz and a boost frequency of up to 3.6 GHz. The HP T740 thin client is a capable low-power, low-noise computer for running XCP-ng in a homelab, but it's not a real match for serious AMD desktop or server CPUs.

-

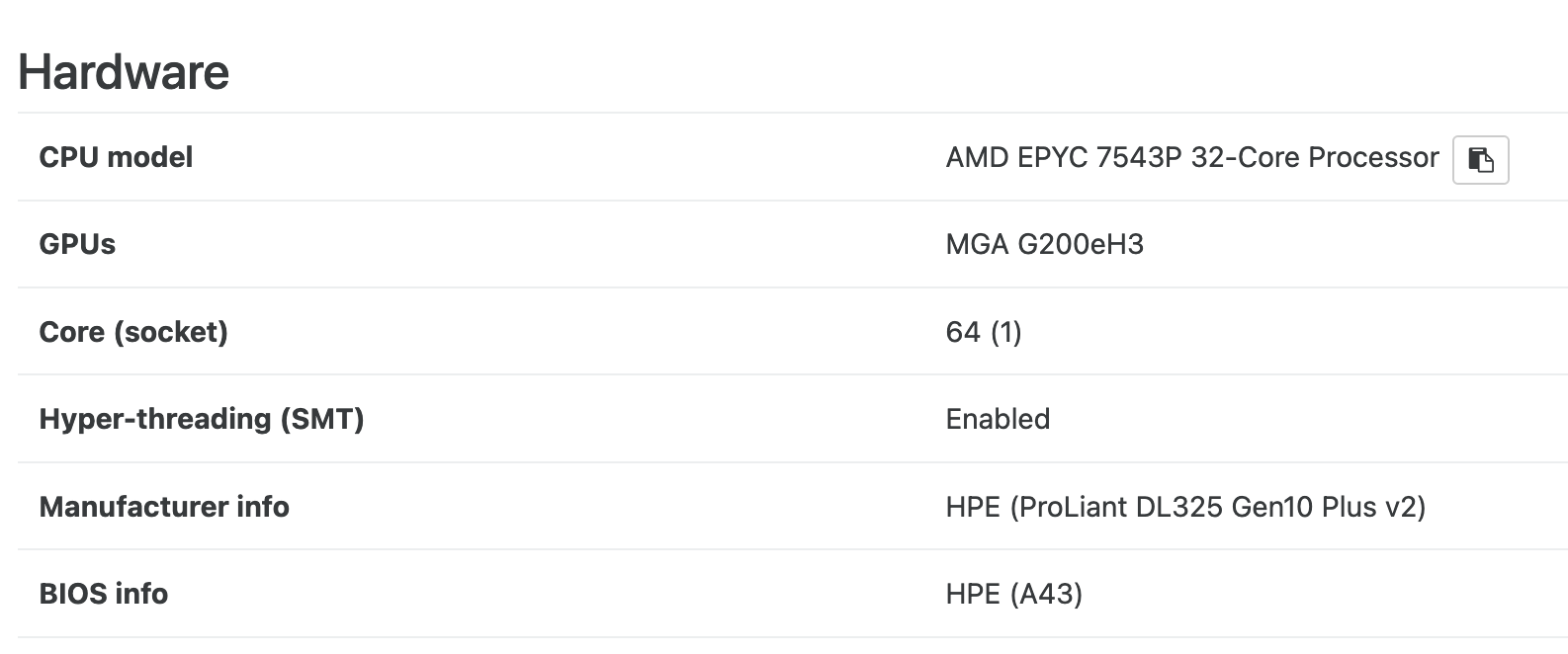

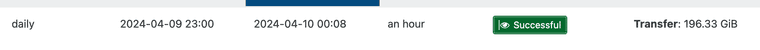

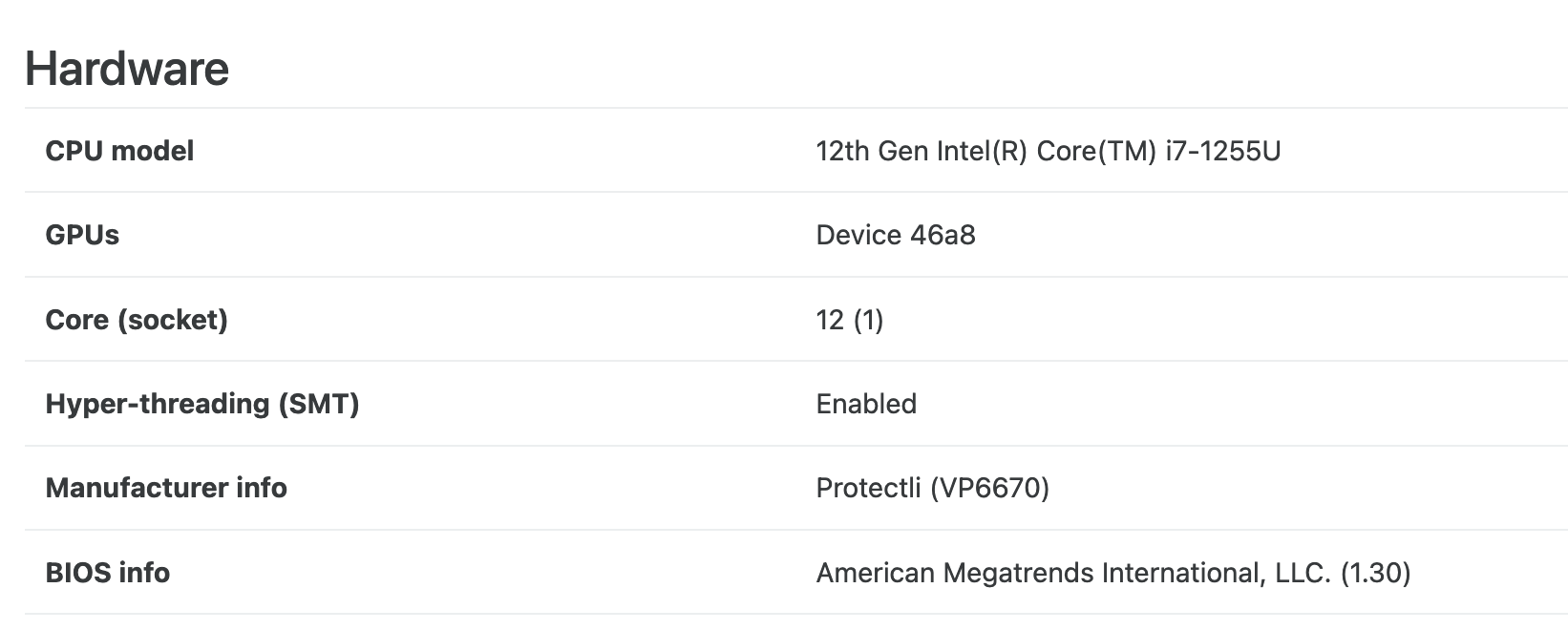

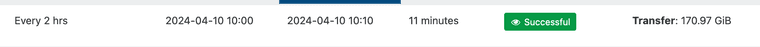

@gskger Returning to this:

On our business deployment, 2 HPE ProLiant DL325 Gen10 Plus v2 servers with AMD EPYC 7543P 32-core processors, connected via redundant 10G NICs and switched to 10G NAS units (QNAP, Synology) for storage and backups, we get backup speeds of 80-90 MiB/s at most with NBD.

On my homelab, with a Protectli mini PC also connected via 10G to a 10G QNAP, I get 250-300 MiB/s!!!

This has really been a problem for us since we started with XCP-ng a year ago. Slow backup/restore speeds are a hindrance to our backup strategy.

Now that I have switched my homelab from Proxmox (which I used for 3 years) to XCP-ng, I stumbled upon this speed difference, and it is incredible.

I wonder whether it is also related to this EPYC networking issue.
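To compare these backup speeds with the iperf numbers earlier in the thread, it helps to express them in Gbit/s. A quick conversion sketch, assuming "MiB/s" means 2^20 bytes per second and ignoring protocol overhead (so these are lower bounds on link usage). The helper names are hypothetical.

```python
# Sketch: express backup throughput (MiB/s) in Gbit/s and as a share of a 10G link.
# Assumptions: 1 MiB = 2**20 bytes; protocol overhead is ignored.

LINK_GBPS = 10.0  # nominal 10G link


def mib_s_to_gbit_s(mib_per_s: float) -> float:
    """Convert MiB/s to decimal Gbit/s."""
    return mib_per_s * 2**20 * 8 / 1e9


def describe(label: str, low: float, high: float) -> str:
    """Format a MiB/s range as Gbit/s plus the fraction of a 10G link it uses."""
    lo_g, hi_g = mib_s_to_gbit_s(low), mib_s_to_gbit_s(high)
    return (f"{label}: {lo_g:.2f}-{hi_g:.2f} Gbit/s "
            f"({lo_g / LINK_GBPS:.0%}-{hi_g / LINK_GBPS:.0%} of a {LINK_GBPS:.0f}G link)")


if __name__ == "__main__":
    print(describe("EPYC production backups", 80, 90))
    print(describe("Protectli homelab backups", 250, 300))
```

With these assumptions the production backups come to roughly 0.67-0.75 Gbit/s (7-8% of a 10G link), against roughly 2.10-2.52 Gbit/s (21-25%) in the homelab. Neither is close to saturating 10G, so the conversion alone does not show whether the EPYC networking issue is what limits the backups.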

P.S.: I have opened a ticket, BUT I wanted to share this here as well.

-

@manilx hi, I am working on the backup side, and that is a very interesting finding. I have some questions to rule out some hypotheses:

What storage do you use on both sides? iSCSI / NFS?

Is XO running on the master?