First SMAPIv3 driver is available in preview

-

@olivierlambert said in First SMAPIv3 driver is available in preview:

That's normal, there's no stat gathering with SMAPIv3. I'll update the blog post accordingly, I forgot about it

Oh, just for now, or will there never be stats?

It's a pretty important feature in an enterprise setup.

-

Obviously just for now

Observability is crucial

-

@olivierlambert Easy to install and set up on a dedicated host. Did some basic testing with VM creation, snapshots, (re-)importing/exporting, copying, removing and all worked.

The blog post on the SMAPIv3 preview states that it is not yet possible to use this SR type for live storage motion. But it seems that no storage migration is possible at this point (neither live, warm nor cold migration from or to the SMAPIv3 volume). Copying a VM from the SMAPIv3 to a local SMAPIv1 SR works and vice versa.

Looking forward to more capabilities of the SMAPIv3 implementation. Keep up the great work!

Edit: typos and some clarifications

-

I will add a clarification, because it's indeed normal that there's no storage motion at all for now

(but in the "green" callout, we explain copy is a first migration path)

edit: now the sentence is clearer with "you won't be able to use this SR type for live or offline storage motion".

-

From the blog post

Size: grow a VDI in size. It's fully thin provisioned!

Does this mean we finally have live/hot disk expansion?

-

Not right now, but likely in the future, yes

-

@olivierlambert said in First SMAPIv3 driver is available in preview:

right now, but likely in the future,

Are we going to eventually be able to have larger than 2TB volumes on EXT4 partitions? I'm not really set up to do ZFS and not really sure I want the headaches that come along with it. My PERC controllers have always been super reliable, so ext4 is fine with me as the FS type.

-

We will likely not use VHD anymore in SMAPIv3 (there's no point), so yes, if/when we decide to make an ext4 driver, it will store another file format (like maybe qcow2)

-

Created it, cloned some VMs into it, ran them, looks fine.

Hard to say anything about performance, etc. That would need some real production load (impossible) or comparative benchmarks (fio for example, but ZFS should be slower than basic LVM anyway, so what result is good?). Is there anything we need to test? provisioning unknown)

same for VM, probably >

no stat gathering with SMAPIv3.

-

Yeah, it's not going to display any stats for now, so you'll have to look at the numbers inside the VM.

-

The goal is to test the fact that it runs OK for a bit, so we are sure to not miss anything. Fio is your friend to benchmark in a VM, remember that it's still blktap behind, so if you want better performance numbers, do it with multiple VDIs at once.
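As a rough illustration of "multiple VDIs at once", the sketch below builds a single fio command line with one job per attached disk, using fio's `--name=global` section for shared options. The device paths (`/dev/xvdb`, `/dev/xvdc`), the job names and the option values are assumptions chosen for the example, not a recommended test profile. Note that writing to raw devices destroys whatever is on them.

```python
import shlex

# Options shared by every job. The values are illustrative assumptions.
GLOBAL_OPTS = {
    "ioengine": "libaio",
    "direct": "1",
    "rw": "write",
    "bs": "1M",
    "size": "4G",
    "iodepth": "64",
    "group_reporting": None,  # flag without a value
}


def build_fio_command(devices):
    """Return a fio argv with one job per device (hypothetical device paths)."""
    if not devices:
        raise ValueError("at least one device is required")
    argv = ["fio", "--name=global"]
    for key, value in GLOBAL_OPTS.items():
        argv.append(f"--{key}" if value is None else f"--{key}={value}")
    # Each --name after the global section starts a new job with its own target.
    for index, device in enumerate(devices):
        argv.append(f"--name=vdi{index}")
        argv.append(f"--filename={device}")
    return argv


if __name__ == "__main__":
    # Placeholders for two extra VDIs attached to the test VM.
    print(shlex.join(build_fio_command(["/dev/xvdb", "/dev/xvdc"])))
```

The function only assembles the command, so it can be reviewed before anything is run inside the VM.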

-

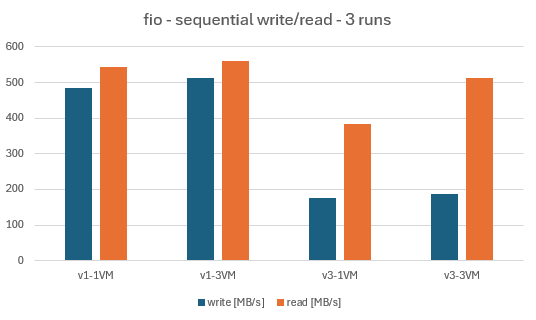

I tested SMAPIv1 on XCP 8.2.1 against SMAPIv3 on XCP 8.3b2 using the same host (an HP ProDesk 400 G6 with an i5-10500T CPU, 32GB RAM). A 1 TB Samsung 860 EVO SSD drive was used as the test SR, while XCP was booted from a 512 M.2 KIOXA NVMe drive. Fio (`fio-3.37`) was compiled from source on an up-to-date Debian 12 VM (2 vCPU, 4 GiB RAM, 32GiB drive) which was copied twice so that three identical VMs could run `fio` in parallel.

After an initial `fio` run to create the files, a script ran three sequential write and read tests (e.g. `fio --name=fio --ioengine=libaio --randrepeat=1 --direct=1 --fallocate=none --ramp_time=10 --size=4G --iodepth=64 --loops=50 --group_reporting --numjobs=1 --rw=write --bs=1M`). The script first ran on one VM, followed by a run on three VMs in parallel. IOPs and bandwidths were averaged.
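The averaging script itself is not shown, so the following is only a sketch of how such averaging could be done, assuming each run was saved with fio's `--output-format=json`. It reads the `iops` and `bw` (KiB/s) fields that fio reports per job and direction. The function names and the sample numbers are made up for illustration and are not measurements from this test.

```python
import json
from statistics import mean


def summarize(report, direction):
    """Sum IOPS and bandwidth (KiB/s) over all jobs in one fio JSON report."""
    jobs = report["jobs"]
    iops = sum(job[direction]["iops"] for job in jobs)
    bw_kib = sum(job[direction]["bw"] for job in jobs)
    return iops, bw_kib


def average_runs(reports, direction):
    """Average the per-report totals across several runs or VMs."""
    totals = [summarize(report, direction) for report in reports]
    return mean(t[0] for t in totals), mean(t[1] for t in totals)


if __name__ == "__main__":
    # Tiny hand-made reports standing in for real fio JSON files.
    samples = [
        json.loads('{"jobs": [{"write": {"iops": 400.0, "bw": 409600}}]}'),
        json.loads('{"jobs": [{"write": {"iops": 500.0, "bw": 512000}}]}'),
    ]
    iops, bw_kib = average_runs(samples, "write")
    print(f"avg IOPS: {iops:.1f}, avg bandwidth: {bw_kib / 1024:.1f} MiB/s")
```

For the three-VM case, summing the per-VM results instead of averaging them would give the aggregate throughput of the SR, which may be the more useful figure when comparing against a single-VM run.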

`v1-1VM` are the results for one VM on a SMAPIv1 SR (XCP 8.2.1) while `v3-3VM` are the results for three VMs in parallel on a SMAPIv3 SR (XCP 8.3b2).

While I'm not sure if this approach is really valid (e.g. the average load of the host went through the roof when three VMs performed `fio` in parallel), it does suggest that the bandwidth of SMAPIv3 is not yet on par with that of SMAPIv1. But I could be wrong, and this is an early preview of SMAPIv3. Looking forward to more performance results on SMAPIv3.

-

Hi,

I'm not sure I understand. What kind of SMAPIv1 SR did you compare with ZFS on v3?

-

Can you provide a link to the GitHub repo where we can find the source code of this SMAPIv3 driver?

-

-

@olivierlambert

I meant the source for this package: `xcp-ng-xapi-storage-volume-zfsvol`, so that we can see how this new driver is implemented

-

That's inside the repo I posted

-

Has anyone tried a backup using the new driver? I created a new test pool with one of my previous hosts and made SMAPIv3 ZFS storage. I can create a VM just fine, but when I try and add it to my existing backup job, it keeps erroring out with "stream has ended with not enough data (actual: 485, expected: 512)"

Is this expected?

-

You can only do full backup for now, not incremental.

-

@olivierlambert Since it's the first backup, it should be full, correct? Does Delta backup not work at all even if force full is enabled?