Three-node Networking for XOSTOR

-

@olivierlambert Is it possible to go switchless?

I have 3 hosts, each with four 25 Gb connections. I bonded the adapters that go to each host in pairs.

I have looked quite a bit for a setup and network guide to XOSTOR.

Do you have a link?

Thank you so much for your time

-

Question for @ronan-a

-

@T3CCH What you might be looking for: https://xcp-ng.org/docs/networking.html#full-mesh-network

-

I am experimenting with XCP-ng as a viable option to replace my company's 3-node clusters (2 main nodes + 1 witness node) at customer sites. The hardware we are using has two 10Gig NICs; the rest are 1Gig.

Since XOSTOR is based on LINSTOR, I first experimented with implementing a LINSTOR HA cluster on 3 nodes on Proxmox. Although a mesh network was an option, I wanted to explore other ways of implementing a storage network without a switch, since the mesh network is only 'community-supported' at the moment. I ended up doing the following:

- Only 2 nodes contribute to the storage pool. 3rd node is just there to maintain quorum for hypervisor and for LINSTOR.

- All 3 nodes are connected to each other via a 1Gig Ethernet switch. This is the management network for the cluster.

- 2 main nodes are directly connected to each other via a 10Gig link. This is the storage network. Note that the witness node is not connected to storage network.

- Create a loopback interface on witness node which has an IP in the storage network subnet.

- Enable IP forwarding (net.ipv4.ip_forward=1) on all 3 nodes.

- Add static routes to 2 main hosts like following:

ip route add <witness_node_storage_int_ip>/32 dev <main_host_mgmt_int_name>

- Add static route to witness node like following:

ip route add <main_node1_storage_int_ip>/32 dev <witness_host_mgmt_int_name>

ip route add <main_node2_storage_int_ip>/32 dev <witness_host_mgmt_int_name>

- After this all 3 nodes can talk to each other on storage subnet. LINSTOR traffic to and from witness node will use the management network. Since this traffic would not be much, it will not hamper other traffic on management network.
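For anyone who wants to review the routing steps before touching a host, they could be scripted roughly as follows. This is only a sketch of what is described above, not a validated XCP-ng procedure: the helper names are made up for illustration, any IPs and interface names passed in are placeholders, and `DRY_RUN` defaults to `1` so the commands are printed instead of executed. Note that a later reply in this thread advises against using `ip route` manually with XOSTOR.

```shell
#!/bin/sh
# Sketch of the static-route setup described above.
# Function names are hypothetical; IPs and interface names are supplied
# by the caller and are placeholders, not values from a real deployment.

DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it (needs root).
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Enable IPv4 forwarding (done on every node in the steps above).
enable_forwarding() {
    run sysctl -w net.ipv4.ip_forward=1
}

# Route a peer's storage IP (/32) through the local management interface.
# $1 = peer storage IP, $2 = local management interface name
add_storage_route() {
    run ip route add "$1/32" dev "$2"
}

# On a main node: reach the witness's storage IP over the management network.
# $1 = witness storage IP, $2 = management interface name
setup_main_node() {
    enable_forwarding
    add_storage_route "$1" "$2"
}

# On the witness: reach both main nodes' storage IPs over the management network.
# $1, $2 = main node storage IPs, $3 = management interface name
setup_witness_node() {
    enable_forwarding
    add_storage_route "$1" "$3"
    add_storage_route "$2" "$3"
}
```

With the functions loaded, `setup_witness_node 10.10.10.1 10.10.10.2 eth0` prints the three commands for the witness; setting `DRY_RUN=0` would run them for real. Routes added this way do not persist across reboots.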

Now I want to do a similar implementation in xcp-ng and XOSTOR. Proxmox was basically a learning ground to iron out issues and get a grasp on LINSTOR concepts. So now the questions are:

- Is the above method doable on xcp-ng?

- Is it advisable to do 3 node storage network without switches this way?

- Any issues with enterprise support if someone does this?

Thanks.

-

@ha_tu_su Hi, I come from a similar setup and did the following

- Create a pool with two physical nodes

- Add a third node, which is virtual in my case AND not running on one of the two physical nodes

- Create XOSTOR with replication count 2. The virtual node will be marked as diskless by default.

In XOSTOR the diskless node will be called "tie breaker". From my understanding, this is very similar to the witness in vSAN.

You can also go ahead and select a dedicated network for XOSTOR. This can be done after creation as well, but I'm not sure if this will work without a switch.

-

@456Q

Your setup makes sense to me, although I guess it would still have required some physical hardware to run the virtual witness. Correct?

I am pretty sure that a mesh network will work for a 3-node setup (at least it makes sense to me). It's the support part that I wanted an answer on, since we will be deploying at customer sites which require official support contracts.

I am planning to test the 'routing' method this week. If it works and will be supported by Vates then that is what we will go with.

Thanks.

-

@456Q

Also, when you use a replication count of 2, do you get redundant linstor-controllers which are managed by drbd-reactor?

I saw some posts with commands about drbd-reactor in the forum, so I am assuming it is used to manage redundant linstor-controllers.

When I experimented in Proxmox I used only one linstor-controller because getting drbd-reactor installed was tedious. I hope XOSTOR does this automatically.

-

@ha_tu_su

Ok, I have set up everything as I said I would. The only thing I wasn't able to set up was the loopback adapter on the witness node mentioned in step 4. I assigned the storage network IP to a spare physical interface instead. This doesn't change the logic of how everything should work together.

So far, I have created XOSTOR using disks from the 2 main nodes. I have enabled routing and added the necessary static routes on all hosts, enabled HA on the pool, created a test VM, and tested migration of that VM between the main nodes. I haven't yet tested HA by disconnecting the network or powering off hosts. I am planning to do this testing in the next 2 days.

@olivierlambert: Can you answer the question on the enterprise support for such topology? And do you see any technical pitfalls with this approach?

I admit I am fairly new to HA stuff related to virtualization, so any feedback from the community is appreciated, just to enlighten me.

Thanks.

-

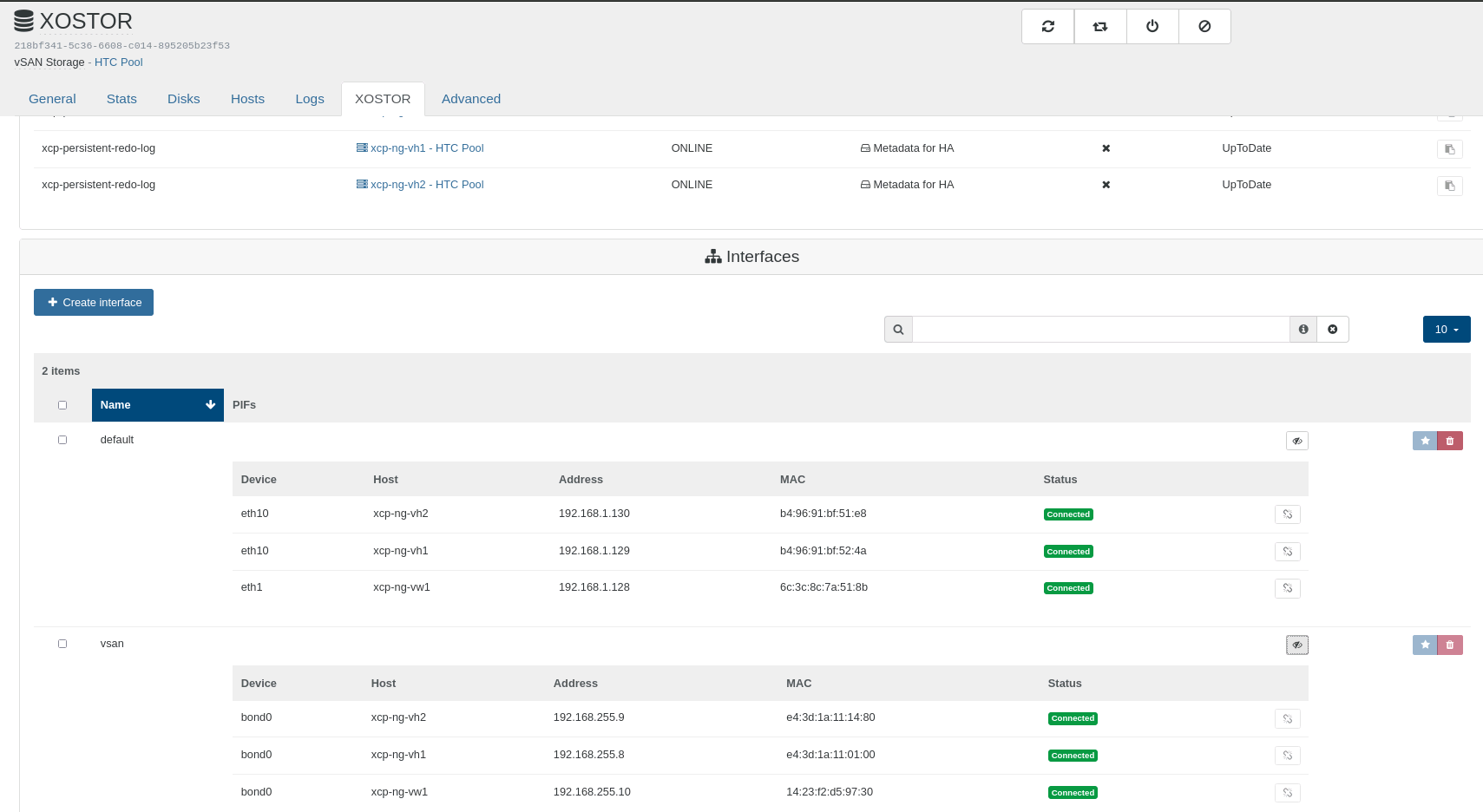

I have executed above steps and currently my XOSTOR network looks like this:

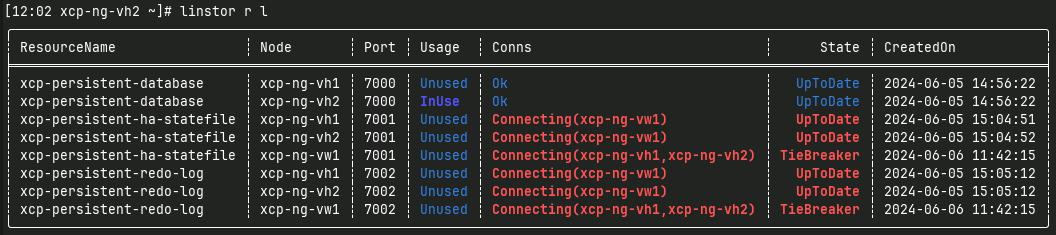

When I set 'vsan' as my preferred NIC, I get below output on linstor-controller node:

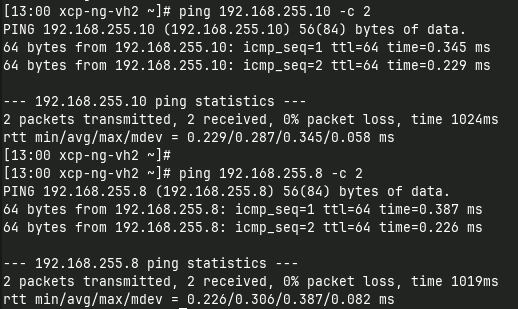

Connectivity between all 3 nodes is present:

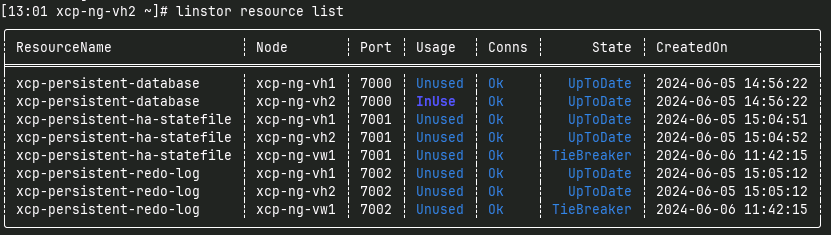

When I set 'default' as my preferred NIC, I get correct output on linstor-controller:

@ronan-a: Can you help out here?

Thanks.

-

@ha_tu_su

@olivierlambert @ronan-a: Any insight into this?

-

@ha_tu_su

Regarding your previous message, for a LINSTOR SR to be functional:

- Each node in the pool must have a PBD attached.

- A node does not need to have a local physical disk.

- ip route should not be used manually. LINSTOR has an API for using dedicated network interfaces.

- XOSTOR effectively supports configurations with hosts without disks, and quorum can still be used.
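As a rough illustration of the dedicated-interface approach, the LINSTOR client can register an additional interface on a node with `linstor node interface create` and show the registered interfaces with `linstor node interface list`. The sketch below wraps those two commands; the helper names are hypothetical, node names, interface names and IPs are placeholders chosen by the caller, and `DRY_RUN` defaults to `1` so nothing is sent to the controller. How XOSTOR itself applies the preferred NIC is not shown here.

```shell
#!/bin/sh
# Sketch: register a storage interface on a LINSTOR node, then list interfaces.
# Helper names are hypothetical; node names, interface names and IPs are
# placeholders supplied by the caller.

DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# $1 = node name, $2 = interface name, $3 = IP on the storage network
add_node_interface() {
    run linstor node interface create "$1" "$2" "$3"
}

# $1 = node name
list_node_interfaces() {
    run linstor node interface list "$1"
}
```

For example, `add_node_interface node1 storage 10.10.10.1` followed by `list_node_interfaces node1` prints the two client commands to review; with `DRY_RUN=0` they would be run on a host where the `linstor` client can reach the controller.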

Could you list the interfaces using linstor node interface list <NODE>?

-

@ronan-a

Unfortunately, I am in the process of reinstalling XCP-ng on the nodes to start from scratch. I think I tried too many things and somewhere forgot to undo the ‘wrong’ configs, so I can’t run the command now. I did run this command earlier, when I posted all the screenshots. The output had 2 entries (from memory):

1. StltCon <mgmt_ip> 3366 Plain

2. <storage_nw_ip> 3366 Plain

I will repost with the required data when I get everything configured again.

Thanks.