What should I expect from VM migration performance from Xen-ng?

-

Hi,

I think it's related to how the data is transferred; I wouldn't expect much more than that.

-

@henri9813 said in What should I expect from VM migration performance from Xen-ng?:

Hello,

So, does anyone know why I can't go over 145 MB/s, and how to increase the VDI transfer speed?

(I have 5 Gb/s between nodes.)

Thanks !

Are you migrating storage or just the VM?

How can you have 5 Gb/s between the nodes? What kind of networking setup are you using?

-

Hello,

Thanks for your answer @nikade

I'm migrating both the storage and the VM.

I have a 25 Gb/s NIC, but there's a 5 Gb/s limit at the switch level.

But I found an explanation in another topic: this could be related to SMAPIv1.
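For scale, here is the arithmetic comparing the observed 145 MB/s with the 5 Gb/s switch limit, plus a rough transfer-time estimate. This is a sketch that assumes decimal units (1 MB = 10^6 bytes, 1 Gb = 10^9 bits) and ignores protocol overhead; the 100 GB VDI is a hypothetical size, not one from this thread.

```python
def mbytes_to_gbits(mb_per_s: float) -> float:
    """Convert megabytes/s to gigabits/s (decimal units, 8 bits per byte)."""
    return mb_per_s * 8 / 1000


def link_utilisation(mb_per_s: float, link_gbps: float) -> float:
    """Fraction of a link's nominal capacity consumed by a given byte rate."""
    return mbytes_to_gbits(mb_per_s) / link_gbps


def transfer_minutes(size_gb: float, mb_per_s: float) -> float:
    """Minutes needed to copy size_gb gigabytes at mb_per_s megabytes/s."""
    return size_gb * 1000 / mb_per_s / 60


observed = 145.0     # MB/s seen during the storage + VM migration
switch_limit = 5.0   # Gb/s limit at the switch level

print(f"{mbytes_to_gbits(observed):.2f} Gb/s")
print(f"{link_utilisation(observed, switch_limit):.0%} of the switch limit")
print(f"{transfer_minutes(100, observed):.1f} min for a hypothetical 100 GB VDI")
```

This prints 1.16 Gb/s, 23% of the switch limit, and about 11.5 minutes. At roughly a quarter of the link's capacity, the network itself doesn't look like the bottleneck.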

Best regards

-

Yeah, when migrating storage the speeds are pretty much the same no matter what NIC you have...

This is a known limitation and I hope it will be resolved soon.

-

I've spent a bunch of time trying to find some dark magic to make the VDI migration faster; so far, nothing. My VM (memory) migration is fast enough that I'm not concerned about it right now, and I don't have any testing to show for it.

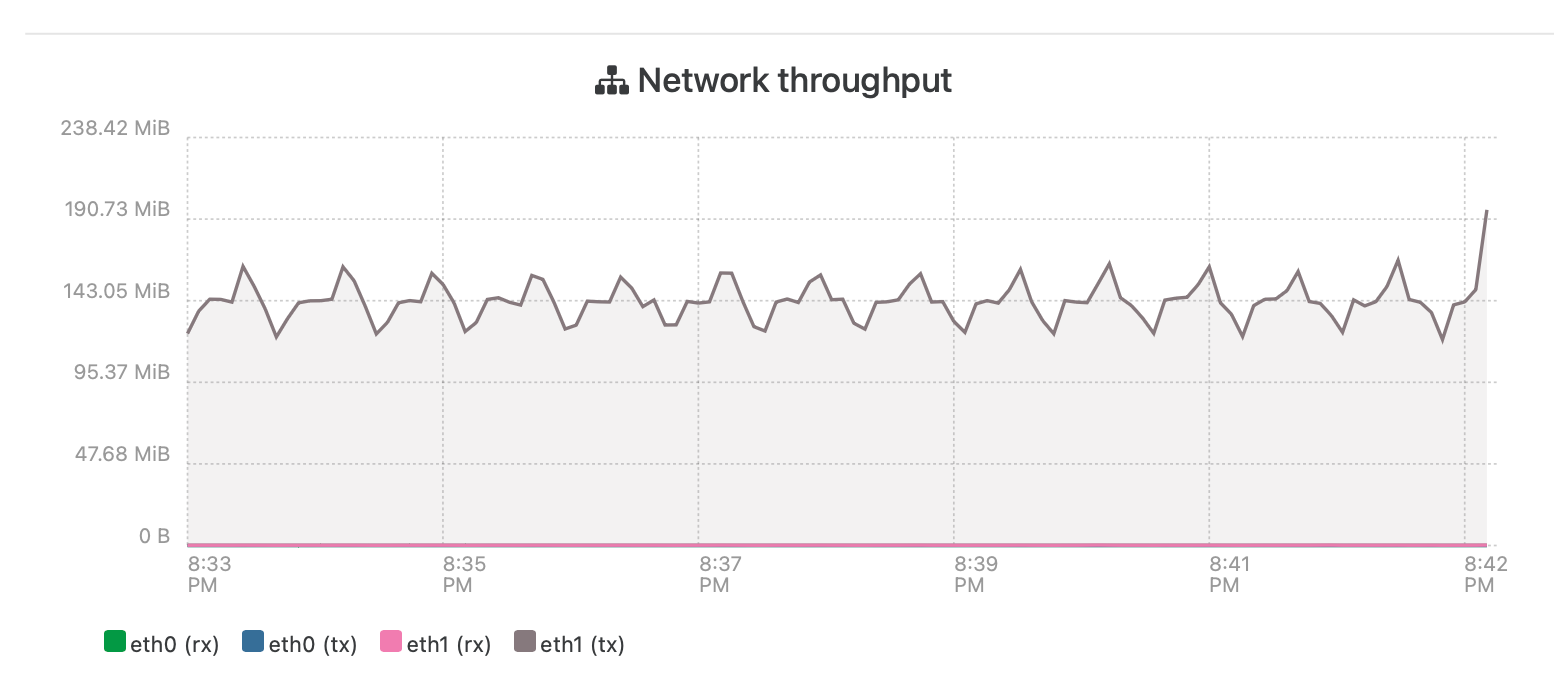

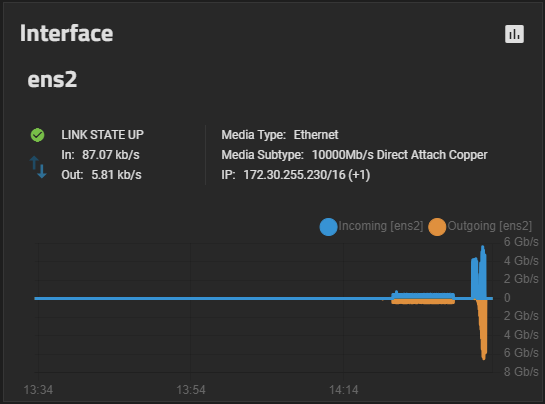

I'm currently migrating the test VDI from storage1 to storage2 (again) and getting an average of 400/400 mbps (lower case m and b). If I do three VDIs at once, I can get over a gigabit and sometimes close to 2 gigabits.
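The parallel result fits a simple model in which each migration is capped on its own and the streams add up until something shared becomes the limit. This is a rough sketch, assuming every stream sustains the same ~400 mbps and that the 10 Gbps network is the only shared ceiling; real results vary (three streams sometimes got close to 2 gigabits), so treat it only as an intuition.

```python
def aggregate_mbps(per_stream_mbps: float, streams: int, ceiling_mbps: float) -> float:
    """Total rate of parallel migrations: streams add up until a shared ceiling."""
    return min(per_stream_mbps * streams, ceiling_mbps)


per_stream = 400.0   # mbps for a single VDI migration, as observed
ceiling = 10_000.0   # mbps; assumes the 10 Gbps network is the only shared limit

for n in (1, 2, 3, 4):
    print(f"{n} VDI(s): {aggregate_mbps(per_stream, n, ceiling):.0f} mbps")
```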

It's either SMAPIv1 or a file "block" size issue: bigger blocks can get me benchmarks of 600 MBps to almost 700 MBps (capital M and B) on my slow storage over a 10 Gbps network. I'm testing this with the XCP-ng 8.3 release to see if anything changed from the beta; so far, everything is the same. All testing was also done with thin-provisioned file shares (SMB and NFS). If I could get half of my maximum test results for VDI migration, I'd be happy. In fact, I'm extremely pleased that my storage can go as fast as it shows; it's all old stuff on SATA.
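Because these posts mix mbps (megabits) and MBps (megabytes), a quick conversion puts the figures on one scale. This sketch assumes decimal units (8 bits per byte), and it treats 600 MBps as the "maximum test" result and 400 mbps as the current per-VDI migration rate; both choices are my reading of the post.

```python
BITS_PER_BYTE = 8


def megabits_to_megabytes(mbps: float) -> float:
    """Convert megabits/s (mbps) to megabytes/s (MBps)."""
    return mbps / BITS_PER_BYTE


def megabytes_to_gigabits(mb_per_s: float) -> float:
    """Convert megabytes/s (MBps) to gigabits/s (Gbps)."""
    return mb_per_s * BITS_PER_BYTE / 1000


migration = megabits_to_megabytes(400)  # one VDI migration: 400 mbps in MBps
benchmark = 600.0                       # low end of the 600-700 MBps benchmarks
target = benchmark / 2                  # "half of my maximum test results"

print(f"migration: {migration:.0f} MBps")
print(f"benchmark: {megabytes_to_gigabits(benchmark):.1f} Gbps on a 10 Gbps link")
print(f"half the benchmark is {target / migration:.0f}x today's migration rate")
```

So a single migration runs at about 50 MBps, the low-end benchmark is about 4.8 Gbps, and reaching half of it would mean roughly a 6x improvement.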

I have a whole thread on this testing if you want to read more.

You can see the migration, which ran at 400/400, and then the benchmark across the Ethernet interface of my TrueNAS. This example was a migration from SMB to NFS, with the benchmark run on the NFS share. The settings for that NFS share are in the thread mentioned above, and it's certainly my fastest non-real-world performance to date.

-

That's impressive!

We're not seeing speeds as high as you are; we have 3 different storages, mostly NFS though. We're still running 8.2.0, but I don't really think it matters, as the issue is most likely tied to SMAPIv1. We also noticed that it goes a bit faster when doing 3-4 VDIs in parallel, but the individual speed per migration is about the same.

-

I'm doing upgrades on both XCP-ng and TrueNAS. When they are done, I have 4 small VMs that I'm going to fire up at the same time to see what I can see. I might be able to hit 2 Gbps reads while all 4 are booting.

I have one last thing to try in my speed testing: seeing whether increasing the RAM for each host makes a difference. Probably not, since each already has a decent amount (the defaults go up once you pass a certain amount of available memory). I read somewhere that going up to 16 GB for each host might help; with my lab being so lightly used, I might try going up to 32 GB for fun. I have lots of free slots in my production system where I can add RAM if this helps in the lab; the lab machines are full.

-

We usually give dom0 16 GB on our busy hosts. It does make a stability difference in our case (we can have 30-40 running VMs per host), especially when there is a bit of load inside the VMs.
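As a sketch, the dom0 sizing described in this post (the 16 GB busy-host setting here, plus the tiers for normal hosts given right after) can be written down as a small lookup: 4, 6, or 8 GB for hosts with 32, 64, or 128+ GB of RAM, where the last tier is "8 GB and upwards", so 8 is only its floor. The function name is hypothetical, and treating sizes between tiers as the lower tier, or rejecting hosts under 32 GB, is my assumption rather than part of the original advice.

```python
def suggested_dom0_gb(host_ram_gb: float, busy: bool = False) -> int:
    """Rule-of-thumb dom0 memory in GB, following the sizing in this post.

    busy=True covers hosts running many VMs (30-40 per host) under load.
    Normal-host tiers: 32 GB -> 4, 64 GB -> 6, 128 GB+ -> 8 (the floor of
    "8 GB and upwards"). Sizes between tiers map to the lower tier (assumption).
    """
    if host_ram_gb < 32:
        raise ValueError("the rule of thumb only covers hosts with 32 GB or more")
    if busy:
        return 16
    if host_ram_gb >= 128:
        return 8
    if host_ram_gb >= 64:
        return 6
    return 4


for ram in (32, 96, 256):
    print(ram, suggested_dom0_gb(ram))
print("busy 256:", suggested_dom0_gb(256, busy=True))
```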

Normal hosts get 4-8 GB of RAM depending on the total amount of RAM in the host: 4 GB on the ones with 32 GB, 6 GB for 64 GB, and 8 GB and upwards for the ones with 128 GB or more.

-

BTW, the RAM increase made zero difference in the VDI migration times.

I may still change the RAM for each host in production, but I only have about 12 VMs right now and probably not many more to create in my little system. It might be useful if I ever get down to a single host because of a catastrophe. I'm currently running the default it chose, 7.41 GiB; I roughly doubled that in my lab to see what might happen.

I just got my VMware lab started, so all this other stuff is going on the back burner for a while. I will say I like the basic ESXi interface they give you; I can see why smaller systems may not bother buying vCenter. I hope XO Lite gives us the same amount of functionality (or more) when it's done.

-

@Greg_E Yeah, I can imagine. I don't think the RAM does much except give you some "margin" under heavier load. With 12 VMs, I wouldn't bother increasing it from the default values.

Yeah, the management interface in ESXi is nice; I think the standalone interface is almost better than vCenter. I'm pretty sure XO Lite will be able to do more when it's done; for example, you'll be able to manage a small pool with it.