GPU passthrough on ASRock Rack B650D4U3-2L2Q will not work

-

@Danp OK, sorry, I didn't know that. I'll do that next time.

-

@steff22 Weird bug. Is that W10 VM a fresh install on Xen? It seems that the driver or the dGPU is timing out somehow. It could be related to PCIe power management (ASPM), but I'm not sure. Just for testing, you could try booting dom0 with `pcie_aspm=off`:

`/opt/xensource/libexec/xen-cmdline --set-dom0 "pcie_aspm=off"`

`reboot`

Another option that comes to mind is to compare the VM attributes on Proxmox and try to spot any VM config differences by setting/unsetting the `PCI Express` option.

Tux
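After the reboot, a quick way to confirm that dom0 actually picked up the parameter is to look at the running dom0 kernel command line. The helper below is a minimal sketch: `has_aspm_off` is a hypothetical name, not an XCP-ng tool, and it assumes it is run in the dom0 shell, where `/proc/cmdline` should reflect what `xen-cmdline --set-dom0` configured.

```shell
# Hypothetical helper (not part of XCP-ng): succeeds if the kernel command
# line contains the exact token pcie_aspm=off.
# With no argument it reads the running kernel's command line from
# /proc/cmdline; a string can be passed instead, which is handy for testing.
has_aspm_off() {
    cmdline="${1:-$(cat /proc/cmdline)}"
    # Pad with spaces so only a whole token matches,
    # not something like pcie_aspm=offline.
    case " $cmdline " in
        *" pcie_aspm=off "*) return 0 ;;
        *) return 1 ;;
    esac
}
```

In dom0 it could be used as `has_aspm_off && echo "ASPM disabled" || echo "pcie_aspm=off not active"`.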

-

@tuxen Yes, it's a fresh install, but after the drivers failed the first time I ran DDU (Display Driver Uninstaller) and took a snapshot. I revert to this snapshot before each new test.

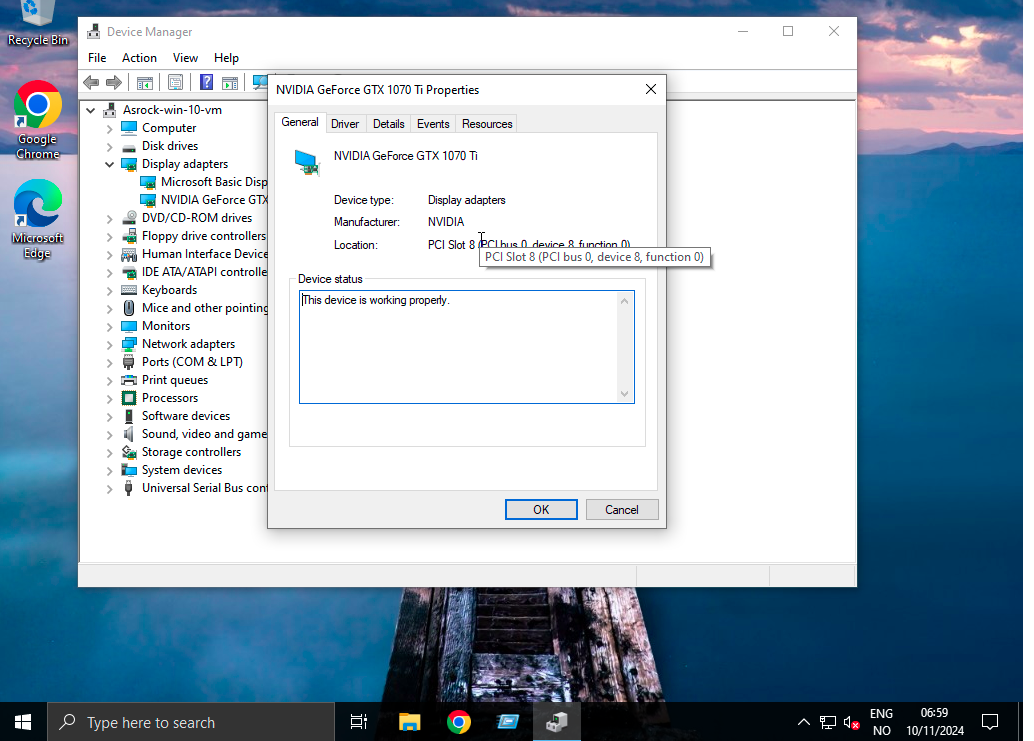

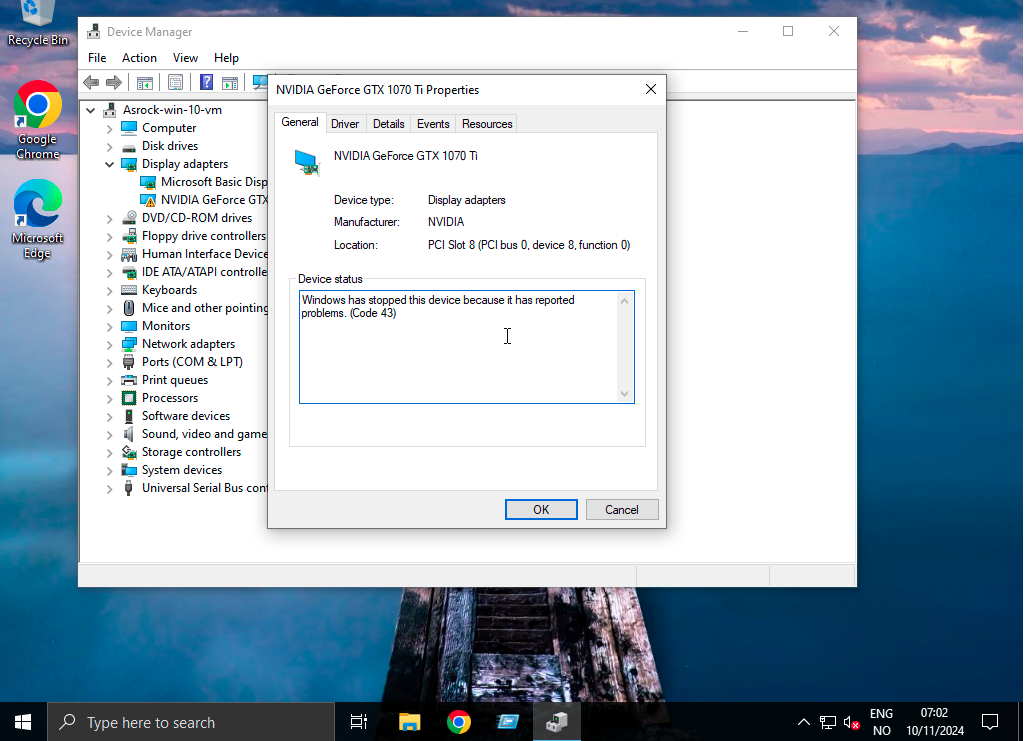

I can test tomorrow. The strange thing is that sometimes the drivers install without errors and Windows thinks the GPU is working as it should, but no additional displays are available in Windows. After a reboot, error 43 is back.

-

@steff22 Tried `pcie_aspm=off` now and still got BSODs.

But after Windows restarted, I installed the drivers without running DDU and instead chose "Perform a clean installation" in the NVIDIA driver installer. The drivers then installed as they should, but after the reboot error 43 was back.

-

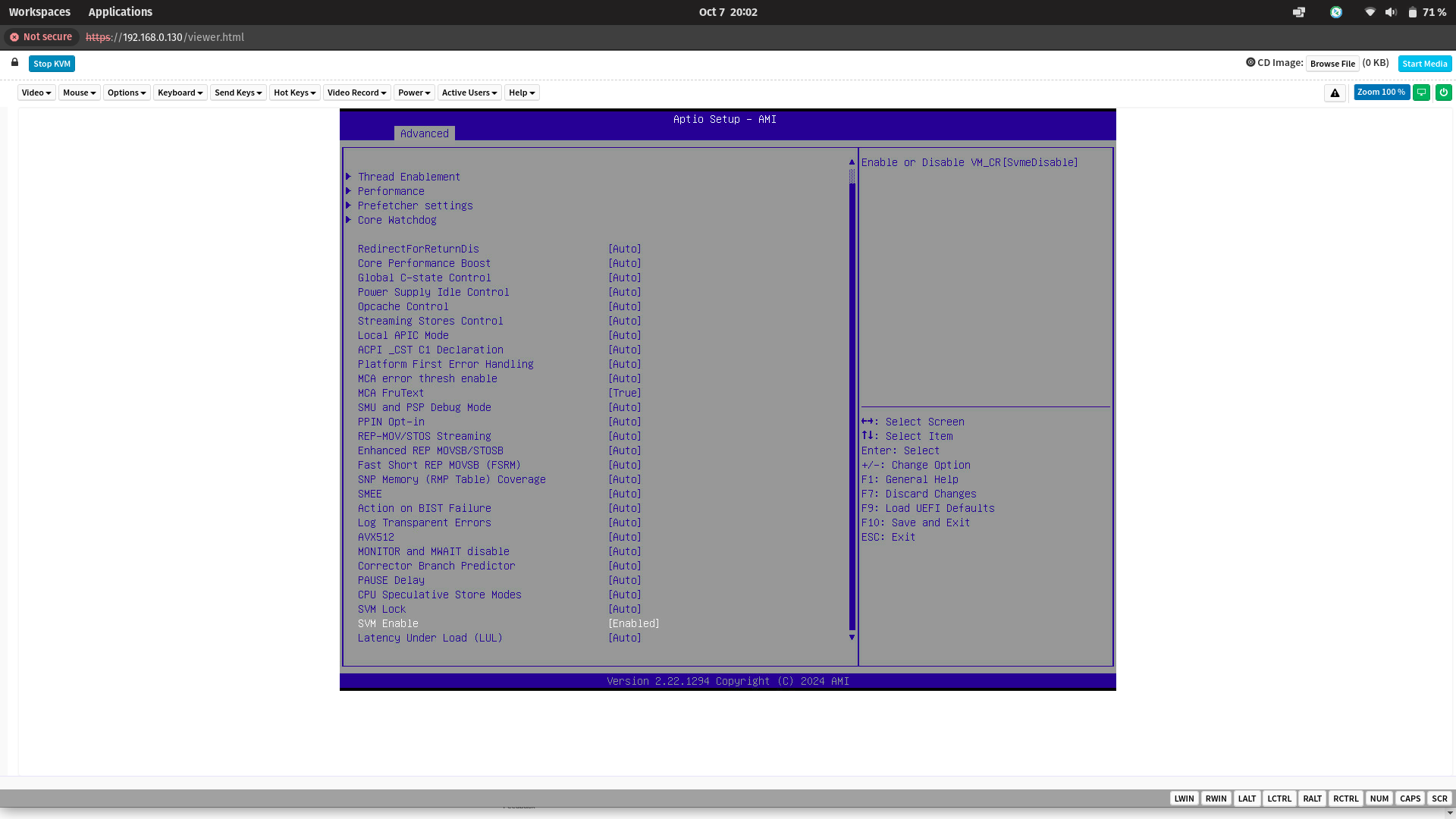

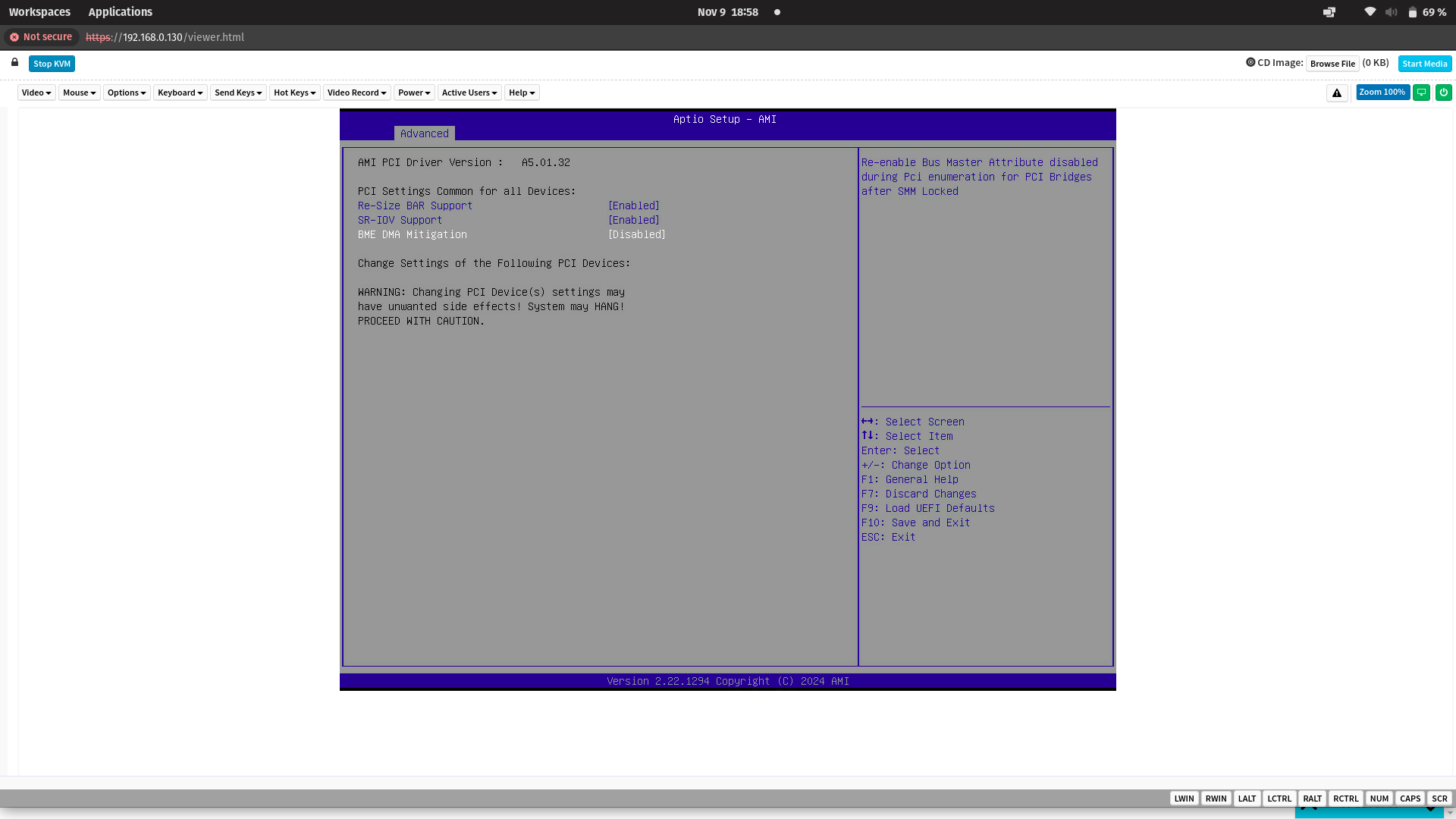

@tuxen Could it have something to do with a change in AGESA 1.2.x.x? Someone on another forum mentioned that it changes practically everything about PCI passthrough.

I have the impression that a lot has changed with AMD AM5.

I also have Resizable BAR enabled, and I have also tried with it disabled.

I see that there is an option called SVM Lock. Is there any advantage to enabling it?

I'm not sure whether comparing the VM configs with Proxmox will be a bit too technical for me.

-

@steff22 I can't find much info by searching about what this Proxmox PCI Express option does.

I think you need a Proxmox subscription to post on their forum.

But I found this, although it's only about driver installation:

> It's mostly to be compatible with different guest drivers. Some are a bit picky when it comes to that. If it works with PCI, you probably won't gain anything switching to PCIe, but it shouldn't hurt to try and see.
>
> What is better always depends on the hw, guest OS and guest driver, so we cannot give a general recommendation either way.

-

@steff22 After reading this Blue Iris topic, I wonder if it's related. As of Xen 4.15, there was a change in MSR handling that can cause a guest to crash if it tries to access those registers. XCP-ng 8.3 ships Xen 4.17. The issue also seems to depend on the CPU vendor and model.

https://xcp-ng.org/forum/topic/8873/windows-blue-iris-xcp-ng-8-3

It's worth testing the solution provided there (a VM shutdown/start cycle is required for it to take effect):

`xe vm-param-add uuid=<VM-UUID> param-name=platform msr-relaxed=true`

Replace `<VM-UUID>` with the UUID of your W10 VM.

Tux
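Since the flag only takes effect after a full VM shutdown/start cycle, the whole sequence can be wrapped in one dom0 function. This is a sketch, not an official tool: `apply_msr_relaxed` and the `XE` override (set `XE=echo` for a dry run that only prints the commands) are hypothetical, while `vm-param-add`, `vm-shutdown`, `vm-start` and `vm-param-get` are standard `xe` subcommands.

```shell
# Hypothetical helper: add msr-relaxed=true to a VM's platform map,
# power-cycle the VM so the flag takes effect, then read the key back.
# Assumes it runs in the XCP-ng dom0 shell where `xe` is available.
# XE is a hypothetical override: XE=echo turns this into a dry run.
apply_msr_relaxed() {
    vm_uuid="$1"
    xe_cmd="${XE:-xe}"

    if [ -z "$vm_uuid" ]; then
        echo "usage: apply_msr_relaxed <VM-UUID>" >&2
        return 2
    fi

    # Same command as in the post above.
    $xe_cmd vm-param-add uuid="$vm_uuid" param-name=platform msr-relaxed=true || return 1

    # Per the linked topic, a shutdown/start cycle is needed for the flag to apply.
    $xe_cmd vm-shutdown uuid="$vm_uuid" || return 1
    $xe_cmd vm-start uuid="$vm_uuid" || return 1

    # Confirm the key is now present in the platform map.
    $xe_cmd vm-param-get uuid="$vm_uuid" param-name=platform param-key=msr-relaxed
}
```

Running `XE=echo apply_msr_relaxed <VM-UUID>` first shows exactly which commands would be sent before anything touches the VM.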

-

@tuxen That didn't work either.

Probably not that interesting, but I also tried XCP-ng 8.2.1: same error there.

-

@tuxen I got hold of a custom BIOS with the 4G (Above 4G Decoding) option available now. But disabling 4G didn't help. It was hidden, but always enabled.

But I see that in Proxmox the PCI slot does not change: it stays at slot 1, whereas with XCP-ng it changes to slot 8.

Proxmox users use the hex PCI ID for GPU passthrough. Does this matter?

-

It shouldn't. Any idea here, @Teddy-Astie?

-

@steff22 said:

> @tuxen I got hold of a custom BIOS with the 4G option available now. But disabling 4G didn't help. It was hidden, but always enabled.
>
> But I see that in Proxmox the PCI slot does not change: it stays at slot 1, whereas with XCP-ng it changes to slot 8.

Is this slot change in the guest or in XCP-ng itself?

I would not be surprised to see it change in the guest (that's part of what QEMU PCI passthrough can do), but that would be weird for Dom0.

> Proxmox users use the hex PCI ID for GPU passthrough. Does this matter?

I don't think it's guaranteed in Proxmox that you see the same BDF (bus:device.function address) in the guest and on the host; it's more likely a coincidence. But that's a behavior that would change in XCP-ng with Q35/proper PCIe support.

Aside from that, it "appears" to work in a Linux VM (albeit with no display). Have you managed to get CUDA running there (to see if the device actually works)? And does the BAR window message still appear?
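A first pass at both checks inside the Linux guest could look like the sketch below. The function names are hypothetical, and the grep patterns (`NVRM`, `BAR`, DRM `*ERROR*` lines) are assumptions picked for this thread, not an official list of NVIDIA messages. `nvidia-smi` only shows that the driver can talk to the GPU; running an actual CUDA program (for example the `deviceQuery` sample from NVIDIA's CUDA samples) is the stronger test.

```shell
# Hypothetical helper: keep only kernel log lines that look relevant to the
# passed-through NVIDIA GPU. Reads the log on stdin.
scan_gpu_log() {
    # NVRM: NVIDIA kernel driver messages; BAR: PCI BAR sizing/mapping;
    # *ERROR*: DRM error lines such as the nvidia-drm modeset message.
    grep -E 'NVRM|BAR|\*ERROR\*'
}

# Hypothetical helper: driver-level sanity check via nvidia-smi,
# which is normally installed together with the NVIDIA driver.
check_gpu() {
    if ! command -v nvidia-smi >/dev/null 2>&1; then
        echo "nvidia-smi not found; is the NVIDIA driver installed?" >&2
        return 1
    fi
    nvidia-smi
}
```

In the guest this could be run as `sudo dmesg | scan_gpu_log` followed by `check_gpu`.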

-

@Teddy-Astie No, only in the guest.

I haven't tried anything more with Pop!_OS than trying to extend the screen.

I can try to check it with CUDA running.

-

@Teddy-Astie I have installed the NVIDIA drivers and the CUDA Toolkit. It seems like the drivers are running properly and I can see the GPU in Pop!_OS, but I ran dmesg again and got an error:

    ation="profile_load" profile="unconfined" name="libreoffice-xpdfimport" pid=516 comm="apparmor_parser"
    [ 5.425026] Adding 4193784k swap on /dev/mapper/cryptswap. Priority:-2 extents:1 across:4193784k SS
    [ 5.427939] nvidia: module license 'NVIDIA' taints kernel.
    [ 5.427941] Disabling lock debugging due to kernel taint
    [ 5.427943] nvidia: module license taints kernel.
    [ 5.473953] nvidia-nvlink: Nvlink Core is being initialized, major device number 238
    [ 5.475202] xen: --> pirq=24 -> irq=17 (gsi=17)
    [ 5.475401] nvidia 0000:00:08.0: vgaarb: VGA decodes changed: olddecodes=io+mem,decodes=none:owns=io+mem
    [ 5.476296] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 560.35.03 Fri Aug 16 21:39:15 UTC 2024
    [ 5.502521] nvidia-modeset: Loading NVIDIA Kernel Mode Setting Driver for UNIX platforms 560.35.03 Fri Aug 16 21:21:48 UTC 2024
    [ 5.505900] [drm] [nvidia-drm] [GPU ID 0x00000008] Loading driver
    [ 5.829042] zram: Added device: zram0
    [ 6.103268] kauditd_printk_skb: 18 callbacks suppressed
    [ 6.103273] audit: type=1400 audit(1731496933.838:29): apparmor="DENIED" operation="capable" class="cap" profile="/usr/sbin/cupsd" pid=671 comm="cupsd" capability=12 capname="net_admin"
    [ 6.288396] snd_hda_intel 0000:00:09.0: azx_get_response timeout, switching to polling mode: last cmd=0x000f0000
    [ 6.385067] [drm] Initialized nvidia-drm 0.0.0 20160202 for 0000:00:08.0 on minor 1
    [ 6.422276] nvidia_uvm: module uses symbols nvUvmInterfaceDisableAccessCntr from proprietary module nvidia, inheriting taint.
    [ 6.452377] nvidia-uvm: Loaded the UVM driver, major device number 236.
    [ 6.844962] zram0: detected capacity change from 0 to 32735232
    [ 7.215079] input: HDA NVidia HDMI/DP,pcm=3 as /devices/pci0000:00/0000:00:09.0/sound/card0/input6
    [ 7.216764] input: HDA NVidia HDMI/DP,pcm=7 as /devices/pci0000:00/0000:00:09.0/sound/card0/input7
    [ 7.217068] input: HDA NVidia HDMI/DP,pcm=8 as /devices/pci0000:00/0000:00:09.0/sound/card0/input8
    [ 7.217363] input: HDA NVidia HDMI/DP,pcm=9 as /devices/pci0000:00/0000:00:09.0/sound/card0/input9
    [ 7.332815] Adding 16367612k swap on /dev/zram0. Priority:1000 extents:1 across:16367612k SS
    [ 10.684565] rfkill: input handler disabled
    [ 20.569595] rfkill: input handler enabled
    [ 23.259676] rfkill: input handler disabled
    [ 23.572353] [drm:nv_drm_master_set [nvidia_drm]] *ERROR* [nvidia-drm] [GPU ID 0x00000008] Failed to grab modeset ownership
    steff@pop-os:~$ lspci | grep VGA
    00:02.0 VGA compatible controller: Device 1234:1111 (rev 02)
    00:08.0 VGA compatible controller: NVIDIA Corporation GP104 [GeForce GTX 1070 Ti] (rev a1)

-

@steff22 This turned out to be a known, harmless message:

> The warning message is expected. When a client (such as the modesetting driver) attempts to open our DRM device node while modesetting permission is already acquired by something else (like the NVIDIA X driver), it has to fail, but the kernel won't let us return a failure after v5.9-rc1, so we print this message. It won't impact functionality of the NVIDIA X driver that already has modesetting permission. Safe to ignore as long as you didn't need the other client to actually get modesetting permission. If you want to suppress the error, you would need to find which client is attempting to open the NVIDIA DRM device node and prevent it from doing so.

-

@steff22 I have some questions:

- Is the host being powered up with a monitor or a dummy plug (headless) already attached to the dGPU?

- Without rebooting the VM, and right after the driver installation succeeds (showing that the device is working OK), what happens if you click the "Detect" button in the display settings window?

- Instead of a reboot, did you try a VM shutdown/start cycle the first time after the driver installation?

Nonetheless, if the same dGPU card works normally on another XCP-ng host, a possible Xen passthrough incompatibility with that AM5 board should be considered. For example:

- CSM/UEFI GPU compat issues, as referenced by @Teddy-Astie

- A beta BIOS or IOMMU implementation that is broken or lacks features (e.g. ACS support for PCI/PCIe isolation)

Tux

-

@tuxen 1: Yes, there is an HDMI screen connected to the dGPU.

There is also an IPMI with a dedicated VGA output connected, but there is a bug that keeps the primary screen from working properly.

The BIOS disables the internal IPMI graphics when an external GPU card is connected, even though the integrated GPU is selected as the primary GPU in the BIOS. So I only see the XCP-ng startup on screen, not xsconsole. I have also tried without a screen connected to the external GPU: same error.

2: I have tried pressing Detect, only to be told that there are no other displays. 3: I have only tried a reboot.

At first I thought there was something wrong with the BIOS, but this works with VMware ESXi and Proxmox.

-

@steff22 I think I remember that others also struggled with the same GPU passthrough problem on the ASRock Rack B650D4U, though I'm not sure whether it was with AM5.

-

@steff22 said:

> The BIOS disables the internal IPMI graphics when an external GPU card is connected, even though the integrated GPU is selected as the primary GPU in the BIOS. So I only see the XCP-ng startup on screen, not xsconsole. I have also tried without a screen connected to the external GPU: same error.

I suggest calling ASRock support and explaining this behavior.

@steff22 said:

> 2: I have tried pressing Detect, only to be told that there are no other displays. 3: I have only tried a reboot.

Could you try the shutdown/start after the driver installation?

@steff22 said:

> At first I thought there was something wrong with the BIOS, but this works with VMware ESXi and Proxmox.

Considering it worked with the same XCP-ng version but on different hardware, I'm more inclined to suspect a Xen incompatibility with the combination of NVIDIA and some AMD motherboards. If you search the forum, there are mixed results about that.

-

@tuxen I tried shutdown/start now: same error.

Yes, I'm of that opinion too: when it comes to NVIDIA, the drivers are a bit picky.

I got it to work with the ASRock motherboard and an old Radeon HD 5450, so I think I'll sell the 1070 Ti; the card is starting to get old. I'd rather invest in a new Radeon GPU, and then I'll have to bet that it works on the ASRock motherboard. Does vGPU like that only work on enterprise GPUs such as NVIDIA Tesla?

-

Thank you for the time you spent on the troubleshooting process.