Continuous replication problem with multiple SR

-

Hello,

I have a problem with continuous replication: if I replicate to a single SR everything is OK, but if I add two or more SRs the job fails with the error message below.

After the error the job does nothing more and stays stuck, and the failure is always on the first SR in the list.

Info:

- XCP-ng 8.3, up to date
- XOCE commit 6ecab

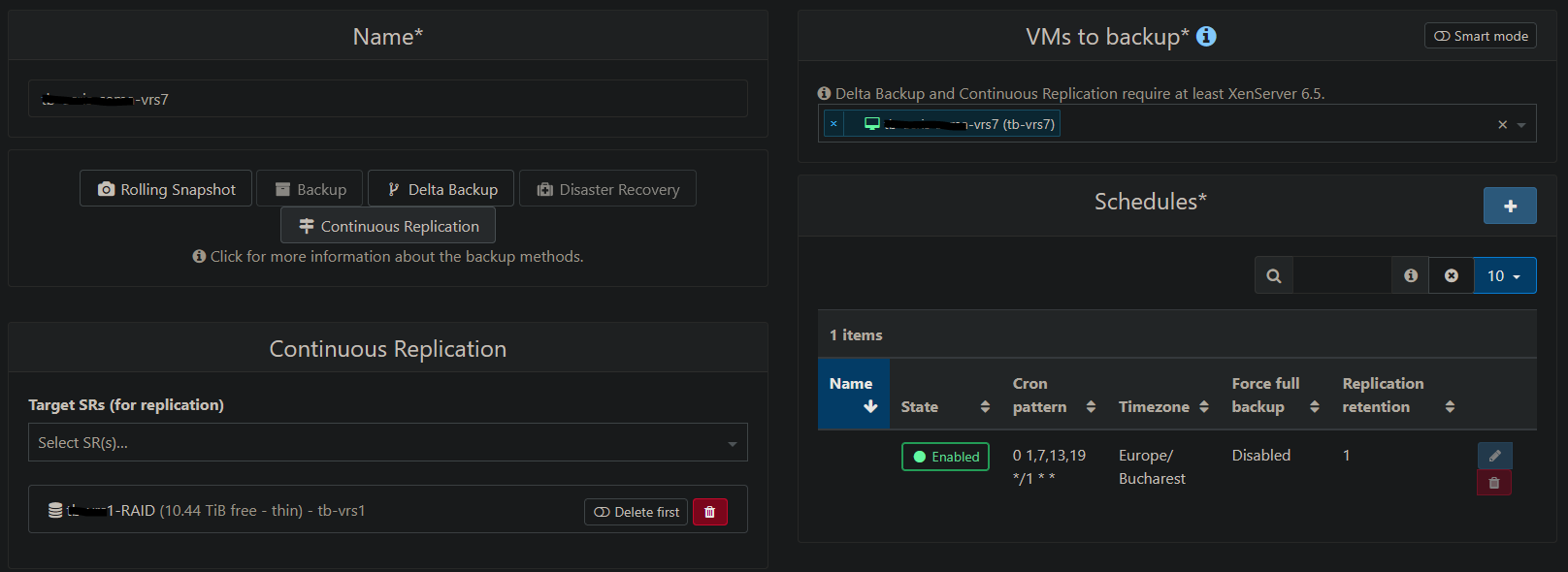

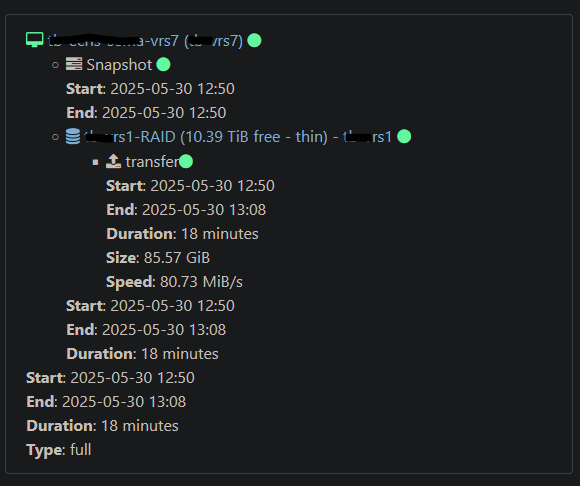

Here it is with only one SR and everything is ok:

-

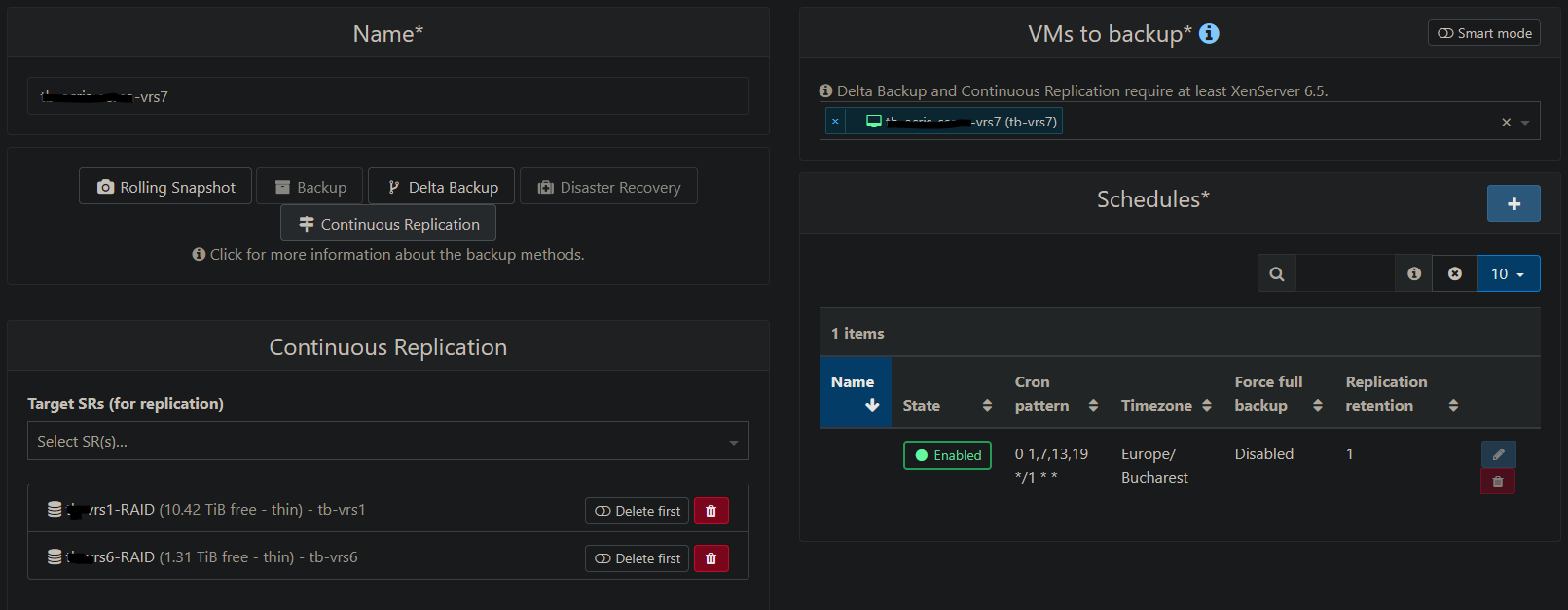

configuration:

-

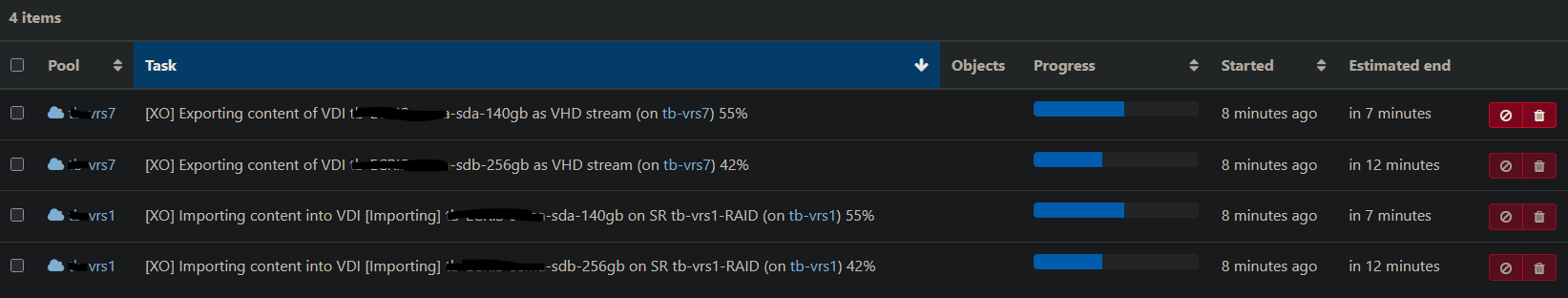

transfer:

-

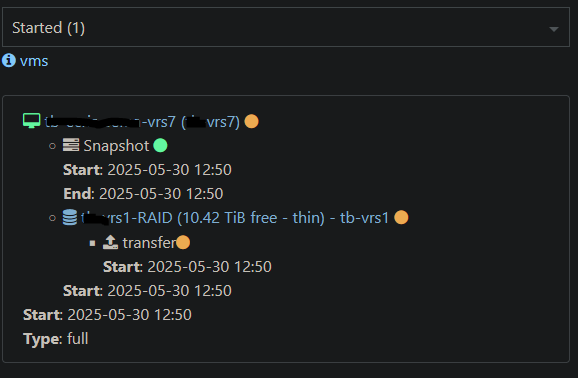

message

-

-

Here it is with two SRs

-

configuration:

-

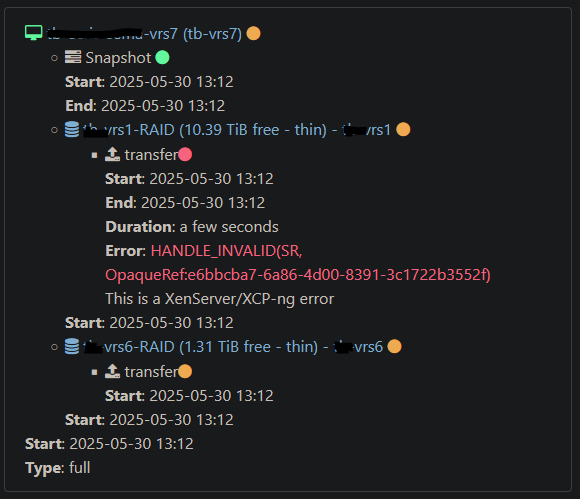

transfer:

-

message:

-

log:

{ "data": { "mode": "delta", "reportWhen": "always" }, "id": "1748599936065", "jobId": "109e74e9-b59f-483b-860f-8f36f5223789", "jobName": "********-vrs7", "message": "backup", "scheduleId": "40f57bd8-2557-4cf5-8322-705ec1d811d2", "start": 1748599936065, "status": "pending", "infos": [ { "data": { "vms": [ "629bdfeb-7700-561c-74ac-e151068721c2" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "629bdfeb-7700-561c-74ac-e151068721c2", "name_label": "********-vrs7" }, "id": "1748599941103", "message": "backup VM", "start": 1748599941103, "status": "pending", "tasks": [ { "id": "1748599941671", "message": "snapshot", "start": 1748599941671, "status": "success", "end": 1748599945608, "result": "5c030f40-0b34-d1b4-10aa-f849548aa0b7" }, { "data": { "id": "1afcdfda-6ede-3cb5-ecbf-29dc09ea605c", "isFull": true, "name_label": "********-RAID", "type": "SR" }, "id": "1748599945609", "message": "export", "start": 1748599945609, "status": "pending", "tasks": [ { "id": "1748599948875", "message": "transfer", "start": 1748599948875, "status": "failure", "end": 1748599949159, "result": { "code": "HANDLE_INVALID", "params": [ "SR", "OpaqueRef:e6bbcba7-6a86-4d00-8391-3c1722b3552f" ], "call": { "duration": 6, "method": "VDI.create", "params": [ "* session id *", { "name_description": "********-sdb-256gb", "name_label": "********-sdb-256gb", "other_config": { "xo:backup:vm": "629bdfeb-7700-561c-74ac-e151068721c2", "xo:copy_of": "59ee458d-99c8-4a45-9c91-263c9729208b" }, "read_only": false, "sharable": false, "SR": "OpaqueRef:e6bbcba7-6a86-4d00-8391-3c1722b3552f", "tags": [], "type": "user", "virtual_size": 274877906944, "xenstore_data": {} } ] }, "message": "HANDLE_INVALID(SR, OpaqueRef:e6bbcba7-6a86-4d00-8391-3c1722b3552f)", "name": "XapiError", "stack": "XapiError: HANDLE_INVALID(SR, OpaqueRef:e6bbcba7-6a86-4d00-8391-3c1722b3552f)\n at XapiError.wrap (file:///opt/xen-orchestra/packages/xen-api/_XapiError.mjs:16:12)\n at file:///opt/xen-orchestra/packages/xen-api/transports/json-rpc.mjs:38:21\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)" } } ] }, { "data": { "id": "a5d2b22e-e4be-c384-9187-879aa41dd70f", "isFull": true, "name_label": "********-vrs6-RAID", "type": "SR" }, "id": "1748599945617", "message": "export", "start": 1748599945617, "status": "pending", "tasks": [ { "id": "1748599948887", "message": "transfer", "start": 1748599948887, "status": "pending" } ] } ] } ] }

-
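The job log in the first post is a tree of nested `tasks`, so the failing step is easy to miss when reading it by eye. As a rough sketch (not part of Xen Orchestra; the function name `find_failures` is made up for illustration, and the sample is a trimmed copy of that log keeping only the fields the walk needs), a short recursive walk can pull out every task whose `status` is `failure`:

```python
import json

# Trimmed copy of the job log posted in this thread (illustrative subset of fields).
LOG = json.loads("""
{
  "message": "backup", "status": "pending",
  "tasks": [{
    "message": "backup VM", "status": "pending",
    "tasks": [
      {"message": "snapshot", "status": "success"},
      {"message": "export", "status": "pending",
       "data": {"type": "SR", "id": "1afcdfda-6ede-3cb5-ecbf-29dc09ea605c"},
       "tasks": [{"message": "transfer", "status": "failure",
                  "result": {"code": "HANDLE_INVALID",
                             "call": {"method": "VDI.create"}}}]},
      {"message": "export", "status": "pending",
       "data": {"type": "SR", "id": "a5d2b22e-e4be-c384-9187-879aa41dd70f"},
       "tasks": [{"message": "transfer", "status": "pending"}]}
    ]
  }]
}
""")


def find_failures(task, path=()):
    """Yield (path, error code, XAPI method) for every failed task in the tree."""
    here = path + (task.get("message", "?"),)
    if task.get("status") == "failure":
        result = task.get("result") or {}
        call = result.get("call") or {}
        yield " > ".join(here), result.get("code"), call.get("method")
    # Recurse into sub-tasks; leaf tasks simply have no "tasks" key.
    for child in task.get("tasks", []):
        yield from find_failures(child, here)


for where, code, method in find_failures(LOG):
    print(f"{where}: {code} (XAPI call: {method})")
```

On this sample the walk reports a single failure, the `transfer` under the first `export`, with code `HANDLE_INVALID` raised by `VDI.create`, while the second SR's transfer is still `pending`.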

@Gheppy Hi,

Worth an investigation for @florent (adding also @lsouai-vates in the loop)

-

@olivierlambert @Gheppy that is a nice catch and it gives us an interesting clue

I am currently working on it

-

I have a branch, fix_multiple_replication, that fixes this case, at least in our lab. The PR is here: https://github.com/vatesfr/xen-orchestra/pull/8668

it should be in master tomorrow, and hopefully in latest by the end of the week

-

it's merged in master (also with the same fix for mirror backups)

-

I tested with commit e64c434 and it is ok.

Thank you

-

@Gheppy it's always easier to fix when we can build a minimal setup that reproduces the issue

thanks for your report

-

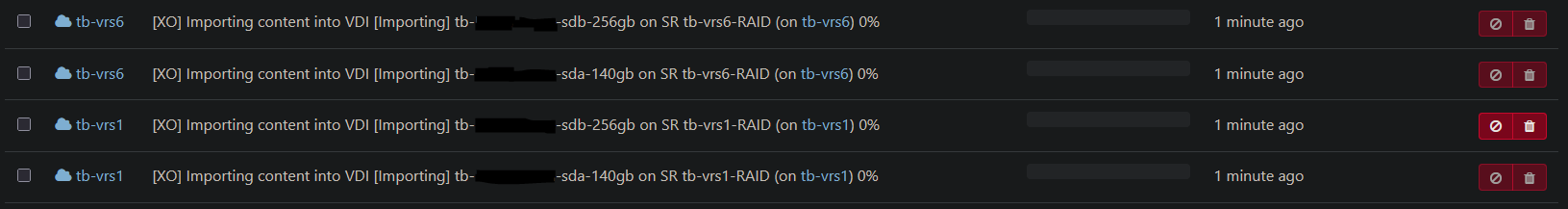

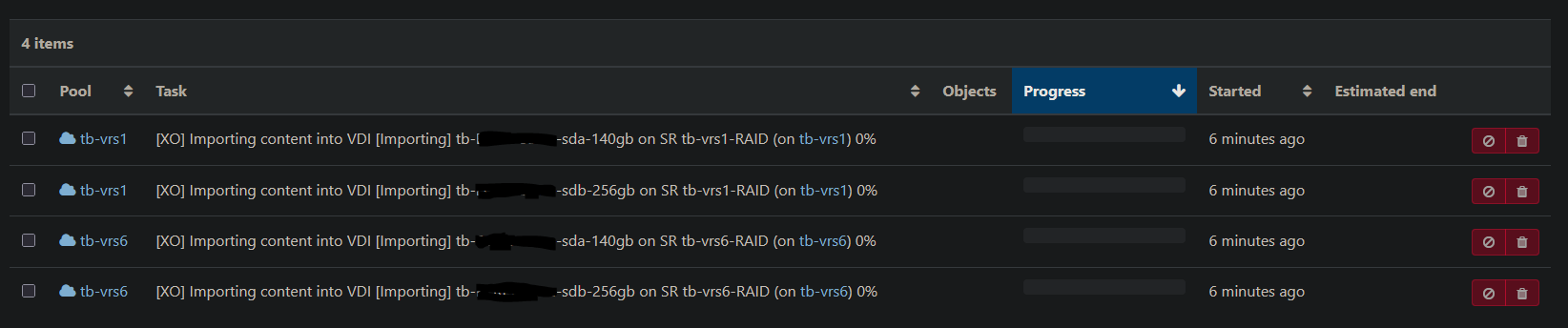

Hello, the problem has reappeared.

This time with a different behavior.

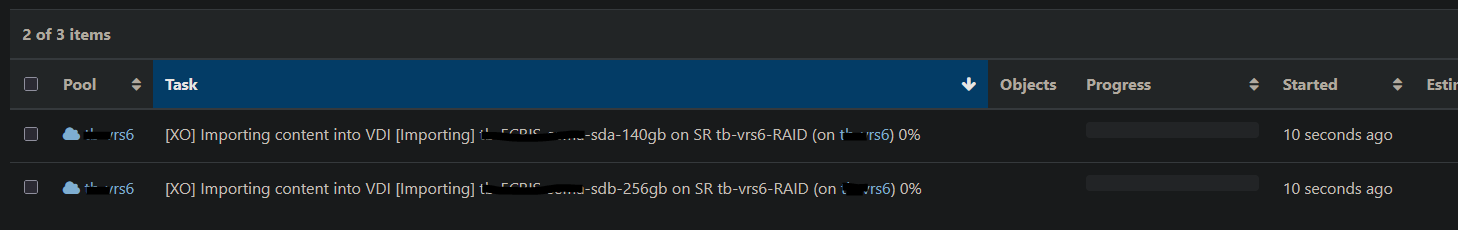

It hangs at this stage, and the export tasks do not even start or show up.

Info: XCP-ng 8.3 up to date, XOCE commit fcefaaea85651c3c0bb40bfba8199dd4e963211c

-

@Gheppy Are you sure you are on the latest commit on master?

-

@olivierlambert

The problem starts with commit cbc07b319fea940cab77bc163dfd4c4d7f886776

This one is OK: 7994fc52c31821c2ad482471319551ee00dc1472

-
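Narrowing a regression down to a last-good and a first-bad commit, as in the post above, is what a bisection automates (`git bisect` does this against a real repository). A minimal sketch of the idea, with an entirely hypothetical commit list and a hypothetical `is_good` check standing in for "run the replication job and see whether it completes":

```python
def first_bad(commits, is_good):
    """Return the first bad commit by binary search.

    Assumes commits are ordered oldest to newest, commits[0] is known good,
    commits[-1] is known bad, and every commit after the first bad one is bad.
    """
    lo, hi = 0, len(commits) - 1  # invariant: commits[lo] good, commits[hi] bad
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if is_good(commits[mid]):
            lo = mid
        else:
            hi = mid
    return commits[hi]


# Hypothetical history: placeholder labels, not real xen-orchestra commits.
history = ["good-0", "c1", "c2", "c3", "c4", "bad-5"]
still_working = {"good-0", "c1", "c2"}  # pretend the regression landed in "c3"

print(first_bad(history, lambda c: c in still_working))
```

Each step halves the range, so even a long list of commits between two builds needs only a handful of test runs.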

@Gheppy It's not clear to me. Is it fixed on the latest commit on master as of now?

-

@olivierlambert

I updated to the latest commit; there are two more changes on git now.

The problem still exists. From what I've noticed, the export tasks start being created and then disappear shortly after. A few moments later the import tasks appear, and the job remains blocked like this.

Everything is OK only up to commit 7994fc52c31821c2ad482471319551ee00dc1472 (inclusive).

EDIT: I read on the forum about similar problems. I don't have NBD enabled.

-

@Gheppy is there anything in the xo logs? Like an error on "readblock"?

-

@florent

I will update to the latest version and look at the logs.

Right now I am on commit 7994fc52c31821c2ad482471319551ee00dc1472

-

@Gheppy if this is what I think it is, it should have been fixed by https://github.com/vatesfr/xen-orchestra/commit/3c8efb4dc3e1cb3955521063047227849cfb6ed3

-

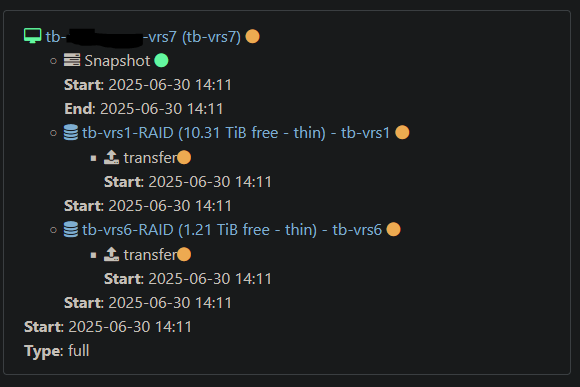

@florent

The same result

Logs (the task is blocked at the importing step):

{ "data": { "mode": "delta", "reportWhen": "always" }, "id": "1751375761951", "jobId": "109e74e9-b59f-483b-860f-8f36f5223789", "jobName": "tb-xxxx-xxxx-vrs7", "message": "backup", "scheduleId": "40f57bd8-2557-4cf5-8322-705ec1d811d2", "start": 1751375761951, "status": "pending", "infos": [ { "data": { "vms": [ "629bdfeb-7700-561c-74ac-e151068721c2" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "629bdfeb-7700-561c-74ac-e151068721c2", "name_label": "tb-xxxx-xxxx-vrs7" }, "id": "1751375768046", "message": "backup VM", "start": 1751375768046, "status": "pending", "tasks": [ { "id": "1751375768586", "message": "snapshot", "start": 1751375768586, "status": "success", "end": 1751375772595, "result": "904c2b00-087f-45ae-9799-b6dad1680aff" }, { "data": { "id": "1afcdfda-6ede-3cb5-ecbf-29dc09ea605c", "isFull": true, "name_label": "tb-vrs1-RAID", "type": "SR" }, "id": "1751375772596", "message": "export", "start": 1751375772596, "status": "pending", "tasks": [ { "id": "1751375773638", "message": "transfer", "start": 1751375773638, "status": "pending" } ] }, { "data": { "id": "a5d2b22e-e4be-c384-9187-879aa41dd70f", "isFull": true, "name_label": "tb-vrs6-RAID", "type": "SR" }, "id": "1751375772611", "message": "export", "start": 1751375772611, "status": "pending", "tasks": [ { "id": "1751375773654", "message": "transfer", "start": 1751375773654, "status": "pending" } ] } ] } ] }

-
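Unlike the first incident, this log records no error at all: the job is simply stuck. A small sketch (again not XO code; `pending_leaves` is a hypothetical helper and the sample is a trimmed copy of the log above) that lists the leaf tasks still `pending`, together with the SR they belong to, makes that visible at a glance:

```python
# Trimmed copy of the stuck job log above (illustrative subset of fields).
STUCK_LOG = {
    "id": "1751375761951", "message": "backup", "status": "pending",
    "tasks": [{
        "id": "1751375768046", "message": "backup VM", "status": "pending",
        "tasks": [
            {"id": "1751375768586", "message": "snapshot", "status": "success"},
            {"id": "1751375772596", "message": "export", "status": "pending",
             "data": {"type": "SR", "name_label": "tb-vrs1-RAID"},
             "tasks": [{"id": "1751375773638", "message": "transfer",
                        "status": "pending"}]},
            {"id": "1751375772611", "message": "export", "status": "pending",
             "data": {"type": "SR", "name_label": "tb-vrs6-RAID"},
             "tasks": [{"id": "1751375773654", "message": "transfer",
                        "status": "pending"}]},
        ],
    }],
}


def pending_leaves(task, sr_label=None):
    """Yield (SR label, task message, task id) for leaf tasks still pending."""
    data = task.get("data") or {}
    if data.get("type") == "SR":
        sr_label = data.get("name_label")  # remember which SR this subtree targets
    children = task.get("tasks", [])
    if not children and task.get("status") == "pending":
        yield sr_label, task.get("message"), task.get("id")
    for child in children:
        yield from pending_leaves(child, sr_label)


for sr, message, task_id in pending_leaves(STUCK_LOG):
    print(f"{sr}: {message} task {task_id} is still pending")
```

Here both `transfer` tasks, one per SR, come back as pending with no `result`, which matches the description of a job that hangs rather than fails.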

@Gheppy and nothing in the journalctl log?

-

hmm,

I found the solution, after I read here on forum.

I enable this options and save and then disable and save and now is ok. Task is running again. It is running with last commit to

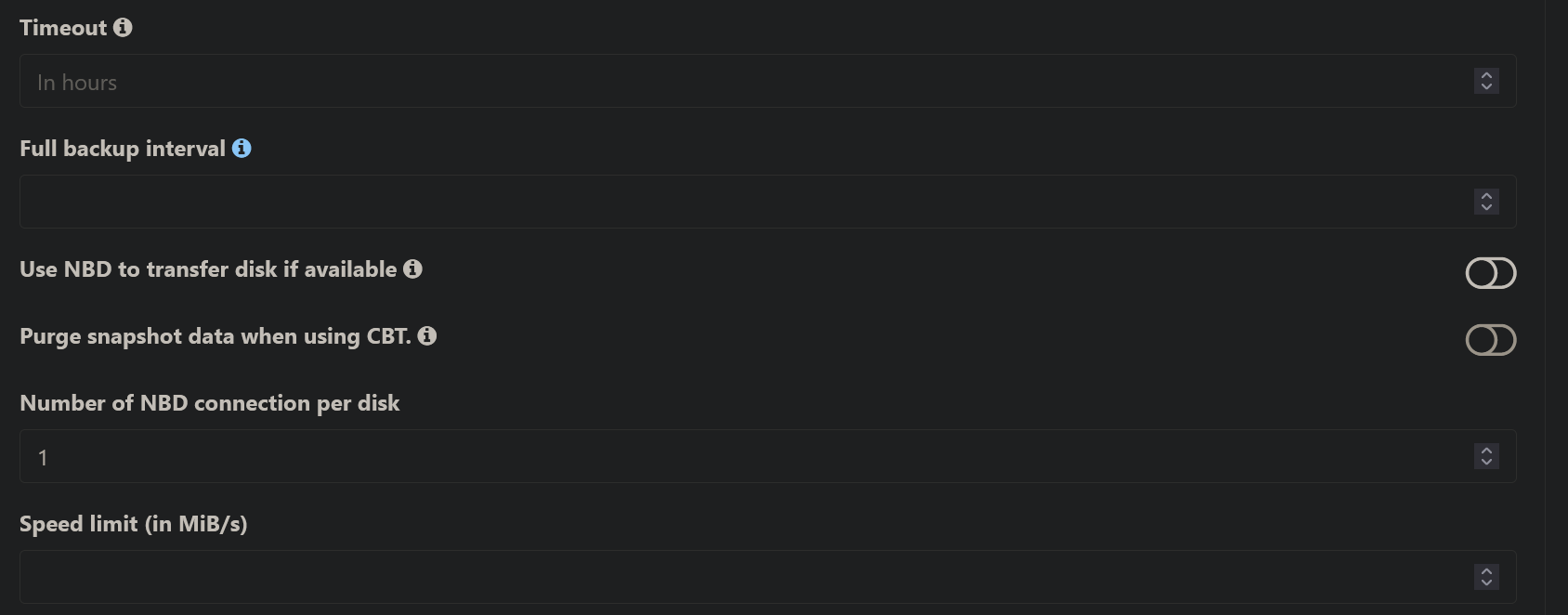

So the problem is with these settings which may not exist in the task created before the update.
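If that diagnosis is right, toggling the options and saving works because it writes the keys explicitly into the stored job. The general pattern can be sketched as below. This is only an illustration under that assumption, not Xen Orchestra's implementation: the option names in `DEFAULTS` are hypothetical placeholders (the real options are only visible in the screenshot), and `normalize_settings` is a made-up helper.

```python
# Hypothetical option names; the real ones are not named in this thread.
DEFAULTS = {
    "hypothetical_option_a": False,
    "hypothetical_option_b": False,
}


def normalize_settings(stored, defaults=DEFAULTS):
    """Return (settings, missing): stored settings with absent keys filled in.

    Values already stored always win over defaults; `missing` lists the keys
    that an older job did not have at all.
    """
    missing = sorted(set(defaults) - set(stored))
    return {**defaults, **stored}, missing


# A job saved before the update: it has no entry for the newer options.
old_job_settings = {"reportWhen": "always"}

settings, missing = normalize_settings(old_job_settings)
print("missing before normalisation:", missing)
print("effective settings:", settings)
```

Code that reads such settings can then rely on every key being present, instead of behaving differently for jobs created before and after an update.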