Error: invalid HTTP header in response body

-

@olivierlambert Yes, I understand that you need a bit more details about the configuration to be able to find out what's causing the problems.

I'm using XO for now, latest version as of yesterday (as I try with updating on each day I get these recurring failures), so I'm just 3 commits behind (Xen Orchestra, commit c5a26).

Tonight (as expected) 6 machines got that same backup failure. I'm now running one of them manually with a script I wrote, and it looks good so far.

I asked for more debug-options to enable, to be able to catch these errors, but did not get any reply in time for tonight's failure. I don't know how much the short backup logs might help (there is actually nothing else useful in there than the mentioned error).The next failure is scheduled for 03:15 tonight (15 3 * * 3)

This is the configuration you might find useful:

Sequence (3c05) "Special-task machines" (15 1 * * 2)

Unnamed schedule (Docker)

Unnamed schedule (HomeAssistant)

weekly tuesday (TrueNAS)

Unnamed schedule (Win10 22H2 (XCP-VM-BU1))

Unnamed schedule (pfSense)

Unnamed schedule (unifi)The schedules included in the sequence are disabled in the backup job setup.

Backup log for job "Docker"

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1748301300016", "jobId": "b9142eba-4954-414a-bbc0-180b071a6490", "jobName": "Docker", "message": "backup", "scheduleId": "148eb0e1-1a22-4966-9143-ce53849875de", "start": 1748301300016, "status": "failure", "infos": [ { "data": { "vms": [ "2af0192f-3cb3-daa2-33e6-ec8f18b0cfb1" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "2af0192f-3cb3-daa2-33e6-ec8f18b0cfb1", "name_label": "Docker" }, "id": "1748301324573", "message": "backup VM", "start": 1748301324573, "status": "failure", "tasks": [ { "id": "1748301324578", "message": "clean-vm", "start": 1748301324578, "status": "success", "end": 1748301326826, "result": { "merge": false } }, { "id": "1748301327442", "message": "snapshot", "start": 1748301327442, "status": "success", "end": 1748301336945, "result": "2bfe93f6-4c49-2756-2e27-f620946392ab" }, { "data": { "id": "2b919467-704c-4e35-bac9-2d6a43118bda", "isFull": false, "type": "remote" }, "id": "1748301336945:0", "message": "export", "start": 1748301336945, "status": "success", "tasks": [ { "id": "1748301360976", "message": "clean-vm", "start": 1748301360976, "status": "success", "end": 1748301362952, "result": { "merge": false } } ], "end": 1748301362953 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:ccff14c1-2a28-38fb-57e2-4040e4081ea3" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:72d435e0-19f9-7b84-2f57-950c66d0c5a7" }, "message": "Snapshot data has been deleted" } ], "end": 1748301362953, "result": { "message": "invalid HTTP header in response body", "name": "Error", "stack": "Error: invalid HTTP header in response body\n at checkVdiExport (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:37:19)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:244:5)\n at async #getExportStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:123:20)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:135:23)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamNbd.mjs:31:5)\n at async #openNbdStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/Xapi.mjs:69:7)\n at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)\n at async file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/backups/_incrementalVm.mjs:65:5\n at async Promise.all (index 0)" } } ], "end": 1748301362954 }Schedule for backup job "Docker"

{ id: 'b9142eba-4954-414a-bbc0-180b071a6490', mode: 'delta', name: 'Docker', remotes: { id: '2b919467-704c-4e35-bac9-2d6a43118bda' }, settings: { '': { cbtDestroySnapshotData: true, fullInterval: 28, longTermRetention: { daily: { retention: 32, settings: {} }, monthly: { retention: 13, settings: {} }, weekly: { retention: 5, settings: {} } }, nbdConcurrency: 3, preferNbd: true, timezone: 'Europe/Stockholm' }, '148eb0e1-1a22-4966-9143-ce53849875de': { exportRetention: 1 } }, srs: { id: { __or: [] } }, type: 'backup', vms: { id: '2af0192f-3cb3-daa2-33e6-ec8f18b0cfb1' } },Backup log for job "HomeAssistant"

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1748301362981", "jobId": "38f0068f-c124-4876-85d3-83f1003db60c", "jobName": "HomeAssistant", "message": "backup", "scheduleId": "dcb1c759-76b8-441b-9dc0-595914e60608", "start": 1748301362981, "status": "failure", "infos": [ { "data": { "vms": [ "ed4758f3-de34-7a7e-a46b-dc007d52f5c3" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "ed4758f3-de34-7a7e-a46b-dc007d52f5c3", "name_label": "HomeAssistant" }, "id": "1748301365217", "message": "backup VM", "start": 1748301365217, "status": "failure", "tasks": [ { "id": "1748301365221", "message": "clean-vm", "start": 1748301365221, "status": "success", "end": 1748301367237, "result": { "merge": false } }, { "id": "1748301411356", "message": "snapshot", "start": 1748301411356, "status": "success", "end": 1748301414333, "result": "a3284471-7138-9fed-b6c2-c1c4314a6569" }, { "data": { "id": "2b919467-704c-4e35-bac9-2d6a43118bda", "isFull": false, "type": "remote" }, "id": "1748301414334", "message": "export", "start": 1748301414334, "status": "success", "tasks": [ { "id": "1748301433923", "message": "clean-vm", "start": 1748301433923, "status": "success", "end": 1748301436079, "result": { "merge": false } } ], "end": 1748301436080 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:ee706ccd-faeb-3c3e-3f17-153b9767a44e" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:6be5cd98-da3e-9c9b-e193-aa80b78608d5" }, "message": "Snapshot data has been deleted" } ], "end": 1748301436080, "result": { "message": "invalid HTTP header in response body", "name": "Error", "stack": "Error: invalid HTTP header in response body\n at checkVdiExport (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:37:19)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:244:5)\n at async #getExportStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:123:20)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:135:23)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamNbd.mjs:31:5)\n at async #openNbdStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/Xapi.mjs:69:7)\n at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)\n at async file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/backups/_incrementalVm.mjs:65:5\n at async Promise.all (index 0)" } } ], "end": 1748301436081 }Schedule for backup job "HomeAssistant"

{ id: '38f0068f-c124-4876-85d3-83f1003db60c', mode: 'delta', name: 'HomeAssistant', remotes: { id: '2b919467-704c-4e35-bac9-2d6a43118bda' }, settings: { '': { cbtDestroySnapshotData: true, checkpointSnapshot: false, fullInterval: 28, longTermRetention: { daily: { retention: 8, settings: {} }, monthly: { retention: 13, settings: {} }, weekly: { retention: 5, settings: {} } }, nbdConcurrency: 3, offlineSnapshot: true, preferNbd: true, timezone: 'Europe/Stockholm' }, 'dcb1c759-76b8-441b-9dc0-595914e60608': { exportRetention: 1 } }, srs: { id: { __or: [] } }, type: 'backup', vms: { id: 'ed4758f3-de34-7a7e-a46b-dc007d52f5c3' } },Backup log for job "TrueNAS"

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1748301436105", "jobId": "d40f8444-0364-4945-acd9-01f85ef4a53d", "jobName": "TrueNAS", "message": "backup", "scheduleId": "ffd808a2-563f-4f3c-84b3-445e724ce78c", "start": 1748301436105, "status": "failure", "infos": [ { "data": { "vms": [ "086b19ec-2162-6b28-c9d2-0e2214ab85ad" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "086b19ec-2162-6b28-c9d2-0e2214ab85ad", "name_label": "TrueNAS" }, "id": "1748301438810", "message": "backup VM", "start": 1748301438810, "status": "failure", "tasks": [ { "id": "1748301438815", "message": "clean-vm", "start": 1748301438815, "status": "success", "end": 1748301441801, "result": { "merge": false } }, { "id": "1748301442293", "message": "snapshot", "start": 1748301442293, "status": "success", "end": 1748301447502, "result": "e6988956-774f-7421-d14d-5585bc9f06a8" }, { "data": { "id": "2b919467-704c-4e35-bac9-2d6a43118bda", "isFull": false, "type": "remote" }, "id": "1748301447503", "message": "export", "start": 1748301447503, "status": "success", "tasks": [ { "id": "1748301452311", "message": "clean-vm", "start": 1748301452311, "status": "success", "end": 1748301454142, "result": { "merge": false } } ], "end": 1748301454143 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:a6285710-3e8d-a7cc-1f87-284146c0e5be" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:02477e9a-146e-34f8-0729-a04deb9ce7a2" }, "message": "Snapshot data has been deleted" } ], "end": 1748301454143, "result": { "message": "invalid HTTP header in response body", "name": "Error", "stack": "Error: invalid HTTP header in response body\n at checkVdiExport (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:37:19)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:244:5)\n at async #getExportStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:123:20)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:135:23)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamNbd.mjs:31:5)\n at async #openNbdStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/Xapi.mjs:69:7)\n at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)\n at async file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/backups/_incrementalVm.mjs:65:5\n at async Promise.all (index 1)" } } ], "end": 1748301454143 }Schedule for backup job "TrueNAS"

{ id: 'd40f8444-0364-4945-acd9-01f85ef4a53d', mode: 'delta', name: 'TrueNAS', remotes: { id: '2b919467-704c-4e35-bac9-2d6a43118bda' }, settings: { '': { cbtDestroySnapshotData: true, fullInterval: 28, longTermRetention: { daily: { retention: 8, settings: {} }, monthly: { retention: 13, settings: {} }, weekly: { retention: 5, settings: {} } }, nbdConcurrency: 3, preferNbd: true, timezone: 'Europe/Stockholm' }, 'ffd808a2-563f-4f3c-84b3-445e724ce78c': { exportRetention: 1 } }, srs: { id: { __or: [] } }, type: 'backup', vms: { id: '086b19ec-2162-6b28-c9d2-0e2214ab85ad' } },Backup log for job "Win10 22H2 (XCP-VM-BU1)"

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1748301454157", "jobId": "006447e3-d632-4088-871d-d31925146165", "jobName": "Win10 22H2 (XCP-VM-BU1)", "message": "backup", "scheduleId": "49db3524-1fea-4964-9eb9-1ee78c7f1533", "start": 1748301454157, "status": "failure", "infos": [ { "data": { "vms": [ "8a2b28e8-17fe-1a22-6b69-dc9e70b54440" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "8a2b28e8-17fe-1a22-6b69-dc9e70b54440", "name_label": "Win10 22H2 (XCP-VM-BU1)" }, "id": "1748301457105", "message": "backup VM", "start": 1748301457105, "status": "failure", "tasks": [ { "id": "1748301457116", "message": "clean-vm", "start": 1748301457116, "status": "success", "end": 1748301459104, "result": { "merge": false } }, { "id": "1748301459708", "message": "snapshot", "start": 1748301459708, "status": "success", "end": 1748301465288, "result": "6eeb6ce1-3867-1bf2-ee02-61629c335998" }, { "data": { "id": "2b919467-704c-4e35-bac9-2d6a43118bda", "isFull": false, "type": "remote" }, "id": "1748301465289", "message": "export", "start": 1748301465289, "status": "success", "tasks": [ { "id": "1748301469585", "message": "clean-vm", "start": 1748301469585, "status": "success", "end": 1748301471474, "result": { "merge": false } } ], "end": 1748301471474 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:d93298c1-6b15-75f1-abfe-23d7f09bea18" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:4962f8a3-17bf-2724-1b11-52a0b718bc93" }, "message": "Snapshot data has been deleted" } ], "end": 1748301471474, "result": { "message": "invalid HTTP header in response body", "name": "Error", "stack": "Error: invalid HTTP header in response body\n at checkVdiExport (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:37:19)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:244:5)\n at async #getExportStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:123:20)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:135:23)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamNbd.mjs:31:5)\n at async #openNbdStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/Xapi.mjs:69:7)\n at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)\n at async file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/backups/_incrementalVm.mjs:65:5\n at async Promise.all (index 1)" } } ], "end": 1748301471474 }Schedule for backup job "Win10 22H2 (XCP-VM-BU1)"

{ id: '006447e3-d632-4088-871d-d31925146165', mode: 'delta', name: 'Win10 22H2 (XCP-VM-BU1)', remotes: { id: '2b919467-704c-4e35-bac9-2d6a43118bda' }, settings: { '': { cbtDestroySnapshotData: true, fullInterval: 28, longTermRetention: { daily: { retention: 8, settings: {} }, monthly: { retention: 13, settings: {} }, weekly: { retention: 5, settings: {} } }, nbdConcurrency: 3, preferNbd: true, timezone: 'Europe/Stockholm' }, '49db3524-1fea-4964-9eb9-1ee78c7f1533': { exportRetention: 1 } }, srs: { id: { __or: [] } }, type: 'backup', vms: { id: '8a2b28e8-17fe-1a22-6b69-dc9e70b54440' } },Backup log for job "pfSense"

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1748301471487", "jobId": "ed71705b-61aa-4081-b765-bfa5e2bb8dd6", "jobName": "pfSense", "message": "backup", "scheduleId": "2f5fc714-9c4d-4b46-8da6-db771926de68", "start": 1748301471487, "status": "failure", "infos": [ { "data": { "vms": [ "34bb1a0f-d662-669d-6e07-39f3ef102129" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "34bb1a0f-d662-669d-6e07-39f3ef102129", "name_label": "pfSense" }, "id": "1748301474076", "message": "backup VM", "start": 1748301474076, "status": "failure", "tasks": [ { "id": "1748301474081", "message": "clean-vm", "start": 1748301474081, "status": "success", "end": 1748301475569, "result": { "merge": false } }, { "id": "1748301476075", "message": "snapshot", "start": 1748301476075, "status": "success", "end": 1748301479451, "result": "6fb562a0-e544-cc43-92a8-b250f0d6e55f" }, { "data": { "id": "2b919467-704c-4e35-bac9-2d6a43118bda", "isFull": false, "type": "remote" }, "id": "1748301479451:0", "message": "export", "start": 1748301479451, "status": "success", "tasks": [ { "id": "1748301484740", "message": "clean-vm", "start": 1748301484740, "status": "success", "end": 1748301486146, "result": { "merge": false } } ], "end": 1748301486147 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:9bbe8ff4-5366-e76f-c422-b11c02f2dbeb" }, "message": "Snapshot data has been deleted" } ], "end": 1748301486147, "result": { "message": "invalid HTTP header in response body", "name": "Error", "stack": "Error: invalid HTTP header in response body\n at checkVdiExport (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:37:19)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:244:5)\n at async #getExportStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:123:20)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:135:23)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamNbd.mjs:31:5)\n at async #openNbdStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/Xapi.mjs:69:7)\n at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)\n at async file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/backups/_incrementalVm.mjs:65:5\n at async Promise.all (index 0)" } } ], "end": 1748301486148 }Schedule for backup job "pfSense"

{ id: 'ed71705b-61aa-4081-b765-bfa5e2bb8dd6', mode: 'delta', name: 'pfSense', remotes: { id: '2b919467-704c-4e35-bac9-2d6a43118bda' }, settings: { '': { cbtDestroySnapshotData: true, fullInterval: 28, longTermRetention: { daily: { retention: 8, settings: {} }, monthly: { retention: 13, settings: {} }, weekly: { retention: 5, settings: {} } }, nbdConcurrency: 3, preferNbd: true, timezone: 'Europe/Stockholm' }, '2f5fc714-9c4d-4b46-8da6-db771926de68': { exportRetention: 1 } }, srs: { id: { __or: [] } }, type: 'backup', vms: { id: '34bb1a0f-d662-669d-6e07-39f3ef102129' } },Backup log for job "unifi"

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1748301486170", "jobId": "167accc6-65e1-4782-a7e1-b7af72070286", "jobName": "unifi", "message": "backup", "scheduleId": "25636582-2efd-45a9-b90a-f0e2e96df179", "start": 1748301486170, "status": "failure", "infos": [ { "data": { "vms": [ "95e2e5a4-4f21-bd2c-0af5-0a1216d05d0e" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "95e2e5a4-4f21-bd2c-0af5-0a1216d05d0e", "name_label": "unifi" }, "id": "1748301488467", "message": "backup VM", "start": 1748301488467, "status": "failure", "tasks": [ { "id": "1748301488472", "message": "clean-vm", "start": 1748301488472, "status": "success", "end": 1748301490266, "result": { "merge": false } }, { "id": "1748301490816", "message": "snapshot", "start": 1748301490816, "status": "success", "end": 1748301494252, "result": "513ce90c-c893-45ce-6693-a74e39be90b8" }, { "data": { "id": "2b919467-704c-4e35-bac9-2d6a43118bda", "isFull": false, "type": "remote" }, "id": "1748301494253", "message": "export", "start": 1748301494253, "status": "success", "tasks": [ { "id": "1748301497980", "message": "clean-vm", "start": 1748301497980, "status": "success", "end": 1748301499587, "result": { "merge": false } } ], "end": 1748301499587 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:062d4f15-9f44-cfb9-840c-4af51ef8a4aa" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:67d3668b-bd8d-db05-76a2-f9d0da5db47a" }, "message": "Snapshot data has been deleted" } ], "end": 1748301499587, "result": { "message": "invalid HTTP header in response body", "name": "Error", "stack": "Error: invalid HTTP header in response body\n at checkVdiExport (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:37:19)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:244:5)\n at async #getExportStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:123:20)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:135:23)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamNbd.mjs:31:5)\n at async #openNbdStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/Xapi.mjs:69:7)\n at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)\n at async file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/backups/_incrementalVm.mjs:65:5\n at async Promise.all (index 1)" } } ], "end": 1748301499587 }Schedule for backup job "unifi"

{ id: '167accc6-65e1-4782-a7e1-b7af72070286', mode: 'delta', name: 'unifi', remotes: { id: '2b919467-704c-4e35-bac9-2d6a43118bda' }, settings: { '': { cbtDestroySnapshotData: true, fullInterval: 28, longTermRetention: { daily: { retention: 8, settings: {} }, monthly: { retention: 13, settings: {} }, weekly: { retention: 5, settings: {} } }, nbdConcurrency: 3, preferNbd: true, timezone: 'Europe/Stockholm' }, '25636582-2efd-45a9-b90a-f0e2e96df179': { exportRetention: 1 } }, srs: { id: { __or: [] } }, type: 'backup', vms: { id: '95e2e5a4-4f21-bd2c-0af5-0a1216d05d0e' } },The job ("HomeAssistant") I ran manually after the failure, without any changes to it, from my shellscript succeeded. This is the log:

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1748332955709", "jobId": "38f0068f-c124-4876-85d3-83f1003db60c", "jobName": "HomeAssistant", "message": "backup", "scheduleId": "dcb1c759-76b8-441b-9dc0-595914e60608", "start": 1748332955709, "status": "success", "infos": [ { "data": { "vms": [ "ed4758f3-de34-7a7e-a46b-dc007d52f5c3" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "ed4758f3-de34-7a7e-a46b-dc007d52f5c3", "name_label": "HomeAssistant" }, "id": "1748332999004", "message": "backup VM", "start": 1748332999004, "status": "success", "tasks": [ { "id": "1748332999009", "message": "clean-vm", "start": 1748332999009, "status": "success", "end": 1748333001471, "result": { "merge": false } }, { "id": "1748333024461", "message": "snapshot", "start": 1748333024461, "status": "success", "end": 1748333027231, "result": "7c1c5df6-5a0f-28c6-eaa3-8cf96b871bf8" }, { "data": { "id": "2b919467-704c-4e35-bac9-2d6a43118bda", "isFull": true, "type": "remote" }, "id": "1748333027231:0", "message": "export", "start": 1748333027231, "status": "success", "tasks": [ { "id": "1748333029816", "message": "transfer", "start": 1748333029816, "status": "success", "end": 1748333658179, "result": { "size": 38681968640 } }, { "id": "1748333661183", "message": "clean-vm", "start": 1748333661183, "status": "success", "end": 1748333669221, "result": { "merge": false } } ], "end": 1748333669222 } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:e40df229-f55d-e658-5242-cd0746079661" }, "message": "Snapshot data has been deleted" }, { "data": { "vdiRef": "OpaqueRef:f03dc466-24a3-5269-3e9b-5634a99219f9" }, "message": "Snapshot data has been deleted" } ], "end": 1748333669222 } ], "end": 1748333669223 } -

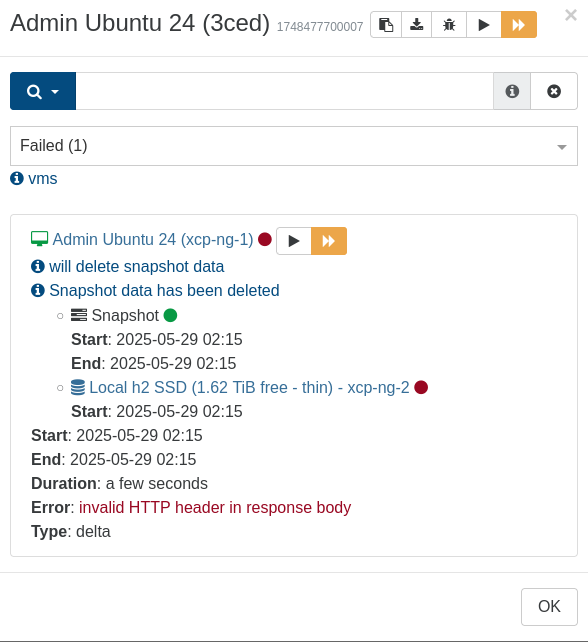

One of the latest failures of this kind ruled out the remote as a possible source for the failure:

The virtual machine is being replicated every week to another host, so the storage is local storage on that host.

As almost shown in the log, the XO version used was 'c5a268277' (before the update I did today).

Backup log (ask for other details if interested in investigating this further):

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1748477700007", "jobId": "0bb53ced-4d52-40a9-8b14-7cd1fa2b30fe", "jobName": "Admin Ubuntu 24", "message": "backup", "scheduleId": "69a05a67-c43b-4d23-b1e8-ada77c70ccc4", "start": 1748477700007, "status": "failure", "infos": [ { "data": { "vms": [ "1728e876-5644-2169-6c62-c764bd8b6bdf" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "1728e876-5644-2169-6c62-c764bd8b6bdf", "name_label": "Admin Ubuntu 24" }, "id": "1748477701623", "message": "backup VM", "start": 1748477701623, "status": "failure", "tasks": [ { "id": "1748477702092", "message": "snapshot", "start": 1748477702092, "status": "success", "end": 1748477703786, "result": "729ae454-1570-2838-5449-0271113ee53e" }, { "data": { "id": "46f9b5ee-c937-ff71-29b1-520ba0546675", "isFull": false, "name_label": "Local h2 SSD", "type": "SR" }, "id": "1748477703786:0", "message": "export", "start": 1748477703786, "status": "interrupted" } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:299ed677-2b2e-4e28-4300-3f7d053be0ac" }, "message": "Snapshot data has been deleted" } ], "end": 1748477707592, "result": { "message": "invalid HTTP header in response body", "name": "Error", "stack": "Error: invalid HTTP header in response body\n at checkVdiExport (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:37:19)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/vdi.mjs:244:5)\n at async #getExportStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:123:20)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamSource.mjs:135:23)\n at async XapiVhdStreamNbdSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/XapiVhdStreamNbd.mjs:31:5)\n at async #openNbdStream (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/xapi/disks/Xapi.mjs:69:7)\n at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)\n at async file:///opt/xo/xo-builds/xen-orchestra-202505261454/@xen-orchestra/backups/_incrementalVm.mjs:65:5\n at async Promise.all (index 1)" } } ], "end": 1748477707593 } -

I get the same error now. This started with the new backup code, has been working the whole time before.

Latest XO, remote nfs storage (reachable over internet via IP).

Backup jobs are delta.

Same jobs to local/LAN nfs storage works without issues. It's only the remote nfs gives problems (sometimes works).

-

Ping @lsouai-vates and @florent

-

I can also add in that my specific backup ("Admin Ubuntu 24") is not part of a sequence, it's a separate "Continuous replication" job (failed again today).

I will try this now: disable the schedule in XO, and schedule it using cron (and my script calling xo-cli) on the machine running XO.

As I now have tested by initiating the backup job using crontab, I have found out that it fails every second time. The difference in the backup logs between success and failure is the "Type" at the end of the in XO visible result display: "Delta" fails and "Full" succeeds.

I will not clutter up the thread with more details unless asked for. -

@manilx Does your backup job succeed if you run it manually after a failure (as I have figured out is happening for me) ?

I did some more tests and found out that the setting "Purge snapshot data when using CBT" under advanced backup settings might be the cause of the problem. The only thing is that with this setting disabled, every machine being backed up will keep a space-wasting snapshot in the same storage repository as the snapshotted disk. -

@peo I don't have this setting set. The errors appear inconsistently.

-

@manilx as you describe it, it might be a problem with the remote NFS storage, but still, the it would be nice if that error message could be a bit clearer.

-

@peo Nope. Remote has no issue whatsoever.

The issue is with the backup code because this is what changed. Worked before for many months without issues.

And it's still working at the office on stable XOA. -

dear all i am facing the same issue

Error: invalid HTTP header in response body

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1749107336350", "jobId": "89511625-67f3-46be-98fe-8d7a7584386f", "jobName": "All-2-50G-Delta", "message": "backup", "scheduleId": "405be84c-8b49-408a-871e-b21c211ee326", "start": 1749107336350, "status": "failure", "infos": [ { "data": { "vms": [ "20e7d42f-bf69-186d-ec65-82e08230e557" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "20e7d42f-bf69-186d-ec65-82e08230e557", "name_label": "PfSense_Ip_92_61_60_23_EXT_172_16_16_99_INT_2022_03_03_07_24_57" }, "id": "1749107339106", "message": "backup VM", "start": 1749107339106, "status": "failure", "tasks": [ { "id": "1749107339113", "message": "clean-vm", "start": 1749107339113, "status": "success", "end": 1749107339118, "result": { "merge": false } }, { "id": "1749107339665", "message": "snapshot", "start": 1749107339665, "status": "success", "end": 1749107341927, "result": "02828209-8cc6-8694-8fdd-af5aa04d5b90" }, { "data": { "id": "455eee8b-49ca-4552-9431-ebb417fbd8f8", "isFull": true, "type": "remote" }, "id": "1749107341927:0", "message": "export", "start": 1749107341927, "status": "success", "tasks": [ { "id": "1749107347491", "message": "clean-vm", "start": 1749107347491, "status": "success", "end": 1749107347494, "result": { "merge": false } } ], "end": 1749107347496 } ], "end": 1749107347496, "result": { "message": "invalid HTTP header in response body", "name": "Error", "stack": "Error: invalid HTTP header in response body\n at checkVdiExport (file:///opt/xo/xo-builds/xen-orchestra-202503211145/@xen-orchestra/xapi/vdi.mjs:40:19)\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)\n at async Xapi.exportContent (file:///opt/xo/xo-builds/xen-orchestra-202503211145/@xen-orchestra/xapi/vdi.mjs:326:7)\n at async file:///opt/xo/xo-builds/xen-orchestra-202503211145/@xen-orchestra/backups/_incrementalVm.mjs:56:34\n at async Promise.all (index 1)\n at async cancelableMap (file:///opt/xo/xo-builds/xen-orchestra-202503211145/@xen-orchestra/backups/_cancelableMap.mjs:11:12)\n at async exportIncrementalVm (file:///opt/xo/xo-builds/xen-orchestra-202503211145/@xen-orchestra/backups/_incrementalVm.mjs:25:3)\n at async IncrementalXapiVmBackupRunner._copy (file:///opt/xo/xo-builds/xen-orchestra-202503211145/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:44:25)\n at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202503211145/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:396:9)\n at async file:///opt/xo/xo-builds/xen-orchestra-202503211145/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } } ], "end": 1749107347497 } -

@olivierlambert Have you heard of any progress in this case ? I found out that I got rid of the error when I disabled "Purge snapshot data when using CBT" on all my backups, so the backups are no longer failing, except from the Docker VM I have mentioned in another case "Success or failure" (or similar), which indicates failure, but restores successfully (which is weird).

-

I'm not aware of similar issues for our XOA users

@florent does it ring any bell?

-

today same issue again

Snapshot

Start: 2025-06-12 07:16

End: 2025-06-12 07:16

50G-Backup-Delta

Start: 2025-06-12 07:16

End: 2025-06-12 07:16

Duration: a few seconds

Start: 2025-06-12 07:16

End: 2025-06-12 07:16

Duration: a few seconds

Error: invalid HTTP header in response body

Type: fullvm is a PFSense

with template Other install media

Management agent 6.2.0-76888 detected

Hardware virtualization with paravirtualization drivers enabled (PVHVM) -

@markxc if you are on master you should have something on the xo logs , starting with

invalid HTTP header in response bodyCan you check and tell us what is the error message attached ?

-

un 12 07:16:23 xenorchestra xo-server[1679463]: 2025-06-12T05:16:23.729Z xo:xapi:vdi WARN invalid HTTP header in response body { Jun 12 07:16:23 xenorchestra xo-server[1679463]: body: 'HTTP/1.1 500 Internal Error\r\n' + Jun 12 07:16:23 xenorchestra xo-server[1679463]: 'content-length: 266\r\n' + Jun 12 07:16:23 xenorchestra xo-server[1679463]: 'content-type:text/html\r\n' + Jun 12 07:16:23 xenorchestra xo-server[1679463]: 'connection:close\r\n' + Jun 12 07:16:23 xenorchestra xo-server[1679463]: 'cache-control:no-cache, no-store\r\n' + Jun 12 07:16:23 xenorchestra xo-server[1679463]: '\r\n' + Jun 12 07:16:23 xenorchestra xo-server[1679463]: '<html><body><h1>HTTP 500 internal server error</h1>An unexpected error occurred; please wait a whi>Jun 12 07:16:23 xenorchestra xo-server[1679463]: } Jun 12 07:16:28 xenorchestra xo-server[1679463]: 2025-06-12T05:16:28.522Z xo:backups:MixinBackupWriter WARN cleanVm: incorrect backup size in metadata { Jun 12 07:16:28 xenorchestra xo-server[1679463]: path: '/xo-vm-backups/04d4ce05-81fd-e2b6-ef0d-4e9b91f3ffb1/20250610T051506Z.json', Jun 12 07:16:28 xenorchestra xo-server[1679463]: actual: 1116023296, Jun 12 07:16:28 xenorchestra xo-server[1679463]: expected: 1116023808 Jun 12 07:16:28 xenorchestra xo-server[1679463]: } Jun 12 07:16:29 xenorchestra xo-server[1675344]: 2025-06-12T05:16:29.954Z xo:backups:mergeWorker INFO merge in progress { Jun 12 07:16:29 xenorchestra xo-server[1675344]: done: 59198, Jun 12 07:16:29 xenorchestra xo-server[1675344]: parent: '/xo-vm-backups/eda7fcd9-484f-7f19-b5ae-0cfd94ca2207/vdis/89511625-67f3-46be-98fe-8d7a7584386>Jun 12 07:16:29 xenorchestra xo-server[1675344]: progress: 83, Jun 12 07:16:29 xenorchestra xo-server[1675344]: total: 71442 Jun 12 07:16:29 xenorchestra xo-server[1675344]: } Jun 12 07:16:39 xenorchestra xo-server[1675344]: 2025-06-12T05:16:39.954Z xo:backups:mergeWorker INFO merge in progress { Jun 12 07:16:39 xenorchestra xo-server[1675344]: done: 59467, Jun 12 07:16:39 xenorchestra xo-server[1675344]: parent: '/xo-vm-backups/eda7fcd9-484f-7f19-b5ae-0cfd94ca2207/vdis/89511625-67f3-46be-98fe-8d7a7584386 un 12 07:16:39 xenorchestra xo-server[1675344]: 2025-06-12T05:16:39.954Z xo:backups:mergeWorker INFO merge in progress { Jun 12 07:16:39 xenorchestra xo-server[1675344]: done: 59467, Jun 12 07:16:39 xenorchestra xo-server[1675344]: parent: '/xo-vm-backups/eda7fcd9-484f-7f19-b5ae-0cfd94ca2207/vdis/89511625-67f3-46be-98fe-8d7a7584386> Jun 12 07:16:39 xenorchestra xo-server[1675344]: progress: 83, Jun 12 07:16:39 xenorchestra xo-server[1675344]: total: 71442 Jun 12 07:16:39 xenorchestra xo-server[1675344]: } Jun 12 07:16:46 xenorchestra xo-server[1679463]: 2025-06-12T05:16:46.783Z @xen-orchestra/xapi/disks/Xapi WARN openNbdCBT Error: CBT is disabled Jun 12 07:16:46 xenorchestra xo-server[1679463]: at XapiVhdCbtSource.init (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/xapi/d> Jun 12 07:16:46 xenorchestra xo-server[1679463]: at process.processTicksAndRejections (node:internal/process/task_queues:105:5) Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async #openNbdCbt (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/xapi/disks>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/di>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/backups/_incrementalVm.> Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async Promise.all (index 1) Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async cancelableMap (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/backups/>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async exportIncrementalVm (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/ba>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async IncrementalXapiVmBackupRunner._copy (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xe> Jun 12 07:16:46 xenorchestra xo-server[1679463]: code: 'CBT_DISABLED' Jun 12 07:16:46 xenorchestra xo-server[1679463]: } Jun 12 07:16:46 xenorchestra xo-server[1679463]: 2025-06-12T05:16:46.863Z @xen-orchestra/xapi/disks/Xapi WARN openNbdCBT Error: CBT is disabled Jun 12 07:16:46 xenorchestra xo-server[1679463]: at XapiVhdCbtSource.init (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/xapi/d>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at process.processTicksAndRejections (node:internal/process/task_queues:105:5) Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async #openNbdCbt (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/xapi/disks>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/di>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/backups/_incrementalVm.> Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async Promise.all (index 2) Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async cancelableMap (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/backups/>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async exportIncrementalVm (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xen-orchestra/ba>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async IncrementalXapiVmBackupRunner._copy (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@>Jun 12 07:16:46 xenorchestra xo-server[1679463]: at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202506050918/@xe> Jun 12 07:16:46 xenorchestra xo-server[1679463]: code: 'CBT_DISABLED' Jun 12 07:16:46 xenorchestra xo-server[1679463]: } -

@markxc thank you that is the part I wanted

I think someone on the xapi side should look into it

-

Hello everyone,

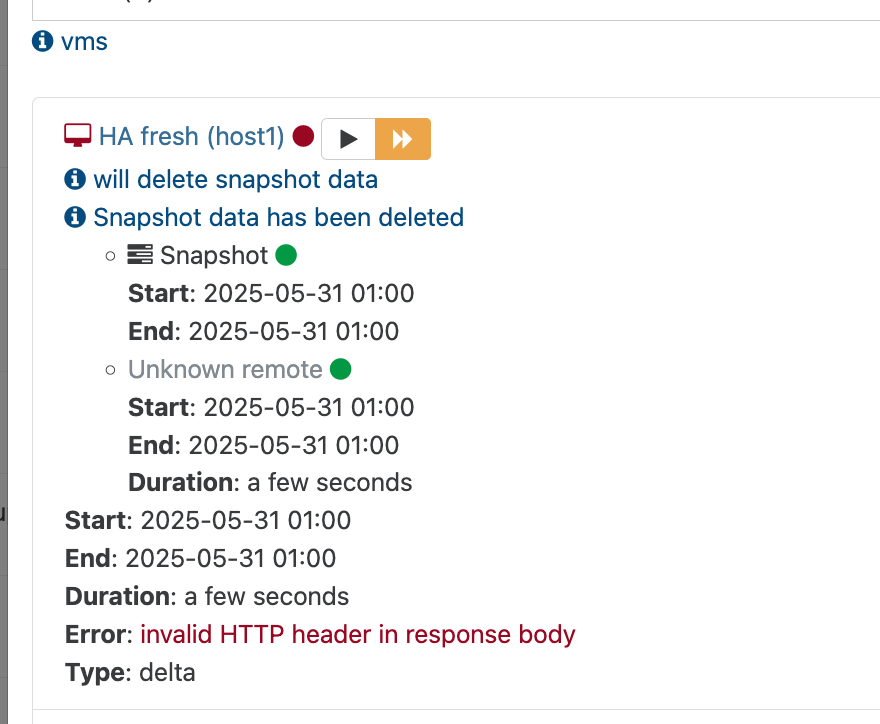

this type of error now popped up at my delta backup as well.

Some specs:

OS: Debian 12 patched

Xen Orchestra: as of 20250616 0005

XEN-NG: 8.3 latest patches applied

Job Type: Delta Backup with 8 VMs backing up to remote Synology NASThe job run for a quiet a few weeks without any problems. But I have to admit, that I cannot say, what exactly induced the problem, since I updated xen orchestra and modified the backup job (I removed 2 VM, moved them to another machine, added additional disks and readded them the same backup job). Manually triggering the backup via "Restart VM backup" upon the failures dialog successfully runs the backup.

I get the following error in the log:

... Jun 18 02:41:28 xoa xo-server[258369]: 2025-06-18T00:41:28.527Z xo:backups:MixinBackupWriter INFO merge in progress { Jun 18 02:41:28 xoa xo-server[258369]: done: 6895, Jun 18 02:41:28 xoa xo-server[258369]: parent: '/xo-vm-backups/924b4cf4-c8b3-18ab-5f78-d1daa77bc3fc/vdis/8c0477b9-b6e8-45ca-bcac-b78549e05b6f/ab2c3be9-bec5-4361-9ad2-81acfc14c16e/20250611T005140Z.vhd', Jun 18 02:41:28 xoa xo-server[258369]: progress: 97, Jun 18 02:41:28 xoa xo-server[258369]: total: 7132 Jun 18 02:41:28 xoa xo-server[258369]: } Jun 18 02:41:38 xoa xo-server[258369]: 2025-06-18T00:41:38.528Z xo:backups:MixinBackupWriter INFO merge in progress { Jun 18 02:41:38 xoa xo-server[258369]: done: 7073, Jun 18 02:41:38 xoa xo-server[258369]: parent: '/xo-vm-backups/924b4cf4-c8b3-18ab-5f78-d1daa77bc3fc/vdis/8c0477b9-b6e8-45ca-bcac-b78549e05b6f/ab2c3be9-bec5-4361-9ad2-81acfc14c16e/20250611T005140Z.vhd', Jun 18 02:41:38 xoa xo-server[258369]: progress: 99, Jun 18 02:41:38 xoa xo-server[258369]: total: 7132 Jun 18 02:41:38 xoa xo-server[258369]: } Jun 18 02:41:46 xoa xo-server[258369]: 2025-06-18T00:41:46.228Z xo:backups:MixinBackupWriter WARN cleanVm: incorrect backup size in metadata { Jun 18 02:41:46 xoa xo-server[258369]: path: '/xo-vm-backups/924b4cf4-c8b3-18ab-5f78-d1daa77bc3fc/20250617T235823Z.json', Jun 18 02:41:46 xoa xo-server[258369]: actual: 108580044800, Jun 18 02:41:46 xoa xo-server[258369]: expected: 108606965248 Jun 18 02:41:46 xoa xo-server[258369]: } Jun 18 02:46:20 xoa xo-server[258369]: 2025-06-18T00:46:20.182Z xo:backups:MixinBackupWriter WARN cleanVm: incorrect backup size in metadata { Jun 18 02:46:20 xoa xo-server[258369]: path: '/xo-vm-backups/9960fd34-ad5a-8854-6a90-3b1e88c1398f/20250618T004205Z.json', Jun 18 02:46:20 xoa xo-server[258369]: actual: 12184453120, Jun 18 02:46:20 xoa xo-server[258369]: expected: 12190142976 Jun 18 02:46:20 xoa xo-server[258369]: } Jun 18 02:46:41 xoa xo-server[258369]: 2025-06-18T00:46:41.281Z @xen-orchestra/xapi/disks/Xapi WARN openNbdCBT Error: can't connect to any nbd client Jun 18 02:46:41 xoa xo-server[258369]: at connectNbdClientIfPossible (file:///opt/xo/xo-builds/xen-orchestra-202506160005/@xen-orchestra/xapi/disks/utils.mjs:23:19) Jun 18 02:46:41 xoa xo-server[258369]: at process.processTicksAndRejections (node:internal/process/task_queues:105:5) Jun 18 02:46:41 xoa xo-server[258369]: at async XapiVhdCbtSource.init (file:///opt/xo/xo-builds/xen-orchestra-202506160005/@xen-orchestra/xapi/disks/XapiVhdCbt.mjs:75:20) Jun 18 02:46:41 xoa xo-server[258369]: at async #openNbdCbt (file:///opt/xo/xo-builds/xen-orchestra-202506160005/@xen-orchestra/xapi/disks/Xapi.mjs:129:7) Jun 18 02:46:41 xoa xo-server[258369]: at async XapiDiskSource.init (file:///opt/xo/xo-builds/xen-orchestra-202506160005/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41) Jun 18 02:46:41 xoa xo-server[258369]: at async file:///opt/xo/xo-builds/xen-orchestra-202506160005/@xen-orchestra/backups/_incrementalVm.mjs:65:5 Jun 18 02:46:41 xoa xo-server[258369]: at async Promise.all (index 1) Jun 18 02:46:41 xoa xo-server[258369]: at async cancelableMap (file:///opt/xo/xo-builds/xen-orchestra-202506160005/@xen-orchestra/backups/_cancelableMap.mjs:11:12) Jun 18 02:46:41 xoa xo-server[258369]: at async exportIncrementalVm (file:///opt/xo/xo-builds/xen-orchestra-202506160005/@xen-orchestra/backups/_incrementalVm.mjs:28:3) Jun 18 02:46:41 xoa xo-server[258369]: at async IncrementalXapiVmBackupRunner._copy (file:///opt/xo/xo-builds/xen-orchestra-202506160005/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:38:25) { Jun 18 02:46:41 xoa xo-server[258369]: code: 'NO_NBD_AVAILABLE' Jun 18 02:46:41 xoa xo-server[258369]: } Jun 18 02:46:43 xoa xo-server[258369]: 2025-06-18T00:46:43.098Z xo:xapi:vdi WARN invalid HTTP header in response body { Jun 18 02:46:43 xoa xo-server[258369]: body: 'HTTP/1.1 500 Internal Error\r\n' + Jun 18 02:46:43 xoa xo-server[258369]: 'content-length: 318\r\n' + Jun 18 02:46:43 xoa xo-server[258369]: 'content-type: text/html\r\n' + Jun 18 02:46:43 xoa xo-server[258369]: 'connection: close\r\n' + Jun 18 02:46:43 xoa xo-server[258369]: 'cache-control: no-cache, no-store\r\n' + Jun 18 02:46:43 xoa xo-server[258369]: '\r\n' + Jun 18 02:46:43 xoa xo-server[258369]: '<html><body><h1>HTTP 500 internal server error</h1>An unexpected error occurred; please wait a while and try again. If the problem persists, please contact your support representative.<h1> Additional information </h1>VDI_INCOMPATIBLE_TYPE: [ OpaqueRef:1ed06eb9-ed6f-d8f0-25a4-647a4ff22ca6; CBT metadata ]</body></html>' Jun 18 02:46:43 xoa xo-server[258369]: } ...Anyone has any ideas?

-

@FritzGerald Do you have "Purge snapshot data when using CBT" enabled?

I had and when I disabled this, it didn't happen since.

-

Hi @manilx.

thank you for the quick reply. I indeed do have it enabled.

I am just surprised since the exact same job worked previously and it does not make sense to me to keep it. Nevertheless I have to admit, that my knowledge is limited to these types of snapshots and its impact upon storage usage. Just FYI, there is this discussion I found: https://xcp-ng.org/forum/topic/10400/purge-snapshot-data-when-using-cbt-why-wouldn-t-i-enable-this.

However, your point is very good. I just disabled it in order to validate your thesis. If so, I think upon our system behavior we could then report some sort of "bug". Did you backup did not work from the start either or was it also after "some sort of modifications"?

-

@FritzGerald This error happened only on my backups to a remore (a bit slower location but also mounted as nfs like to the local one). It started out of the blue after running perfectly and didn't happen all the time. No rime.

I read about disabling this option in this thread and tried it. Seems to have worked and it is most definitely a bug.