I discarded the post I started yesterday because I was too far behind on updates. Yesterday I updated Xen Orchestra to commit c2144 (currently 2 commits behind), and the problem remains.

After updating XO, I restarted that machine, and I also updated and restarted the other machine (the first one below).

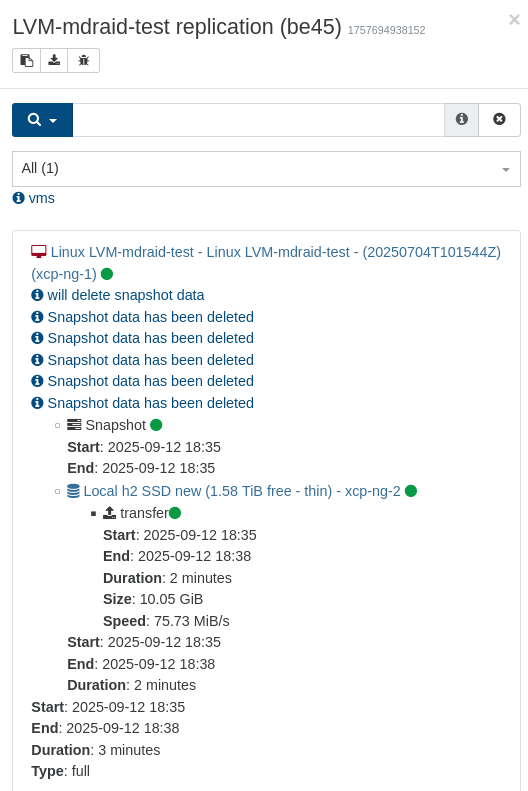

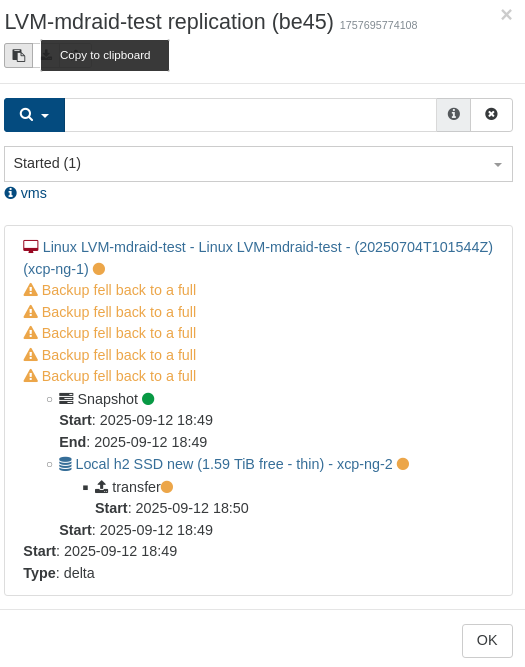

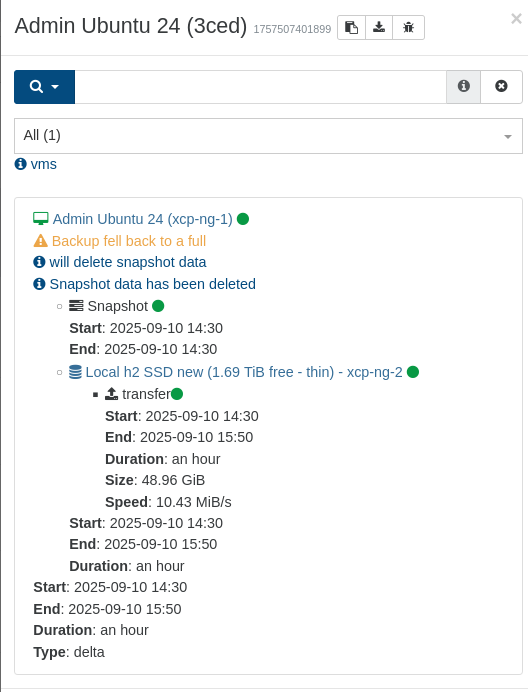

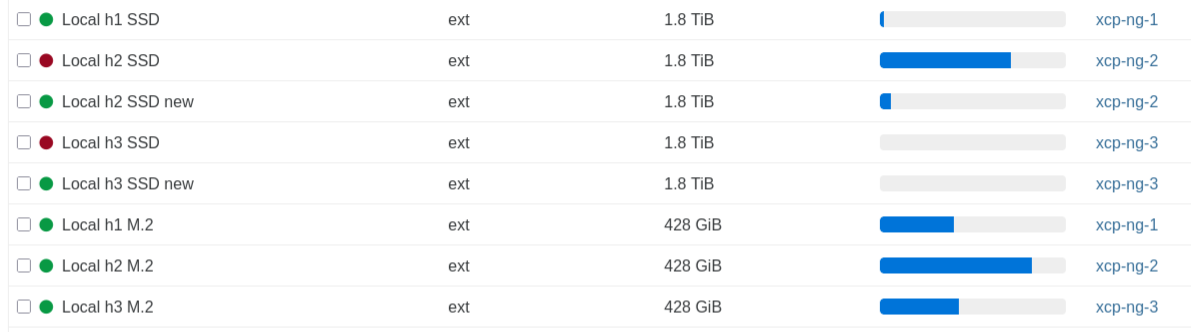

The replication target is a host with its own local SSD storage.

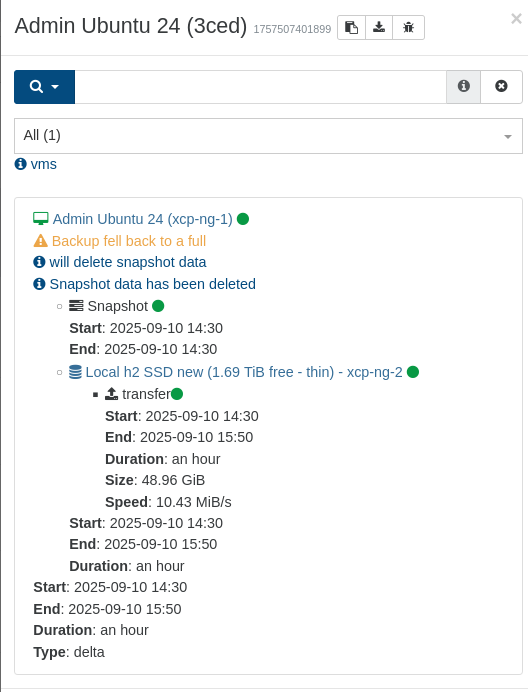

```json
{
  "data": {
    "mode": "delta",
    "reportWhen": "failure"
  },
  "id": "1757507401899",
  "jobId": "0bb53ced-4d52-40a9-8b14-7cd1fa2b30fe",
  "jobName": "Admin Ubuntu 24",
  "message": "backup",
  "scheduleId": "69a05a67-c43b-4d23-b1e8-ada77c70ccc4",
  "start": 1757507401899,
  "status": "success",
  "infos": [
    {
      "data": {
        "vms": [
          "1728e876-5644-2169-6c62-c764bd8b6bdf"
        ]
      },
      "message": "vms"
    }
  ],
  "tasks": [
    {
      "data": {
        "type": "VM",
        "id": "1728e876-5644-2169-6c62-c764bd8b6bdf",
        "name_label": "Admin Ubuntu 24"
      },
      "id": "1757507403766",
      "message": "backup VM",
      "start": 1757507403766,
      "status": "success",
      "tasks": [
        {
          "id": "1757507404364",
          "message": "snapshot",
          "start": 1757507404364,
          "status": "success",
          "end": 1757507406083,
          "result": "79b28f7e-ded1-ed95-4b3b-005f64e69796"
        },
        {
          "data": {
            "id": "9d2121f8-6839-39d4-4e90-850a5b6f1bbb",
            "isFull": false,
            "name_label": "Local h2 SSD new",
            "type": "SR"
          },
          "id": "1757507406083:0",
          "message": "export",
          "start": 1757507406083,
          "status": "success",
          "tasks": [
            {
              "id": "1757507408827",
              "message": "transfer",
              "start": 1757507408827,
              "status": "success",
              "end": 1757512215875,
              "result": {
                "size": 52571406336
              }
            }
          ],
          "end": 1757512216056
        }
      ],
      "warnings": [
        {
          "message": "Backup fell back to a full"
        }
      ],
      "infos": [
        {
          "message": "will delete snapshot data"
        },
        {
          "data": {
            "vdiRef": "OpaqueRef:47180590-40ab-f301-57bf-85c2fbe9b51d"
          },
          "message": "Snapshot data has been deleted"
        }
      ],
      "end": 1757512217031
    }
  ],
  "end": 1757512217032
}
```

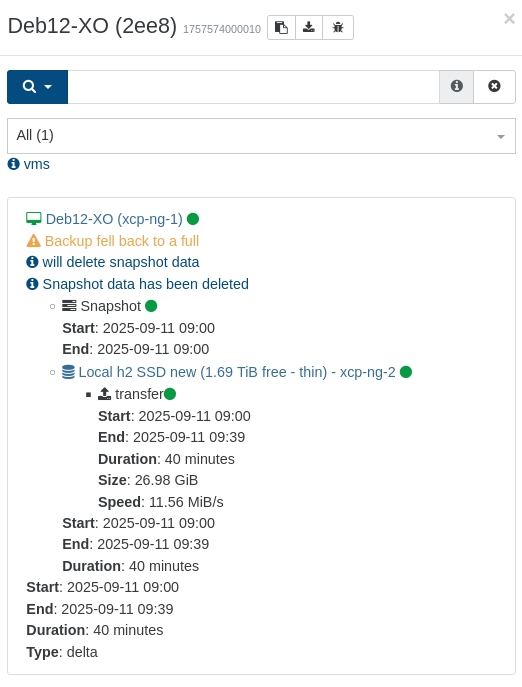

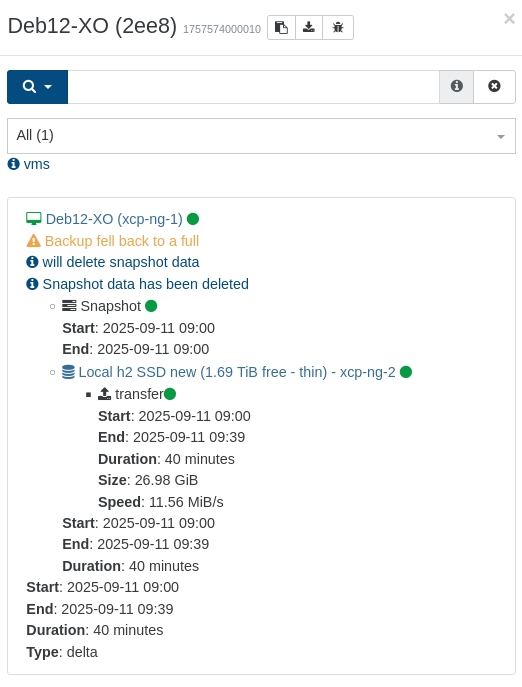

{

"data": {

"mode": "delta",

"reportWhen": "failure"

},

"id": "1757574000010",

"jobId": "883e2ee8-00c8-43f8-9ecd-9f9aa7aa01d1",

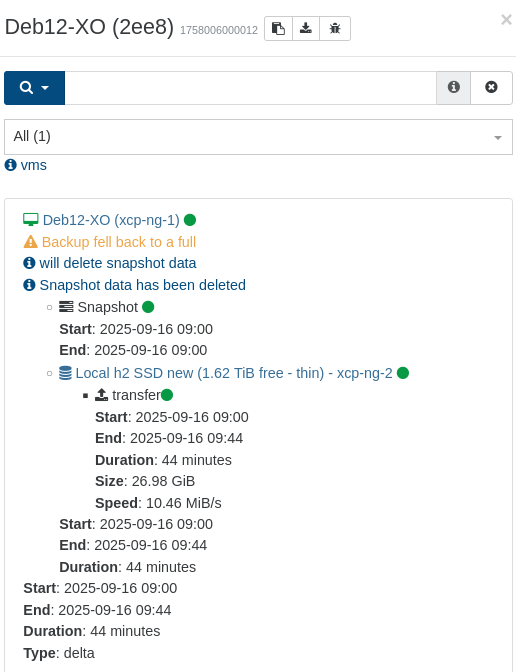

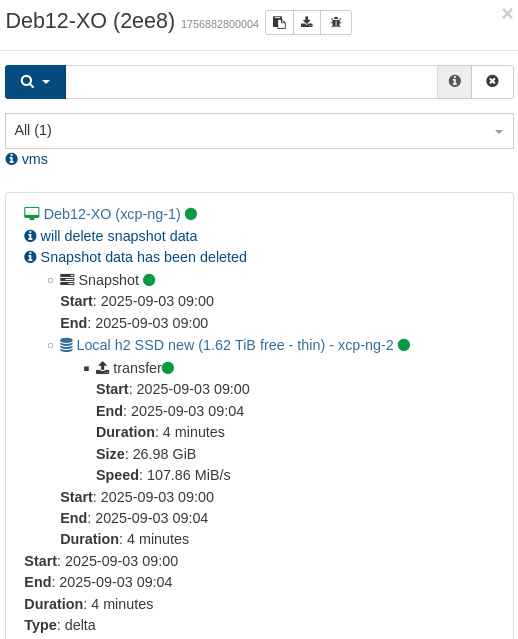

"jobName": "Deb12-XO",

"message": "backup",

"scheduleId": "19aab592-cd48-431e-a82c-525eba60fcc7",

"start": 1757574000010,

"status": "success",

"infos": [

{

"data": {

"vms": [

"30829107-2a1b-6b20-a08a-f2c1e612b2ee"

]

},

"message": "vms"

}

],

"tasks": [

{

"data": {

"type": "VM",

"id": "30829107-2a1b-6b20-a08a-f2c1e612b2ee",

"name_label": "Deb12-XO"

},

"id": "1757574001999",

"message": "backup VM",

"start": 1757574001999,

"status": "success",

"tasks": [

{

"id": "1757574002518",

"message": "snapshot",

"start": 1757574002518,

"status": "success",

"end": 1757574004197,

"result": "73a6e7d5-fcd6-e65f-ddd8-9dd700596505"

},

{

"data": {

"id": "9d2121f8-6839-39d4-4e90-850a5b6f1bbb",

"isFull": false,

"name_label": "Local h2 SSD new",

"type": "SR"

},

"id": "1757574004197:0",

"message": "export",

"start": 1757574004197,

"status": "success",

"tasks": [

{

"id": "1757574006959",

"message": "transfer",

"start": 1757574006959,

"status": "success",

"end": 1757576397226,

"result": {

"size": 28974252032

}

}

],

"end": 1757576397820

}

],

"warnings": [

{

"message": "Backup fell back to a full"

}

],

"infos": [

{

"message": "will delete snapshot data"

},

{

"data": {

"vdiRef": "OpaqueRef:b0ab2f7c-6606-bc15-cdcc-f7f002f3181a"

},

"message": "Snapshot data has been deleted"

}

],

"end": 1757576398882

}

],

"end": 1757576398883

}
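Both logs show the same pattern: the export step reports `"isFull": false`, yet the VM task carries the warning `Backup fell back to a full`. The following is a minimal sketch, assuming the log structure shown above, that walks each log's task tree and pulls out those fields together with the transfer size and duration. The `LOGS` data is trimmed by hand from the two logs. `walk`, `summarize` and `fell_back_to_full` are hypothetical helpers for illustration and are not part of Xen Orchestra.

```python
"""Summarize Xen Orchestra backup job logs (hypothetical sketch, not part of XO)."""

# Hand-trimmed copies of the two job logs above: only the fields the
# summary reads are kept; values are copied unchanged from the logs.
LOGS = [
    {
        "jobName": "Admin Ubuntu 24",
        "tasks": [{
            "message": "backup VM",
            "warnings": [{"message": "Backup fell back to a full"}],
            "tasks": [
                {"message": "snapshot"},
                {"message": "export",
                 "data": {"isFull": False, "name_label": "Local h2 SSD new"},
                 "tasks": [{"message": "transfer",
                            "start": 1757507408827, "end": 1757512215875,
                            "result": {"size": 52571406336}}]},
            ],
        }],
    },
    {
        "jobName": "Deb12-XO",
        "tasks": [{
            "message": "backup VM",
            "warnings": [{"message": "Backup fell back to a full"}],
            "tasks": [
                {"message": "snapshot"},
                {"message": "export",
                 "data": {"isFull": False, "name_label": "Local h2 SSD new"},
                 "tasks": [{"message": "transfer",
                            "start": 1757574006959, "end": 1757576397226,
                            "result": {"size": 28974252032}}]},
            ],
        }],
    },
]


def walk(task):
    """Yield a task and every nested sub-task, depth first."""
    yield task
    for child in task.get("tasks", []):
        yield from walk(child)


def summarize(log):
    """Collect warnings, export isFull flags and transfer totals for one job."""
    summary = {"job": log["jobName"], "warnings": [], "export_is_full": [],
               "transfer_bytes": 0, "transfer_seconds": 0.0}
    for task in walk(log):
        summary["warnings"] += [w["message"] for w in task.get("warnings", [])]
        if task.get("message") == "export":
            summary["export_is_full"].append(task["data"]["isFull"])
        elif task.get("message") == "transfer":
            summary["transfer_bytes"] += task["result"]["size"]
            # start/end are millisecond timestamps in these logs
            summary["transfer_seconds"] += (task["end"] - task["start"]) / 1000
    return summary


def fell_back_to_full(summary):
    """True when the fall-back warning is present but no export reported isFull: true."""
    return ("Backup fell back to a full" in summary["warnings"]
            and not any(summary["export_is_full"]))


if __name__ == "__main__":
    for log in LOGS:
        s = summarize(log)
        gib = s["transfer_bytes"] / 2**30
        mb_per_s = s["transfer_bytes"] / s["transfer_seconds"] / 1e6
        print(f"{s['job']}: {gib:.2f} GiB in {s['transfer_seconds'] / 60:.1f} min "
              f"({mb_per_s:.2f} MB/s), fell back to full: {fell_back_to_full(s)}")
```

For the two runs above this works out to roughly 49 GiB in about 80 minutes (about 10.9 MB/s) and roughly 27 GiB in about 40 minutes (about 12.1 MB/s). Those sizes look like whole disks rather than deltas, which is consistent with the warning.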