Continuous Replication jobs creates full backups every time since 2025-09-06 (xo from source)

-

I discarded my first draft of this post yesterday because I was too far behind on updates. Yesterday I updated Xen Orchestra to commit c2144 (currently 2 commits behind), and the problem remains.

After updating XO I restarted that machine, and I also updated and restarted the other machine (the first one below). Replication goes to a host with its own local SSD storage.

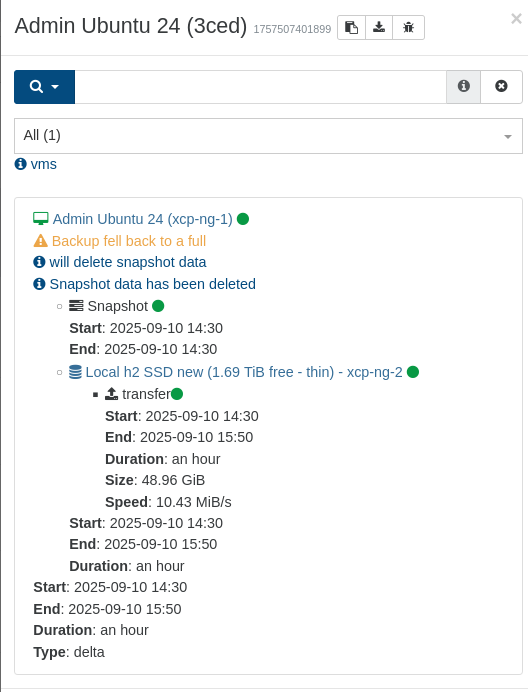

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1757507401899", "jobId": "0bb53ced-4d52-40a9-8b14-7cd1fa2b30fe", "jobName": "Admin Ubuntu 24", "message": "backup", "scheduleId": "69a05a67-c43b-4d23-b1e8-ada77c70ccc4", "start": 1757507401899, "status": "success", "infos": [ { "data": { "vms": [ "1728e876-5644-2169-6c62-c764bd8b6bdf" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "1728e876-5644-2169-6c62-c764bd8b6bdf", "name_label": "Admin Ubuntu 24" }, "id": "1757507403766", "message": "backup VM", "start": 1757507403766, "status": "success", "tasks": [ { "id": "1757507404364", "message": "snapshot", "start": 1757507404364, "status": "success", "end": 1757507406083, "result": "79b28f7e-ded1-ed95-4b3b-005f64e69796" }, { "data": { "id": "9d2121f8-6839-39d4-4e90-850a5b6f1bbb", "isFull": false, "name_label": "Local h2 SSD new", "type": "SR" }, "id": "1757507406083:0", "message": "export", "start": 1757507406083, "status": "success", "tasks": [ { "id": "1757507408827", "message": "transfer", "start": 1757507408827, "status": "success", "end": 1757512215875, "result": { "size": 52571406336 } } ], "end": 1757512216056 } ], "warnings": [ { "message": "Backup fell back to a full" } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:47180590-40ab-f301-57bf-85c2fbe9b51d" }, "message": "Snapshot data has been deleted" } ], "end": 1757512217031 } ], "end": 1757512217032 }
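For anyone who wants to check their own job logs for the same symptom, here is a minimal Python sketch that scans a log shaped like the one above for the "Backup fell back to a full" warning and works out how long each transfer took. The function name `summarize_backup_log` is made up for this post, the field layout is assumed from the logs shown here (it may differ in other XO versions), and the sample is a trimmed copy of the first log.

```python
import json

FALLBACK_WARNING = "Backup fell back to a full"


def summarize_backup_log(log: dict) -> list[dict]:
    """Return one summary per VM task: fallback flag plus transfer stats."""
    summaries = []
    for vm_task in log.get("tasks", []):
        warnings = [w.get("message") for w in vm_task.get("warnings", [])]
        summary = {
            "vm": vm_task.get("data", {}).get("name_label"),
            "fell_back_to_full": FALLBACK_WARNING in warnings,
            "transfers": [],
        }
        # In the logs above, "transfer" tasks are nested under the "export" task.
        for sub in vm_task.get("tasks", []):
            for t in sub.get("tasks", []):
                if t.get("message") == "transfer" and "end" in t:
                    seconds = (t["end"] - t["start"]) / 1000  # timestamps are in ms
                    size = t.get("result", {}).get("size", 0)
                    summary["transfers"].append({
                        "bytes": size,
                        "seconds": seconds,
                        "mib_per_s": size / seconds / 2**20 if seconds else None,
                    })
        summaries.append(summary)
    return summaries


# Trimmed copy of the first log in this post (only the fields used above).
sample = json.loads("""
{ "jobName": "Admin Ubuntu 24", "tasks": [ { "data": { "name_label": "Admin Ubuntu 24" },
  "tasks": [ { "message": "export", "data": { "isFull": false },
    "tasks": [ { "message": "transfer", "start": 1757507408827, "end": 1757512215875,
                 "result": { "size": 52571406336 } } ] } ],
  "warnings": [ { "message": "Backup fell back to a full" } ] } ] }
""")

for row in summarize_backup_log(sample):
    print(row)
```

For the first log this works out to roughly 80 minutes for about 52.6 GB, even though the export task itself reports "isFull": false.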

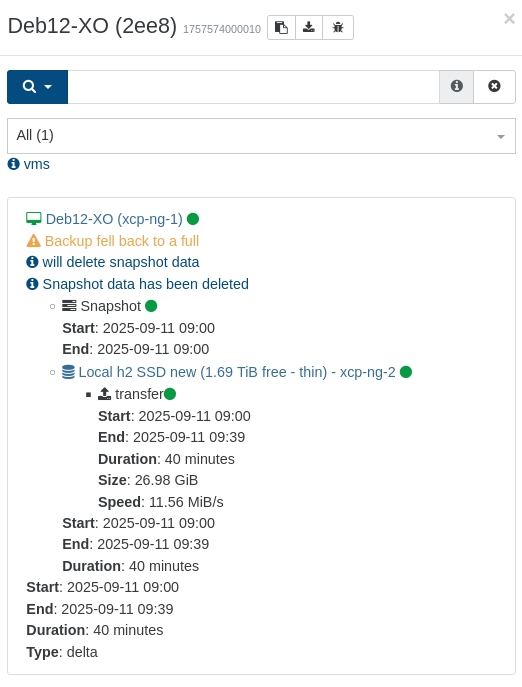

{ "data": { "mode": "delta", "reportWhen": "failure" }, "id": "1757574000010", "jobId": "883e2ee8-00c8-43f8-9ecd-9f9aa7aa01d1", "jobName": "Deb12-XO", "message": "backup", "scheduleId": "19aab592-cd48-431e-a82c-525eba60fcc7", "start": 1757574000010, "status": "success", "infos": [ { "data": { "vms": [ "30829107-2a1b-6b20-a08a-f2c1e612b2ee" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "30829107-2a1b-6b20-a08a-f2c1e612b2ee", "name_label": "Deb12-XO" }, "id": "1757574001999", "message": "backup VM", "start": 1757574001999, "status": "success", "tasks": [ { "id": "1757574002518", "message": "snapshot", "start": 1757574002518, "status": "success", "end": 1757574004197, "result": "73a6e7d5-fcd6-e65f-ddd8-9dd700596505" }, { "data": { "id": "9d2121f8-6839-39d4-4e90-850a5b6f1bbb", "isFull": false, "name_label": "Local h2 SSD new", "type": "SR" }, "id": "1757574004197:0", "message": "export", "start": 1757574004197, "status": "success", "tasks": [ { "id": "1757574006959", "message": "transfer", "start": 1757574006959, "status": "success", "end": 1757576397226, "result": { "size": 28974252032 } } ], "end": 1757576397820 } ], "warnings": [ { "message": "Backup fell back to a full" } ], "infos": [ { "message": "will delete snapshot data" }, { "data": { "vdiRef": "OpaqueRef:b0ab2f7c-6606-bc15-cdcc-f7f002f3181a" }, "message": "Snapshot data has been deleted" } ], "end": 1757576398882 } ], "end": 1757576398883 }

-

That's feedback for @lsouai-vates

-

Is anyone else experiencing the same problem, or is it just my imagination?

Recap:

Since 2025-09-06, all Continuous Replication jobs are creating full backups instead of incrementals.

Environment: XO from source, updated to commit c2144 (2 commits behind at the time of test).

Replication target: a standalone host that is part of the pool, on the same local network, with local SSD storage.

Logs and status screenshots were attached in my first post.

What I’ve done so far:

2025-09-11:

- Updated Xen Orchestra to the latest commits (as of 2025-09-11).

- Restarted the XO VM and the source VM after update.

2025-09-12:

- Applied pool patches as they became available.

- Installed host updates and rebooted hosts.

- Restarted the source and target hosts (since all hosts were restarted).

- Verified behavior again today → still a full backup for the “Admin Ubuntu 24” VM.

Observation:

The issue began exactly after the 2025-09-05 updates (which included a set of host patches).

Since then, every daily replication runs as a full.

-

You can try to pinpoint the exact commit with git bisect, to be 100% sure.

-

@olivierlambert said in Continuous Replication jobs creates full backups every time since 2025-09-06 (xo from source):

git bisect

That might be quite a lot of commits between the last working one and the point where I discovered the problem (I actually let it do its thing for a couple of days, which quickly filled up the backup drive).

I keep only the current and the previous build of XO, so I only know the change is somewhere between Aug 16 (taken from 'lastlog', to find out when I was last logged in) and late Sep 5.

-

Bisect is rather fast: it… bisects between 2 commits. If you can pinpoint the exact commit causing this, we could probably solve it a LOT faster.

For ref: https://en.wikipedia.org/wiki/Bisection_(software_engineering)
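To give a feel for why it is fast, here is a small Python sketch of the idea behind bisection. It is not how git implements it (real history is a graph, not a flat list), and the commit names, the `first_bad_commit` helper, and the 200-commit range are all made up for illustration. It assumes the oldest commit is known good, the newest is known bad, and everything after the first bad commit is also bad.

```python
from typing import Callable, Sequence


def first_bad_commit(
    commits: Sequence[str], is_bad: Callable[[str], bool]
) -> tuple[str, int]:
    """Binary-search an ordered commit list (oldest first) for the first bad one.

    Assumes commits[0] is known good and commits[-1] is known bad.
    Returns the first bad commit and how many builds had to be tested.
    """
    good, bad = 0, len(commits) - 1
    tested = 0
    while bad - good > 1:
        mid = (good + bad) // 2
        tested += 1  # in practice: build this commit and run one replication job
        if is_bad(commits[mid]):
            bad = mid
        else:
            good = mid
    return commits[bad], tested


# Hypothetical history: 200 commits, regression introduced at commit "c137".
history = [f"c{i}" for i in range(200)]
culprit, builds = first_bad_commit(history, lambda c: int(c[1:]) >= 137)
print(culprit, builds)
```

With 200 commits between the good and bad builds, this needs at most 8 test builds, since each test halves the remaining range.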

-

@olivierlambert Has the problem been confirmed? I can try to pinpoint where the always-full replications were introduced, but it would be more time-efficient to do it on a machine that is fast to replicate, not the one that now takes 2.5 hours (the diff took a few minutes before it broke, starting with the backup made on 6 Sept).

-

No, no confirmation so far, that's why we need more information to investigate

-

@olivierlambert said in Continuous Replication jobs creates full backups every time since 2025-09-06 (xo from source):

No, no confirmation so far, that's why we need more information to investigate

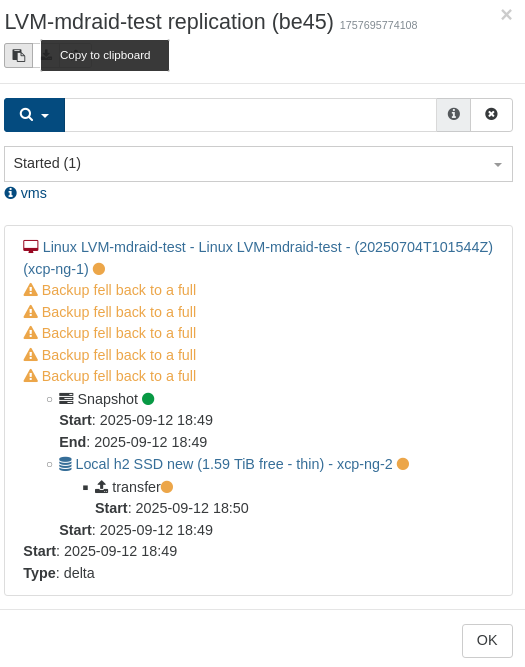

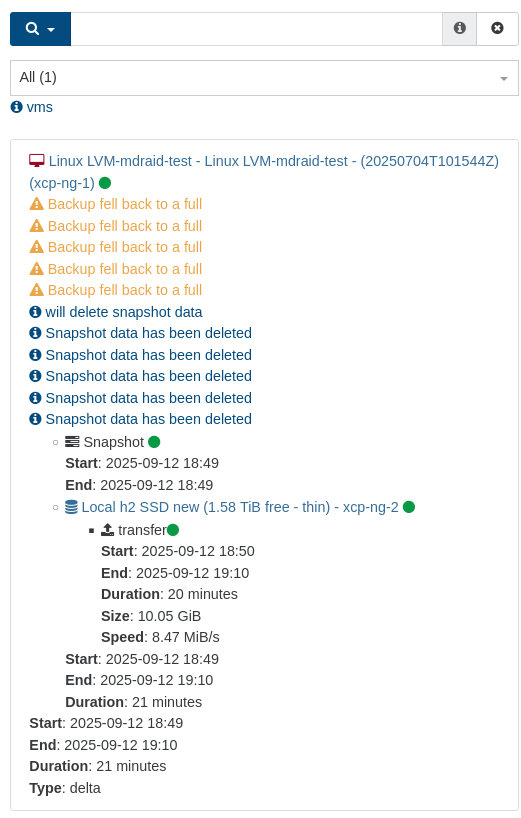

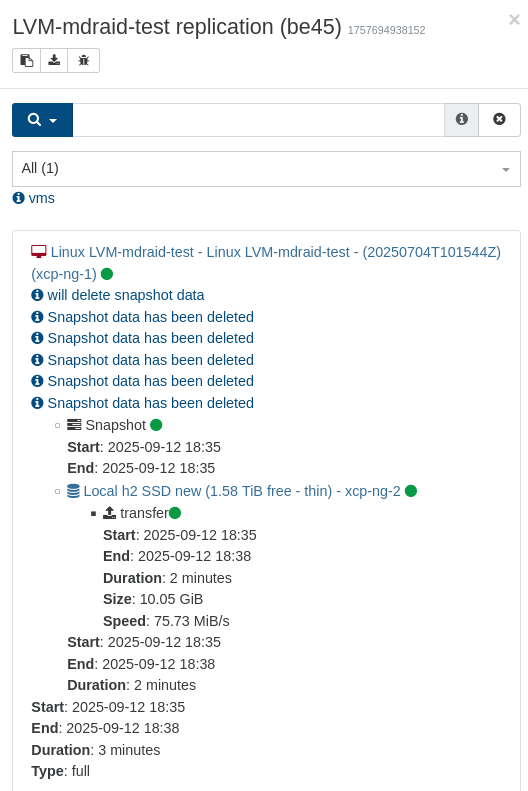

Great (or maybe not) news. At least on my system this is very easy to reproduce. On a tiny test machine that has only had a normal backup before, the first replication copy (to H2 SSD) was sent as a full, as expected. I then did some simple operations on the machine (like apt update, no upgrade) and retried:

-

Too bad it becomes so inexplicably slow when it has to fall back to a full backup:

The first (full) backup was 10x faster:

-

@olivierlambert This happens to me too with a459015ca91c159123bb682f16237b4371a312a6.

I did open an issue https://github.com/vatesfr/xen-orchestra/issues/8969

-

@Andrew And it doesn't with the commit just before?

-

@olivierlambert Correct. Running commit 4944ea902ff19f172b1b86ec96ad989e322bec2c works.

-

@florent so it looks like https://github.com/vatesfr/xen-orchestra/commit/a459015ca91c159123bb682f16237b4371a312a6 might have introduced a regression?

-

@Andrew Then again, with such a precise report, the fix is easier.

The fix should land in master soon.

-

If you want to test, you can switch to the relevant branch, which is

fix_replication

-

@olivierlambert

fix_replication works for some... but most of my VMs now have an error:

the writer IncrementalXapiWriter has failed the step writer.checkBaseVdis() with error Cannot read properties of undefined (reading 'managed').

"result": { "message": "Cannot read properties of undefined (reading 'managed')", "name": "TypeError", "stack": "TypeError: Cannot read properties of undefined (reading 'managed')\n at file:///opt/xo/xo-builds/xen-orchestra-202509150025/@xen-orchestra/backups/_runners/_writers/IncrementalXapiWriter.mjs:29:15\n at Array.filter (<anonymous>)\n at IncrementalXapiWriter.checkBaseVdis (file:///opt/xo/xo-builds/xen-orchestra-202509150025/@xen-orchestra/backups/_runners/_writers/IncrementalXapiWriter.mjs:26:8)\n at file:///opt/xo/xo-builds/xen-orchestra-202509150025/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:159:54\n at callWriter (file:///opt/xo/xo-builds/xen-orchestra-202509150025/@xen-orchestra/backups/_runners/_vmRunners/_Abstract.mjs:33:15)\n at IncrementalXapiVmBackupRunner._callWriters (file:///opt/xo/xo-builds/xen-orchestra-202509150025/@xen-orchestra/backups/_runners/_vmRunners/_Abstract.mjs:52:14)\n at IncrementalXapiVmBackupRunner._selectBaseVm (file:///opt/xo/xo-builds/xen-orchestra-202509150025/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:158:16)\n at process.processTicksAndRejections (node:internal/process/task_queues:105:5)\n at async IncrementalXapiVmBackupRunner.run (file:///opt/xo/xo-builds/xen-orchestra-202509150025/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:378:5)\n at async file:///opt/xo/xo-builds/xen-orchestra-202509150025/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" }

-

Feedback for you @florent

-

@peo Yes, same problem!

-

@olivierlambert @florent Looks like 1471ab0c7c79fa6dca9a1598e7be2a141753ba91 (in current master) has fixed the issue.

But, during testing I found a new related issue (no error messages). Running current XO master b16d5...

During the normal CR delta backup job, two VMs show the warning message:

Backup fell back to a full

but the job did a delta (not full) backup.

I caused this by running a different CR backup job (for testing) that used the same two VMs but targeted a different SR. The new test job correctly did a full backup and then a delta backup. Since there is only one CBT snapshot, the normal backup job did recognize that the snapshot did not belong to it and should have done a full (and started a new CBT snapshot), but it only did a short delta.

As the VMs were off, I guess it could correctly use the other CBT snapshot as no blocks had changed... Just odd that CR backup said it was going to do a full and then did a delta.