Error: invalid HTTP header in response body

-

@manilx Okay, I will wait and see. The backup runs tonight.

-

@manilx said in Error: invalid HTTP header in response body:

@peo I don't have this setting set. The errors appear inconsistently.

So you confirmed my "solution" to the problem, even though you said you did not have that setting enabled when I suggested turning it off.

It's great that more people have this problem and that it gets resolved with the same "solution". I was starting to think I had imagined the problems week after week before I first reported them.

-

@peo I had the setting turned on and turned it off! Seems to have helped.

-

Hi everyone, just FYI: during my delta backup testing I ran out of space on my NAS (although it should have had enough?!). The backups must have created more data than I expected. That took priority, so I removed the backups and set up new delta backups, which meant I could not dig any further into the problem.

-

Hi everyone again. I am pretty much back to square one. What I can observe is that all my VMs with additional disks attached run into the above error. With one disk per VM, six backups work fine; the two other VMs (one with 2 disks, one with 5 disks) fail. Do CBT-based delta backups only work if there are no additional disks attached? I would really appreciate any help.

-

@FritzGerald It has nothing to do with the number of disks attached to the VM. It just fails every second time:

https://xcp-ng.org/forum/post/93508

The "solution" (until there is a real solution) is in the reply below the linked one: turn off "Purge snapshot data when using CBT" under advanced settings for all backup jobs.

-

@peo Hi, thank you for your quick reply. Since I had these storage issues after disabling it, I am a bit cautious. My knowledge of CBT-based backups is really limited; can you tell me what it means in terms of storage use? As I understand it, it will keep the snapshots and thereby significantly increase space usage, or am I missing something? And have you heard whether the bug is officially known and being worked on?

-

@FritzGerald The snapshots (left on the disk until the next backup) will only consist of the differences between the previous backup and the current one.

BUT... when you do the first backup of a machine, the snapshot will use the full (used) size of each disk attached to the machine (this might be what happened on your first attempt). If you have the space for it, just do one backup at a time with snapshot deletion disabled, then do another one when it has finished. The snapshots will then be reduced to only the difference between the first and second backup.
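The storage reasoning above can be put into rough numbers. This is a minimal Python sketch, assuming the simplified model described in the post (the first retained snapshot costs about the full used size of each disk; later ones cost only the changes since the previous backup). The function name and the example sizes are hypothetical, and real usage on an SR may differ.

```python
from typing import Sequence


def retained_snapshot_gib(
    used_gib: Sequence[float],
    changed_gib_per_run: Sequence[Sequence[float]],
) -> list[float]:
    """Space (GiB) held by snapshots kept between backups, one entry per run.

    Simplified model of the explanation above (an assumption, not measured
    XCP-ng behavior):
    - after the first backup, each disk's retained snapshot holds its full used size;
    - after each later backup, it holds only the data changed since the previous one.
    """
    # First run: the full used size of every attached disk.
    sizes = [float(sum(used_gib))]
    # Later runs: only the per-disk differences since the previous backup.
    for changed in changed_gib_per_run:
        sizes.append(float(sum(changed)))
    return sizes
```

For a VM with two disks using 40 GiB and 10 GiB, where 2 GiB and 0.5 GiB change before the second run, `retained_snapshot_gib([40, 10], [[2, 0.5]])` gives `[50.0, 2.5]`: under this model the first run is the one that needs the most headroom.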

-

@peo

Hi, thank you. You are most likely right about the backup storage overflow. Just two more questions:

- Have you ever experienced this problem on a VM with only one disk attached? I am asking because on my side, delta backups fail only for VMs with additional disks attached.

- Is this already being addressed as a bug, or should I officially report it, since quite a few users now experience this problem?

-

@FritzGerald I have not had any problems like this since I disabled the deletion of the in-between-backup snapshots. For example, I have a couple of machines with 50 GB+ disks (one with a 100+ GB disk that is mostly unused now, so the snapshot between backups takes less than 1 MB).

All backups were failing until I disabled the deletion of the snapshot (most of my VMs have more than one disk, a trick I use to lock each VM to a specific host). Not all at once, but more and more of them until all of them failed. I still have the other "imaginary problem" with my Docker VM, but that is a completely different problem that has not yet been acknowledged: backups "fail", but I am able to restore them to a fully working new VM.

-

@peo

Hi. Sorry for bothering you; I may have phrased my question imprecisely. For a bug report we need the most specific information possible, since that helps developers pinpoint the bug. Since I only have trouble with VMs that have additional virtual disks attached (disks created on a local SR on the same host as the VM), I would like to know whether you have a counterexample. In other words: do all your VMs have only a single virtual disk?

@manilx, your feedback would be very interesting as well.

Thank you for your support.

-

@FritzGerald As I replied, most of my VMs have at least two virtual disks (also mixed locations: some on local NVMe, some on local SSD and some on the NAS over NFS).

I would say this is not a reportable issue until you have the backups running again (without deletion of the snapshots). When you re-enable snapshot deletion, the problem will appear on every second run of a backup job: the first run is "full" and succeeds, the second is "delta" and fails, and the third is "full" again and succeeds. This is (or at least was, for me) independent of the number of disks attached to each VM.
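The alternating pattern described above can be written down as a tiny model, which may help when comparing a job's run history against it in a bug report. This is a minimal Python sketch of the behavior reported in this thread, not of Xen Orchestra's actual backup logic; `simulate_runs` and its parameters are hypothetical names.

```python
def simulate_runs(n_runs: int, purge_snapshot_data: bool) -> list[tuple[str, str]]:
    """Return (mode, result) for each run, following the pattern reported above.

    Assumption: with "Purge snapshot data when using CBT" enabled, every delta
    run fails, and a failed delta forces the next run back to a full export.
    """
    results: list[tuple[str, str]] = []
    has_base = False  # is there a successful previous backup to build a delta on?
    for _ in range(n_runs):
        mode = "delta" if has_base else "full"
        if mode == "delta" and purge_snapshot_data:
            # Reported behavior: the delta fails, so there is no usable base next time.
            results.append((mode, "failure"))
            has_base = False
        else:
            results.append((mode, "success"))
            has_base = True
    return results
```

With purging enabled, `simulate_runs(4, True)` yields full/success, delta/failure, full/success, delta/failure; with it disabled, every run after the first is a successful delta.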

-

@peo

I am sorry, I must have missed your statement about the disks. Okay, we can both confirm:

- CBT-based delta backups fail on some VMs, causing backups to alternate between success and failure.

- Disabling the "Purge snapshot data when using CBT" option works around it, but at the cost of additional ("doubling") storage space.

- In addition, I alone observed that it always, and only, happens when additional disks are involved. Delta backups of other VMs in the same backup job work on my side.

I will observe this during this week and then file a bug report.

-

Just jumping in here,

Running XOCE. I updated on 21 June; I never had backup issues before, but now all jobs fail as above. I don't have (and never have had) "Purge snapshot data when using CBT" enabled.

I rolled back to the update I did on 4 June, and all backups work as expected.

Thank God for a decent rollback plan.

For those running XO from sources, you can "upgrade" again and select an older version. It may get you out of the woods.

-

Things were fixed in `master` recently, so please try again.

-

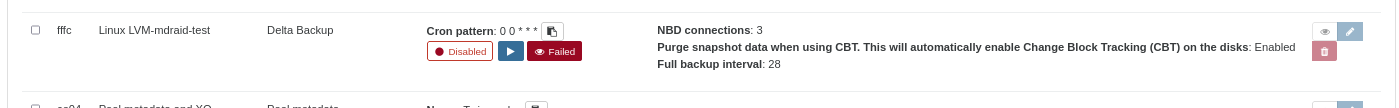

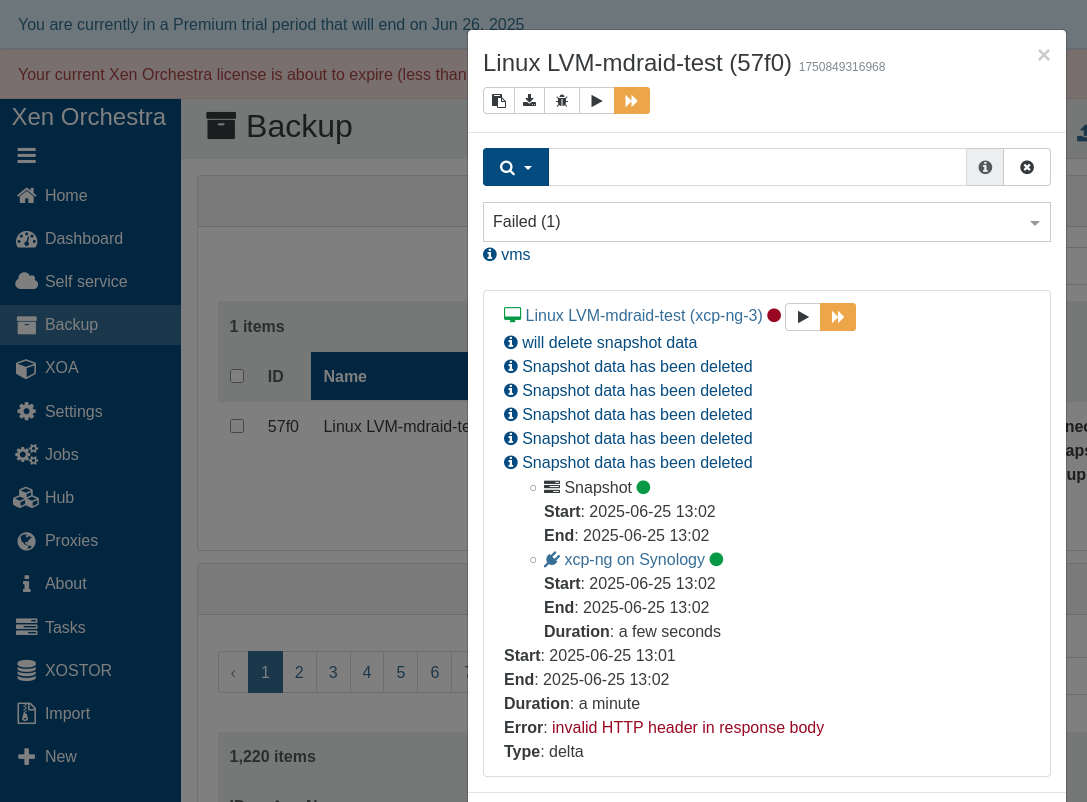

@olivierlambert I just updated to commit `a348ce07d` and the problem remains:

(this backup job was set up just as a test yesterday, only local VDI in the VM and storing backups to NAS over NFS)

As before, it happens on every second manual run of the job (when the last line of the error says "Type: Delta").

Also, there is a slight UI problem, but that is not there (or at least was not yesterday) in the real version.

-

@olivierlambert Also the same in XOA; I switched to "latest" in the "release channel" before updating:

Initial backup succeeds, the second one fails, the third one succeeds (but is transferred as "full" again, as the first one)

-

Yes, but I was talking about the sources.

`latest` isn't `master`.

-

@peo said in Error: invalid HTTP header in response body:

@olivierlambert Also the same in XOA, switched "latest" in the "release channel" before updating:

This is probably a CBT issue.

You will have more information in the log (`journalctl` as root).

Can you try running the backup without NBD/CBT enabled?

-

@florent As described by myself and others in this thread, the error occurs only when "Purge snapshot data when using CBT" is enabled.

As expected, it runs fine (every time) when "Use NBD+CBT if available" is disabled, which also disables "Purge snapshot data when using CBT".

Jun 25 06:58:25 xoa xo-server[2661108]: 2025-06-25T10:58:25.998Z xo:backups:worker INFO starting backup
Jun 25 06:58:26 xoa nfsrahead[2661133]: setting /run/xo-server/mounts/2ad70aa9-8f27-4353-8dde-5623f31cd49f readahead to 128
Jun 25 06:59:03 xoa xo-server[2661108]: 2025-06-25T10:59:03.721Z @xen-orchestra/xapi/disks/Xapi WARN can't connect through NBD, fallback to stream export
Jun 25 06:59:03 xoa xo-server[2661108]: 2025-06-25T10:59:03.798Z @xen-orchestra/xapi/disks/Xapi WARN can't connect through NBD, fallback to stream export
Jun 25 06:59:03 xoa xo-server[2661108]: 2025-06-25T10:59:03.822Z @xen-orchestra/xapi/disks/Xapi WARN can't connect through NBD, fallback to stream export
Jun 25 06:59:03 xoa xo-server[2661108]: 2025-06-25T10:59:03.952Z @xen-orchestra/xapi/disks/Xapi WARN can't connect through NBD, fallback to stream export
Jun 25 06:59:04 xoa xo-server[2661108]: 2025-06-25T10:59:04.031Z @xen-orchestra/xapi/disks/Xapi WARN can't connect through NBD, fallback to stream export
Jun 25 07:01:37 xoa xo-server[2661108]: 2025-06-25T11:01:37.465Z xo:backups:MixinBackupWriter WARN cleanVm: incorrect backup size in metadata {
Jun 25 07:01:37 xoa xo-server[2661108]: path: '/xo-vm-backups/30db3746-fecc-4b49-e7af-8f15d13d573c/20250625T105907Z.json',
Jun 25 07:01:37 xoa xo-server[2661108]: actual: 10166992896,
Jun 25 07:01:37 xoa xo-server[2661108]: expected: 10169530368
Jun 25 07:01:37 xoa xo-server[2661108]: }
Jun 25 07:01:37 xoa xo-server[2661108]: 2025-06-25T11:01:37.555Z xo:backups:worker INFO backup has ended
Jun 25 07:01:37 xoa xo-server[2661108]: 2025-06-25T11:01:37.607Z xo:backups:worker INFO process will exit {
Jun 25 07:01:37 xoa xo-server[2661108]: duration: 191607947,
Jun 25 07:01:37 xoa xo-server[2661108]: exitCode: 0,
Jun 25 07:01:37 xoa xo-server[2661108]: resourceUsage: {
Jun 25 07:01:37 xoa xo-server[2661108]: userCPUTime: 122499805,
Jun 25 07:01:37 xoa xo-server[2661108]: systemCPUTime: 32534032,
Jun 25 07:01:37 xoa xo-server[2661108]: maxRSS: 125060,
Jun 25 07:01:37 xoa xo-server[2661108]: sharedMemorySize: 0,
Jun 25 07:01:37 xoa xo-server[2661108]: unsharedDataSize: 0,
Jun 25 07:01:37 xoa xo-server[2661108]: unsharedStackSize: 0,
Jun 25 07:01:37 xoa xo-server[2661108]: minorPageFault: 585389,
Jun 25 07:01:37 xoa xo-server[2661108]: majorPageFault: 0,
Jun 25 07:01:37 xoa xo-server[2661108]: swappedOut: 0,
Jun 25 07:01:37 xoa xo-server[2661108]: fsRead: 2056,
Jun 25 07:01:37 xoa xo-server[2661108]: fsWrite: 19863128,
Jun 25 07:01:37 xoa xo-server[2661108]: ipcSent: 0,
Jun 25 07:01:37 xoa xo-server[2661108]: ipcReceived: 0,
Jun 25 07:01:37 xoa xo-server[2661108]: signalsCount: 0,
Jun 25 07:01:37 xoa xo-server[2661108]: voluntaryContextSwitches: 112269,
Jun 25 07:01:37 xoa xo-server[2661108]: involuntaryContextSwitches: 90074
Jun 25 07:01:37 xoa xo-server[2661108]: },
Jun 25 07:01:37 xoa xo-server[2661108]: summary: { duration: '3m', cpuUsage: '81%', memoryUsage: '122.13 MiB' }
Jun 25 07:01:37 xoa xo-server[2661108]: }
Jun 25 07:01:58 xoa xo-server[2661382]: 2025-06-25T11:01:58.035Z xo:backups:worker INFO starting backup
Jun 25 07:02:23 xoa xo-server[2661382]: 2025-06-25T11:02:23.856Z @xen-orchestra/xapi/disks/Xapi WARN openNbdCBT Error: can't connect to any nbd client
Jun 25 07:02:23 xoa xo-server[2661382]: at connectNbdClientIfPossible (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/xapi/disks/utils.mjs:23:19)
Jun 25 07:02:23 xoa xo-server[2661382]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
Jun 25 07:02:23 xoa xo-server[2661382]: at async XapiVhdCbtSource.init (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/xapi/disks/XapiVhdCbt.mjs:75:20)
Jun 25 07:02:23 xoa xo-server[2661382]: at async #openNbdCbt (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/xapi/disks/Xapi.mjs:129:7)
Jun 25 07:02:23 xoa xo-server[2661382]: at async XapiDiskSource.init (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)
Jun 25 07:02:23 xoa xo-server[2661382]: at async file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_incrementalVm.mjs:65:5
Jun 25 07:02:23 xoa xo-server[2661382]: at async Promise.all (index 0)
Jun 25 07:02:23 xoa xo-server[2661382]: at async cancelableMap (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_cancelableMap.mjs:11:12)
Jun 25 07:02:23 xoa xo-server[2661382]: at async exportIncrementalVm (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_incrementalVm.mjs:28:3)
Jun 25 07:02:23 xoa xo-server[2661382]: at async IncrementalXapiVmBackupRunner._copy (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:38:25) {
Jun 25 07:02:23 xoa xo-server[2661382]: code: 'NO_NBD_AVAILABLE'
Jun 25 07:02:23 xoa xo-server[2661382]: }
Jun 25 07:02:27 xoa xo-server[2661382]: 2025-06-25T11:02:27.312Z xo:xapi:vdi WARN invalid HTTP header in response body {
Jun 25 07:02:27 xoa xo-server[2661382]: body: 'HTTP/1.1 500 Internal Error\r\n' +
Jun 25 07:02:27 xoa xo-server[2661382]: 'content-length: 318\r\n' +
Jun 25 07:02:27 xoa xo-server[2661382]: 'content-type: text/html\r\n' +
Jun 25 07:02:27 xoa xo-server[2661382]: 'connection: close\r\n' +
Jun 25 07:02:27 xoa xo-server[2661382]: 'cache-control: no-cache, no-store\r\n' +
Jun 25 07:02:27 xoa xo-server[2661382]: '\r\n' +
Jun 25 07:02:27 xoa xo-server[2661382]: '<html><body><h1>HTTP 500 internal server error</h1>An unexpected error occurred; please wait a while and try again. If the problem persists, please contact your support representative.<h1> Additional information </h1>VDI_INCOMPATIBLE_TYPE: [ OpaqueRef:31a2142e-c677-6c86-e916-0ac19ffbe40f; CBT metadata ]</body></html>'
Jun 25 07:02:27 xoa xo-server[2661382]: }
Jun 25 07:02:39 xoa xo-server[2661382]: 2025-06-25T11:02:39.117Z @xen-orchestra/xapi/disks/Xapi WARN openNbdCBT Error: can't connect to any nbd client
Jun 25 07:02:39 xoa xo-server[2661382]: at connectNbdClientIfPossible (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/xapi/disks/utils.mjs:23:19)
Jun 25 07:02:39 xoa xo-server[2661382]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
Jun 25 07:02:39 xoa xo-server[2661382]: at async XapiVhdCbtSource.init (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/xapi/disks/XapiVhdCbt.mjs:75:20)
Jun 25 07:02:39 xoa xo-server[2661382]: at async #openNbdCbt (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/xapi/disks/Xapi.mjs:129:7)
Jun 25 07:02:39 xoa xo-server[2661382]: at async XapiDiskSource.init (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)
Jun 25 07:02:39 xoa xo-server[2661382]: at async file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_incrementalVm.mjs:65:5
Jun 25 07:02:39 xoa xo-server[2661382]: at async Promise.all (index 3) {
Jun 25 07:02:39 xoa xo-server[2661382]: code: 'NO_NBD_AVAILABLE'
Jun 25 07:02:39 xoa xo-server[2661382]: }
Jun 25 07:02:39 xoa xo-server[2661382]: 2025-06-25T11:02:39.539Z @xen-orchestra/xapi/disks/Xapi WARN openNbdCBT Error: can't connect to any nbd client
Jun 25 07:02:39 xoa xo-server[2661382]: at connectNbdClientIfPossible (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/xapi/disks/utils.mjs:23:19)
Jun 25 07:02:39 xoa xo-server[2661382]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5)
Jun 25 07:02:39 xoa xo-server[2661382]: at async XapiVhdCbtSource.init (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/xapi/disks/XapiVhdCbt.mjs:75:20)
Jun 25 07:02:39 xoa xo-server[2661382]: at async #openNbdCbt (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/xapi/disks/Xapi.mjs:129:7)
Jun 25 07:02:39 xoa xo-server[2661382]: at async XapiDiskSource.init (file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/disk-transform/dist/DiskPassthrough.mjs:28:41)
Jun 25 07:02:39 xoa xo-server[2661382]: at async file:///usr/local/lib/node_modules/xo-server/node_modules/@xen-orchestra/backups/_incrementalVm.mjs:65:5
Jun 25 07:02:39 xoa xo-server[2661382]: at async Promise.all (index 2) {
Jun 25 07:02:39 xoa xo-server[2661382]: code: 'NO_NBD_AVAILABLE'
Jun 25 07:02:39 xoa xo-server[2661382]: }
Jun 25 07:02:42 xoa xo-server[2661382]: 2025-06-25T11:02:42.588Z xo:xapi:vdi WARN invalid HTTP header in response body {
Jun 25 07:02:42 xoa xo-server[2661382]: body: 'HTTP/1.1 500 Internal Error\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: 'content-length: 318\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: 'content-type: text/html\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: 'connection: close\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: 'cache-control: no-cache, no-store\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: '\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: '<html><body><h1>HTTP 500 internal server error</h1>An unexpected error occurred; please wait a while and try again. If the problem persists, please contact your support representative.<h1> Additional information </h1>VDI_INCOMPATIBLE_TYPE: [ OpaqueRef:f0379a82-6fce-c6fa-a4c7-b7b6dcc5df26; CBT metadata ]</body></html>'
Jun 25 07:02:42 xoa xo-server[2661382]: }
Jun 25 07:02:42 xoa xo-server[2661382]: 2025-06-25T11:02:42.950Z xo:xapi:vdi WARN invalid HTTP header in response body {
Jun 25 07:02:42 xoa xo-server[2661382]: body: 'HTTP/1.1 500 Internal Error\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: 'content-length: 318\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: 'content-type: text/html\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: 'connection: close\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: 'cache-control: no-cache, no-store\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: '\r\n' +
Jun 25 07:02:42 xoa xo-server[2661382]: '<html><body><h1>HTTP 500 internal server error</h1>An unexpected error occurred; please wait a while and try again. If the problem persists, please contact your support representative.<h1> Additional information </h1>VDI_INCOMPATIBLE_TYPE: [ OpaqueRef:85222592-ba8f-e189-8389-6cb4d8dd038b; CBT metadata ]</body></html>'
Jun 25 07:02:42 xoa xo-server[2661382]: }
Jun 25 07:02:43 xoa xo-server[2661382]: 2025-06-25T11:02:43.467Z @xen-orchestra/xapi/disks/Xapi WARN openNbdCBT XapiError: HANDLE_INVALID(VDI, OpaqueRef:0671251f-d1f0-2a16-53c8-125f2b357e0d)
Jun 25 07:02:43 xoa xo-server[2661382]: at XapiError.wrap (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_XapiError.mjs:16:12)
Jun 25 07:02:43 xoa xo-server[2661382]: at default (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_getTaskResult.mjs:13:29)
Jun 25 07:02:43 xoa xo-server[2661382]: at Xapi._addRecordToCache (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1072:24)
Jun 25 07:02:43 xoa xo-server[2661382]: at file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1106:14
Jun 25 07:02:43 xoa xo-server[2661382]: at Array.forEach (<anonymous>)
Jun 25 07:02:43 xoa xo-server[2661382]: at Xapi._processEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1096:12)
Jun 25 07:02:43 xoa xo-server[2661382]: at Xapi._watchEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1269:14)
Jun 25 07:02:43 xoa xo-server[2661382]: at process.processTicksAndRejections (node:internal/process/task_queues:95:5) {
Jun 25 07:02:43 xoa xo-server[2661382]: code: 'HANDLE_INVALID',
Jun 25 07:02:43 xoa xo-server[2661382]: params: [ 'VDI', 'OpaqueRef:0671251f-d1f0-2a16-53c8-125f2b357e0d' ],
Jun 25 07:02:43 xoa xo-server[2661382]: call: undefined,
Jun 25 07:02:43 xoa xo-server[2661382]: url: undefined,
Jun 25 07:02:43 xoa xo-server[2661382]: task: task {
Jun 25 07:02:43 xoa xo-server[2661382]: uuid: '19892e76-0681-defa-7d86-8adff4c519df',
Jun 25 07:02:43 xoa xo-server[2661382]: name_label: 'Async.VDI.list_changed_blocks',
Jun 25 07:02:43 xoa xo-server[2661382]: name_description: '',
Jun 25 07:02:43 xoa xo-server[2661382]: allowed_operations: [],
Jun 25 07:02:43 xoa xo-server[2661382]: current_operations: {},
Jun 25 07:02:43 xoa xo-server[2661382]: created: '20250625T11:02:23Z',
Jun 25 07:02:43 xoa xo-server[2661382]: finished: '20250625T11:02:43Z',
Jun 25 07:02:43 xoa xo-server[2661382]: status: 'failure',
Jun 25 07:02:43 xoa xo-server[2661382]: resident_on: 'OpaqueRef:38c38c49-d15f-e42a-7aca-ae093fca92c6',
Jun 25 07:02:43 xoa xo-server[2661382]: progress: 1,
Jun 25 07:02:43 xoa xo-server[2661382]: type: '<none/>',
Jun 25 07:02:43 xoa xo-server[2661382]: result: '',
Jun 25 07:02:43 xoa xo-server[2661382]: error_info: [
Jun 25 07:02:43 xoa xo-server[2661382]: 'HANDLE_INVALID',
Jun 25 07:02:43 xoa xo-server[2661382]: 'VDI',
Jun 25 07:02:43 xoa xo-server[2661382]: 'OpaqueRef:0671251f-d1f0-2a16-53c8-125f2b357e0d'
Jun 25 07:02:43 xoa xo-server[2661382]: ],
Jun 25 07:02:43 xoa xo-server[2661382]: other_config: {},
Jun 25 07:02:43 xoa xo-server[2661382]: subtask_of: 'OpaqueRef:NULL',
Jun 25 07:02:43 xoa xo-server[2661382]: subtasks: [],
Jun 25 07:02:43 xoa xo-server[2661382]: backtrace: '(((process xapi)(filename ocaml/xapi-client/client.ml)(line 7))((process xapi)(filename ocaml/xapi-client/client.ml)(line 19))((process xapi)(filename ocaml/xapi-client/client.ml)(line 11643))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 144))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml)(line 39))((process xapi)(filename ocaml/xapi/rbac.ml)(line 188))((process xapi)(filename ocaml/xapi/rbac.ml)(line 197))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 77)))'
Jun 25 07:02:43 xoa xo-server[2661382]: }
Jun 25 07:02:43 xoa xo-server[2661382]: }
Jun 25 07:02:46 xoa xo-server[2661382]: 2025-06-25T11:02:46.867Z xo:xapi:vdi WARN invalid HTTP header in response body {
Jun 25 07:02:46 xoa xo-server[2661382]: body: 'HTTP/1.1 500 Internal Error\r\n' +
Jun 25 07:02:46 xoa xo-server[2661382]: 'content-length: 346\r\n' +
Jun 25 07:02:46 xoa xo-server[2661382]: 'content-type: text/html\r\n' +
Jun 25 07:02:46 xoa xo-server[2661382]: 'connection: close\r\n' +
Jun 25 07:02:46 xoa xo-server[2661382]: 'cache-control: no-cache, no-store\r\n' +
Jun 25 07:02:46 xoa xo-server[2661382]: '\r\n' +
Jun 25 07:02:46 xoa xo-server[2661382]: '<html><body><h1>HTTP 500 internal server error</h1>An unexpected error occurred; please wait a while and try again. If the problem persists, please contact your support representative.<h1> Additional information </h1>Db_exn.Read_missing_uuid("VDI", "", "OpaqueRef:0671251f-d1f0-2a16-53c8-125f2b357e0d")</body></html>'
Jun 25 07:02:46 xoa xo-server[2661382]: }
Jun 25 07:02:52 xoa xo-server[2661382]: 2025-06-25T11:02:52.120Z xo:backups:worker INFO backup has ended
Jun 25 07:02:52 xoa xo-server[2661382]: 2025-06-25T11:02:52.133Z xo:backups:worker INFO process will exit {
Jun 25 07:02:52 xoa xo-server[2661382]: duration: 54097776,
Jun 25 07:02:52 xoa xo-server[2661382]: exitCode: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: resourceUsage: {
Jun 25 07:02:52 xoa xo-server[2661382]: userCPUTime: 2370678,
Jun 25 07:02:52 xoa xo-server[2661382]: systemCPUTime: 266735,
Jun 25 07:02:52 xoa xo-server[2661382]: maxRSS: 37208,
Jun 25 07:02:52 xoa xo-server[2661382]: sharedMemorySize: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: unsharedDataSize: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: unsharedStackSize: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: minorPageFault: 22126,
Jun 25 07:02:52 xoa xo-server[2661382]: majorPageFault: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: swappedOut: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: fsRead: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: fsWrite: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: ipcSent: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: ipcReceived: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: signalsCount: 0,
Jun 25 07:02:52 xoa xo-server[2661382]: voluntaryContextSwitches: 2163,
Jun 25 07:02:52 xoa xo-server[2661382]: involuntaryContextSwitches: 658
Jun 25 07:02:52 xoa xo-server[2661382]: },
Jun 25 07:02:52 xoa xo-server[2661382]: summary: { duration: '54s', cpuUsage: '5%', memoryUsage: '36.34 MiB' }
Jun 25 07:02:52 xoa xo-server[2661382]: }
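To pull the relevant failures out of a long journal like the one above, a small script can help. This is a minimal Python sketch, assuming the journal has been saved to a text file first, e.g. as root with `journalctl -t xo-server --since "2025-06-25 06:55" > xo.log` (the identifier `xo-server` is taken from the log prefix). The script, function, and pattern names are hypothetical. It counts the error markers that appear in the failing run and lists the VDI references that XAPI rejected as `CBT metadata`.

```python
import re
import sys
from collections import Counter
from typing import Iterable

# Markers as worded in the log above; other xo-server versions may differ.
PATTERNS = {
    "invalid_http_header": re.compile(r"invalid HTTP header in response body"),
    "no_nbd": re.compile(r"NO_NBD_AVAILABLE"),
    "cbt_metadata_vdi": re.compile(
        r"VDI_INCOMPATIBLE_TYPE: \[ (OpaqueRef:[0-9a-f-]+); CBT metadata \]"
    ),
    "handle_invalid": re.compile(r"HANDLE_INVALID\(VDI, (OpaqueRef:[0-9a-f-]+)\)"),
}


def scan(lines: Iterable[str]) -> tuple[Counter, list[str]]:
    """Count marker occurrences per journal line and collect CBT-metadata VDI refs."""
    counts: Counter = Counter()
    cbt_refs: list[str] = []
    for line in lines:
        for name, pattern in PATTERNS.items():
            match = pattern.search(line)
            if match:
                counts[name] += 1
                if name == "cbt_metadata_vdi":
                    cbt_refs.append(match.group(1))
    return counts, cbt_refs


def main(path: str) -> None:
    with open(path, encoding="utf-8", errors="replace") as fh:
        counts, refs = scan(fh)
    for name, n in sorted(counts.items()):
        print(f"{name}: {n}")
    for ref in refs:
        print(f"VDI rejected as CBT metadata: {ref}")


# Only run when a log file path is given on the command line.
if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1])
```

Usage: `python3 scan_xo_log.py xo.log`. Running it over logs from several job runs makes it easy to check whether the `VDI_INCOMPATIBLE_TYPE ... CBT metadata` errors show up only on the delta runs.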
