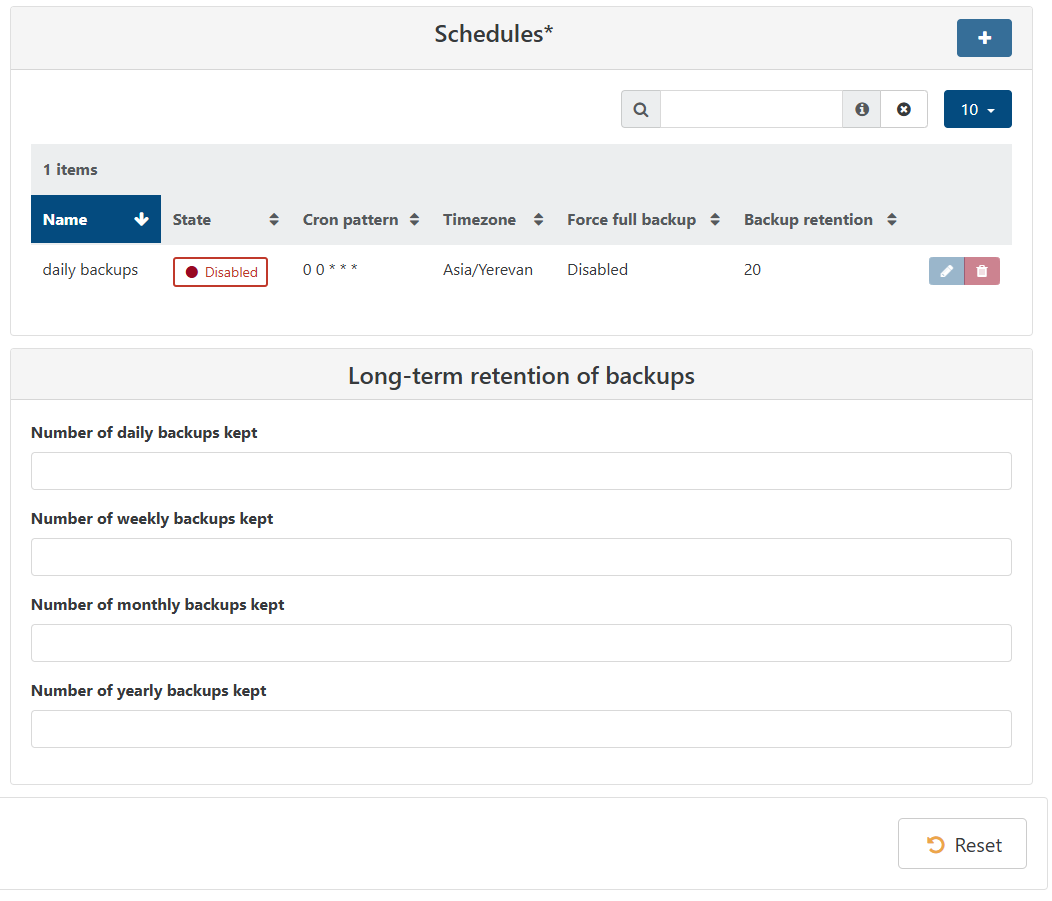

Long-term retention of backups

-

I compared the two jobs to make sure they were exactly the same.

Ran the new job. Success.

Ran the old job. Failed.

Removed all data from "Long-term retention of backups" in the old job:

- Number of daily backups kept: 7 to "blank"
- Number of weekly backups kept: 2 to "blank"
- Number of monthly backups kept: 1 to "blank"
- Number of yearly backups kept: 1 to "blank"

Ran the old job again. Success!

Put the data back to 7, 2, 1, 1.

Ran the old job. Failed.

Hmmm... I think there must be an undocumented feature here.

I will run the new job alongside the old job, an hour apart, to see what happens.

-

I'm getting something similar too. I get the "entries must be sorted in asc order 2025-01 2025-52" error (see below); hope that's helpful.

Based on the comment about removing the "Long-term retention of backups" data, I just removed the "Number of weekly backups kept" entry and re-ran; this allowed the backup to run. I kept my "Number of daily backups kept" limit, which I haven't reached yet.

"message": "backup VM", "start": 1735640611472, "status": "failure", "tasks": [ { "id": "1735640611748", "message": "snapshot", "start": 1735640611748, "status": "success", "end": 1735640616464, "result": "1f91db46-fbfe-ba09-9f8b-1c350d7493fd" }, { "data": { "id": "59e1ac20-f03d-45b4-953d-6fb7277537c2", "type": "remote", "isFull": true }, "id": "1735640616501", "message": "export", "start": 1735640616501, "status": "failure", "end": 1735640616505, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "entries must be sorted in asc order 2025-01 2025-52", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: entries must be sorted in asc order 2025-01 2025-52\n at getOldEntries (file:///home/root/xen-orchestra/@xen-orchestra/backups/_getOldEntries.mjs:116:18)\n at FullRemoteWriter._run (file:///home/root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/FullRemoteWriter.mjs:39:24)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async FullRemoteWriter.run (file:///home/root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/_AbstractFullWriter.mjs:6:14)" }

-

Thank you for reporting this fix. After I removed the "Number of weekly backups kept" entry, my backups are working again. Last night all our backups failed with the "entries must be sorted in asc order 2025-01 2025-52" error.

-

Now that it is the new year, I have added a new schedule to see if there will be any difference.

-

@David Thanks. My backups suddenly started failing today; after removing all retention settings except “daily backup”, they work perfectly, as they did last year.

-

@olivierlambert said in Long-term retention of backups:

@ph7 said in Long-term retention of backups:

VM_LACKS_FEATURE

This means there's no tools installed or working on that VM

The original job is still not running.

I was thinking about this and came up with this cunning plan:

- Shut down the VM to see if there is still a problem with the tools

- Run the original job manually

No, it did not work. It is still failing.

entries must be sorted in asc order 2025-01 2025-52

{ "data": { "mode": "delta", "reportWhen": "failure", "backupReportTpl": "mjml" }, "id": "1735908223694", "jobId": "6a12854b-cc07-4269-b780-fe87df6cd867", "jobName": "LongTerm-5+2", "message": "backup", "scheduleId": "4f8cc819-2cf7-42ac-94ae-ee005727ecf7", "start": 1735908223694, "status": "failure", "infos": [ { "data": { "vms": [ "1b75faf0-f4db-5aa9-d204-9bee6f2abfd6" ] }, "message": "vms" } ], "tasks": [ { "data": { "type": "VM", "id": "1b75faf0-f4db-5aa9-d204-9bee6f2abfd6", "name_label": "Debian" }, "id": "1735908225556", "message": "backup VM", "start": 1735908225556, "status": "failure", "tasks": [ { "id": "1735908225574", "message": "clean-vm", "start": 1735908225574, "status": "success", "end": 1735908225783, "result": { "merge": false } }, { "id": "1735908225605", "message": "clean-vm", "start": 1735908225605, "status": "success", "end": 1735908225828, "result": { "merge": false } }, { "id": "1735908226496", "message": "snapshot", "start": 1735908226496, "status": "success", "end": 1735908231603, "result": "4cf9de45-ff3f-f1a1-8a86-7b5cb03c9dd5" }, { "data": { "id": "446f82eb-2794-4c01-bd71-e247abd6a39c", "isFull": true, "type": "remote" }, "id": "1735908231603:0", "message": "export", "start": 1735908231603, "status": "failure", "end": 1735908231670, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "entries must be sorted in asc order 2025-01 2025-52", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: entries must be sorted in asc order 2025-01 2025-52\n at getOldEntries (file:///root/xen-orchestra/@xen-orchestra/backups/_getOldEntries.mjs:116:18)\n at IncrementalRemoteWriter._prepare (file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/IncrementalRemoteWriter.mjs:96:24)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)" } }, { "data": { "id": 
"0927f4dc-4476-4ac3-959a-bc37e85956df", "isFull": true, "type": "remote" }, "id": "1735908231605", "message": "export", "start": 1735908231605, "status": "failure", "end": 1735908231657, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "entries must be sorted in asc order 2025-01 2025-52", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: entries must be sorted in asc order 2025-01 2025-52\n at getOldEntries (file:///root/xen-orchestra/@xen-orchestra/backups/_getOldEntries.mjs:116:18)\n at IncrementalRemoteWriter._prepare (file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/IncrementalRemoteWriter.mjs:96:24)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)" } }, { "id": "1735908232625", "message": "clean-vm", "start": 1735908232625, "status": "success", "end": 1735908232700, "result": { "merge": false } }, { "id": "1735908232656", "message": "clean-vm", "start": 1735908232656, "status": "success", "end": 1735908232767, "result": { "merge": false } }, { "id": "1735908232981", "message": "snapshot", "start": 1735908232981, "status": "success", "end": 1735908234120, "result": "f614fec5-87a5-c227-4e0c-8fa76acbb271" }, { "data": { "id": "446f82eb-2794-4c01-bd71-e247abd6a39c", "isFull": true, "type": "remote" }, "id": "1735908234121", "message": "export", "start": 1735908234121, "status": "failure", "end": 1735908234135, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "entries must be sorted in asc order 2025-01 2025-52", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: entries must be sorted in asc order 2025-01 2025-52\n at getOldEntries (file:///root/xen-orchestra/@xen-orchestra/backups/_getOldEntries.mjs:116:18)\n at IncrementalRemoteWriter._prepare 
(file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/IncrementalRemoteWriter.mjs:96:24)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)" } }, { "data": { "id": "0927f4dc-4476-4ac3-959a-bc37e85956df", "isFull": true, "type": "remote" }, "id": "1735908234121:0", "message": "export", "start": 1735908234121, "status": "failure", "end": 1735908234129, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "entries must be sorted in asc order 2025-01 2025-52", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: entries must be sorted in asc order 2025-01 2025-52\n at getOldEntries (file:///root/xen-orchestra/@xen-orchestra/backups/_getOldEntries.mjs:116:18)\n at IncrementalRemoteWriter._prepare (file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/IncrementalRemoteWriter.mjs:96:24)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)" } }, { "id": "1735908234974", "message": "clean-vm", "start": 1735908234974, "status": "success", "end": 1735908235063, "result": { "merge": false } }, { "id": "1735908234994", "message": "clean-vm", "start": 1735908234994, "status": "success", "end": 1735908235091, "result": { "merge": false } }, { "id": "1735908235345", "message": "snapshot", "start": 1735908235345, "status": "success", "end": 1735908236519, "result": "319c12f9-6f35-411d-2a99-b2c55f7d469b" }, { "data": { "id": "446f82eb-2794-4c01-bd71-e247abd6a39c", "isFull": true, "type": "remote" }, "id": "1735908236520", "message": "export", "start": 1735908236520, "status": "failure", "end": 1735908236533, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "entries must be sorted in asc order 2025-01 2025-52", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: entries must be sorted in asc order 
2025-01 2025-52\n at getOldEntries (file:///root/xen-orchestra/@xen-orchestra/backups/_getOldEntries.mjs:116:18)\n at IncrementalRemoteWriter._prepare (file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/IncrementalRemoteWriter.mjs:96:24)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)" } }, { "data": { "id": "0927f4dc-4476-4ac3-959a-bc37e85956df", "isFull": true, "type": "remote" }, "id": "1735908236520:0", "message": "export", "start": 1735908236520, "status": "failure", "end": 1735908236527, "result": { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "entries must be sorted in asc order 2025-01 2025-52", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: entries must be sorted in asc order 2025-01 2025-52\n at getOldEntries (file:///root/xen-orchestra/@xen-orchestra/backups/_getOldEntries.mjs:116:18)\n at IncrementalRemoteWriter._prepare (file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/IncrementalRemoteWriter.mjs:96:24)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)" } } ], "warnings": [ { "data": { "attempt": 1, "error": "all targets have failed, step: writer.prepare()" }, "message": "Retry the VM backup due to an error" }, { "data": { "attempt": 2, "error": "all targets have failed, step: writer.prepare()" }, "message": "Retry the VM backup due to an error" } ], "end": 1735908237352, "result": { "errors": [ { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "entries must be sorted in asc order 2025-01 2025-52", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: entries must be sorted in asc order 2025-01 2025-52\n at getOldEntries (file:///root/xen-orchestra/@xen-orchestra/backups/_getOldEntries.mjs:116:18)\n at IncrementalRemoteWriter._prepare 
(file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/IncrementalRemoteWriter.mjs:96:24)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)" }, { "generatedMessage": false, "code": "ERR_ASSERTION", "actual": false, "expected": true, "operator": "strictEqual", "message": "entries must be sorted in asc order 2025-01 2025-52", "name": "AssertionError", "stack": "AssertionError [ERR_ASSERTION]: entries must be sorted in asc order 2025-01 2025-52\n at getOldEntries (file:///root/xen-orchestra/@xen-orchestra/backups/_getOldEntries.mjs:116:18)\n at IncrementalRemoteWriter._prepare (file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_writers/IncrementalRemoteWriter.mjs:96:24)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)" } ], "message": "all targets have failed, step: writer.prepare()", "name": "Error", "stack": "Error: all targets have failed, step: writer.prepare()\n at IncrementalXapiVmBackupRunner._callWriters (file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/_Abstract.mjs:64:13)\n at process.processTicksAndRejections (node:internal/process/task_queues:95:5)\n at async IncrementalXapiVmBackupRunner._copy (file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/IncrementalXapi.mjs:42:5)\n at async IncrementalXapiVmBackupRunner.run (file:///root/xen-orchestra/@xen-orchestra/backups/_runners/_vmRunners/_AbstractXapi.mjs:396:9)\n at async file:///root/xen-orchestra/@xen-orchestra/backups/_runners/VmsXapi.mjs:166:38" } } ], "end": 1735908237354 }

Maybe I'm wrong, but I think we have a problem here. It could have something to do with the new year.
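The log above repeats the same assertion for every export attempt, so it can help to collapse it to the distinct failure messages. Here is a minimal Python sketch of that, assuming only the shape visible in the log (`status`, nested `tasks`, and a `result` that is either a string or an object with a `message`). The function name `collect_failures` and the trimmed `sample_log` are made up for illustration; this is not part of Xen Orchestra.

```python
from collections import Counter


def collect_failures(task, counts=None):
    """Recursively count (task name, error message) pairs for failed tasks."""
    if counts is None:
        counts = Counter()
    result = task.get("result")
    # Successful snapshot tasks store a plain string in "result", so only
    # dict results are inspected for an error message.
    if task.get("status") == "failure" and isinstance(result, dict):
        message = result.get("message")
        if message:
            counts[(task.get("message"), message)] += 1
    for sub_task in task.get("tasks", []):
        collect_failures(sub_task, counts)
    return counts


# Hand-trimmed stand-in with the same nesting as the log above (hypothetical).
ASSERTION = "entries must be sorted in asc order 2025-01 2025-52"
sample_log = {
    "message": "backup",
    "status": "failure",
    "tasks": [
        {
            "message": "backup VM",
            "status": "failure",
            "tasks": [
                {"message": "snapshot", "status": "success", "result": "4cf9de45"},
                {"message": "export", "status": "failure", "result": {"message": ASSERTION}},
                {"message": "export", "status": "failure", "result": {"message": ASSERTION}},
            ],
            "result": {"message": "all targets have failed, step: writer.prepare()"},
        }
    ],
}

for (task_name, message), count in collect_failures(sample_log).items():
    print(f"{count} x {task_name}: {message}")
```

To run it against a real log, load the JSON with `json.load` and pass the resulting dict to `collect_failures` instead of `sample_log`.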

-

@ph7 This is being investigated by the XOA team. We will update this thread when more information is available.

-


@Danp Any news?

I have had no delta backups since the new year, and I don't know what will happen if I set "Backup retention" to 0.

I don't see any option to disable retention temporarily, so I disabled daily backups. We have full monthly backups, but daily and weekly backups stopped working, and it's disturbing.

We use the open-source edition; no offence or pressure intended. I'm just curious, since it may be reasonable to delete all backups after the next monthly full backup is complete.

-

@al-indigo I believe that a fix should be out by EOM.

-

@Danp Could you please tell me what will happen if I set "0" in the retention field and enable backups again? Will it erase all my delta backups there?

-

@al-indigo AFAIK, the backup retention settings from the schedule won't be affected by changing the LTR values to zeros.

-

@Danp Do you have any update on the estimated release date for the fix?

-

@al-indigo The fix is included in XOA 5.103.0, which was released last week.

https://github.com/vatesfr/xen-orchestra/blob/master/CHANGELOG.md

[Backup/LTR] Fix computation for the last week of the year (PR #8269)
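For anyone wondering why the last week of the year is tricky: the thread does not show the actual code change in PR #8269, so the following is only a generic Python sketch of one way weekly keys can stop sorting in ascending order around New Year. Under ISO 8601, 30 December 2024 already belongs to week 1 of 2025, so a key that pairs the calendar year with the ISO week number goes backwards. The helper names (`iso_week_key`, `mixed_week_key`, `is_ascending`) are hypothetical, and the sketch does not try to reproduce the exact `2025-01 2025-52` pair from the error.

```python
from datetime import date


def iso_week_key(d: date) -> str:
    """Weekly key built from the ISO week-numbering year and ISO week."""
    iso_year, iso_week, _ = d.isocalendar()
    return f"{iso_year}-{iso_week:02d}"


def mixed_week_key(d: date) -> str:
    """Deliberately inconsistent key: calendar year with ISO week number."""
    return f"{d.year}-{d.isocalendar()[1]:02d}"


def is_ascending(keys) -> bool:
    """True when every key is >= the one before it."""
    return all(a <= b for a, b in zip(keys, keys[1:]))


# Backup dates in chronological order across the 2024/2025 boundary.
days = [date(2024, 12, 22), date(2024, 12, 29), date(2024, 12, 30), date(2025, 1, 6)]

consistent = [iso_week_key(d) for d in days]
inconsistent = [mixed_week_key(d) for d in days]

print(consistent, is_ascending(consistent))
print(inconsistent, is_ascending(inconsistent))
```

The consistent keys stay in order because the year and the week number come from the same calendar rule. Any check of the "entries must be sorted" kind would trip on the inconsistent keys as soon as a backup lands in the week that straddles the year boundary.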

-

@Danp Thank you very much! Missed it

-

olivierlambert marked this topic as a question

-

olivierlambert has marked this topic as solved