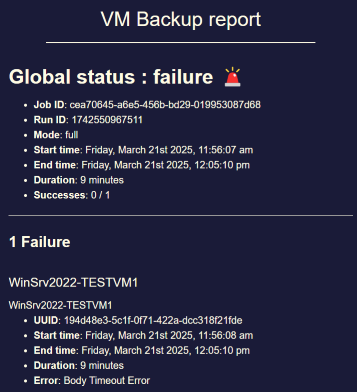

Potential bug with Windows VM backup: "Body Timeout Error"

-

@olivierlambert said in Potential bug with Windows VM backup: "Body Timeout Error":

I think we have a lead to explore, we'll keep you posted when we have a branch to test

Sure! Thank you very much.

When a branch is available, I will be happy to compile, test, and provide any information or logs needed.

-

I did 2 more tests.

- using full backup with encryption disabled on the remote (had it enabled before) --> same issue

- switching from zstd to gzip --> same issue

-

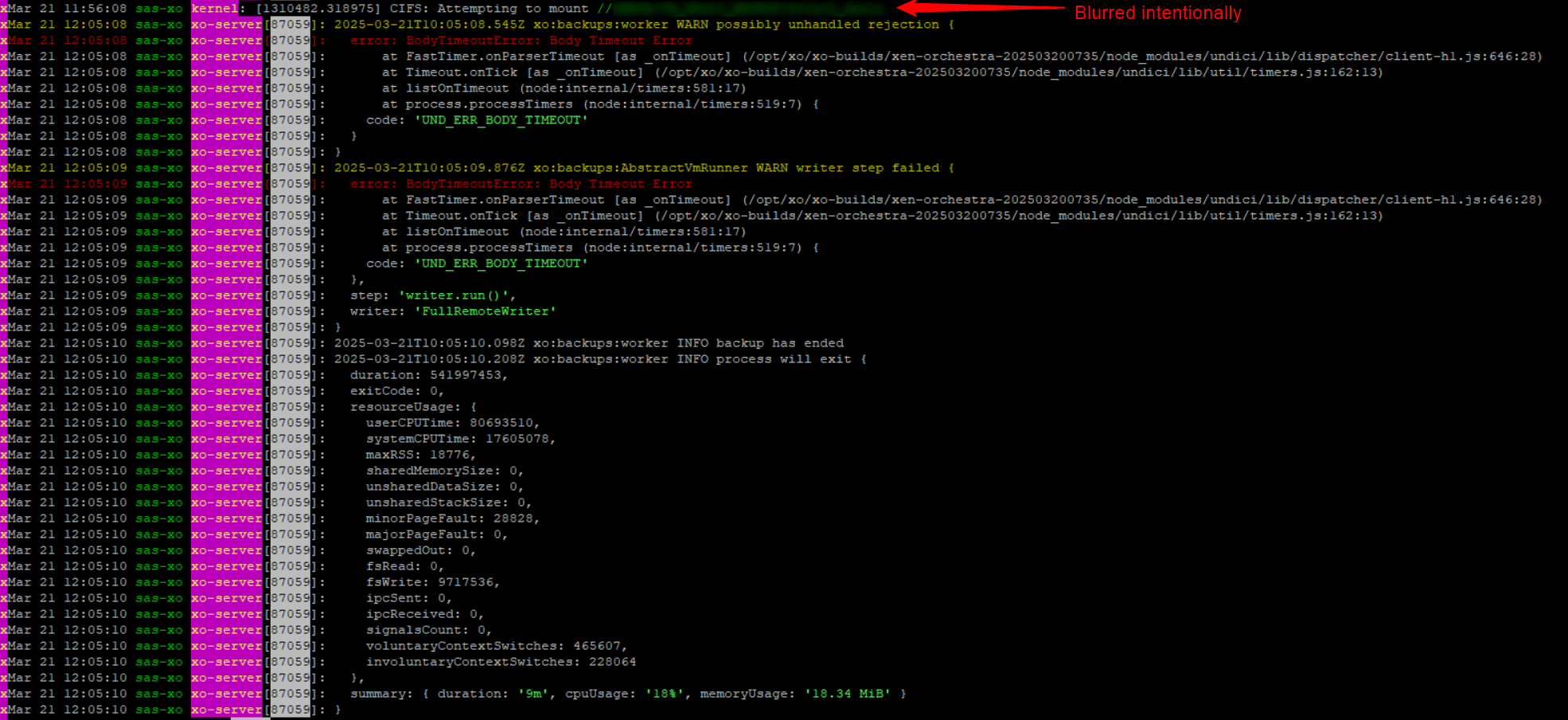

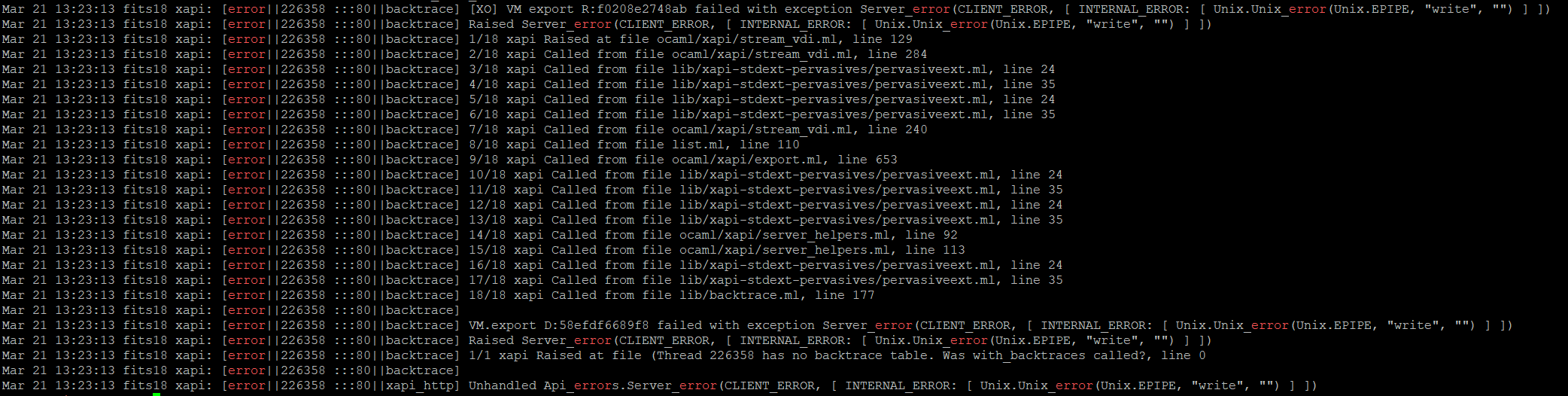

Hey, I did some more digging.

I found some log entries that were written at the moment the backup job threw the "body timeout error". (Those blocks of log entries appear for all VMs that show this problem.)

    grep -i "VM.export" xensource.log | grep -i "error"

    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 HTTPS 192.168.60.30->:::80|[XO] VM export R:edfb08f9b55b|xapi_compression] nice failed to compress: exit code 70
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] [XO] VM export R:edfb08f9b55b failed with exception Server_error(CLIENT_ERROR, [ INTERNAL_ERROR: [ Unix.Unix_error(Unix.EPIPE, "write", "") ] ])
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] Raised Server_error(CLIENT_ERROR, [ INTERNAL_ERROR: [ Unix.Unix_error(Unix.EPIPE, "write", "") ] ])
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 1/16 xapi Raised at file ocaml/xapi/stream_vdi.ml, line 127
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 2/16 xapi Called from file ocaml/xapi/stream_vdi.ml, line 307
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 3/16 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 24
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 4/16 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 39
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 5/16 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 24
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 6/16 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 39
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 7/16 xapi Called from file ocaml/xapi/stream_vdi.ml, line 263
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 8/16 xapi Called from file list.ml, line 110
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 9/16 xapi Called from file ocaml/xapi/export.ml, line 707
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 10/16 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 24
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 11/16 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 39
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 12/16 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 24
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 13/16 xapi Called from file ocaml/libs/xapi-stdext/lib/xapi-stdext-pervasives/pervasiveext.ml, line 39
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 14/16 xapi Called from file ocaml/xapi/server_helpers.ml, line 75
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 15/16 xapi Called from file ocaml/xapi/server_helpers.ml, line 97
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace] 16/16 xapi Called from file ocaml/libs/log/debug.ml, line 250
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|VM.export D:6f2ee2bc66b8|backtrace]
    Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 :::80|handler:http/get_export D:b71f8095b88f|backtrace] VM.export D:6f2ee2bc66b8 failed with exception Server_error(CLIENT_ERROR, [ INTERNAL_ERROR: [ Unix.Unix_error(Unix.EPIPE, "write", "") ] ])

daemon.log shows:

    Nov 4 08:02:31 dat-xcpng01 forkexecd: [error||0 ||forkexecd] 135416 (/bin/nice -n 19 /usr/bin/ionice -c 3 /usr/bin/zstd) exited with code 70

Maybe this gives some insights.

To me it appears that the issue is caused by the compression (I had zstd enabled during the backup run).

Best regards
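For anyone who wants to correlate these entries across several failed VMs, here is a rough Python sketch that pulls the compressor exit code and the task references out of lines like the ones quoted above. The function names and regular expressions are my own and only assume the line layout shown in this post; other XCP-ng versions may log differently.

```python
import re

# Matches the forkexecd layout quoted above: "<pid> (<command>) exited with code <n>".
EXIT_RE = re.compile(r"(?P<pid>\d+) \((?P<cmd>[^)]*)\) exited with code (?P<code>\d+)")
# Matches xapi task references such as "R:edfb08f9b55b" or "D:6f2ee2bc66b8".
TASK_RE = re.compile(r"\b([RD]:[0-9a-f]{12})\b")


def parse_forkexecd_exit(line):
    """Return (pid, command, exit_code) for a forkexecd exit line, else None."""
    m = EXIT_RE.search(line)
    if m is None:
        return None
    return int(m.group("pid")), m.group("cmd"), int(m.group("code"))


def task_refs(line):
    """Return every xapi task reference found in a log line, in order."""
    return TASK_RE.findall(line)


# Sample lines copied from the logs in this post.
daemon_line = (
    "Nov 4 08:02:31 dat-xcpng01 forkexecd: [error||0 ||forkexecd] 135416 "
    "(/bin/nice -n 19 /usr/bin/ionice -c 3 /usr/bin/zstd) exited with code 70"
)
xapi_line = (
    "Nov 4 08:02:33 dat-xcpng01 xapi: [error||141766 HTTPS 192.168.60.30->:::80|"
    "[XO] VM export R:edfb08f9b55b|xapi_compression] nice failed to compress: exit code 70"
)

print(parse_forkexecd_exit(daemon_line))
print(task_refs(xapi_line))  # ['R:edfb08f9b55b']
```

Grouping the xapi lines by task reference makes it easier to see which export each compressor failure belongs to.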

//EDIT: This is what ChatGPT thinks about this (I know AI responses have to be taken with a grain of salt):

Why would there be long “no data” gaps? With full exports, XAPI compresses on the host side. When it encounters very large runs of zeros / sparse/unused regions, compression may yield almost nothing for long stretches. If the stream goes quiet longer than Undici’s bodyTimeout (default ~5 minutes), XO aborts. This explains why it only hits some big VMs and why delta (NBD) backups are fine.

-

@MajorP93 said in Potential bug with Windows VM backup: "Body Timeout Error":

Why would there be long “no data” gaps? With full exports, XAPI compresses on the host side. When it encounters very large runs of zeros / sparse/unused regions, compression may yield almost nothing for long stretches. If the stream goes quiet longer than Undici’s bodyTimeout (default ~5 minutes), XO aborts. This explains why it only hits some big VMs and why delta (NBD) backups are fine.

I think that's exactly the issue here (if I remember our internal discussion correctly).
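To illustrate the mechanism described in the quote, here is a small Python model of an idle timer that is reset by every body chunk. It is only a sketch: the 300-second value is the "~5 minutes" default mentioned above, the arrival times are made up, and the function name is hypothetical. It does not call Undici or XO.

```python
BODY_TIMEOUT_S = 300  # assumption: the "~5 minutes" default quoted above


def first_idle_timeout(chunk_times, timeout_s=BODY_TIMEOUT_S, start=0.0):
    """Return the time at which an idle timer would fire, or None.

    chunk_times: ascending arrival times (in seconds) of body chunks.
    Every chunk resets the timer, so only the gap between consecutive
    chunks matters, not the total duration of the transfer.
    """
    last = start
    for t in chunk_times:
        if t - last > timeout_s:
            return last + timeout_s
        last = t
    return None


# Made-up arrival times for illustration.
steady = [i * 60 for i in range(1, 121)]  # one chunk per minute for two hours
sparse = [60, 120, 500, 560]              # 380 s of silence after t=120

print(first_idle_timeout(steady))  # None
print(first_idle_timeout(sparse))  # 420
```

The two-hour transfer never trips the timer, while the short one with a single long quiet stretch does. That matches the observation that only some large VMs are affected.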

-

@olivierlambert said in Potential bug with Windows VM backup: "Body Timeout Error":

@MajorP93 said in Potential bug with Windows VM backup: "Body Timeout Error":

Why would there be long “no data” gaps? With full exports, XAPI compresses on the host side. When it encounters very large runs of zeros / sparse/unused regions, compression may yield almost nothing for long stretches. If the stream goes quiet longer than Undici’s bodyTimeout (default ~5 minutes), XO aborts. This explains why it only hits some big VMs and why delta (NBD) backups are fine.

I think that's exactly the issue here (if I remember our internal discussion correctly).

Do you think it could be a good idea to make the timeout configurable via Xen Orchestra?

-

I can't answer for my devs. Let's wait for them to come here and provide an answer.

-

Going back up to the top of the thread: removing compression was what fixed this for me, and my backups still report success (maybe I'd better check).

I wish there were an easier way to "right size" the storage on these Windows VMs. The suggestion is to copy the VM to a new, smaller "disk", but I haven't looked into what is involved in getting this done.

-

We will fix it for compressed exports. We are now pretty sure we know where the issue is.

-

Happy to hear that there's a potential lead. I'm also happy I found this thread, so I can kick back and wait for Vates to fix it.

-

I can imagine that one fix would be to send "keepalive" packets alongside the XCP-ng VM export data stream so that the timeout on the XO side does not occur.
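As a rough illustration of that idea, the Python sketch below models timing only. Given made-up chunk arrival times, it schedules a keepalive whenever the stream has been silent for a chosen interval, then checks the largest remaining gap. The function names and the 100-second interval are hypothetical. Whether keepalive bytes could actually be carried inside the export stream depends on the stream format, and that is not something I know.

```python
def with_keepalives(chunk_times, interval_s, start=0.0):
    """Merge data arrivals with keepalives scheduled during silent stretches.

    Returns a list of ("data", t) and ("keepalive", t) events in time order.
    A keepalive is added after every interval_s seconds of silence, so no two
    consecutive events are more than interval_s apart.
    """
    events = []
    last = start
    for t in chunk_times:
        while t - last > interval_s:
            last += interval_s
            events.append(("keepalive", last))
        events.append(("data", t))
        last = t
    return events


def max_gap(events, start=0.0):
    """Return the longest silence between consecutive events."""
    last, worst = start, 0.0
    for _, t in events:
        worst = max(worst, t - last)
        last = t
    return worst


# Made-up arrival times with 380 s of silence after t=120.
sparse = [60, 120, 500, 560]
timeline = with_keepalives(sparse, interval_s=100)

print(timeline)
print(max_gap(timeline))
```

With the keepalives in place, the longest silence drops from 380 s to the chosen interval, which would stay under a 5-minute idle timeout.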