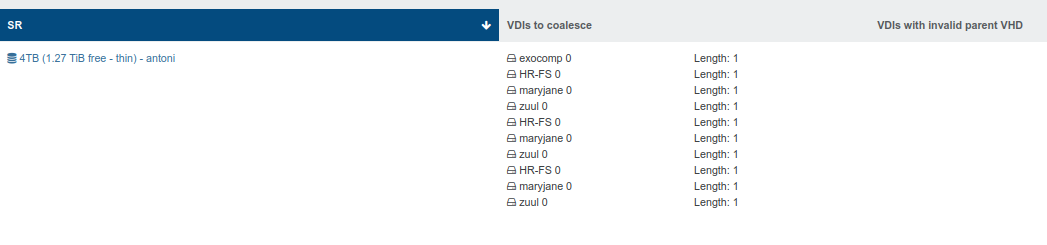

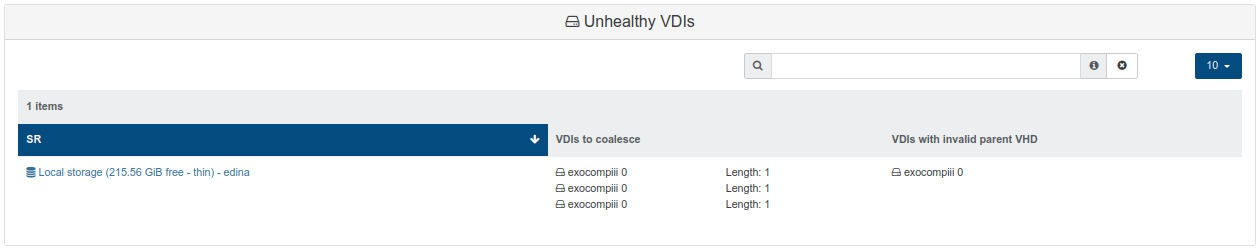

Every VM in a CR backup job creates an "Unhealthy VDI"

-

Three separate hosts are involved. HR-FS and zuul are on one, maryjane on the second, exocomp on the third.

Total, over 17,000 lines in SMlog for the hour during the CR job. No errors, no corruptions, no exceptions.

Actually, there are some reported exceptions and corruptions on farmer, but none that involve these VMs or this CR job. A fifth VM not part of the job has a corruption that I'm still investigating, but it's on a test VM I don't care about. The VM HR-FS does have a long-standing coalesce issue where two .vhd files always remain, the logs showing:

    FAILED in util.pread: (rc 22) stdout: '/var/run/sr-mount/7bc12cff- ... -ce096c635e66.vhd not created by xen; resize not supported

... but this long predates the CR job, and seems related to the manner in which the original .vhd file was created on the host. It doesn't seem relevant, since three other VMs with no history of exceptions/errors in SMlog are showing the same unhealthy VDI behaviour, and two of those aren't even on the same host. One is on a separate pool.

SMlog is thick and somewhat inscrutable to me. Is there a specific message I should be looking for?

-

Can you grep on the word `exception`? (with `-i` to make sure you get them all)

-

    [09:24 farmer ~]# zcat /var/log/SMlog.{31..2}.gz | cat - /var/log/SMlog.1 /var/log/SMlog | grep -i "nov 12 21" | grep -i -e exception -e e.x.c.e.p.t.i.o.n
    Nov 12 21:12:51 farmer SMGC: [17592] * E X C E P T I O N *
    Nov 12 21:12:51 farmer SMGC: [17592] coalesce: EXCEPTION <class 'util.CommandException'>, Invalid argument
    Nov 12 21:12:51 farmer SMGC: [17592] raise CommandException(rc, str(cmdlist), stderr.strip())
    Nov 12 21:16:52 farmer SMGC: [17592] * E X C E P T I O N *
    Nov 12 21:16:52 farmer SMGC: [17592] leaf-coalesce: EXCEPTION <class 'util.SMException'>, VHD *6c411334(8.002G/468.930M) corrupted
    Nov 12 21:16:52 farmer SMGC: [17592] raise util.SMException("VHD %s corrupted" % self)
    Nov 12 21:16:54 farmer SMGC: [17592] * E X C E P T I O N *
    Nov 12 21:16:54 farmer SMGC: [17592] coalesce: EXCEPTION <class 'util.SMException'>, VHD *6c411334(8.002G/468.930M) corrupted
    Nov 12 21:16:54 farmer SMGC: [17592] raise util.SMException("VHD %s corrupted" % self)

None relevant to the CR job. The one at 21:12:51 local time is related to the 'resize not supported' issue I mention above. The two at 21:16:52 and 21:16:54 are related to a fifth VM not in the CR job (the test VM I don't care about, but may continue to investigate).

The other two hosts' SMlog are clean.
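The two-stage grep above also returns the banner and traceback lines. As a rough sketch (the function name `smlog_exceptions` is made up for illustration, and it assumes the summary lines always contain `EXCEPTION <class`, as they do in the output above), the filter can be narrowed to the one-line summaries that name the operation and the exception class:

```shell
# Read SMlog text on stdin and print only the exception summary lines
# whose timestamp starts with the prefix given as $1 (e.g. "Nov 12 21").
smlog_exceptions() {
    grep -i "^$1" | grep -e 'EXCEPTION <class'
}

# Hypothetical usage with the same log files as the command above:
#   zcat /var/log/SMlog.{31..2}.gz | cat - /var/log/SMlog.1 /var/log/SMlog \
#       | smlog_exceptions "Nov 12 21"
```

This drops the `* E X C E P T I O N *` banners and the `raise ...` lines, so each exception shows up once per occurrence.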

-

If it's any help, check out my post: https://xcp-ng.org/forum/topic/11525/unhealthy-vdis/4

-

Thank you. I've had a look at your logs in that post, and they don't bear much resemblance to mine. Your VDI is qcow2, mine are vhd. The specific errors you see in your logs:

    Child process exited with error
    Couldn't coalesce online
    The request is missing the serverpath parameter

... don't appear in my logs, so at least on first blush it doesn't appear to be related to either of the two exceptions in my own logs... and >>mine<< don't appear to be related to the subject of my OP.

@joeymorin said:

These fixes, would they stop the accumulation of unhealthy VDIs for existing CR chains already manifesting them? Or should I purge all of the CR VMs and snapshots?

Anyone have any thoughts on this? I can let another incremental run tonight with these latest fixes already applied. If I get another round of unhealthy VDIs added to the pile, I could try removing all trace of the existing CR chains and their snapshots, and then let the CR job try again from an empty slate...

-

The issue is unrelated to XO; it's a storage issue with a corrupted VHD. As long as it's there and not removed, nothing will coalesce.

-

Are you sure? Because that doesn't appear to be true. Coalescing works just fine on every other VM and VDI I have in my test environment. I can create and later delete snapshots on any VM and they coalesce in short order.

If you're suggesting that an issue with coalescing one VDI chain could adversely affect or even halt coalescing on completely unrelated VDI chains on the same host, on a different host in the same pool, and in a completely different pool, I have to say I can't fathom how that could be so. If the SR framework were so brittle, I'd have to reconsider XCP-ng as a virtualisation platform.

If someone can explain how I'm wrong, I am more than willing to listen and be humbled.

As I've tried to explain, the two VDIs that don't coalesce are not related to the CR job. They amount to a base copy and the very first snapshot that was taken before the first boot of the VM. The SMlog error mentions 'vhd not created by xen; resize not supported'. I deleted that snapshot a long time ago. The delete 'worked', but the coalesce never happens as a result of the 'not created by xen' error.

I can even create snapshots in this very VM (the one with the persistent base copy and successfully-deleted-snapshots VDIs). Later I can delete those snapshots, and they coalesce happily and quickly. They all coalesce into that first snapshot VDI deleted long ago. It is that VDI which is not coalescing into the base copy.

The other exception is on a VDI belonging to a VM which is not part of the CR job.

I'd like to return to the OP. Why does every incremental CR leave behind a persistent unhealthy VDI? This cannot be related to a failed coalesce on one VM on one host in one pool. All my pools, hosts, and VMs are affected.

-

I'm not sure I follow everything, but I can answer the question: not on a different pool, but coalesce for a whole pool with a shared SR, yes (a broken VHD will break coalesce on the entire SR it resides on, not on the others).

CR shouldn't leave any persistent coalesce. Yes, as soon as a snapshot is deleted, it will need to coalesce, but after a while the coalesce should finish, unless your CR runs so often that a new coalesce is needed almost as soon as the previous one completes, and so on.

-

I can answer the question: not on a different pool, but coalesce for a whole pool with a shared SR, yes (a broken VHD will break coalesce on the entire SR it resides on, not on the others).

There are no shared SR in my test environment, except for a CIFS ISO SR.

This is a thumbnail sketch:

Pool X:

- Host A
  - Local SR A1
    - Running VMs:
      - VM 1 (part of CR job)
      - VM 2 (part of CR job)
      - VM 3
    - Halted VMs:
      - Several
- Host B
  - Local SR B1
    - Running VMs:
      - VM 4 (part of CR job)
      - VM 5
      - VM 6
    - Halted VMs:
      - Several
  - Local SR B2
    - Destination SR for CR job
    - No other VMs, halted or running

Pool Y:

- Host C (single-host pool)
  - Local SR C1
    - Running VMs:
      - VM 7 (part of CR job) (also, instance of XO managing the CR job)
    - Halted VMs:
      - Several

There are other pools/hosts, but they're not implicated in any of this.

All of the unhealthy VDIs are on local SR B2, the destination for the CR job. How can an issue with coalescing a VDI on local SR A1 cause that? How can a VM's VDI on pool Y, host C, local SR C1, replicated to pool X, host B, local SR B2, be affected by a coalesce issue with a VDI on pool X, host A, local SR A1?

Regarding shared SR, I'm somewhat gobsmacked by your assertion that a bad VDI can basically break an entire shared SR. Brittle doesn't quite capture it. I honestly don't think I could recommend XCP-ng to anyone if that were really true. At least for now, I can say that assertion is demonstrably false when it comes to local SR. As I've mentioned previously, I can create and destroy snapshots on any VM/host/SR in my test environment, and they coalesce quickly and without a fuss, >>including<< snapshots against the VMs which are suffering exceptions as detailed above.

By the way, the CR ran again this evening. Four more unhealthy VDIs.

Tomorrow I will purge all CR VMs and snapshots, and start over. The first incremental will be Saturday night. We'll see.

I've also spun up a new single host pool, new XO instance, and a new CR job to see if it does the same thing, or if it behaves as everyone seems to say it should behave. I'm more interested in learning why my test environment >>doesn't<<.

-

@joeymorin said in Every VM in a CR backup job creates an "Unhealthy VDI":

How can an issue with coalescing a VDI on local SR A1 cause that?

It can't. Coalesce issues are local to their SR. But you must fix those anyway, because they will cause big trouble over time.

How can a VM's VDI on pool Y, host C, local SR C1, replicated to pool X, host B, local SR B2, be affected by a coalesce issue with a VDI on pool X, host A, local SR A1?

It can't.

But it depends on what you mean by "affecting". Coalesce is a normal process that starts as soon as you remove a snapshot. Snapshot removal is done on both source and destination during a CR.

CR isn't a different operation than doing a regular snapshot, there's nothing special about it.

So why does it fail in your specific case? I would first clean things up: remove the corrupted VHD, check that all chains are clear everywhere, and start a CR job again. I'd bet on an environment issue triggering a specific bug, but there are so many factors that it's really hard to answer.
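One way to check the chains on a file-based local SR is to print the VHD parent/child tree with `vhd-util scan`. The following is only a sketch: it assumes the SR is mounted under `/var/run/sr-mount/<SR UUID>` (as in the SMlog path quoted earlier in the thread), the function name and the `VHD_UTIL` override are hypothetical, and the flags should be confirmed against the `vhd-util scan` usage text on your host.

```shell
# Print the VHD tree for a file-based SR, given the SR UUID as $1.
# VHD_UTIL is an illustrative override so the command can be swapped out
# (for example with "echo" for a dry run); it defaults to vhd-util.
scan_vhd_chains() {
    sr_uuid="$1"
    # -f: fast scan, -p: pretty-print the tree, -m: match pattern.
    # The pattern is quoted so vhd-util, not the shell, expands it.
    "${VHD_UTIL:-vhd-util}" scan -f -p -m "/var/run/sr-mount/${sr_uuid}/*.vhd"
}

# Hypothetical usage on the host (UUID placeholder is not a real value):
#   scan_vhd_chains <SR UUID>
```

A chain that never shortens after a snapshot is deleted is the one to look at in SMlog.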

-

@olivierlambert said in Every VM in a CR backup job creates an "Unhealthy VDI":

So why does it fail in your specific case? I would first clean things up: remove the corrupted VHD, check that all chains are clear everywhere, and start a CR job again. I'd bet on an environment issue triggering a specific bug, but there are so many factors that it's really hard to answer.

How about this specific case?:

Completely different test environment. Completely different host (single-host pool), completely different local SR, completely different VMs, NO exceptions in SMlog, similar CR job. Same result. Persistent unhealthy VDI reported in Dashboard->Health, one for each incremental of each VM in the CR job (currently only one, the XO VM).

Since nobody seems to think this should be happening, are there any thoughts on what I'm missing? Is there some secret incantation required on job setup?:

-

@joeymorin try ticking the "merge backup synchronously" option to keep other jobs from interfering with this one?

-

There are no other jobs.

I've now spun up a completely separate, fresh install of XCP-ng 8.3 to test the symptoms mentioned in the OP.

Steps taken

- Installed XCP-ng 8.3
- Text console over SSH
  - `xe host-disable`
  - `xe host-evacuate` (not needed yet of course since it's a brand-new install)
  - `yum update`
- Reboot
- Text console over SSH again
  - Created local ISO SR
    - `xe sr-create name-label="Local ISO" type=iso device-config:location=/opt/var/iso_repository device-config:legacy_mode=true content-type=iso`
    - `cd /opt/var/iso_repository`
    - `wget # ISO for Ubuntu Server`
    - `xe sr-scan uuid=07dcbf24-761d-1332-9cd3-d7d67de1aa22`
- XO Lite
  - New VM
    - Booted from ISO, installed Server
- Text console to VM over SSH
  - apt update/upgrade
  - installed xe-guest-utilities
  - Installed XO from source (ronivay script)
- XO
  - Import ISO for Ubuntu Mate
  - New VM
    - Booted from ISO, installed Mate
      - apt update/upgrade
      - xe-guest-utilities
  - New CR backup job
    - Nightly
      - VMs: 1 (Mate)
      - Retention: 15
      - Full: every 7

Exact same behaviour. After the first (full) CR job run, each additional (incremental) run results in one more 'unhealthy VDI'.

I've engaged in no other shenanigans. Plain vanilla XCP-ng and XO. There are only two VMs on this host, the XO from source VM, and a desktop OS VM which is the only target of the CR job. There are zero exceptions in SMlog.

What do you need to see?