-

I did a fresh install using "xcp-ng-8.2.1.iso". However, after doing that it seems that I have no local storage, and I get a couple of error messages.

More info:

- I installed on a 500GB SATA SSD previously used for other purposes

- I have other SATA and NVMe drives in the system that are ZFS-formatted. Those drives are in use when I boot the system as a TrueNAS store (SO NOT USED BY XCP-NG)

- during boot I get the following alarms:

- EFI_MEMMAP is not enabled

- fcoe_driver CRITICAL Traceback most recent call File "/opt/xensource/libexec/fcoe_driver", line 34 CalledProcessError: Command '['fcoeadm', '--i] returned non-zero exit status 2\n"]

- It seems that I have no local storage ..

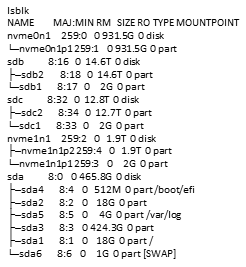

lsblk

    NAME        MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
    nvme0n1     259:0    0 931.5G  0 disk
    └─nvme0n1p1 259:1    0 931.5G  0 part
    sdb           8:16   0  14.6T  0 disk
    ├─sdb2        8:18   0  14.6T  0 part
    └─sdb1        8:17   0     2G  0 part
    sdc           8:32   0  12.8T  0 disk
    ├─sdc2        8:34   0  12.7T  0 part
    └─sdc1        8:33   0     2G  0 part
    nvme1n1     259:2    0   1.9T  0 disk
    ├─nvme1n1p2 259:4    0   1.9T  0 part
    └─nvme1n1p1 259:3    0     2G  0 part
    sda           8:0    0 465.8G  0 disk
    ├─sda4        8:4    0   512M  0 part /boot/efi
    ├─sda2        8:2    0    18G  0 part
    ├─sda5        8:5    0     4G  0 part /var/log
    ├─sda3        8:3    0 424.3G  0 part
    ├─sda1        8:1    0    18G  0 part /
    └─sda6        8:6    0     1G  0 part [SWAP]

- I tried to add local storage ==> LVM creation failed

    [12:16 Tiger ~]# xe sr-create name-label="LocalStore" type=ext device-config-device=/dev/sda3 share=false content-type=user
    Error code: SR_BACKEND_FAILURE_77
    Error parameters: , Logical Volume group creation failed,

- Trying to fix the problems, I decided to update the system (as described in this thread), which did not solve the problems!

So, three questions:

- Is it possible to make an installer available for the current test version, so that I can do a real installation using that installer? That would make testing easier and more realistic.

- How can the described problems be solved?

- I would appreciate formal ZFS support; I use ZFS as far as possible.

-

@gduperrey Updated my two-host playlab without a problem. Installing and/or updating guest tools (now reporting 7.30.0-11) on some mainstream Linux distros worked, as did the usual VM operations in the pool. Looks good.

-

@gduperrey I jumped in all the way by mistake... I updated a wrong host, so I just did them all. Older AMD, Intel E3/E5, NUC11, etc. So far, so good. Add/migrate/backup/etc VMs are working as usual. Good for guest tools too, but mine are mostly Debian 7-11. Stuff is as usual so far.

-

The updates are unrelated to your issue.

You are welcome to start a new thread about it.

That way, rather than commenting in this thread, you will receive greater assistance from the community.

Did you look at the wiki's troubleshooting page to analyze your issue?

https://xcp-ng.org/docs/troubleshooting.html#the-3-step-guide

We don't see anything in your lsblk. Perhaps you could include the 'xe sr-list' result in your new topic.

Have you tried to create your SR using XOA or just the server's command line?
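For readers following the SR questions above, here is a minimal sketch of the commands being discussed. It only prints the command lines rather than running them. The `<host-uuid>` placeholder and the `sr_create_cmd` helper are illustrative and not from this thread. The `device-config:device=` and `shared=` spellings are the ones used in the XCP-ng storage documentation; they differ slightly from the command pasted in the first post, which may simply be a copy/paste artifact.

```shell
#!/usr/bin/env bash
# Sketch only: prints commands instead of running them, so it is safe to try
# anywhere. Review the printed lines before pasting them on the host.

# Build an `xe sr-create` line for a local EXT SR.
# Assumption: host UUID, device and label are supplied by the caller.
sr_create_cmd() {
  local host_uuid="$1" device="$2" label="$3"
  printf 'xe sr-create host-uuid=%s name-label="%s" type=ext content-type=user shared=false device-config:device=%s\n' \
    "$host_uuid" "$label" "$device"
}

# List existing SRs and hosts first (useful output to paste into a new topic).
echo "xe sr-list params=uuid,name-label,type,content-type"
echo "xe host-list params=uuid,name-label"

# /dev/sda3 and "LocalStore" are the values from the first post.
sr_create_cmd "<host-uuid>" /dev/sda3 "LocalStore"
```

Replace `<host-uuid>` with the value reported by `xe host-list` before running the printed `xe sr-create` line.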

We don't offer an updated installer right now, although we're working on a method to regularly and automatically build an updated ISO. It will take some time before we can use it for the community.

As far as we know, ZFS is supported and some users use it. What exactly do you mean by ZFS support?

-

I posted here because I am almost sure that I am facing serious bugs in the current version. Of course I can be wrong, but I do think that the described problems are not caused by things I am doing wrong. So my intention was more to make the team aware of the problems than to ask for help .....

Because I assume bugs, I upgraded immediately after the install, .... however, that did not solve the noted issues ...

I also installed Ubuntu in a VM on my Windows 10 system and compiled XOA. I then tried to create storage from there, which, not surprisingly, led to the same error messages seen before (I tried to define storage types EXT and ZFS).

Regarding ZFS, I wrote that because I read somewhere that it was not yet formally supported.

Note that my server does have disks installed that are not intended for XCP-ng. The disks are ZFS storage pools that are active when I boot the server as a TrueNAS server. That should not be a problem .... IMHO.

Note that I cannot detach them, since this also involves NVMe drives on the motherboard.

Also note that the SSD used for XCP-ng has been used for other purposes before, probably including ZFS partitions. I mention that here because I have seen people reporting issues related to disks previously used for ZFS.

-

@gduperrey

Applied on my test systems and all seems to be working well here.

-

@Louis There's no immediate evidence that the update candidates that this thread is about are related to your issues, so I second @gduperrey's request that you open one or more separate threads for your issues.

Expected feedback in this thread is something like : "I was on a fully updated XCP-ng 8.2.1 pool which worked well and then I installed the update candidates, and now I notice something doesn't work anymore" (regression due to the update candidates) or "I have tested this and that after installing the update candidates, and it looks fine".

-

Updated and tested:

- one single Host

- lab-Pool (3 Hosts) - NFS Storage

- lab-Pool (4 Hosts) - iscsi Storage

Everything seems to work as expected.

(new VM, live-migration, snapshots, clone VMs, import/export VMs)

-

updated and tested:

test pool (2 hosts) iscsi storage

no problems so far.

(live-migration, snapshots, changed master in pool)

guest tools updated on

Debian 10 / 11 / Rocky Linux 8.5

-

Sorry for my impatience.

Is there any news on ETA?

We are actually planning a maintenance downtime for firmware upgrades and some other changes on a bunch of hosts, and it would be cool to do the updates in the same time slot to avoid a double reboot/shutdown within a short time.

Kind Regards

Alex

-

Ping @stormi

-

If no last minute issue is discovered with the updates, they should be released next week.

-

@stormi

Thx for your reply.

I'll try to wait so I can do it all in one task.

Kind Regards

Alex

-

The update is published. Thanks for your tests!

Blog post: https://xcp-ng.org/blog/2022/10/05/october-2022-maintenance-update/

-

I am completely lost in relation to the way new software or updates are released.

- If I download the current file, I get exactly the same file as I have been getting for months

- there is an 8.2.1 which is not visible in the release overview

- I would have expected that an update has the name 8.2.2 ........

So, I am lost .....

-

@Louis said in Updates announcements and testing:

I am completely lost in relation to the way new software or updates are released.

- If I download the current file, I get exactly the same file as I have been getting for months

- there is an 8.2.1 which is not visible in the release overview

- I would have expected that an update has the name 8.2.2 ........

So, I am lost .....

This is not a major release triggering a dot version update. This is a set of patches.

yum update
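As a rough illustration of how a set of patches can still be traced on a host without a version bump, the sketch below lists past yum transactions and the most recently installed packages. The `show_update_trail` helper name is made up for this example; `yum history list` and `rpm -qa --last` are standard yum/rpm commands, and the sketch assumes it is run as root on the XCP-ng host.

```shell
#!/usr/bin/env bash
# Sketch: show what previous update runs changed on a host.
# Assumption: run as root on the XCP-ng host (dom0), where yum and rpm exist.
show_update_trail() {
  if ! command -v yum >/dev/null 2>&1; then
    echo "yum not found; run this on the XCP-ng host" >&2
    return 127
  fi
  # One row per yum transaction (ID, date, action, number of packages).
  yum history list
  # The 20 most recently installed or updated packages, with install dates.
  rpm -qa --last | head -n 20
}
```

Calling `show_update_trail` after `yum update` should show the new transaction at the top of the history, which gives a per-host record of when each set of patches was applied.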

-

IMHO every set of patches should lead to a new version number and a changed initial download,

so if this is only a small update then it could be 8.2.1.1

My opinion of course, but I would like to see a far more traceable update process.

-

Take a look at what other distros are doing: at some point, Debian will add a new "patch version". Initially you had Debian 11.0, but after some time and patches added, there's a bump (now we are at Debian 11.5).

There is no patch version increment for each released update.

-

This is not a new available patch like the ones which become available on any OS every day!

It is a tested set of patches which becomes available as a "package". So I stand by my opinion that it is, let's say, a "dot-release", with a new number, a new download and a version history.

And yep, of course you can update via the regular update method.

-

@Louis said in Updates announcements and testing:

This is not a new available patch like the ones which become available on any OS every day!

Why? It's exactly that. It's up to us to decide if we want to group them more or less (depending on the urgency of the patch) but that's entirely on us.