Nested Virtualization of Windows Hyper-V on XCP-ng

-

Thanks a lot, @XCP-ng-JustGreat!

By working together like that, I'm sure we'll be able to pinpoint the exact issue.

-

@olivierlambert @stormi OK. My draft to the Xen-devel ML follows. Feel free to critique if you think it will strengthen our case. Once finalized, I'll send it to the ML.

SUBJECT: Nested Virtualization of Hyper-V on Xen Not Working

RATIONALE: Features in recent versions of Windows now REQUIRE Hyper-V support to work. These include Windows Containers, Windows Sandbox, Docker Desktop and the Windows Subsystem for Linux version 2 (WSL2). Running Windows in a VM as a development and test platform is currently a common requirement for various user segments and will likely become necessary for production in the future. Nested virtualization of Hyper-V currently works on VMware ESXi, Microsoft Hyper-V and KVM-based hypervisors. This puts Xen and its derivatives at a disadvantage when users choose a hypervisor.

WHAT IS NOT WORKING? Provided the requirements set forth in https://wiki.xenproject.org/wiki/Nested_Virtualization_in_Xen have been met, an HVM guest running Windows 10 Pro version 21H1 x64 reports, via the msinfo32.exe or systeminfo.exe commands, that all four requirements for running Hyper-V are available. A more granular view of the CPU capabilities exposed to the guest can be obtained with the Sysinternals Coreinfo64.exe command. The CPUID flags present appear to mirror those on other working nested hypervisor configurations. Enabling the Windows features for Hyper-V, Virtual Machine Platform, etc. appears to work without error. However, after the finishing reboot, Hyper-V is simply not active, despite the fact that vmcompute.exe (the Hyper-V Host Compute Service) is running and there are no errors in the logs. In addition, all four Hyper-V prerequisites continue to show as available.

By contrast, after the finishing reboot of an analogous Windows VM running on ESXi, the four prerequisites are reversed as viewed with the Coreinfo64.exe -v command: the hypervisor is now active, and VMX, EPT and URG (unrestricted guest) are all off. Furthermore, all functions requiring Hyper-V are now active and working as expected.
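To make the comparison above easier to repeat, the two checks can be scripted against captured output. What follows is a minimal Python sketch, not a tool referenced in this thread. It assumes English-locale `systeminfo.exe` output, where an active hypervisor replaces the four requirement lines with "A hypervisor has been detected", and it assumes Coreinfo `-v` feature lines take the form: flag name, whitespace, then `*` (present) or `-` (absent). The function names are hypothetical, and the code only parses text you have already saved, e.g. with `systeminfo > si.txt` or `Coreinfo64.exe -v > ci.txt`.

```python
import re

# Assumption: English-locale systeminfo.exe output. When Windows runs on top
# of a hypervisor, the four "Hyper-V Requirements" lines are replaced by a
# single "A hypervisor has been detected" message.
SYSTEMINFO_DETECTED = "A hypervisor has been detected"

# Coreinfo64.exe -v flags used in the Xen vs. ESXi comparison.
COREINFO_FLAGS = ("HYPERVISOR", "VMX", "EPT", "URG")


def systeminfo_reports_hypervisor(text: str) -> bool:
    """True if systeminfo says a hypervisor was detected instead of
    listing the four Hyper-V requirements."""
    return SYSTEMINFO_DETECTED in text


def parse_coreinfo_flags(text: str) -> dict:
    """Map each flag of interest to True ('*') or False ('-').

    Assumes feature lines look like 'VMX   *   Supports Intel ...':
    flag name, whitespace, then a '*' (present) or '-' (absent) marker.
    Lines for other flags, or lines in other formats, are ignored.
    """
    flags = {}
    for line in text.splitlines():
        m = re.match(r"\s*([A-Z]+)\s+([*-])(?:\s|$)", line)
        if m and m.group(1) in COREINFO_FLAGS:
            flags[m.group(1)] = m.group(2) == "*"
    return flags


def matches_active_hyperv_pattern(flags: dict) -> bool:
    """True for the post-reboot pattern described on ESXi: the HYPERVISOR
    flag is on while VMX, EPT and URG are all off."""
    hidden = not any(flags.get(f, False) for f in ("VMX", "EPT", "URG"))
    return bool(flags.get("HYPERVISOR", False)) and hidden
```

Going by the behavior described above, the ESXi guest's post-reboot Coreinfo capture would be expected to satisfy `matches_active_hyperv_pattern`, while the Xen guest's, with VMX, EPT and URG still exposed, would not.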

This deficiency has been observed in two test setups, one running Xen 4.15 built from source and the other running XCP-ng 8.2, both on Intel hardware with all of the latest generally available patches. We presume that the same behavior is present on Citrix Hypervisor 8.2 as well.

SUMMATION:

Clearly, much effort has already been expended to support the Viridian enlightenments that optimize running Windows on Xen. It also looks like a significant amount of effort has been put forth to advance nested virtualization in general. Therefore, if it would be helpful, I am willing to perform testing and provide feedback and logs as appropriate in order to get this working. While my day job is managing a heterogeneous collection of systems running on various hypervisors, I have learned the rudiments of integrating patches and rebuilding Xen from source, so I could no doubt be useful in assisting you with this worthwhile endeavor.

-

@xcp-ng-justgreat said in Nested Virtualization of Windows Hyper-V on XCP-ng:

While it is widely understood that nested virtualization is officially unsupported in production scenarios

I'm not sure about this. It is clearly unsupported in Citrix Hypervisor, but I'm not sure such statement is true for the Xen project.

Is the capability to run fully-functional nested Hyper-V on Xen a priority that Xen's developers expect to get working?

I'd change this part, assume there's no need to ask about priorities here, and orient it directly towards troubleshooting. You're talking to developers, mainly:

- you're ready to do any tests and provide any logs to help debugging

- you can rebuild Xen with any additional patches (now that you learned how to do it).

-

@stormi Yes. That is better. I'll update it.

-

@olivierlambert Incorporating suggested changes from @stormi above in bold italics.

-

@stormi @olivierlambert It looks like we now have the attention of Andrew Cooper at Citrix. For anyone interested in following or participating in the nested virtualization thread we started on the Xen developer list, it begins here: https://lists.xenproject.org/archives/html/xen-devel/2021-07/msg01269.html (Just click Thread Next to go through it sequentially.) For the purposes of that list, I have become Xentrigued. Cooper readily admits that nested virtualization in Xen is "a disaster" and has suffered from neglect. With the upcoming launch of Windows 11 and Server 2022, nested virtualization of Hyper-V and, likely, vTPM 2.0 support will become "musts" for hypervisor certification by Microsoft, so there are some strong tailwinds that may help push this forward beyond the XCP-ng community. I will try to be of some use toward that end.

-

So your post was great and its seriousness provided traction to move forward. Congrats!

Andy is one of the best (likely the best) Xen experts I know. He's also a bit "pessimistic" sometimes; one might say realistic

(the word "disaster" is from his point of view; I mean, it's far from great, but it's enough for some basic use cases).

Anyway, with growing urgency to get things sorted for the Windows world, I'm pretty sure more resources will be assigned to fixing the relevant parts of Xen. As he said, an incremental approach is the way forward, and testing patches for the Xen devs will be a tremendous help.

That's a very good example of why cooperation is a powerful way to move forward. Thanks a lot for your efforts, @XCP-ng-JustGreat.

-

Anything new on this issue? @XCP-ng-JustGreat @olivierlambert

-

Everything is on the public mailing list; I suggest you ask there.

-

@alexanderk @olivierlambert Sorry for not responding sooner to your question. It has been a very long, slow slog so far, and I haven't been able to devote as much time to this as I'd like. Here's what I've done so far: based on Andrew Cooper's recommendation, I installed a fully patched Windows Server 2008 R2 VM on Xen. (Hyper-V was initially released with Server 2008, so this is almost as far back as you can go.) Using the current unmodified Xen source code, the VM permits Hyper-V to be enabled in the Windows Server 2008 R2 guest, but, as with newer versions of Windows, once you perform the finishing reboot, Hyper-V is not actually active. Adding the two recommended source-code patches, recompiling and performing the same test causes the VM to hang after Hyper-V is enabled. I know that I need to set up a serial console for the VM in order to view any logging that might provide a clue as to what's failing during boot, but I haven't worked that out just yet.

I've also spent considerable time reading through the Xen Dev email posts on the history of nested virtualization development in Xen. One very significant takeaway from that reading is that nested virtualization on Xen was initially developed by an AMD developer. Development of the NV feature set for Intel came later, after the AMD-focused design die had been cast. As far as I can tell, given that even Server 2008 R2 fails, this never worked on Intel. (Maybe it did on an older Intel processor, but I am currently working with Skylake i7-6700s, so I have no way to test older hardware.) Unfortunately, I also don't have suitable AMD hardware on which to perform the same test to see whether it might work on AMD.

On the Microsoft Hyper-V side, it seems as though the opposite evolution happened. Nested virtualization was developed on Intel first, then (very recently) AMD. This makes me suspect that it doesn't work on AMD either. In other words, I don't know that nested virtualization of Windows on Xen ever worked such that Hyper-V was actually active in the guest. I would be delighted to have somebody prove me wrong.

-

Hello, I decided to share my experience with this as well, since this topic seems to be the closest to my issue.

I'm a developer, and yes, Windows + Docker is my setup. To upgrade my hardware transparently as I need more power, I run it in VMs; that's my use case.

I've recently been moving away from VMware (the free ESXi 6.5 limit of 8 vCPUs is starting to matter), so I'm exploring my options. XCP-ng has my sympathies because it has the best import/export compared to the others, so it was the first hypervisor I tried moving to.

I successfully converted the image and, as expected, without the Nested-V checkbox enabled in Xen Orchestra, the Hyper-V driver in Windows wasn't active.

But when I enable Nested-V, the VM doesn't even boot. It's the kind of boot failure that a different firmware might get past, but UEFI is the only option that works for this image, and there is just a single UEFI implementation to choose from.

So technically, I can't say whether Hyper-V would work if the machine booted. After that I moved to Proxmox, where I had to select a specific firmware (OVMF) to boot my image (so yes, firmware might matter). For Nested-V, all I had to do in Proxmox was set the CPU type to "host" to make it work, which proves it is possible on KVM.

My point is that this topic is still important, and apart from Hyper-V itself, the ability to boot VM images from other hypervisors also matters, so more firmware options or startup settings might be needed.

My sympathies are still with XCP-ng because of its import/export features; they really help (and yes, kudos to the April 2022 XOA release with the additional formats). If you're importing/exporting with Proxmox, be prepared to use SSH/WinSCP all the time and to reserve about 1.5x the thick-provisioned VM disk size on the Proxmox server, or on a local machine where you do the conversion (after first downloading 200 GB+ to it, lol). I'll be back on XCP-ng as soon as this is reported as working.

-

@olivierlambert I think this issue is the single biggest problem with XCP-ng (Xen). The second is general Windows guest support.

While I have installed older versions of Windows on XCP-ng/Xen, the lack of Hyper-V support is an important issue because it's becoming a requirement and it works on other hypervisors. I know newer versions of Xen do a better job without guest tools installed, but guest tools are still important.

This is a major blocker for customers moving from VMware to XCP-ng/Xen. They just can't do it because Hyper-V fails and easy/good guest tools are also a problem.

-

Thanks for your feedback. We'd love to have better support for that, but those two problems are hard. They require a lot of work to debug and understand what's wrong.

We are still a small company despite growing fast, so we do our best with what we have.

-

@clockware You can convert your machine from BIOS booting to UEFI booting simply by converting the disk from MBR to GPT, but you will need a Windows 10 ISO.

-

@AlexanderK The VMware setup used UEFI firmware. When I'm on the go with my laptop, I use the Hyper-V built into Windows 10 Pro, which also uses UEFI (Generation 2). In XCP-ng I tried both the BIOS and UEFI settings. BIOS doesn't boot; I checked that just for the record. UEFI booting works, but UEFI + Nested-V doesn't (Windows 10 goes into its own troubleshooting mode). So it doesn't seem like a partition recognition problem; it feels like a Windows + virtual hardware issue to me (though the UEFI image may also matter; in my experience with different environments, it can).

-

@clockware OK, I misunderstood...

-

Are there any updates on nested virtualization and Hyper-V?

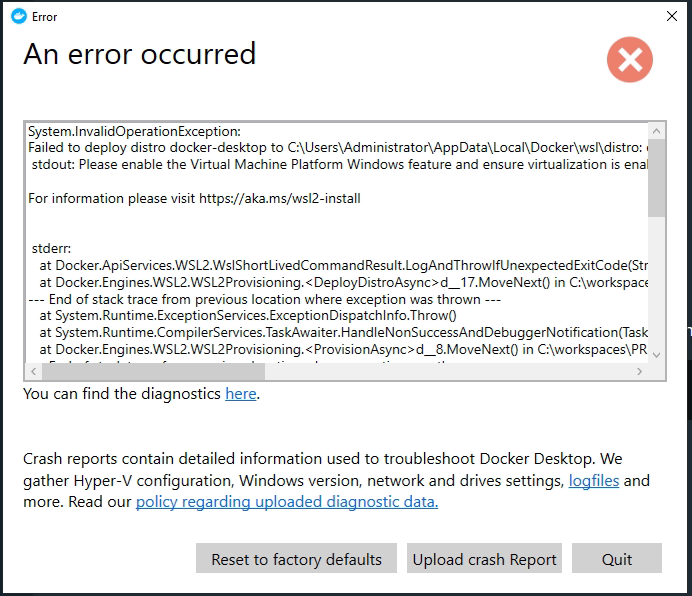

I've tried to run Docker in a Windows 10 VM without success.

-

Sadly, there's not a lot of market traction for it. Contributions are obviously very welcome; otherwise, it won't progress by itself, at least not until we grow enough to tackle more of our backlog at once. (Note: we grew our headcount by almost 30% in 2022 alone, so things are moving fast, but there's still plenty to do and we can't do everything!)

-

@olivierlambert thank you for your comment.

-

I find myself in this really unfortunate situation. We want to use Microsoft Connected Cache, which requires Hyper-V. Microsoft Connected Cache is a way to cache Intune installation packages locally to reduce internet usage.