Backup timeout - hang or didn't work

-

- I have a delta backup job with an 8-hour rotation and a 4-hour timeout.

20 VMs from this job succeeded, but the last one was stuck for 8+ hours, so the next job run failed too.

Usually it takes 2-3 min; a full backup might take an hour.

The next scheduled job succeeded, but took 2-3 hours, which is very long. It's just 3-10 GB for each VM, so it's not a full backup.

It's not a problem if Orchestra can't make a backup for some reason, but why wasn't it interrupted?

Is there anything useful in these logs?

https://pastebin.com/3eNdNPSi

https://pastebin.com/wvZtKrQA

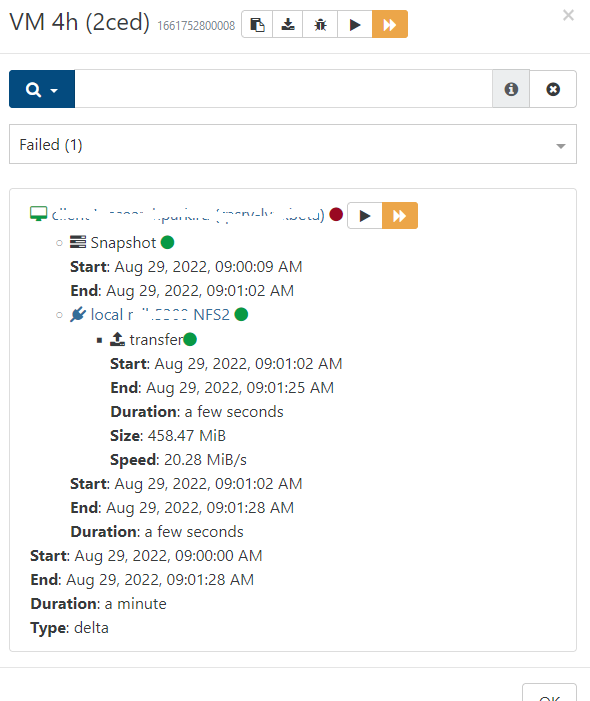

https://pastebin.com/98mgqmHs

- Next question: I see some weird warnings inside a successful backup.

But I can't find them anywhere else (backup health, dashboard health). Are they important, and what do they mean?

-

Hi,

Can you give some details: are you on XOA or XO from the sources?

-

@olivierlambert sure, XO from the sources.

Xen Orchestra, commit 1c955

xo-server 5.100.0

xo-web 5.101.0

-

First, as usual, please follow the documentation when you report a problem: https://xen-orchestra.com/docs/community.html#report-a-bug

Don't miss the big warning in yellow. Update to the latest master commit and try again.

-

@olivierlambert yes, sorry, I forgot again :) Not sure if it's a bug.

And what about question #2?

-

This is precisely fixed by a more recent commit... Please update first and then later see the outcome

Otherwise, this is only wasting time for people giving their help for free.

-

@olivierlambert latest build, same problem.

I started the job manually 2 hours before the scheduled run.

It is still running on the last VM; 19 succeeded. The schedule was canceled.

xo-server 5.100.1

xo-web 5.101.1

Xen Orchestra, commit 2f38e

-

The job has now been running for 4 days.

Every time, it can't write one VM backup to one of the two storages. And every time it's a different VM.

Other jobs work fine with the same storage.

-

The weird problems continue.

It looks like the endless backup is fixed now, but it kept happening for a week after the previous update.

One VM has reported a failure about 15 times in a row already, but I always have a fresh snapshot available.

Same job, same VM every time. The others have no errors.

What am I supposed to do if XO doesn't tell me what the problem is?

I tried disabling "Store backup as multiple data blocks instead of a whole VHD file.": no difference.

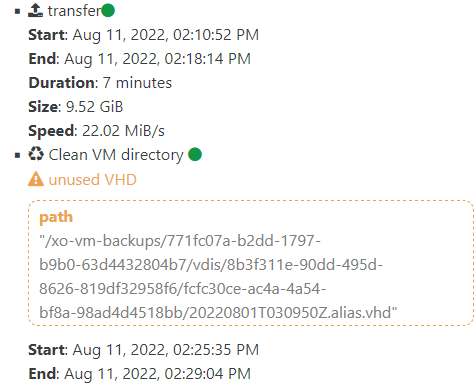

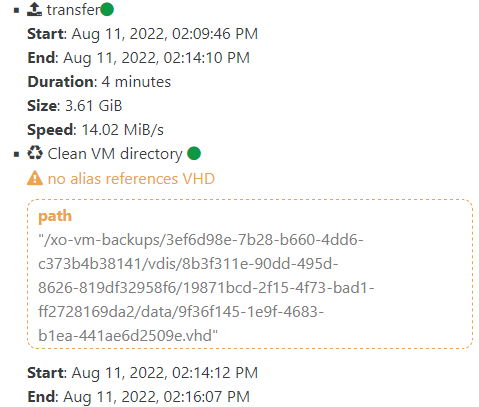

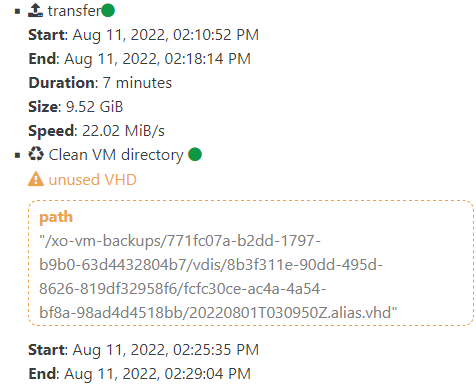

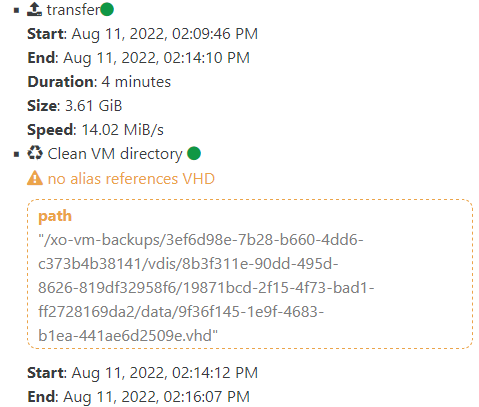

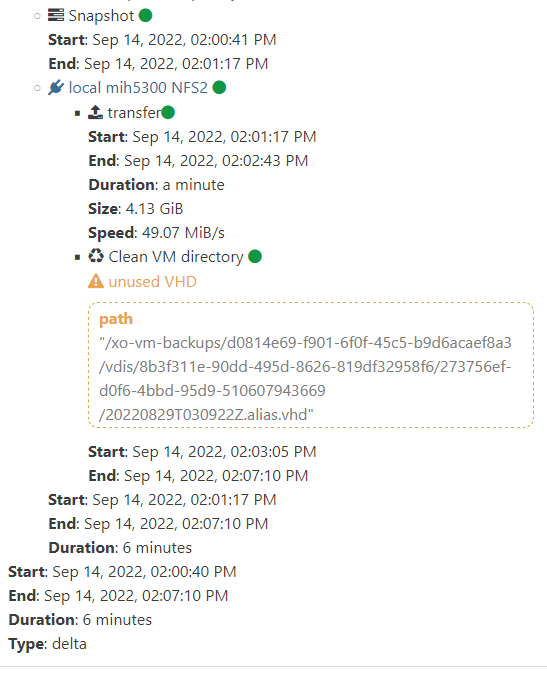

Also, what should I do about the "unused VHD" warnings and the others?

xo-server 5.100.1

xo-web 5.101.1

commit c1846

-

-

@Tristis-Oris hi,

Can you paste the full backup logs (click on the "download logs" button at the top of the popup)?

regards

-

@florent

24-hour delta, usually no problems with it. But 1 VM failed here. Today it is still running as a full backup. https://pastebin.com/99u0m2QT

4-hour delta, the same VM has been failing for a few days already. https://pastebin.com/nS3HV9VE

-

@Tristis-Oris the second pastebin is private, I can't read it.

I will look into it this afternoon.

-

@florent sorry, fixed.

-

@florent any news about this?

-

Different jobs still fail on only 1 VM out of the whole backup. A few times in a row, then it happens with another job or VM.

-

After the last bunch of commits it looks fixed, on 9d09a now. Thanks.

Only 1 VM is stuck in this state. I already tried removing the backup chain to force a full one, and moving the VDI to maybe fix some merging problem. But that didn't help.

Also, these warnings are still here. What should I do, and how do I find them?

"result": {
  "errno": -2,
  "code": "ENOENT",
  "syscall": "open",
  "path": "/mnt/mih-5300-2/xo-vm-backups/5fc68102-9705-4918-317d-dff5e4e58237/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/d8b005d2-ca6f-4ae5-a3b3-0cb4ef8a3016/data/1f95640b-b699-4dfd-98ea-9398ccc2b685.vhd/blocks/20/0",
  "syncStack": "Error\n at LocalHandler.addSyncStackTrace [as _addSyncStackTrace] (/opt/xo/xo-builds/xen-orchestra-202209121459/@xen-orchestra/fs/dist/local.js:28:26)\n at LocalHandler._readFile (/opt/xo/xo-builds/xen-orchestra-202209121459/@xen-orchestra/fs/dist/local.js:184:23)\n at LocalHandler.readFile (/opt/xo/xo-builds/xen-orchestra-202209121459/@xen-orchestra/fs/dist/abstract.js:326:29)\n at LocalHandler.readFile (/opt/xo/xo-builds/xen-orchestra-202209121459/node_modules/limit-concurrency-decorator/dist/index.js:107:24)\n at VhdDirectory._readChunk (/opt/xo/xo-builds/xen-orchestra-202209121459/packages/vhd-lib/Vhd/VhdDirectory.js:153:40)\n at VhdDirectory.readBlock (/opt/xo/xo-builds/xen-orchestra-202209121459/packages/vhd-lib/Vhd/VhdDirectory.js:211:35)\n at VhdDirectory.mergeBlock (/opt/xo/xo-builds/xen-orchestra-202209121459/packages/vhd-lib/Vhd/VhdDirectory.js:277:37)\n at async asyncEach.concurrency.concurrency (/opt/xo/xo-builds/xen-orchestra-202209121459/packages/vhd-lib/merge.js:181:38)",
  "chain": [
    "xo-vm-backups/5fc68102-9705-4918-317d-dff5e4e58237/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/d8b005d2-ca6f-4ae5-a3b3-0cb4ef8a3016/20220801T221142Z.alias.vhd",
    "xo-vm-backups/5fc68102-9705-4918-317d-dff5e4e58237/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/d8b005d2-ca6f-4ae5-a3b3-0cb4ef8a3016/20220802T221205Z.alias.vhd"
  ],
  "message": "ENOENT: no such file or directory, open '/mnt/mih-5300-2/xo-vm-backups/5fc68102-9705-4918-317d-dff5e4e58237/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/d8b005d2-ca6f-4ae5-a3b3-0cb4ef8a3016/data/1f95640b-b699-4dfd-98ea-9398ccc2b685.vhd/blocks/20/0'",
  "name": "Error",
  "stack": "Error: ENOENT: no such file or directory, open '/mnt/mih-5300-2/xo-vm-backups/5fc68102-9705-4918-317d-dff5e4e58237/vdis/90d0b5ca-9364-4011-adc4-b8c74a534da9/d8b005d2-ca6f-4ae5-a3b3-0cb4ef8a3016/data/1f95640b-b699-4dfd-98ea-9398ccc2b685.vhd/blocks/20/0'"
}

-

@Tristis-Oris The unused VHD warnings are normal after a failed snapshot: they appear when a previous backup failed and we are removing the "trash" files.
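The ENOENT in the log above points at a single block file inside a VHD directory on the remote. As a rough way to confirm whether that file is really missing, here is a minimal Python sketch. It is not part of XO: the function name `missing_paths` is hypothetical, and it assumes the script runs on the machine where the remote is mounted at the same path that appears in the log, and that the error object has been saved as valid JSON.

```python
import json
import os
import re


def missing_paths(result_json: str) -> list[str]:
    """Return absolute paths named in a backup error object that do not exist locally.

    Assumption: `result_json` is the error object from the backup log, as a JSON
    string with optional "path" and "message" keys (as in the log quoted above).
    """
    result = json.loads(result_json)
    candidates = set()

    # Path reported by the failing `open` syscall, if present.
    if isinstance(result.get("path"), str):
        candidates.add(result["path"])

    # Any single-quoted absolute path embedded in the error message.
    candidates.update(re.findall(r"'(/[^']+)'", result.get("message", "")))

    # Keep only the paths that are absent on this machine.
    return sorted(p for p in candidates if not os.path.exists(p))
```

Calling `missing_paths(open("error.json").read())` (with `error.json` being a hypothetical file holding the error object) lists the paths that are absent. An empty list would suggest the file exists now and the error was transient or raised from a different mount.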

For the other error, did you try to launch the job manually to see if you get the same error?

-

@Darkbeldin got it, so it's not a problem and there's no need to do anything.

I just started it manually, still an error. But the snapshot is always created; I don't understand why it says it failed.

-

@Tristis-Oris @florent will have to take a look at it because I'm not sure.
