VMware migration tool: we need your feedback!

-

@Touille As @florent said in a previous message, the full SR object is probably being sent to the backend instead of its id. This happens when you change the destination SR from the default one to another. You can try to proceed with the export on the default SR.
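To illustrate the kind of mismatch described above, here is a minimal Python sketch of normalizing an SR parameter that may arrive either as a bare id or as a full object. The helper name `normalize_sr` and the `id` key are assumptions made for illustration; this is not the code from the Xen Orchestra fix.

```python
def normalize_sr(sr):
    """Return the SR id whether `sr` is a bare id string or a full object.

    Hypothetical helper: assumes a full SR object is a dict with an "id" key.
    """
    if isinstance(sr, str):
        return sr
    if isinstance(sr, dict) and "id" in sr:
        return sr["id"]
    raise TypeError(f"unsupported SR value: {sr!r}")


# The UUID is the "sr" value from import parameters quoted in this thread;
# the "name_label" field is made up for the example.
sr_uuid = "eb23cb22-5b8c-9641-b801-c86397e05520"
print(normalize_sr(sr_uuid))
print(normalize_sr({"id": sr_uuid, "name_label": "example"}))
```

Both calls print the same UUID, which is the point: whatever the UI sends, the backend would end up with an id.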

Take a look at this branch: https://github.com/vatesfr/xen-orchestra/pull/6696/files

It seems to fix the error.

-

It's even merged in master (a few seconds ago).

-

Hi @ismo-conguairta.

As you and Florent said, before the update, importing to an SR other than the default one wouldn't work. Since the update an hour ago, it's fixed.

I've just imported a VM from VMware to any SR I want. Thank you!!

-

It may have already been mentioned in here more recently, but I still think some kind of system to select specific VHDs from the ESXi box would be super nice.

Sometimes one may only need to move the data of specific VHDs (excluding say logging or other temporary data style VHDs) and this would greatly speed up migrations.

It would also help in the event someone has VHDs that are larger than 2TiB, since you'd be able to only move the smaller ones and find an alternate solution for the larger than 2TiB VHDs.

Just a thought though; this tool is still amazing and has helped me start migrations!

-

@planedrop the issue is that we are about to add multi-VM migration, so it will be a bit harder to make individual choices per disk. However, we could always create a convention based on a tag/name of the disk to ignore it, what do you think?

-

@olivierlambert I think a tag or name based exclusion would be a great idea! Helps in the more niche use cases where not all drives have to be moved.

As an example use case, I have a VM I am going to migrate sometime in the next few weeks, but I only need its C drive (the other drives are for logging, etc., and are more than 2TiB anyway). Right now I plan to export the VMDK and try importing it, but I've had mixed results with that working correctly in the past, whereas this new V2V migration tool has been super reliable so far, so I'd have more confidence in it working (not to mention it's less work haha).

-

In VMware, can you easily tag a VM disk?

-

@olivierlambert I don't think there are tags for them, per se, but you can rename a VMDK file to whatever you want within vCenter. Maybe just looking at the names of the VMDK files and allowing someone to enter the ones to exclude would work?

-

Sure, I just need to know what's the easiest way to do this in VMware, since I don't know it at all

-

@olivierlambert Totally understand.

I think going based on the name of the disk would be the best option.

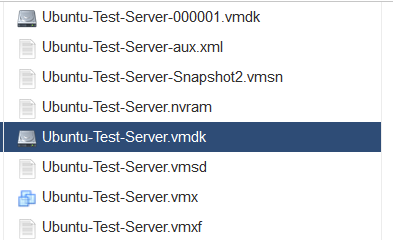

For example, on a test VM I have, the disks all show up like this:

And I can rename them if need be.

Does the current V2V tool just look for .vmdk files and then migrate those?

I guess what I'm thinking is like something that says "exclude the below disks" and then has a list system where you can enter the disk name as it shows up here in ESXi so that it ignores those disks?

I'm not sure how complex this would be though so just throwing out suggestions haha!

-

I would prefer something that's not configured in XO but is a convention on the VMware side, so it can scale when a single import covers tons of VMs.

-

@olivierlambert I see, this makes sense.

Maybe a naming convention can be used and then users can rename their VMDK files to something specific that is ignored?

Like, say you have Ubuntu1.vmdk, Ubuntu2.vmdk and Ubuntu3.vmdk, and someone only wants Ubuntu1.vmdk: they could rename Ubuntu2.vmdk and Ubuntu3.vmdk to ignore1.vmdk and ignore2.vmdk?
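A rough Python sketch of how such a naming convention could be applied when listing disks. The `ignore` prefix and the function name `disks_to_migrate` are only the convention suggested above, not something the XO tool implements.

```python
from pathlib import PurePosixPath

IGNORE_PREFIX = "ignore"  # assumed convention, not an existing XO setting


def disks_to_migrate(vmdk_paths, ignore_prefix=IGNORE_PREFIX):
    """Keep only the VMDKs whose file name does not start with the prefix."""
    kept = []
    for path in vmdk_paths:
        # Compare on the file name only, case-insensitively.
        name = PurePosixPath(path).name.lower()
        if not name.startswith(ignore_prefix.lower()):
            kept.append(path)
    return kept


disks = ["Ubuntu1.vmdk", "ignore1.vmdk", "ignore2.vmdk"]
print(disks_to_migrate(disks))  # ['Ubuntu1.vmdk']
```

Because the rule lives in the file names on the VMware side, the same filter would apply unchanged to every VM in a multi-VM import.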

-

Frankly IDK, and I'd like more input from VMware users to make a decision based on multiple opinions

-

@olivierlambert I agree, would be good to hear from more people. We only run a handful of VMs on VMware so I may not be the best person to give detailed feedback.

-

@brezlord said in VMware migration tool: we need your feedback!:

TypeError

I got something similar on the latest build of XO.

System: ESXi 6.7.0 Update 3 (Build 15160138)

vm.importFromEsxi { "host": "10.20.0.105", "network": "1816a2e5-562a-4559-531d-6b59f8ec1a5c", "password": "* obfuscated *", "sr": "eb23cb22-5b8c-9641-b801-c86397e05520", "sslVerify": false, "stopSource": true, "thin": false, "user": "root", "vm": "9" } { "message": "Cannot read properties of undefined (reading 'stream')", "name": "TypeError", "stack": "TypeError: Cannot read properties of undefined (reading 'stream') at file:///home/node/xen-orchestra/packages/xo-server/src/xo-mixins/migrate-vm.mjs:272:30 at Task.runInside (/home/node/xen-orchestra/@vates/task/index.js:158:22) at Task.run (/home/node/xen-orchestra/@vates/task/index.js:137:20)" }

-

@chrispro-21 for now, we're not able to import VMs with snapshots from ESXi 6.5+.

If we have not finished implementing sesparse reading (the disk snapshot format for ESXi 6.5+) by then, the next release will at least be able to ignore them or give a meaningful error.
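As a pre-flight check before starting an import, something like the following Python sketch could flag snapshot deltas in advance. It assumes sesparse deltas can be recognized by a `-sesparse.vmdk` file name suffix, which is inferred from the file name in an error reported in this thread and not from how the XO tool actually detects the format; the first path in the example list is made up.

```python
def sesparse_disks(vmdk_paths):
    """Return paths that look like sesparse snapshot deltas, judged by name only."""
    return [p for p in vmdk_paths if p.lower().endswith("-sesparse.vmdk")]


files = [
    "gbr-stf-blue-dc/gbr-stf-blue-dc.vmdk",  # hypothetical base disk
    "gbr-stf-blue-dc/gbr-stf-blue-dc-000001-sesparse.vmdk",  # name from the thread
]
blocked = sesparse_disks(files)
if blocked:
    print("Snapshot deltas found, import may fail:", blocked)
```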

-

Hi @florent, I'm getting the same on ESXi 6.7. I tried migrating the disk storage within VMware first to see if that would update the format. Looking forward to testing this new feature as soon as the disk format is sorted; in the meantime I'll look for a workaround.

vm.importFromEsxi { "host": "172.29.49.11", "network": "b4d861c9-a735-b21c-6f58-655911cf8fb2", "password": "* obfuscated *", "sr": "1216d3f2-a60d-e68b-ea93-0b1b594a53cb", "sslVerify": true, "stopSource": false, "thin": false, "user": "administrator@blue.local", "vm": "vm-4292" } { "message": "sesparse Vmdk reading is not functionnal yet gbr-stf-blue-dc/gbr-stf-blue-dc-000001-sesparse.vmdk", "name": "Error", "stack": "Error: sesparse Vmdk reading is not functionnal yet gbr-stf-blue-dc/gbr-stf-blue-dc-000001-sesparse.vmdk at openDeltaVmdkasVhd (file:///opt/xo/xo-builds/xen-orchestra-202303171405/@xen-orchestra/vmware-explorer/openDeltaVmdkAsVhd.mjs:7:11) at file:///opt/xo/xo-builds/xen-orchestra-202303171405/packages/xo-server/src/xo-mixins/migrate-vm.mjs:267:27 at Task.runInside (/opt/xo/xo-builds/xen-orchestra-202303171405/@vates/task/index.js:158:22) at Task.run (/opt/xo/xo-builds/xen-orchestra-202303171405/@vates/task/index.js:137:20)" }

-

@alexredston you can work around it by stopping the VM first; the import will then work.

-

@olivierlambert Thanks - already underway.

3 hours elapsed, 3 hours to go. It's an 80GB thick-provisioned primary domain controller; unfortunately it was on a slow 1G network card, averaging about 42 MB per second during the transfer. I'll try the next one on 10G and see what the speed is like.

Going from RAID 1 on the old server to RAID 10 ADM (triple mirrored and striped).

-

Select thin in the XO V2V tool; that might be faster in the end. Patience is key.