Epyc VM to VM networking slow

-

@olivierlambert said in Epyc VM to VM networking slow:

If I wouldn't be convinced to fix it, I wouldn't throw money & time to solve the problem

I think everyone knows this. Nevertheless, it is frustrating for anyone when it becomes a bottleneck.

I am curious, do we know if this happens on Xen systems, or if it happens on xcp-ng systems where Open vSwitch is not used?

-

It happens on all Xen versions we tested. The issue is clearly inside the Xen hypervisor, and related to how the netif calls trigger something slow inside AMD EPYC CPUs (Ryzen ones are not even affected).

-

@manilx do you use NBD for delta backups? It's in the advanced settings.

-

@florent Florent, yes I do use NBD for all backups. And checking the backup log of the completed jobs I see that NBD is being used.

-

@manilx said in Epyc VM to VM networking slow:

@florent Florent, yes I do use NBD for all backups. And checking the backup log of the completed jobs I see that NBD is being used.

Could you test with NBD disabled?

-

@manilx Running a test backup, one with NBD and then again without. Will report asap.

-

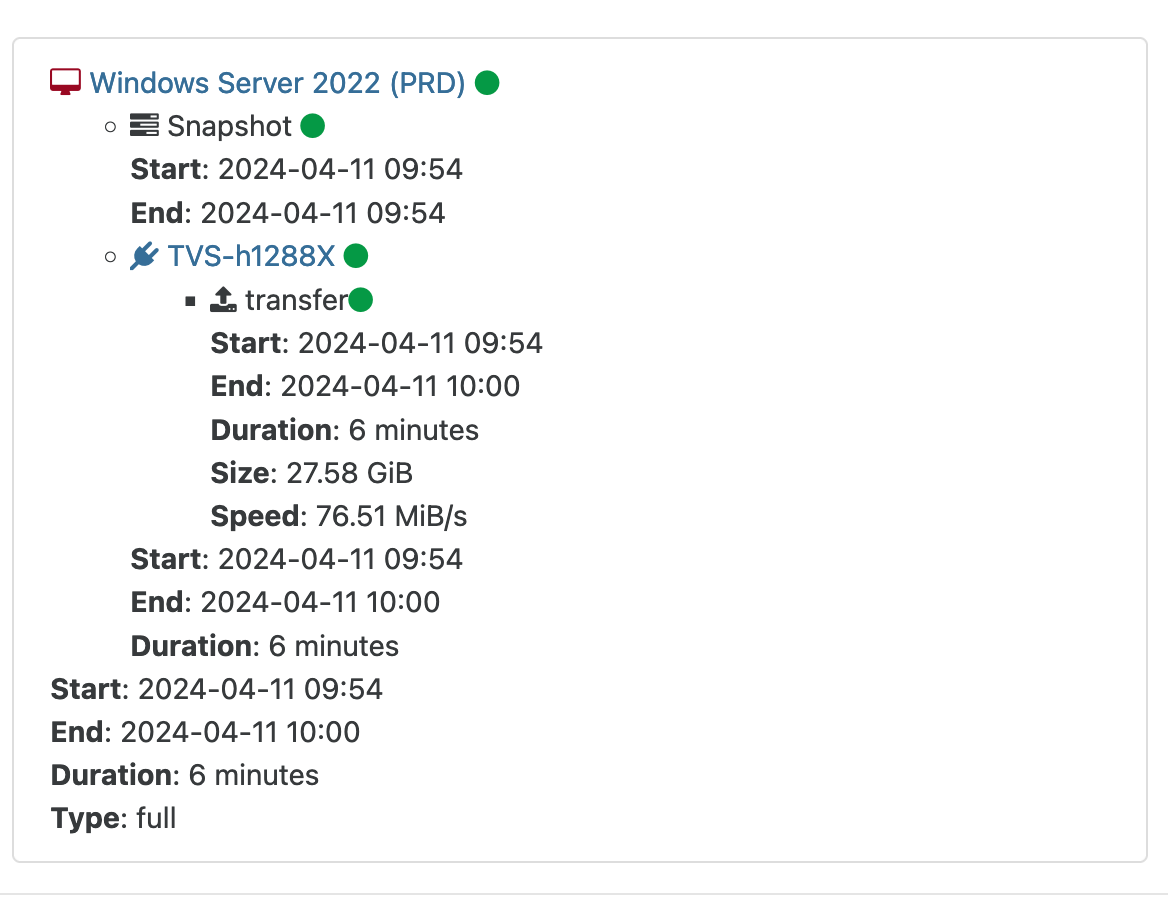

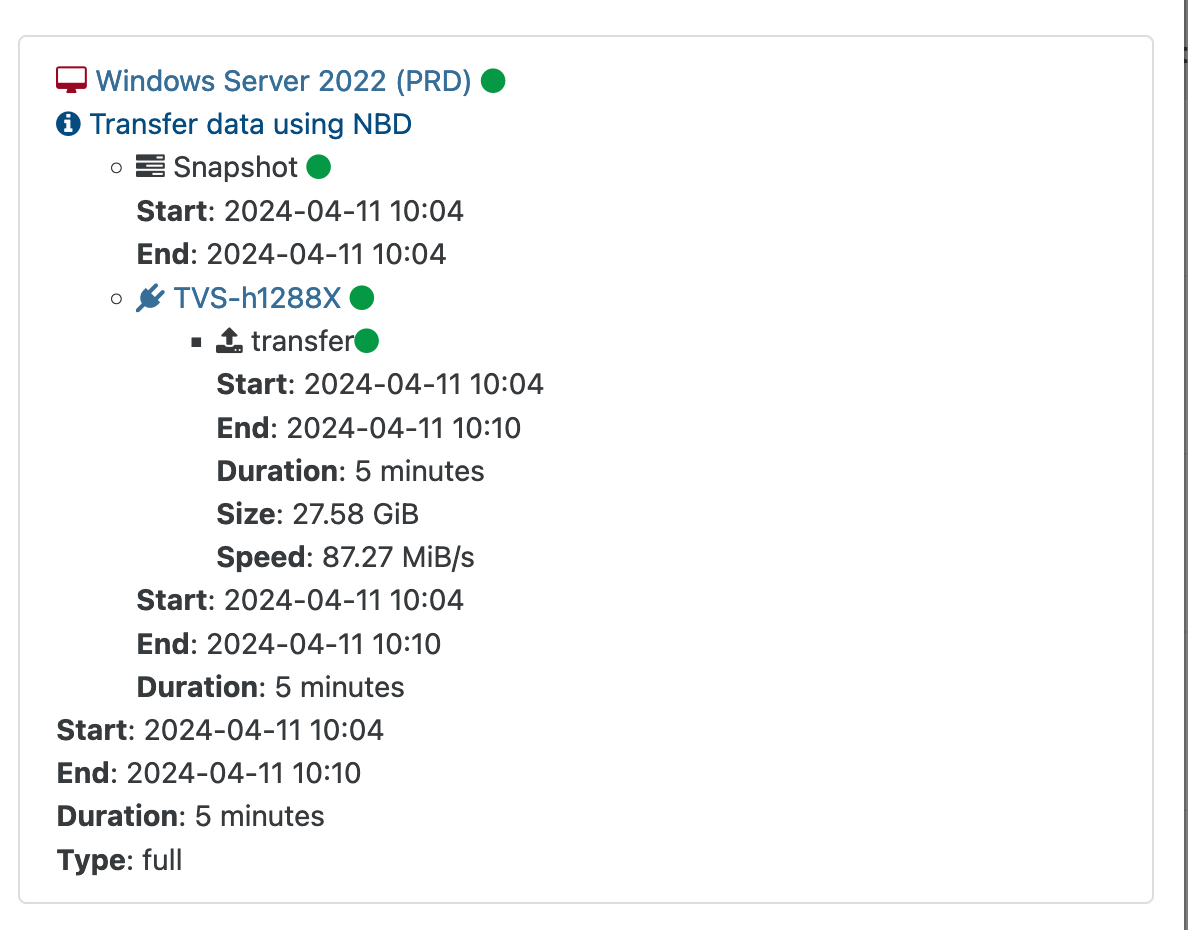

@florent Result of backup tests:

-

It may not be of any help, but I wanted to add a little bit of info to this anyway.

I'm seeing the same results on a set of Ubuntu 22.04 VMs in my Threadripper-based cluster. I didn't expect to see different results, since Threadripper is really just EPYC with some stuff turned off.

Specifically, I tested on a 16-core 1950X host and a 32-core 3970X host, both with 8 vCPUs on each VM. They topped out at 8 gigabit, like most others are seeing.

Figured I'd add it in here.

-

Thanks! We didn't test on Threadrippers yet, so it's useful info.

-

Is there any hope for a solution to this issue? As far as I understand, this affects all generations?

-

We're still actively working on it, we're still not 100% sure what the root cause is unfortunately.

It does seem to affect all Zen generations, from what we could gather, slightly differently: it seems to be a bit better on Zen 3 and 4, but it still always leads to underwhelming network performance for such machines.

To provide some status/context to you guys: I worked on this internally for a while, then as I had to attend to other tasks we hired external help, which gave us some insight but no solution, and now we have @andSmv working on it (but not this week as he's at the Xen Summit).

From the contractors we had, we found that grant table and event channel operations occur more often than on an Intel Xeon. At first this looked like more packets were being processed, but then they take way more time.

What Andrei found most recently is that PV & PVH (which we do not support officially) are getting about twice the performance of HVM and PVHVM. Also, having both dom0 and a guest pinned to a single physical core gives better results. This seems to indicate it may come from the handling of cache coherency, and could be related to guest memory settings that differ between Intel and AMD. That's what is under investigation right now, but we're unsure there will be any possibility to change that.

I hope this helps make things a bit clearer to you guys, and shows we do invest a lot of time and money digging into this.
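For anyone who wants to try the pinning experiment on their own host, it helps to know which logical CPUs are hyperthread siblings of the same physical core. Below is a minimal Python sketch, not an official tool: it assumes you have copied the `cpu: core socket node` rows from Xen's `cpu_topology` table into a string, and the sample rows and the `siblings_by_core` helper are purely illustrative.

```python
from collections import defaultdict

# Illustrative rows in the "cpu: core socket node" layout of Xen's
# cpu_topology table; replace with the rows from your own host.
SAMPLE_TOPOLOGY = """\
cpu:    core    socket     node
  0:       0        0        0
  1:       0        0        0
  2:       1        0        0
  3:       1        0        0
"""


def siblings_by_core(topology_text):
    """Group logical CPU ids by (socket, core). Hypothetical helper."""
    groups = defaultdict(list)
    for line in topology_text.splitlines():
        head, _, rest = line.partition(":")
        fields = rest.split()
        # Skip the header row and anything that is not "<cpu>: <core> <socket> <node>".
        if not head.strip().isdigit() or len(fields) < 3:
            continue
        core, socket = int(fields[0]), int(fields[1])
        groups[(socket, core)].append(int(head))
    return dict(groups)


if __name__ == "__main__":
    for (socket, core), cpus in sorted(siblings_by_core(SAMPLE_TOPOLOGY).items()):
        print(f"socket {socket} core {core}: logical CPUs {cpus}")
```

Logical CPUs that end up in the same group share one physical core, so they are the candidates when pinning dom0 and guest vCPUs together.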

-

@bleader said in Epyc VM to VM networking slow:

We're still actively working on it, we're still not 100% sure what the root cause is unfortunately.

I can also vouch that they are taking it seriously and working on it. I have had a ticket with them essentially since the start of this, and work is definitely being done in said ticket to solve this once and for all.

-

Do I understand correctly that the problem also occurs for traffic between a server and shared NFS storage where the VHDs are located, and not just between the VMs themselves? That is, will the traffic between a virtual machine and its disks on shared storage also be slowed down?

-

@alex821982 no, we have seen no such indication... That said, we have not tested for that either, and it is possible that the main issue at hand is that all EPYC processors hit an upper limit on network traffic going in and out of a VM. It is a per-physical-host limit, so if you have both VMs on the same machine the limit is halved.

-

Posting my results:

XCP-ng stable 8.2, up to date.

Epyc 7402 (1 socket)

512GB RAM 3200MHz

Supermicro H12SSL-i

No CPU pinning

VMs: Ubuntu 22.04, kernel 6.5.0-41-generic

v2m 1 thread: 3.5Gb/s - Dom0 140%, vm1 60%, vm2 55%

v2m 4 threads: 9.22Gb/s - Dom0 555%, vm1 320%, vm2 380%

h2m 1 thread: 10.4Gb/s - Dom0 183%, vm1 180%, vm2 0%

h2m 4 threads: 18.0Gb/s - Dom0 510%, vm1 490%, vm2 0%

host                   : xcp-ng-7402
release                : 4.19.0+1
version                : #1 SMP Tue Jan 23 14:12:55 CET 2024
machine                : x86_64
nr_cpus                : 48
max_cpu_id             : 47
nr_nodes               : 1
cores_per_socket       : 24
threads_per_core       : 2
cpu_mhz                : 2800.047
hw_caps                : 178bf3ff:7ed8320b:2e500800:244037ff:0000000f:219c91a9:00400004:00000500
virt_caps              : pv hvm hvm_directio pv_directio hap shadow
total_memory           : 524149
free_memory            : 39528
sharing_freed_memory   : 0
sharing_used_memory    : 0
outstanding_claims     : 0
free_cpus              : 0
cpu_topology           :
cpu:    core    socket     node
  0:       0        0        0
  1:       0        0        0
  2:       1        0        0
  3:       1        0        0
  4:       2        0        0
  5:       2        0        0
  6:       4        0        0
  7:       4        0        0
  8:       5        0        0
  9:       5        0        0
 10:       6        0        0
 11:       6        0        0
 12:       8        0        0
 13:       8        0        0
 14:       9        0        0
 15:       9        0        0
 16:      10        0        0
 17:      10        0        0
 18:      12        0        0
 19:      12        0        0
 20:      13        0        0
 21:      13        0        0
 22:      14        0        0
 23:      14        0        0
 24:      16        0        0
 25:      16        0        0
 26:      17        0        0
 27:      17        0        0
 28:      18        0        0
 29:      18        0        0
 30:      20        0        0
 31:      20        0        0
 32:      21        0        0
 33:      21        0        0
 34:      22        0        0
 35:      22        0        0
 36:      24        0        0
 37:      24        0        0
 38:      25        0        0
 39:      25        0        0
 40:      26        0        0
 41:      26        0        0
 42:      28        0        0
 43:      28        0        0
 44:      29        0        0
 45:      29        0        0
 46:      30        0        0
 47:      30        0        0
device topology        :
device           node
No device topology data available
numa_info              :
node:    memsize    memfree    distances
   0:    525554      39528      10
xen_major              : 4
xen_minor              : 13
xen_extra              : .5-9.40
xen_version            : 4.13.5-9.40
xen_caps               : xen-3.0-x86_64 hvm-3.0-x86_32 hvm-3.0-x86_32p hvm-3.0-x86_64
xen_scheduler          : credit
xen_pagesize           : 4096
platform_params        : virt_start=0xffff800000000000
xen_changeset          : 708e83f0e7d1, pq 9a787e7255bc
xen_commandline        : dom0_mem=8192M,max:8192M watchdog ucode=scan dom0_max_vcpus=1-16 crashkernel=256M,below=4G console=vga vga=mode-0x0311
cc_compiler            : gcc (GCC) 4.8.5 20150623 (Red Hat 4.8.5-28)
cc_compile_by          : mockbuild
cc_compile_domain      : [unknown]
cc_compile_date        : Thu Apr 11 18:03:32 CEST 2024
build_id               : fae5f46d8ff74a86c439a8b222c4c8d50d11eb0a
xend_config_format     : 4

-

What do you mean by v2m and h2m?

-

Hi @olivierlambert! It's the nomenclature @bleader used in the report table, sorry for the misunderstanding:

https://xcp-ng.org/forum/post/67750

v2m 1 thread: throughput / cpu usage from xentop³

v2m 4 threads: throughput / cpu usage from xentop³

h2m 1 thread: throughput / cpu usage from xentop³

h2m 4 threads: throughput / cpu usage from xentop³

It's VM to VM and host (dom0) to VM.

Btw, I'm super happy to do any more tests that could help, with different kernels, OSes, XCP-ng versions... whatever you need.

PS: VM to host resulted in an unreachable host, even though I could ping from VM to host just fine. I checked, and iptables blocks the iperf port but allows ping; I didn't want to mess with dom0.
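In case it helps compare runs across hosts, here is a rough Python sketch that normalizes the v2m/h2m figures posted above by the CPU they burned. It assumes the xentop percentages can simply be summed across domains, with 100% meaning one fully busy vCPU; the `gbps_per_busy_core` helper is just an illustrative name, not part of any tool.

```python
# (label, throughput in Gb/s, {domain: CPU % from xentop}), as posted in this thread.
RESULTS = [
    ("v2m 1 thread", 3.5, {"Dom0": 140, "vm1": 60, "vm2": 55}),
    ("v2m 4 threads", 9.22, {"Dom0": 555, "vm1": 320, "vm2": 380}),
    ("h2m 1 thread", 10.4, {"Dom0": 183, "vm1": 180, "vm2": 0}),
    ("h2m 4 threads", 18.0, {"Dom0": 510, "vm1": 490, "vm2": 0}),
]


def gbps_per_busy_core(throughput_gbps, cpu_percent):
    """Throughput divided by the total number of fully busy vCPUs.

    Assumption: summing xentop CPU % over all domains and dividing by 100
    gives the number of vCPUs' worth of CPU time spent on the transfer.
    """
    busy_cores = sum(cpu_percent.values()) / 100.0
    return throughput_gbps / busy_cores


if __name__ == "__main__":
    for label, gbps, cpu in RESULTS:
        print(f"{label}: {gbps_per_busy_core(gbps, cpu):.2f} Gb/s per busy vCPU")
```

This is only a crude efficiency metric, but it makes it easier to see how much more CPU the VM-to-VM path costs per gigabit than host-to-VM.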

-

Just for completeness: I have the same issue with older AMD Opteron 6380s. The same hardware had full speed on the ESXi hypervisor. I also get full speed using Harvester / Rancher. I've been using XCP-ng for more than two years and have had that issue since day one.

-----------------------------------------------------------
Server listening on 5201 (test #1 - dom0 to workstation)
-----------------------------------------------------------
[ ID] Interval Transfer Bitrate
[ 5] 0.00-10.00 sec 10.8 GBytes 9.29 Gbits/sec receiver
-----------------------------------------------------------
Server listening on 5201 (test #2 - vm to workstation)
-----------------------------------------------------------
[ ID] Interval Transfer Bitrate
[ 5] 0.00-10.00 sec 3.18 GBytes 2.73 Gbits/sec receiver
-----------------------------------------------------------
Server listening on 5201 (test #3 - dom0 to vm)
-----------------------------------------------------------
[ ID] Interval Transfer Bandwidth
[ 4] 0.00-10.00 sec 2.23 GBytes 1.91 Gbits/sec receiver
-----------------------------------------------------------
Server listening on 5201 (test #4 - vm to vm)
-----------------------------------------------------------
[ ID] Interval Transfer Bitrate
[ 5] 0.00-10.00 sec 1.97 GBytes 1.69 Gbits/sec receiver
-----------------------------------------------------------
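As a sanity check on the numbers above: the Transfer and Bitrate columns do not match if you just multiply by 8, which can be confusing when comparing posts. A minimal Python sketch of the conversion follows, under the assumption that iperf3 reports Transfer in binary units (1 GByte = 1024³ bytes) and the rate in decimal Gbits/sec; the `gbits_per_sec` helper name is illustrative.

```python
GIB = 1024 ** 3  # assumed size of one iperf3 "GByte" in bytes


def gbits_per_sec(gbytes_transferred, seconds):
    """Convert a binary-GByte transfer over a time span to decimal Gbits/sec."""
    return gbytes_transferred * GIB * 8 / seconds / 1e9


# (test, Transfer in GBytes, reported rate in Gbits/sec) from the four runs above.
RUNS = [
    ("dom0 to workstation", 10.8, 9.29),
    ("vm to workstation", 3.18, 2.73),
    ("dom0 to vm", 2.23, 1.91),
    ("vm to vm", 1.97, 1.69),
]

if __name__ == "__main__":
    for name, gbytes, reported in RUNS:
        computed = gbits_per_sec(gbytes, 10.0)
        print(f"{name}: computed {computed:.2f} Gbits/sec, reported {reported}")
```

The small differences that remain come from the Transfer column being rounded to three significant digits.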