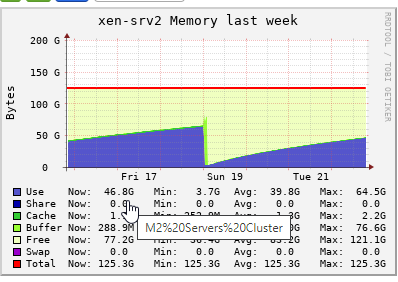

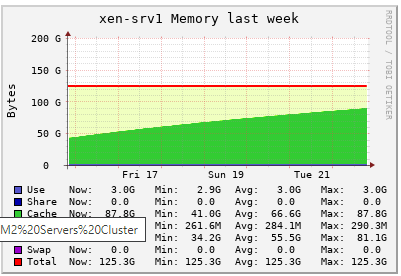

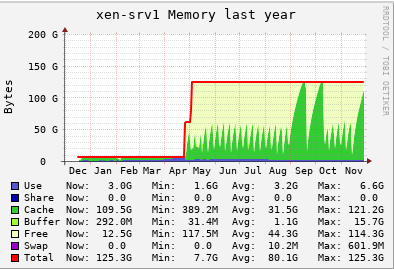

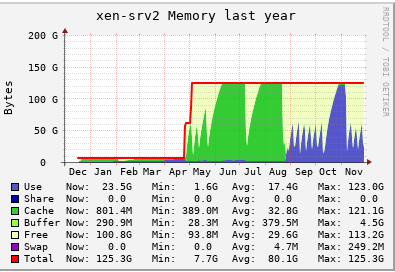

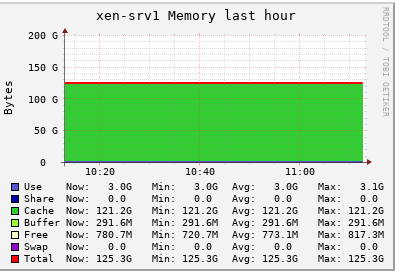

Memory Consumption goes higher day by day

-

-

@dhiraj26683 Could you detail these alerts and what they are based on? A Linux system with most of its memory allocated to cache is exactly what is expected after a few hours, days or weeks of use. On my computer, right now, I only use 6 GB out of 16 GB, but 9 GB are used by cache and buffers, and only 1 GB is free. However, I don't have any performance issues because, should I start a demanding process, the cache will be reclaimed. Cache is just free memory that happens to contain data that might be useful again before it is thrown away in favour of higher-priority uses of memory.
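To make the point about reclaimable cache concrete, here is a minimal sketch that compares "free" memory with the kernel's own estimate of what is available to new processes. `mem_summary` is a hypothetical helper name, not an existing tool; it only assumes the standard `MemTotal`, `MemFree` and `MemAvailable` fields of `/proc/meminfo`.

```shell
#!/bin/sh
# mem_summary is a hypothetical helper; it reads a meminfo-style file
# (default: /proc/meminfo) and prints a few values in MiB.
mem_summary() {
    awk '
        $1 == "MemTotal:"     { total = $2 }
        $1 == "MemFree:"      { free = $2 }
        $1 == "MemAvailable:" { avail = $2 }
        END {
            # Values in /proc/meminfo are in kB; convert to MiB.
            printf "total_mib=%d\n", total / 1024
            printf "free_mib=%d\n", free / 1024
            printf "available_mib=%d\n", avail / 1024
        }
    ' "${1:-/proc/meminfo}"
}

# Run against the live system when /proc/meminfo is readable.
if [ -r /proc/meminfo ]; then
    mem_summary
fi
```

If `available_mib` stays well above `free_mib`, most of the "used" memory is cache that the kernel expects to be able to hand back on demand.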

-

I think he's talking about XAPI alerts, done via the message object (see https://xapi-project.github.io/xen-api/classes/message.html). That's what we display in XO in the Dashboard/Health view (we call them "Alerts")

-

@dhiraj26683 let me try to rephrase - I hear 2 things:

- "buff/cache" has to come from somewhere: right. Pages used as read cache ought to be traceable to data read from a disk, and some (intrusive) monitoring could be put in place to find out what data is read and who pulls it into RAM. But checking this would only make sense if you can observe the cached memory not being released when another process allocates memory. Also note that "buff/cache" covers not only read cache (`Cached` in `/proc/meminfo`) but also write buffers (`Buffers` in `meminfo`).
- You had memory issues when you used vGPU, and now you don't (or you still have them, but fewer?): this could look like a memory leak in the vGPU driver?
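As a rough illustration of where "buff/cache" comes from, the sketch below prints the relevant `/proc/meminfo` fields separately. `cache_breakdown` is a hypothetical helper name. `SReclaimable`, `Dirty` and `Writeback` are not mentioned above; they are added here on the assumption that seeing reclaimable slab and pending writes next to the cache figures is useful.

```shell
#!/bin/sh
# cache_breakdown is a hypothetical helper; it prints selected fields of a
# meminfo-style file (default: /proc/meminfo) in kB, one per line.
cache_breakdown() {
    awk '
        $1 == "Buffers:" || $1 == "Cached:" || $1 == "SReclaimable:" ||
        $1 == "Dirty:"   || $1 == "Writeback:" {
            sub(":", "", $1)              # strip the trailing colon
            printf "%s_kib=%d\n", $1, $2
        }
    ' "${1:-/proc/meminfo}"
}

# Run against the live system when /proc/meminfo is readable.
if [ -r /proc/meminfo ]; then
    cache_breakdown
fi
```

Sampling this periodically (for example from cron) would show which component actually grows over the days before an alert.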

-

@yann @stormi We have used vGPU for about 8-9 months. Initially we never faced any issue. The issue started when we were about to stop the vGPU workflow, and it persists even after stopping that workflow. We need to restart the servers to release the memory. We usually migrate all VMs to one server and restart each host individually. This issue started recently; we never faced anything like it before.

Below is a one-year graph of both servers.

But I will surely try the new drivers and see if that helps. Thanks for your input, guys. Much appreciated.

-

@dhiraj26683 seeing the htop output, there's some HA-LIZARD PIDs running. So, yes, there's "extra stuff" installed on dom0

HA-LIZARD uses the TGT iSCSI driver, which in turn has an implicit `write-cache` option enabled by default if not set [1][2]. Is this option disabled in `/etc/tgt/targets.conf`?
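One way to answer that question is sketched below. `tgt_write_cache` is a hypothetical helper name, and the regular expression assumes the `write-cache on|off` directive form described in the targets.conf man page [2]. It only inspects the single file it is given, so any files pulled in through `include` lines would need to be checked separately.

```shell
#!/bin/sh
# tgt_write_cache is a hypothetical helper; it lists uncommented
# write-cache directives in a tgt config file, with line numbers.
tgt_write_cache() {
    conf="${1:-/etc/tgt/targets.conf}"
    if [ ! -r "$conf" ]; then
        echo "no readable config at $conf"
        return 0
    fi
    grep -nE '^[[:space:]]*write-cache[[:space:]]+(on|off)' "$conf" ||
        echo "write-cache not set in $conf (implicit default applies)"
}

tgt_write_cache
```

A host that does not use TGT at all will simply report that no readable config exists.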

[1] https://www.halizard.com/ha-iscsi

[2] https://manpages.debian.org/testing/tgt/targets.conf.5.en.html

-

@dhiraj26683 Ahh yes, we are using ha-lizard in our two-node cluster. Thanks for pointing that out @tuxen. But the thing is, we have been using it for 4-5 years with a basic configuration. I don't think that we are using the TGT iSCSI driver or have enabled any kind of write-cache. If that were the case, we would have seen the same behaviour on all the nodes in the same pool and in other pools.

We have only configured the parameters below for ha-lizard, and they have been unchanged since we started using it.

    DISABLED_VAPPS=()
    DISK_MONITOR=1
    ENABLE_ALERTS=1
    ENABLE_LOGGING=1
    FENCE_ACTION=stop
    FENCE_ENABLED=1
    FENCE_FILE_LOC=/etc/ha-lizard/fence
    FENCE_HA_ONFAIL=0
    FENCE_HEURISTICS_IPS=10.66.0.1
    FENCE_HOST_FORGET=0
    FENCE_IPADDRESS=
    FENCE_METHOD=POOL
    FENCE_MIN_HOSTS=2
    FENCE_PASSWD=
    FENCE_QUORUM_REQUIRED=1
    FENCE_REBOOT_LONE_HOST=0
    FENCE_USE_IP_HEURISTICS=1
    GLOBAL_VM_HA=0
    HOST_SELECT_METHOD=0
    MAIL_FROM=xen-cluster1@xxx.xx
    MAIL_ON=1
    MAIL_SUBJECT="SYSTEM_ALERT-FROM_HOST:$HOSTNAME"
    MAIL_TO=it@xxxxx.xxx
    MGT_LINK_LOSS_TOLERANCE=5
    MONITOR_DELAY=15
    MONITOR_KILLALL=1
    MONITOR_MAX_STARTS=20
    MONITOR_SCANRATE=10
    OP_MODE=2
    PROMOTE_SLAVE=1
    SLAVE_HA=1
    SLAVE_VM_STAT=0
    SMTP_PASS=""
    SMTP_PORT="25"
    SMTP_SERVER=10.66.1.241
    SMTP_USER=""
    XAPI_COUNT=2
    XAPI_DELAY=10
    XC_FIELD_NAME='ha-lizard-enabled'
    XE_TIMEOUT=10

@yann @stormi @olivierlambert we updated the ixgbe driver on one of the nodes a day ago. Let's see. I will post an update as soon as possible, and otherwise hold off on commenting on this thread unless we hit the issue again. Thank you so much for your help, guys.

-

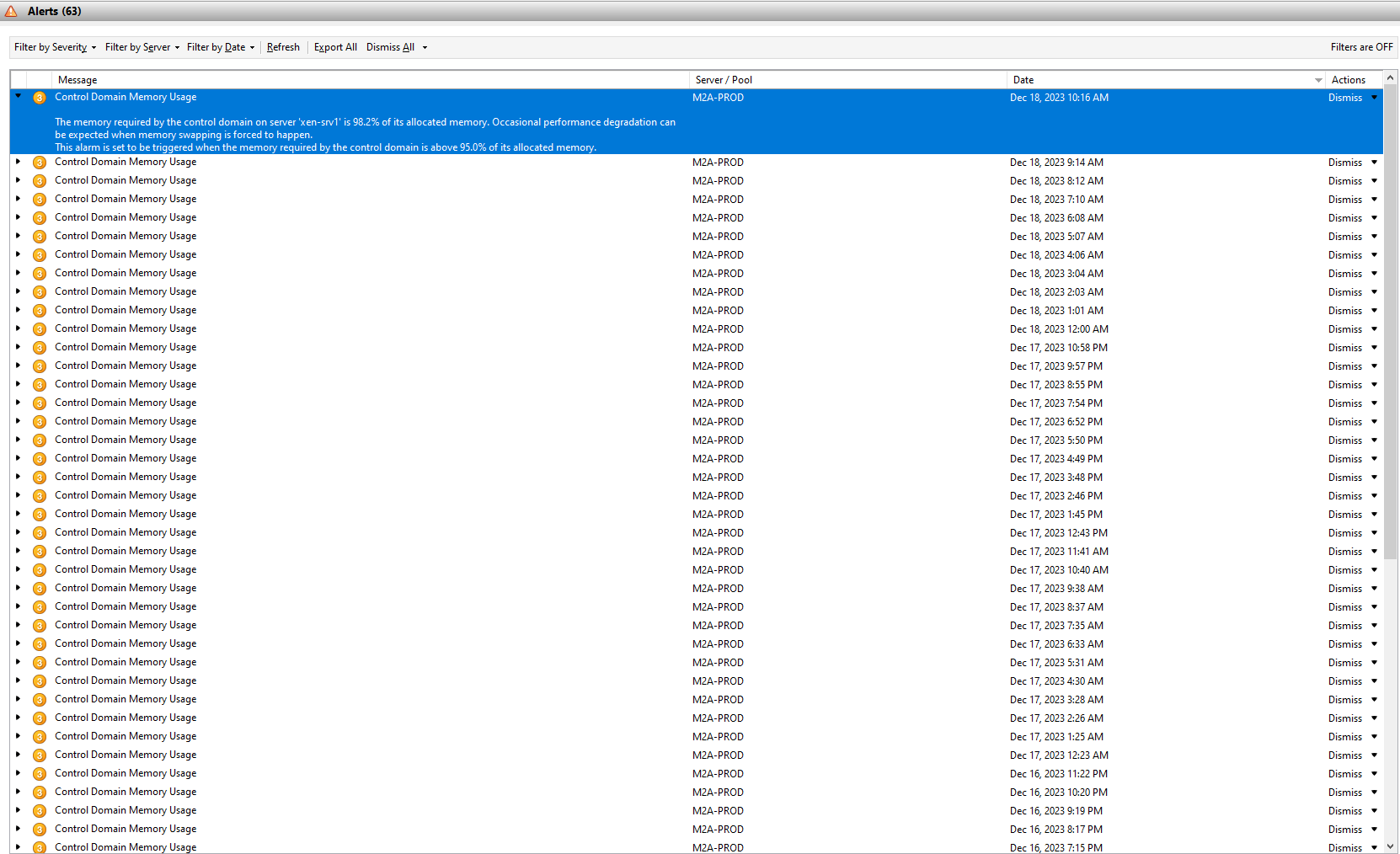

@dhiraj26683 Here we go. Memory has accumulated in cache, and the XCP-ng console has started reporting that about 98% of allocated memory is in use and that performance degradation might happen.

-

@dhiraj26683 I know XCP-ng Center is not supported anymore. But whenever this happens, in spite of a lot of memory being in cache, the VMs' performance is impacted.

-

@dhiraj26683 How do you see that VM performance is impacted?

-

@dhiraj26683 We have production infrastructure set up on these hosts. Access to the hosted services gets a little slower than usual. For example, one of the services is our deployment server, which we use to deploy software from a repository hosted on that same server. We see delays in operations even when the deployment server's utilization is not that high. If we move the server to another host whose utilization is low, the service works well.

Once we restart the XCP host, everything works well like before. So in the end, our only solution is to restart the impacted XCP hosts when memory utilization reaches its limit and the alerts start.

-

@dhiraj26683 Sorry for the late reply. I'm interested to know whether you solved this issue.

I have a similar issue on one node in a cluster; the other node is working well.

When the memory issue last happened, I moved all VMs to the 'healthy' node and ran the following on the problematic node:

    # free && sync && echo 3 > /proc/sys/vm/drop_caches && free

After a few seconds I was prompted with the login (the server was responsive the whole time, so I think it was not restarted).

I know that doesn't solve the problem of the cache not being released, but at least I didn't have to restart the server - maybe

So if you have something better, please let me know!
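For reference, the `drop_caches` command quoted above can be wrapped so that it reports how much page cache was actually released. This is only a sketch: `cached_kib` and `drop_and_report` are hypothetical helper names, and the function must be run as root. Writing `1` to `/proc/sys/vm/drop_caches` frees the page cache, `2` frees dentries and inodes, and `3` frees both; only clean pages are dropped, which is why `sync` is run first.

```shell
#!/bin/sh
# cached_kib is a hypothetical helper; it prints the Cached value (in kB)
# from a meminfo-style file (default: /proc/meminfo).
cached_kib() {
    awk '$1 == "Cached:" { print $2 }' "${1:-/proc/meminfo}"
}

# drop_and_report is a hypothetical helper; run it as root.
# It flushes dirty pages, drops clean caches, and prints before/after values.
drop_and_report() {
    before=$(cached_kib)
    sync                                  # write dirty pages out first
    echo 3 > /proc/sys/vm/drop_caches     # 3 = page cache + dentries/inodes
    after=$(cached_kib)
    echo "Cached: ${before} kB -> ${after} kB"
}
```

Calling `drop_and_report` from a root shell on the affected host shows whether the cache is reclaimable at all; if `Cached` barely moves, the memory is probably held by something other than clean page cache.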