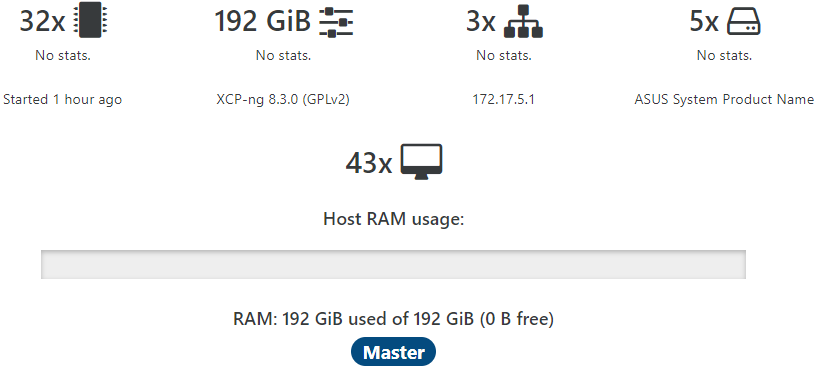

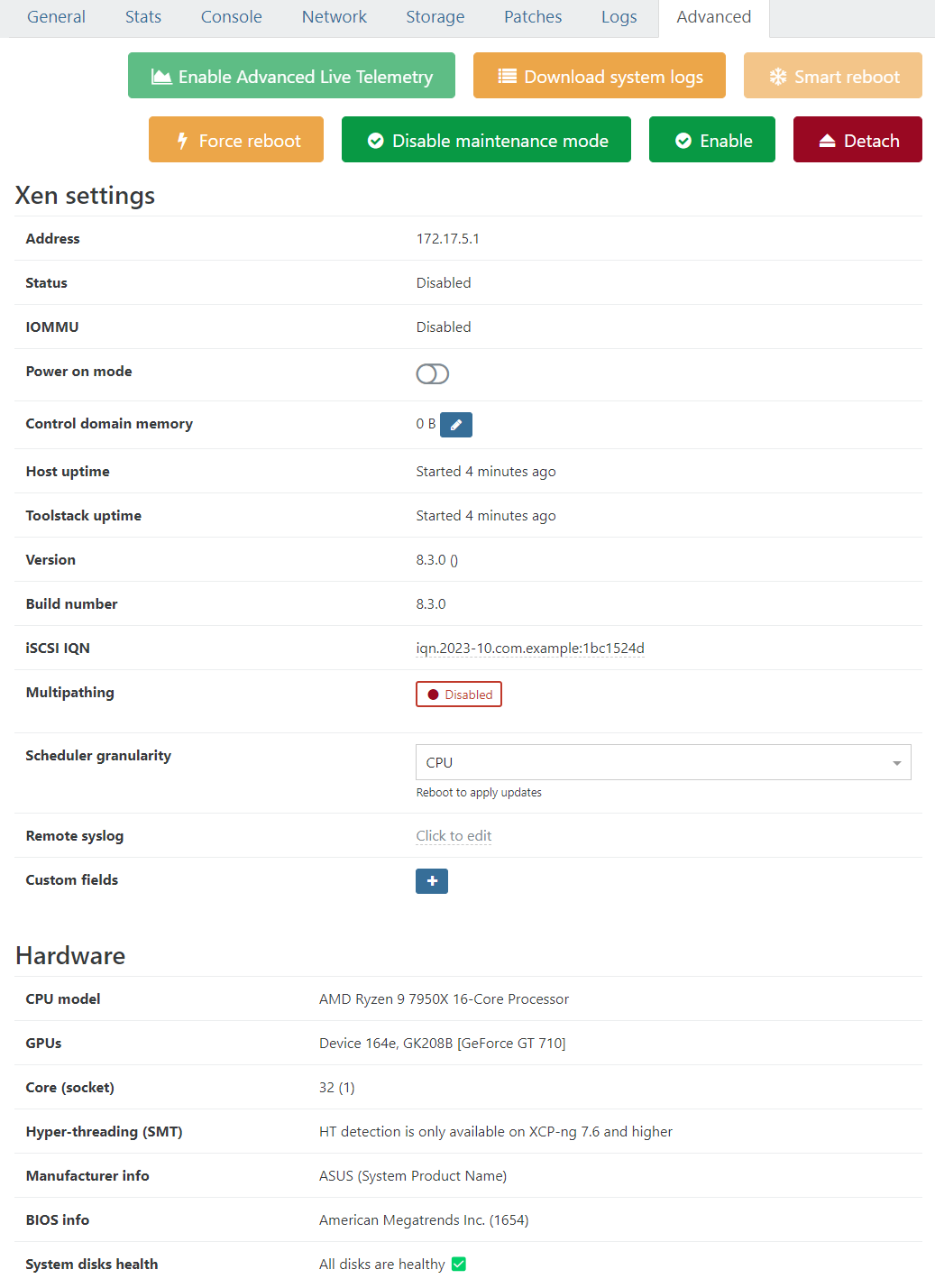

After installing updates: 0 bytes free, Control domain memory = 0B

-

@olivierlambert

I love and respect your attitude

Agree wholeheartedly with @nikade

-

So

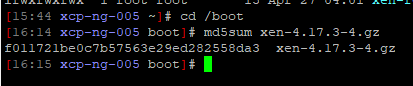

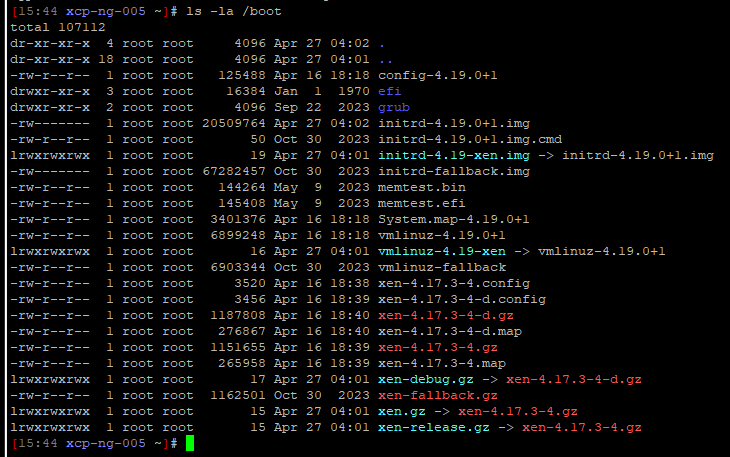

`/boot/xen.gz` is pointing to `xen-4.17.3-4.gz`, which sounds correct. So why are you still running on Xen 4.13?

It's like you did not reboot, but since you showed me the Grub menu, I'm assuming you already did.

It would be interesting to compare the existing xen file and see if it's the right one from our repo. Something is fishy here

-

Can you run `md5sum xen-4.17.3-4.gz`?

From the mirror & RPM, I have:

f011721be0c7b57563e29ed282558da3 -

Adding @yann in the loop in case I'm missing something obvious
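A minimal sketch of that comparison, for anyone following along. The helper name `check_md5` is hypothetical, and the `/boot/xen-4.17.3-4.gz` path is an assumption based on where the `/boot/xen.gz` symlink is said to point; the hash is the one quoted above.

```shell
# Hypothetical helper: compare a file's MD5 with an expected value.
# Usage: check_md5 <file> <expected-md5>
check_md5() {
    file=$1
    expected=$2
    # md5sum prints "<hash>  <name>"; keep only the hash.
    actual=$(md5sum "$file" | awk '{print $1}')
    if [ "$actual" = "$expected" ]; then
        echo "OK"
    else
        echo "MISMATCH: got $actual"
        return 1
    fi
}

# On the host (path is an assumption, hash is from the post above):
#   readlink -f /boot/xen.gz       # which file the symlink resolves to
#   xl info | grep xen_version     # which Xen is actually running
#   check_md5 /boot/xen-4.17.3-4.gz f011721be0c7b57563e29ed282558da3
```

If the checksum matches but `xl info` still reports the old version, the file on the root filesystem is probably fine and the bootloader is loading Xen from somewhere else.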

-

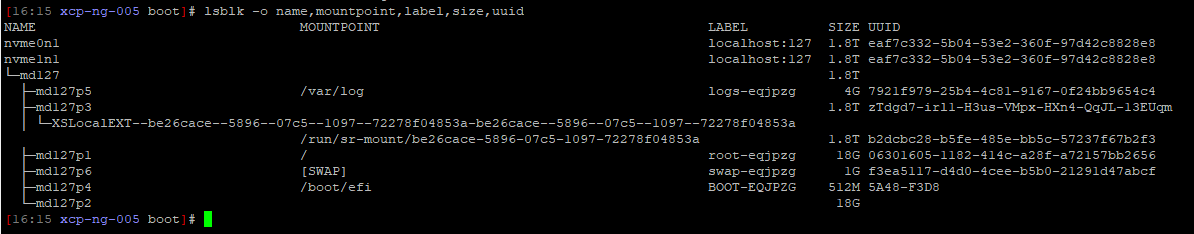

@Dataslak what does `lsblk -o name,mountpoint,label,size,uuid` show?

-

@yann

Hello Yann, thank you for pitching in.

-

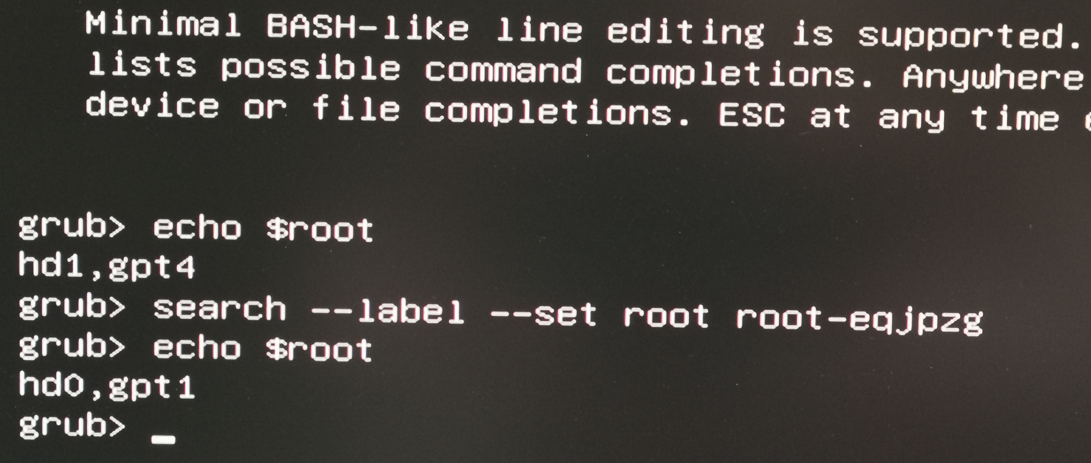

@Dataslak can you please request a command line from GRUB (hit `c` on the boot menu), and issue the following commands, in this order:

- `echo $root`
- `search --label --set root root-eqjpzg`
- `echo $root`

Also a

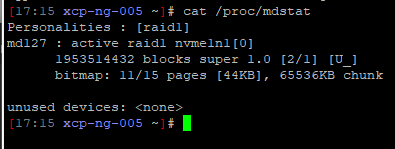

cat /proc/mdstatin the Dom0 would help. -

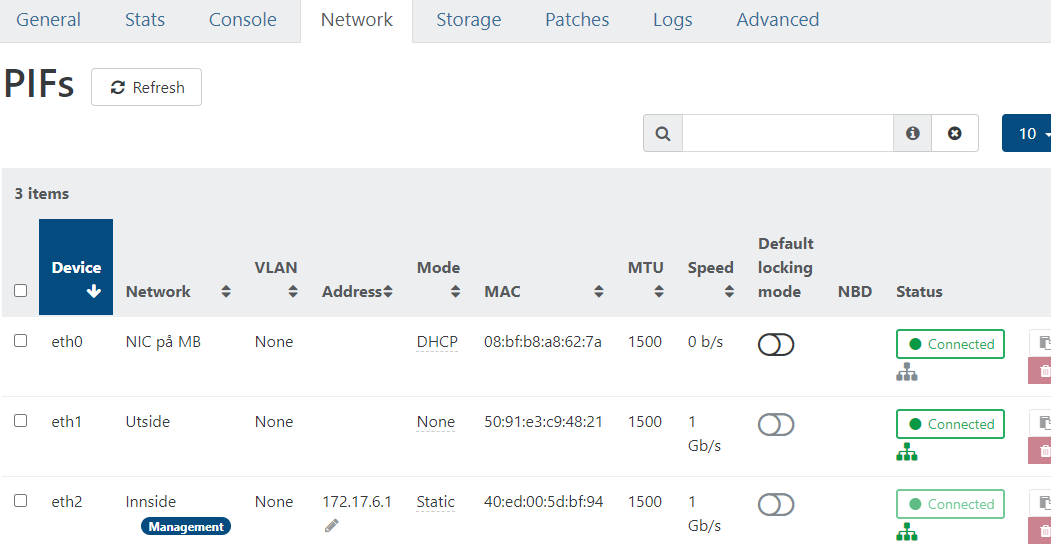

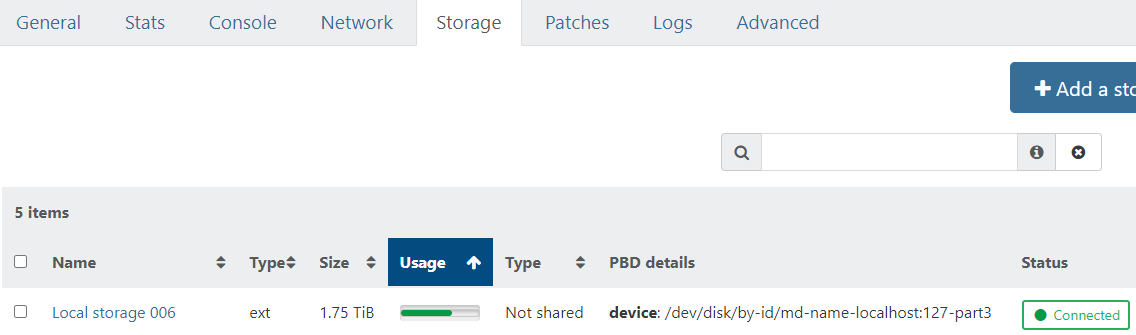

Info: I am mirroring two M.2 SSDs ! Software RAID established by the installation routine of v8.3.

Could the mirror be broken and cause this somehow?

@olivierlambert said in After installing updates: 0 bytes free, Control domain memory = 0B:

Also a cat /proc/mdstat in the Dom0 would help.

Please forgive my ignorance: How do I execute this command in Dom0 ?

I've read https://wiki.xenproject.org/wiki/Dom0 and it helped a little. Do I run the command in the console within XOA?

-

@Dataslak so it is choosing to "boot from the 1st disk of the raid1"; we could try to tell it to boot from the 2nd one:

- on the GRUB menu, hit `e` to edit the boot commands
- replace that `search ...` line with `set root=hd1,gpt1`
- then hit Ctrl-x to boot

-

@Dataslak Dom0 is "the host" (strictly speaking it's not really the host, but anyway), i.e. the machine you are connected to and have been showing results from since the start.

-

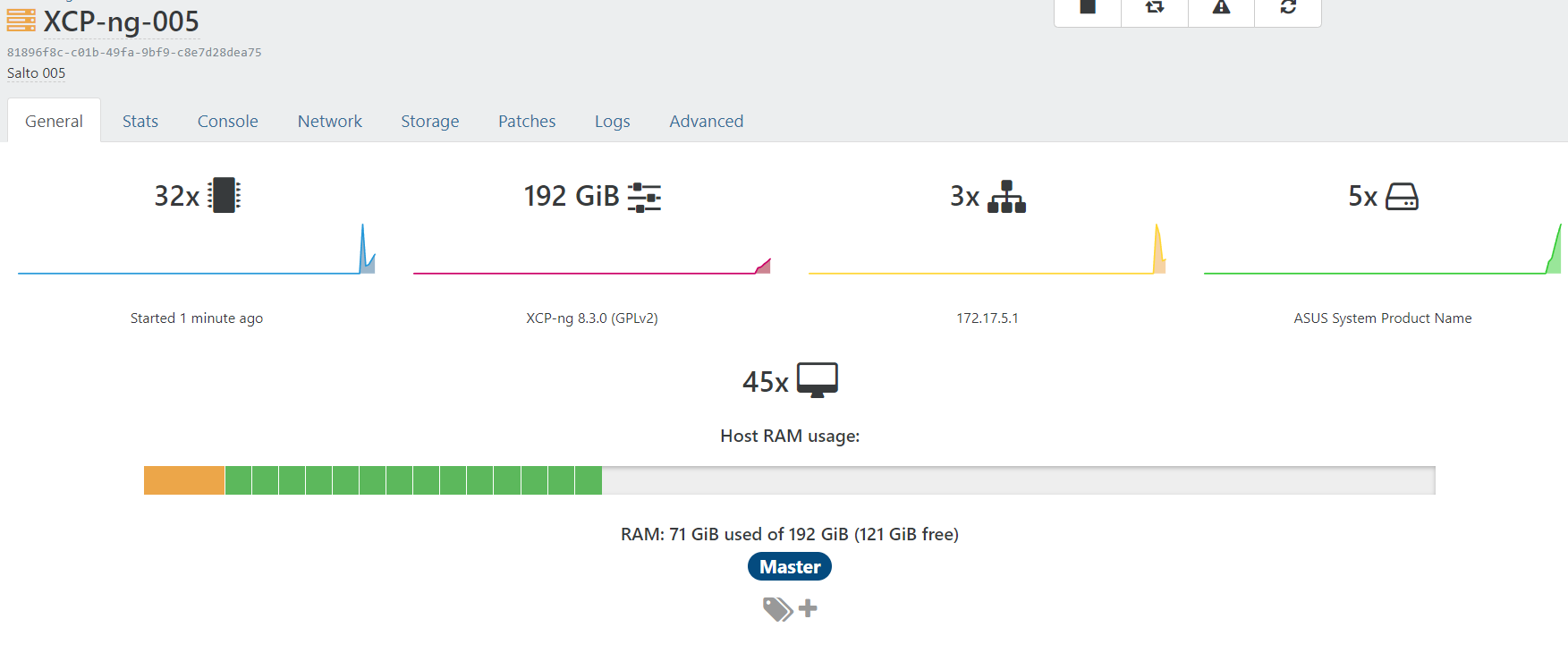

@yann

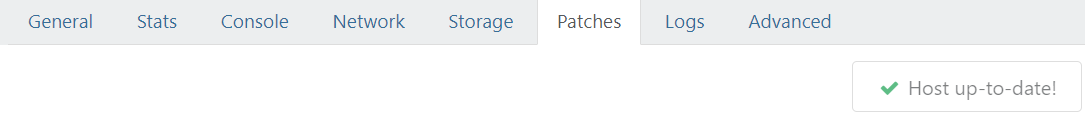

Wohoo!!

All VMs came up!

Host is not in maintenance mode.

Control domain memory = 12GiB

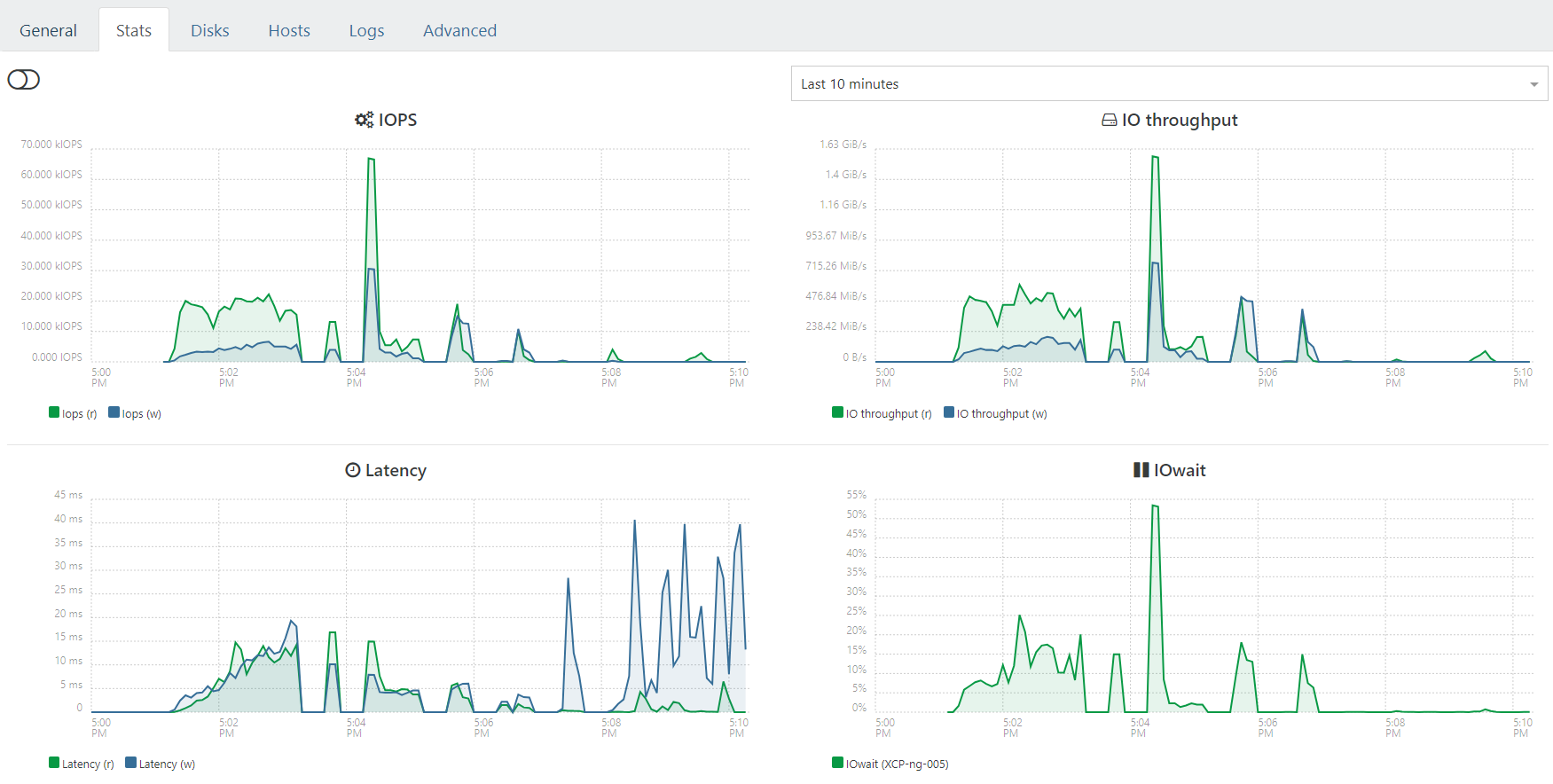

Stats are back

Etc....

As far as I can see (which is limited) everything looks good?

How can I see the status of the RAID1 and see if the mirror is intact ?

-

@olivierlambert

Thank you for explaining to me. I will look more into details when (if) I find time

Ah - I see you were ahead of me !

How can I interpret this? Raid1 OK? Synched? Ready to deal with a single drive failure?

How will XO inform me if one of the drives fails? Will I have to scour through logs, or will there be a clear visible notice in the interface?

-

That's the problem. Your RAID1 lost the sync. And so it continued to boot on the disk out of sync, loading the old Xen from the boot while the rest (root partition) was up to date.
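For reading `/proc/mdstat`: each array has a member map such as `[UU]`, where `U` is an active member and `_` is a missing one, so `[U_]` or `[_U]` means the mirror is running on a single disk. A rough sketch that flags such arrays follows; the function name `degraded_arrays` is made up for illustration.

```shell
# Reads /proc/mdstat-style text on stdin and prints the name of every md
# array whose member map (e.g. [UU], [U_]) contains an underscore.
degraded_arrays() {
    awk '
        /^md/ { name = $1 }            # remember the current array name
        /\[[U_]+\]/ {
            if (match($0, /\[[U_]+\]/) &&
                index(substr($0, RSTART, RLENGTH), "_"))
                print name             # at least one member is missing
        }
    '
}

# On the host:
#   degraded_arrays < /proc/mdstat
```

No output means every array listed has all of its members active.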

-

@olivierlambert

Since this happened on six servers simultaneously when applying updates through XO, I guess we may have found an error? If so, then all of this was not in vain, and I can be happy to have made a tiny tiny contribution to the development of 8.3?

Will the modification of the Grub boot loader be safe to apply to all remaining 5 servers? Or should I do some verification on each before applying it?

Is the modification of Grub what I will have to do if a drive fails? Change that one line from `set root=hd1,gpt1` to `set root=hd0,gpt1` or something?

-

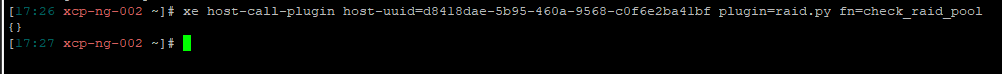

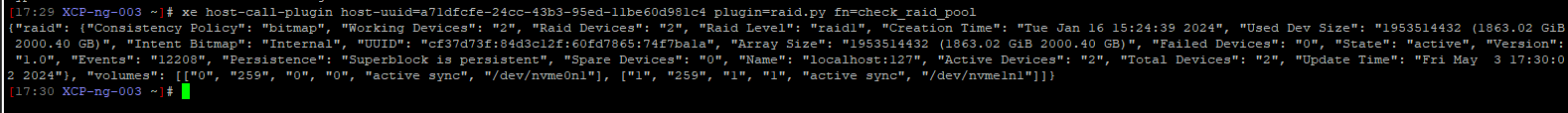

I don't know yet, but you lost one drive. Can you run `xe host-call-plugin host-uuid=<uuid> plugin=raid.py fn=check_raid_pool`? (Replace `<uuid>` with the UUID of the host.)

Edit: check that on all your other hosts.
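If several hosts are in the same pool, the check can be looped over them. This is only a sketch: `check_raid_all_hosts` is a hypothetical name, it assumes `xe` is run in the Dom0 of a pool member, and it only looks for the word "degraded" in whatever the plugin returns rather than parsing the output. Standalone hosts would each need the command run locally.

```shell
# Runs the raid.py check on every host the local xe CLI knows about and
# flags any whose plugin output mentions "degraded".
check_raid_all_hosts() {
    # `xe host-list --minimal` prints host UUIDs separated by commas.
    for uuid in $(xe host-list --minimal | tr ',' ' '); do
        result=$(xe host-call-plugin host-uuid="$uuid" \
                    plugin=raid.py fn=check_raid_pool)
        case $result in
            *degraded*) echo "$uuid: DEGRADED" ;;
            *)          echo "$uuid: not reported as degraded" ;;
        esac
    done
}

# On a pool member:
#   check_raid_all_hosts
```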

-

XCP-ng-002:

This runs 8.2.1 with only one drive. I was planning on upgrading it to 8.3 and insert another drive to obtain redundancy when time was available:

XCP-ng-003:

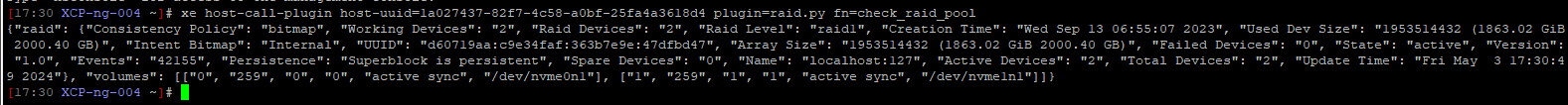

XCP-ng-004:

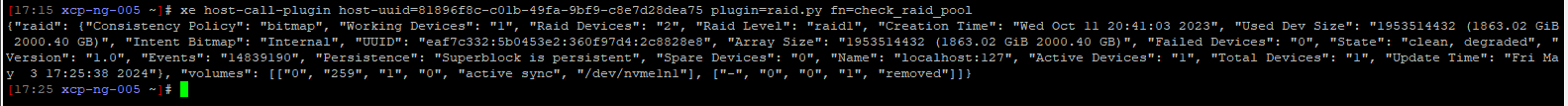

XCP-ng-005:

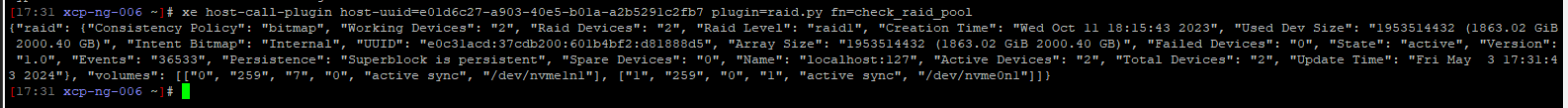

XCP-ng-006:

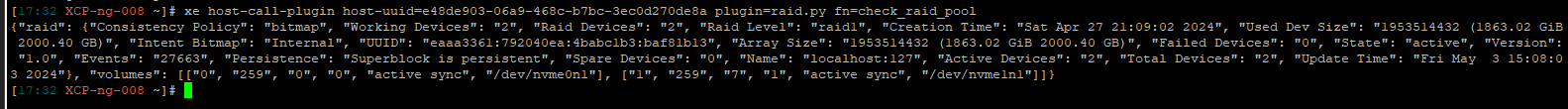

XCP-ng-008:

This server is clean installed after the problems

Can your trained eyes see anything I should be aware of?

-

I can immediately see the State "clean, degraded" on XCP-ng-005. The rest are in the state "active", which is OK.

So you don't have a similar issue on your other hosts; it's only this one. You have a dead disk (it has not been syncing for a while). Try to check the dead disk and, if you can, force a RAID1 sync on it.
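A hedged sketch of what that could look like with `mdadm`. The device names `/dev/md127` and `/dev/sdb` are assumptions and must be checked against `lsblk` and `/proc/mdstat` on the affected host first; `md_state` is a hypothetical helper that just pulls the "State" line out of `mdadm --detail`.

```shell
# Extracts the value of the "State :" line from `mdadm --detail` output
# given on stdin, with trailing whitespace removed.
md_state() {
    sed -n -e 's/[[:space:]]*$//' -e 's/^[[:space:]]*State : *//p'
}

# On the host (device names are assumptions, verify them first):
#   mdadm --detail /dev/md127 | md_state          # e.g. "clean, degraded"
#   mdadm --manage /dev/md127 --add /dev/sdb      # put the member back
#   cat /proc/mdstat                              # watch the resync
```

If the disk itself is failing, adding it back will not hold, so it is worth checking its health before relying on the mirror again.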