Best way to migrate VMs from old pool to new?

-

I have an old pool running XCP-ng 8.1.0. As the hardware is getting long in the tooth, I've set up a couple of new hosts running XCP-ng 8.2.1 that I'm trying to migrate to. These are plugged into a gigabit switch for management and for the DMZ the VMs are on, and a pair of 10G switches carries the storage network, with VM storage provided by TrueNAS. Probably pretty straightforward.

I run a couple of forums on this cluster, and I'd like to avoid downtime during the migration so users don't complain. So I'm looking for the best way to do that.

I've used the warm migration tool before and this would be a wonderful way to move the VMs, but it looks like 8.1.0 is just too old to support warm migration.

So the question: how do I transfer the VMs with the least downtime possible?

Right now I've got 2 ideas:

- Get a trial of XOA, let it update the pool to 8.2.1, then warm migrate. I've been on the fence about paying for XOA anyway, but haven't because it's essentially a hobby cluster. Can XOA update the XCP-ng hosts, or will I be setting up an FTP server and updating them the way I did with XenServer a decade ago?

- Create a new VM on my new cluster with access to the 10G network, and figure out hardware pass-through so I can plug in a USB SSD large enough to hold the VMs I'm migrating, then just download the virtual machines and upload them to the new server. I've never done this, but I think it should work.

Maybe there's a third option I haven't figured out yet.

What would you do?

-

I would set up a Continuous Replication backup job to create a duplicate VM on the new storage repository. When you are ready to perform the cutover, shut down the original VM, perform one final run of the CR job, and then start the new VM on the new pool.
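The cutover sequence described above can be sketched as an ordered set of steps. This is a minimal Python sketch, not a Xen Orchestra API: `stop_source`, `run_replication` and `start_replica` are hypothetical hooks you would connect to whatever you actually use to carry out each step (the XO web UI by hand, your own scripts, etc.). The ordering is the point: the final CR run happens only after the original VM is stopped, so the replica includes everything the original wrote before it stopped, and if any step fails the later ones are skipped. Nothing deletes the original VM, so it stays on the old pool as a fallback.

```python
from typing import Callable, List


def cr_cutover(
    stop_source: Callable[[], None],
    run_replication: Callable[[], None],
    start_replica: Callable[[], None],
) -> List[str]:
    """Perform the Continuous Replication cutover steps in order.

    All three callables are hypothetical hooks, not Xen Orchestra API calls.
    Returns the names of the steps that completed. If a step raises, the
    exception propagates and the remaining steps are not run.
    """
    steps = [
        ("stop original VM", stop_source),        # no new writes on the old pool
        ("final CR run", run_replication),        # copy the last changes to the replica
        ("start replica on new pool", start_replica),
    ]
    completed: List[str] = []
    for name, action in steps:
        action()
        completed.append(name)
    # Nothing here deletes the original VM: it stays on the old pool,
    # powered off, as a fallback until you remove it yourself.
    return completed


if __name__ == "__main__":
    # Dry run with stand-in hooks that only print what they would do.
    done = cr_cutover(
        lambda: print("stopping original VM"),
        lambda: print("running final CR job"),
        lambda: print("starting replica"),
    )
    print("completed:", done)
```

Passing the steps in as hooks keeps the ordering logic separate from how each step is actually performed, which makes it easy to dry-run as in the demo.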

-

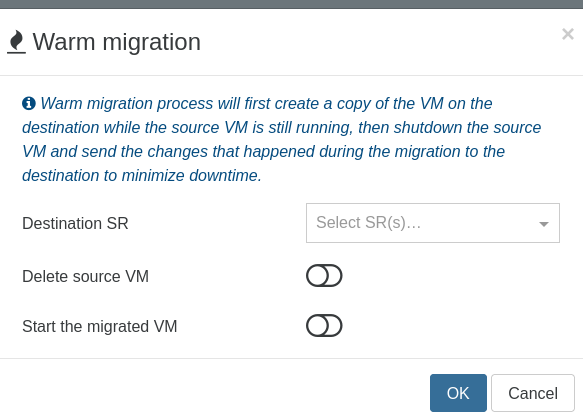

Note that this is done almost automagically by our warm migration option (it does the same thing, but integrated).

-

@Danp said in Best way to migrate VMs from old pool to new?:

I would set up a Continuous Replication backup job to create a duplicate VM on the new storage repository. When you are ready to perform the cutover, shut down the original VM, perform one final run of the CR job, and then start the new VM on the new pool.

This is exactly what I did years ago in another environment that I managed. It worked great and was seamless.

-

@olivierlambert There is a slight but important difference: with replication, you still have the VM in the old pool as a failsafe. I just love warm migrations between clusters. They look like magic to me, but precisely that magic feeling makes me worry: "what happens if something goes wrong?"

It would be super cool to be able to check "leave the old machine powered off, I will delete it myself" when doing a warm migration between clusters.

-

In that case, use replication; it's exactly what you need. With warm migration, though, you can always "warm back" to the original machine.

-

@olivierlambert That is what I do right now. It would be very handy to have a warm migration that doesn't delete the VM disks and configuration in the old cluster, though: I'd get the convenience of warm migration (no shutdown needed, every step automated) plus the peace of mind that, if something goes wrong, I can always start the VM again in the old cluster.

Thanks for the answer!

-

@ediazcomellas

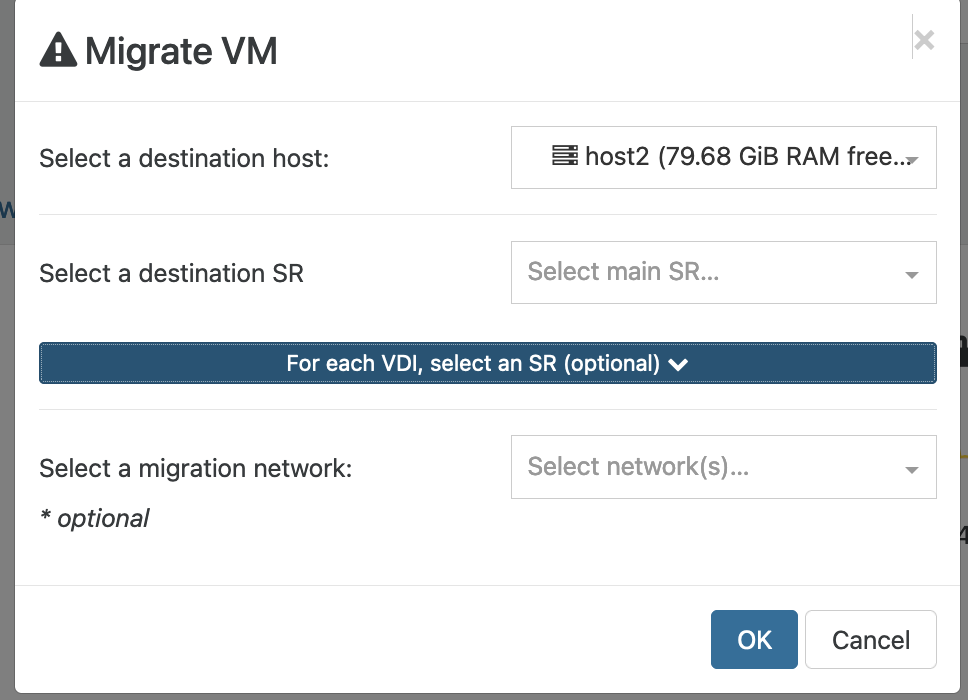

Did I miss anything, or why don't you just live-migrate between the hosts? Did you change CPU manufacturer? If the VM's size is below 1 TB, that should work fine, but of course the VM is removed from the source.

-

@KPS live/warm migration between clusters is what we were discussing here. Yes, we do it routinely and it is a fantastic feature.

We have some VMs with more than 1 or 2 TB of storage, and migrating those takes ages. In that case, it is easier to accept some downtime and follow the continuous replication strategy, with the last copy done while the VM is powered off. The only advantage of this strategy over live migration is that you retain a copy of the VM in the old cluster.
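To put "takes ages" into rough numbers, here is a back-of-the-envelope estimate of raw copy time as size divided by link speed. It is a lower bound under loud assumptions: decimal terabytes, the copy saturating a single link for its whole duration, and no protocol overhead, compression or storage bottlenecks. The `efficiency` parameter is a hypothetical derating knob, not a measured value. Real transfers are usually slower, and which network a copy actually uses (for example the gigabit management network versus the 10G storage network mentioned at the start of the thread) depends on how the pools are configured.

```python
def transfer_hours(size_tb: float, link_gbit: float, efficiency: float = 1.0) -> float:
    """Estimated hours to copy `size_tb` decimal terabytes over a `link_gbit` Gbit/s link.

    `efficiency` (0 < efficiency <= 1) is a hypothetical derating factor for
    overhead; 1.0 means the link stays fully saturated for the whole copy.
    """
    if size_tb < 0 or link_gbit <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, link speed or efficiency")
    bits = size_tb * 1e12 * 8                      # TB -> bytes -> bits
    seconds = bits / (link_gbit * 1e9 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # Print a small table of best-case copy times.
    for size in (1, 2):
        for link in (1, 10):
            minutes = transfer_hours(size, link) * 60
            print(f"{size} TB over {link} Gbit/s: ~{minutes:.0f} min at line rate")
```

For example, 1 TB over a fully saturated gigabit link is 8,000 seconds, a little over two hours, before any overhead is counted.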

The ability to do a live migration but retain a copy of the VM in the old cluster, just in case, would be greatly appreciated. Best of both worlds.

-

Issue created: https://github.com/vatesfr/xen-orchestra/issues/8034

-

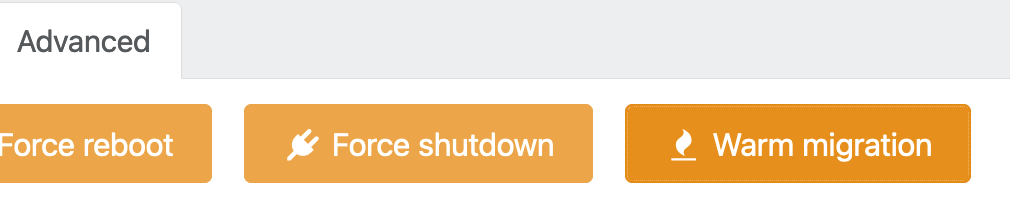

But… but. It's already there.

I don't know how it could have been missed.

-

@olivierlambert Never seen that!?

Use this:

-

@manilx Just found out about this.

Stupid me... I could have used that a few times between our production and backup pools, which are different.

There's always something "new"
