CBT: the thread to centralize your feedback

-

With the latest XO update released this week, I am seeing a new behavior when running a backup after a VM has moved from Host A to Host B (while staying on the same shared NFS SR).

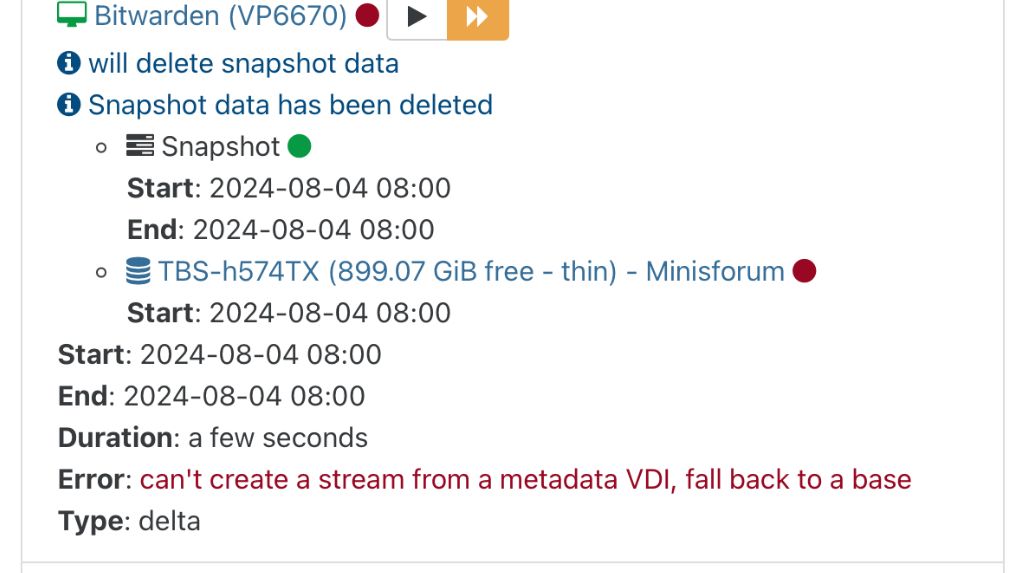

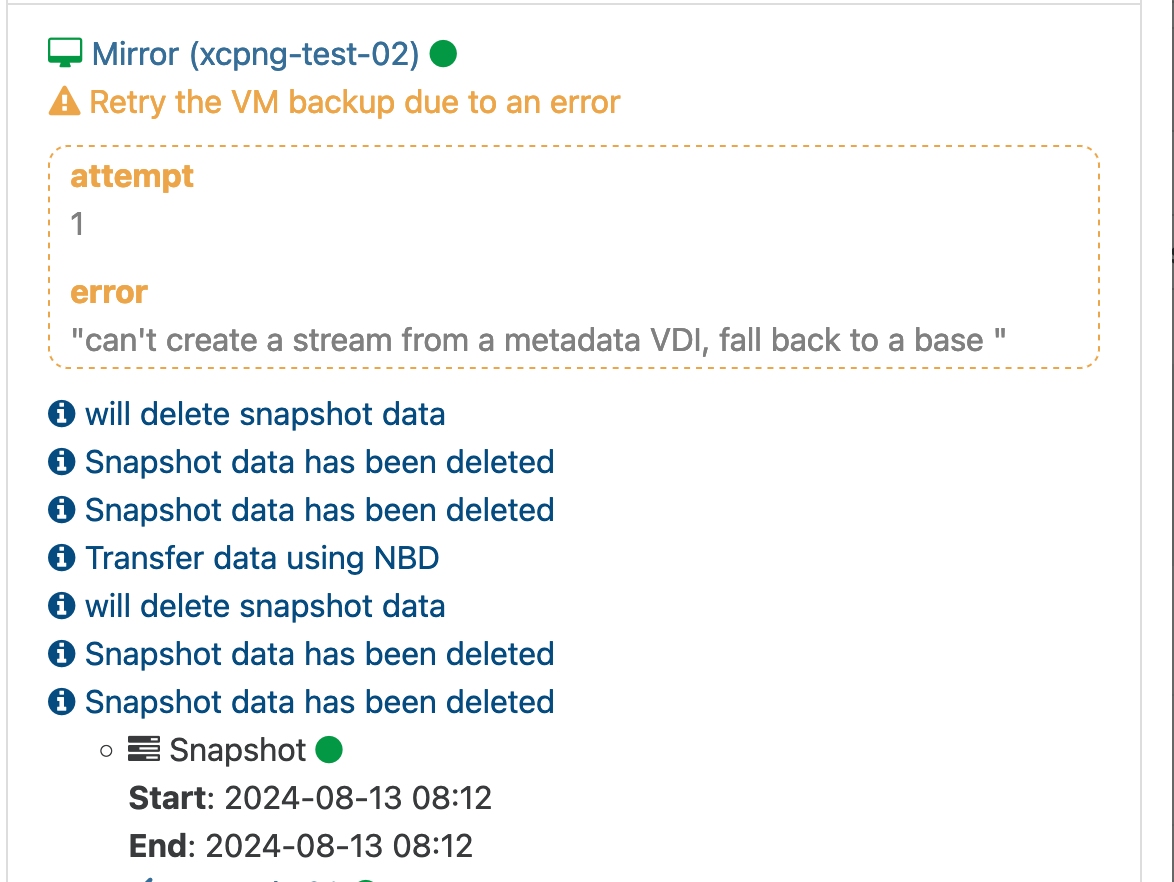

The new error is "Error: can't create a stream from a metadata VDI, fall back to a base ". It then retries and runs a full backup.

-

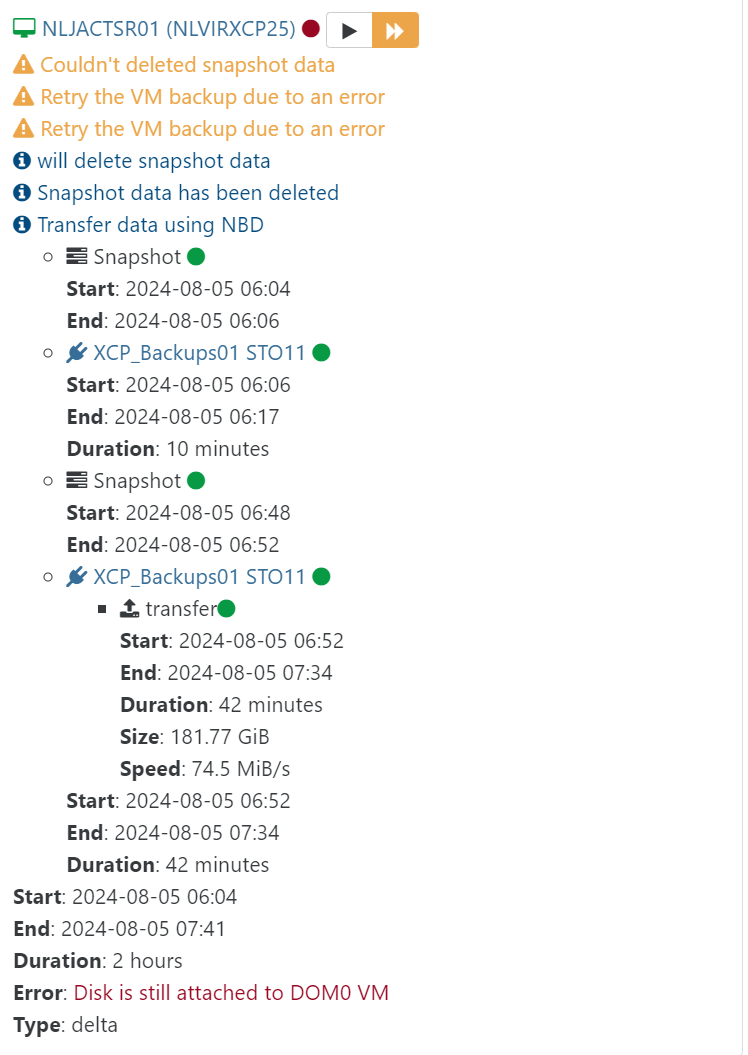

On my CR job I got this error again:

on all VM’s


Next run was ok.

Running commit cb6cf

-

@manilx we see this error on some backups as well. Not as often as we saw it before this version, so it seems to have improved a bit.

As a workaround I set retries on the backups, which resolves it in most cases, but sometimes I still get this error

Also, we still get the VDI in use errors now and then. In that situation vdi-data-destroy is not run, leaving a normal snapshot with CBT. That's not a big deal, as it only affects a very small number of VMs. However, they show up as orphan VDIs on the XOA Health page, which is a bit odd; I think they should not be visible there.
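To make the symptom above easier to spot, here is a minimal sketch that picks out snapshot VDIs that still have CBT enabled but were never reduced to metadata-only. It assumes you already have the VDI records as plain dictionaries (fetched however you prefer); the field names `is_a_snapshot`, `cbt_enabled` and `type` follow the XAPI VDI record, while the function name and sample data are purely illustrative. This is not how XO builds its Health page.

```python
from typing import Dict, List


def find_pending_data_destroy(vdis: List[Dict]) -> List[str]:
    """Return UUIDs of CBT snapshots that still hold their data.

    After a successful data-destroy, XAPI reports the VDI type as
    "cbt_metadata". A CBT-enabled snapshot with any other type was
    most likely left behind by a failed cleanup (assumption).
    """
    pending = []
    for vdi in vdis:
        is_snapshot = vdi.get("is_a_snapshot", False)
        cbt_enabled = vdi.get("cbt_enabled", False)
        if is_snapshot and cbt_enabled and vdi.get("type") != "cbt_metadata":
            pending.append(vdi["uuid"])
    return pending


# Hypothetical records, for illustration only.
sample_vdis = [
    {"uuid": "disk-live", "is_a_snapshot": False, "cbt_enabled": True, "type": "user"},
    {"uuid": "snap-meta", "is_a_snapshot": True, "cbt_enabled": True, "type": "cbt_metadata"},
    {"uuid": "snap-full", "is_a_snapshot": True, "cbt_enabled": True, "type": "user"},
]
print(find_pending_data_destroy(sample_vdis))
```

Once the VDI is no longer in use, running `xe vdi-data-destroy uuid=<uuid>` on each reported snapshot should turn it into a metadata-only snapshot.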

-

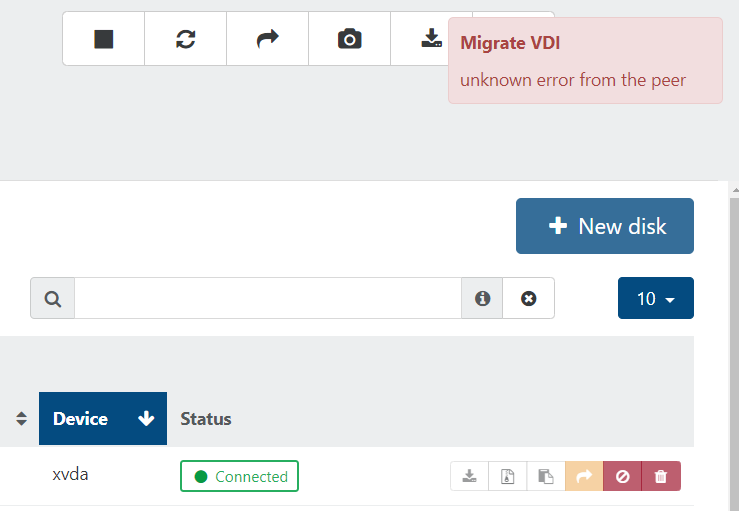

Has anyone seen issues migrating VDIs once CBT is enabled? We're seeing VDI_CBT_ENABLED errors when we try to live migrate disks between SRs. Disabling CBT on the disk allows the migration to go ahead. Users with limited access don't seem to see the specifics of the error, but we as admins get a VDI_CBT_ENABLED error. Ideally, I think we'd want to be able to migrate VDIs with CBT still enabled, or have the VDI migration process disable CBT temporarily, migrate, then re-enable it.
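The disable, migrate, re-enable sequence suggested above could be sketched roughly as follows. This is only an illustration of the idea, not what XO does internally. The `run` callable is a hypothetical hook that executes a command and returns its output, and the sketch assumes that `xe vdi-pool-migrate` prints the UUID of the migrated VDI; verify that on your own pool before relying on it.

```python
from typing import Callable, List

# Hypothetical hook: runs a command and returns its stdout.
Runner = Callable[[List[str]], str]


def migrate_vdi_with_cbt_paused(run: Runner, vdi_uuid: str, dest_sr_uuid: str) -> str:
    """Disable CBT, migrate the VDI to another SR, then re-enable CBT."""
    run(["xe", "vdi-disable-cbt", f"uuid={vdi_uuid}"])
    try:
        output = run(
            ["xe", "vdi-pool-migrate", f"uuid={vdi_uuid}", f"sr-uuid={dest_sr_uuid}"]
        )
    except Exception:
        # Migration failed: restore CBT on the original disk, then re-raise.
        run(["xe", "vdi-enable-cbt", f"uuid={vdi_uuid}"])
        raise
    # Assumption: the command prints the new VDI UUID; fall back to the old one.
    target_uuid = output.strip() or vdi_uuid
    run(["xe", "vdi-enable-cbt", f"uuid={target_uuid}"])
    return target_uuid


# A real runner could look like this (requires `import subprocess`):
# run = lambda cmd: subprocess.run(
#     cmd, check=True, capture_output=True, text=True
# ).stdout
```

Re-enabling CBT this way starts tracking from scratch on the destination, so the next backup of that disk should be expected to be a full.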

User errors:

Admins see:

```
{
  "id": "7847a7c3-24a3-4338-ab3a-0c1cdbb3a12a",
  "resourceSet": "q0iE-x7MpAg",
  "sr_id": "5d671185-66f6-a292-e344-78e5106c3987"
}
{
  "code": "VDI_CBT_ENABLED",
  "params": [
    "OpaqueRef:aeaa21fc-344d-45f1-9409-8e1e1cf3f515"
  ],
  "task": {
    "uuid": "9860d266-d91a-9d0e-ec2a-a7752fa01a6d",
    "name_label": "Async.VDI.pool_migrate",
    "name_description": "",
    "allowed_operations": [],
    "current_operations": {},
    "created": "20240807T21:33:29Z",
    "finished": "20240807T21:33:29Z",
    "status": "failure",
    "resident_on": "OpaqueRef:8d372a96-f37c-4596-9610-1beaf26af9db",
    "progress": 1,
    "type": "<none/>",
    "result": "",
    "error_info": [
      "VDI_CBT_ENABLED",
      "OpaqueRef:aeaa21fc-344d-45f1-9409-8e1e1cf3f515"
    ],
    "other_config": {},
    "subtask_of": "OpaqueRef:NULL",
    "subtasks": [],
    "backtrace": "(((process xapi)(filename ocaml/xapi/xapi_vdi.ml)(line 470))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 4696))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 199))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 203))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 42))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 51))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 4708))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 4711))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename ocaml/xapi/helpers.ml)(line 1503))((process xapi)(filename ocaml/xapi/message_forwarding.ml)(line 4705))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 35))((process xapi)(filename lib/xapi-stdext-pervasives/pervasiveext.ml)(line 24))((process xapi)(filename ocaml/xapi/rbac.ml)(line 205))((process xapi)(filename ocaml/xapi/server_helpers.ml)(line 95)))"
  },
  "message": "VDI_CBT_ENABLED(OpaqueRef:aeaa21fc-344d-45f1-9409-8e1e1cf3f515)",
  "name": "XapiError",
  "stack": "XapiError: VDI_CBT_ENABLED(OpaqueRef:aeaa21fc-344d-45f1-9409-8e1e1cf3f515) at Function.wrap (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_XapiError.mjs:16:12) at default (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/_getTaskResult.mjs:13:29) at Xapi._addRecordToCache (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1033:24) at file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1067:14 at Array.forEach (<anonymous>) at Xapi._processEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1057:12) at Xapi._watchEvents (file:///usr/local/lib/node_modules/xo-server/node_modules/xen-api/index.mjs:1230:14)"
}
```

-

@jimmymiller CBT should already be disabled as part of the migration; that feature was part of the first release. However, there seems to be a bug where it disables CBT but leaves the metadata-only snapshots on the VM. I believe this is what causes the issue.

I think this pull request was created to solve this in the next release:

https://github.com/vatesfr/xen-orchestra/pull/7903

-

I have been testing CBT with migrations in our test environment, and this is what I have observed:

Host 1 and Host 2 are in a pool together with a shared NFS SR. If TestVM-01 is on Host 1, using the NFS SR with CBT backups enabled, all is fine. Clicking on the VM and then Disks shows that CBT is enabled on the drives. If I migrate the VM over to Host 2, CBT is disabled and the VM is migrated successfully. On the next backup job run, however, the job initially fails with the error "can't create a stream from a metadata VDI, fall back to a base ". After a retry, the job runs.

If multiple jobs exist for a VM, say a backup job and a replication job, will that result in 2 CBT snapshots? That is a ton of space saved compared with keeping 2 regular snapshots with the old backup method, and it cuts down on GC time and storage IO by quite a bit!

-

@flakpyro that’s exactly the reason we were asking for the CBT option to become available. It makes a huge difference in storage usage and in the amount of writes done to the storage. Huge improvement!

-

In theory, migrating a VM to another host (but keeping the same shared SR) shouldn't re-trigger a full. It only happens when the VDI is migrated to another SR (the VDI UUID will change and the metadata will be lost).

At least, we can try to reproduce this internally (I couldn't on my prod).
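The distinction drawn above between moving a VM to another host and moving a VDI to another SR can be written down as a tiny check. This is a sketch of the reasoning only, under the assumption that a changed VDI UUID is the signal that the CBT metadata is gone; the `DiskState` type and function name are hypothetical and do not come from XO's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskState:
    vdi_uuid: str  # UUID of the disk at backup time
    sr_uuid: str   # SR holding the disk
    host: str      # host the VM was running on


def needs_full_backup(previous: DiskState, current: DiskState) -> bool:
    """A new VDI UUID means the CBT metadata was lost, so a delta is impossible."""
    return previous.vdi_uuid != current.vdi_uuid


last_run = DiskState(vdi_uuid="vdi-1", sr_uuid="nfs-sr", host="host-a")

# Host migration on the same shared SR: the VDI is untouched.
after_host_move = DiskState(vdi_uuid="vdi-1", sr_uuid="nfs-sr", host="host-b")

# Storage migration: the VDI is recreated on the new SR with a new UUID.
after_sr_move = DiskState(vdi_uuid="vdi-2", sr_uuid="other-sr", host="host-a")

print(needs_full_backup(last_run, after_host_move))
print(needs_full_backup(last_run, after_sr_move))
```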

-

@olivierlambert It looks like, since the last XOA update, it no longer triggers a full, which is great news. Instead, after a migration I see "can't create a stream from a metadata VDI, fall back to a base" when the job next runs. After that it retries and runs as usual.

-

Interesting

Where are you seeing this message exactly?

-

@olivierlambert

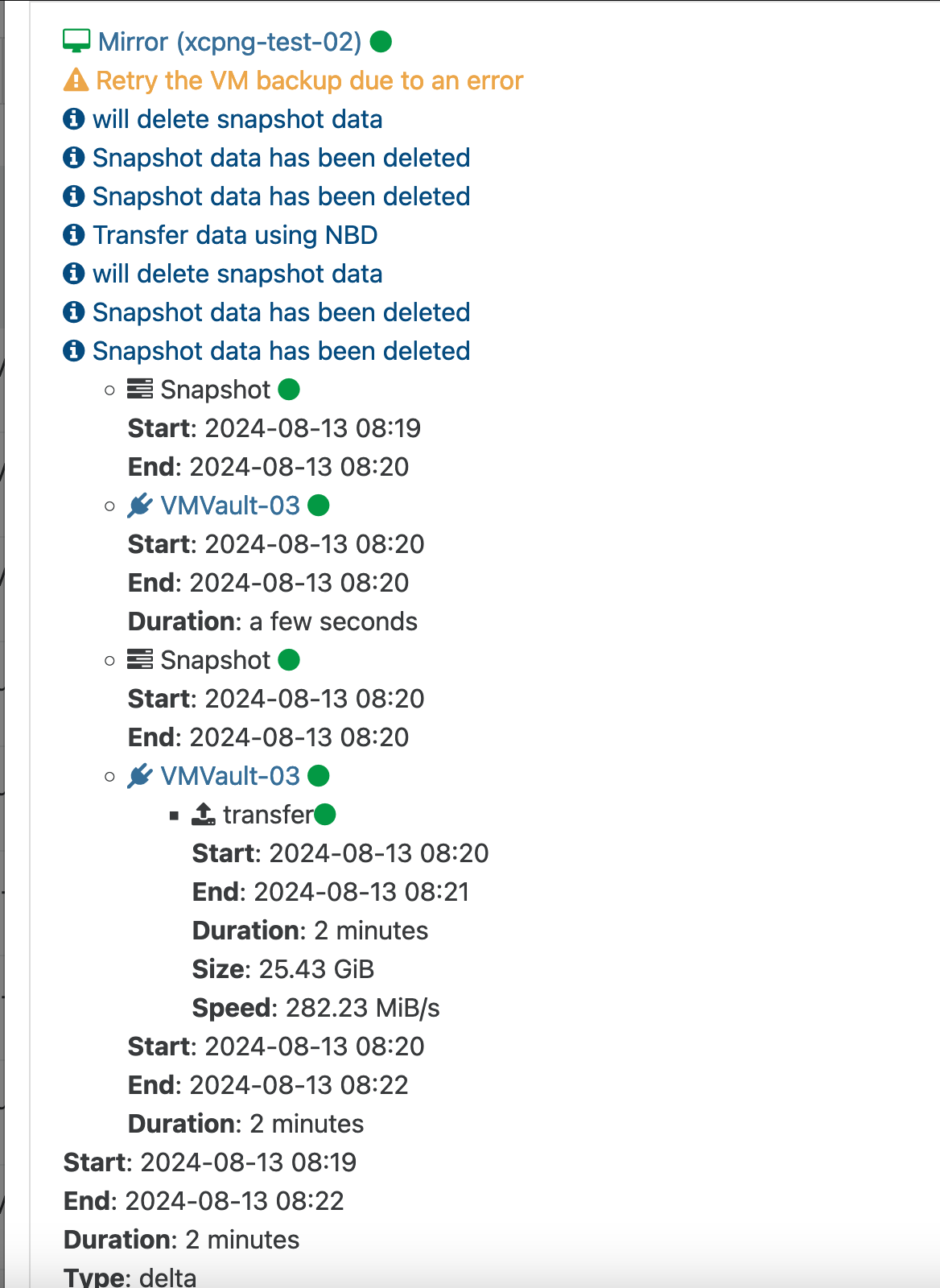

For me it appears in the backup summary on the failed task

And after the job retries. (I have retries set to 3)

I believe others a few posts up are also seeing this same error message. @rtjdamen @manilx

-

And only after migrating the VM from a host to another, without changing its SR?

-

@olivierlambert Correct. The VM in question is on an NFS 4 SR. The next backup run completes without the error for as long as the VM stays on that host; however, if I move it again, the process repeats itself.

We are running paid XOA, so if it helps I can enable a support tunnel for you guys to take a look at the logs. However, we have quite a few nightly backups now, so I'm not sure whether the logs will have rotated. Either way, it's in our test environment, so we can run the job anytime to generate fresh data.

-

That's weird. I'm using RPU in our prod, so the VMs are moving, but the VDI doesn't change. Anyway, that's another test to try internally.

-

@olivierlambert we do not see this error in relation to migration; it just happens at random. I have seen issues with CBT and multi-disk VMs. Could it be that these are multi-disk VMs?

-

@rtjdamen Yes, the VM I have been testing with above is a multi-disk VM. I will do some testing with a single-disk VM and see if that makes a difference.

-

@flakpyro the issues we saw only happened on NFS and multi-disk VMs. The error was that CBT was invalid, if I am correct. This is not an XOA error; we also saw it with my third-party backup tool, which also uses CBT.

-

@rtjdamen After testing with both single-disk and multi-disk VMs, I am seeing it on both. However, it is much more common on multi-disk VMs.

-

@flakpyro are you able to test it on iSCSI?

-

@rtjdamen I do not have any iSCSI LUNs mapped to our hosts to test with. We went all in on NFS, moving away from iSCSI, when we migrated from ESXi to XCP-ng.